Introduction

In this article we will look at how to Read / Write Amazon DynamoDB in SSIS. ZappySys developed many AWS related components but in this article we will look at 3 Tasks/Components for DynamoDB Integration Scenarios (Read, Write, Update, Bulk Insert, Create / Drop Table etc.). We will discuss how to use SSIS DynamoDB Source Connector , SSIS DynamoDB Destination Connector and SSIS DynamoDB ExecuteSQL Task . DynamoDB Source connector supports familiar SQL Like query language so any DBA or SQL user can learn it in no time. ZappySys developed highly sophisticated engine to turn your SQL Query into native DynamoDB query. For more information check this help file

Extract data from Amazon DynamoDB in SSIS

Prerequisites

Before we perform the steps listed in this article, you will need to make sure the following prerequisites are met:

- SSIS designer installed. Sometimes it is referred to as BIDS or SSDT (download it from the Microsoft site).

- Basic knowledge of SSIS package development using Microsoft SQL Server Integration Services.

- Make sure ZappySys SSIS PowerPack is installed (download it, if you haven’t already).

- (Optional step). Read this article, if you are planning to deploy packages to a server and schedule their execution later.

More from ZappySys Blog

Video Tutorial – Read/Write/Update data in Amazon DynamoDB in SSIS

Below video tutorial will show you from start to end how to use ZappySys Components to perform various AWS DynamoDB Integration Tasks such as Reading Bulk data, Writing bulk data, Executing ad-hock Command for DynamoDB (such as Create/Drop Table). Get Row Count etc.

Using Amazon DynamoDB Emulator for Local Testing

If you don’t have AWS Cloud account and you still want to try DynamoDB related options in SSIS then you can use FREE Local Emulator for DynamoDB.

- Download it from here.

- Extract to local folder (e.g. c:\tools\dynamodb_local

- Create a batch file there call it RunDynamo.bat and you can put command like below.

Or (Java Home Path not set use below)1"java.exe" -Djava.library.path=./DynamoDBLocal_lib -jar DynamoDBLocal.jar -sharedDb

1"C:\Program Files\Java\jdk--YOUR-VERSION\bin\java.exe" -Djava.library.path=./DynamoDBLocal_lib -jar DynamoDBLocal.jar -sharedDb - Double click Batch file to launch DynamoDB Local instance

Connect to DynamoDB in SSIS

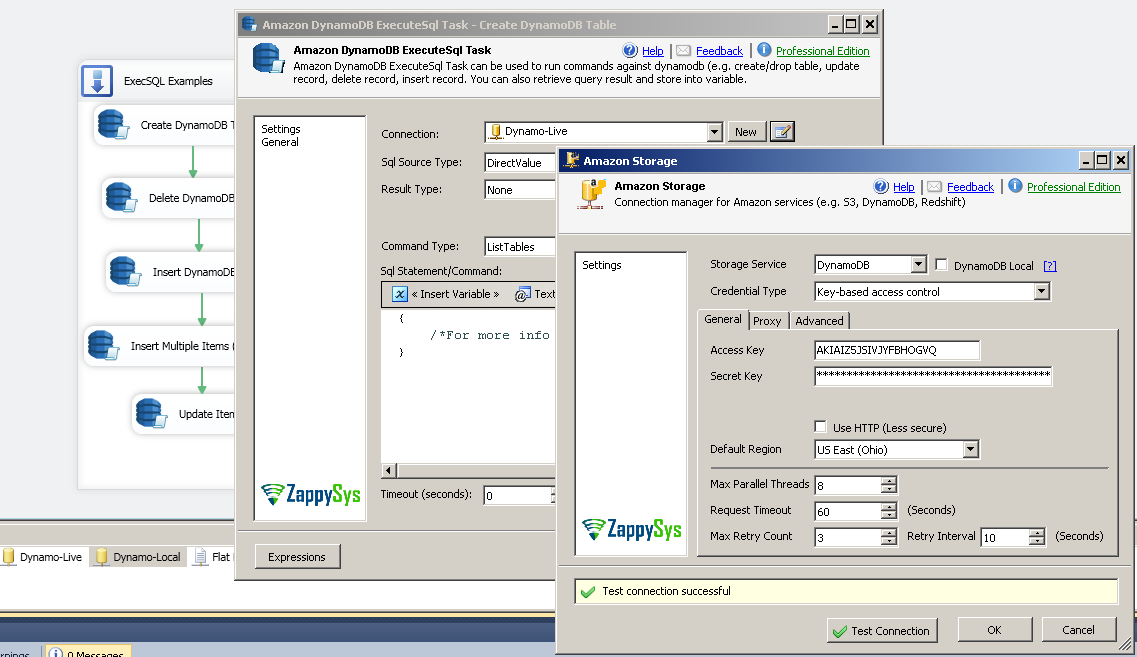

Now lets look at step by step how to Connect to DynamoDB in SSIS. For Simple demo we will use ZS Amazon DynamoDB ExecuteSQL Task but in next section we will show you How to Read / Write DynamoDB Data using other SSIS Components.

Create SSIS DynamoDB Connection / List Tables Example

- Installed SSIS PowerPack if you have not done it.

- Open SSIS Package

- Drag and drop ZS Amazon DynamoDB ExecuteSQL Task from SSIS Toolbox

- Double click to edit the task

- Click New button next to Connection Dropdown

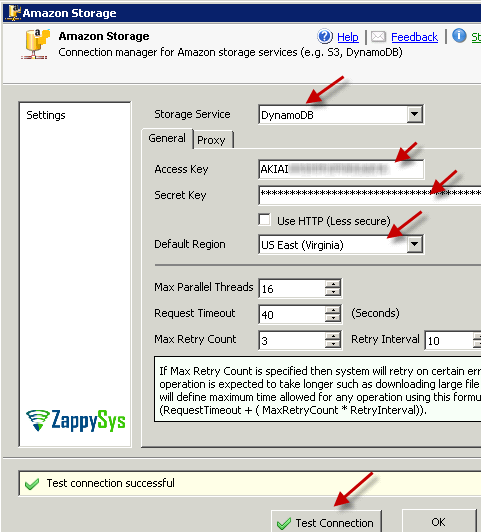

- Select DynamoDB from Service Type. If you are connecting to Local DynamoDB then check DynamoDB Local option else enter your AWS Account Access Key and Secret Key to connect to Cloud Instance.

- Click Test Connection to confirm and click OK to save Connection.

- Back to ExecuteSQL Task UI, Click on Examples dropdown and select command like List Tables.

- Click Test / Preview button.

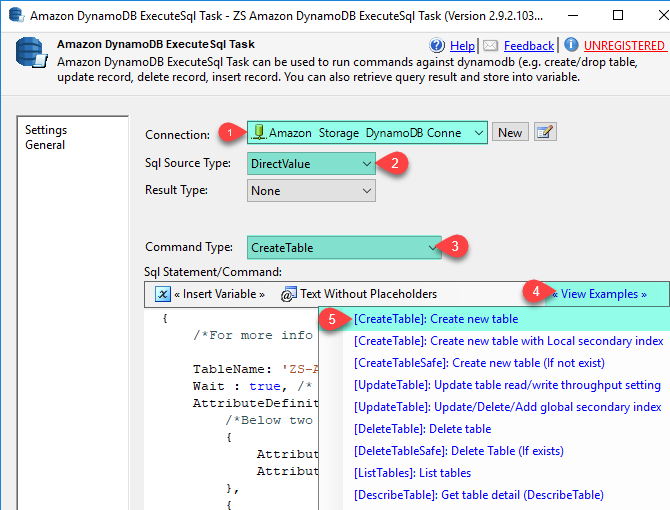

Create DynamoDB Table Example

Now lets look at how to create DynamoDB table in SSIS Amazon DynamoDB ExecuteSQL Task.

Change your Command Type Option to CreateTableSafe (Safe Suffix means, do not throw error if table already exists).

Enter the following Command and Click Test / Preview Result button to create new table called Customers. Primary Key for table is CustomerID.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 |

{ /*For more info visit : http://docs.aws.amazon.com/amazondynamodb/latest/APIReference/API_CreateTable.html */ TableName: 'Customers', Wait : true, /* Wait until table status is ACTIVE */ AttributeDefinitions: [ /*Below two columns used in Composite Primary Key */ { AttributeName: 'CustomerID', AttributeType: 'S' } ], KeySchema: [ { AttributeName: 'CustomerID', KeyType: 'HASH' } ], ProvisionedThroughput: { ReadCapacityUnits: 5, WriteCapacityUnits: 5 } } |

Write data to Amazon DynamoDB in SSIS

Loading data into Amazon DynamoDB can be easily done using SSIS DynamoDB Destination Connector. It supports loading data two different ways. You can insert Raw JSON documents or Masp individual columns too. Let’s look at both ways.

Create Sample DynamoDB Table (via AWS Console)

- In order to connect to Amazon DynamoDB from SSIS you will need Access Key and Secret Key. Ask your SysAdmin or responsible person to provide that to you. Your keys will look something like this: (this is just example key which may differ in your case)

Click here to read more how to get your AccessKey and Secret Key12AccessKey: AKIAIOSFODNN7EXAMPLESecretKey: wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY - For this sample exercise we will need new DynamoDB table (with CustomerID column as HASH key, see below screenshots). To create new dynamoDB table perform following steps

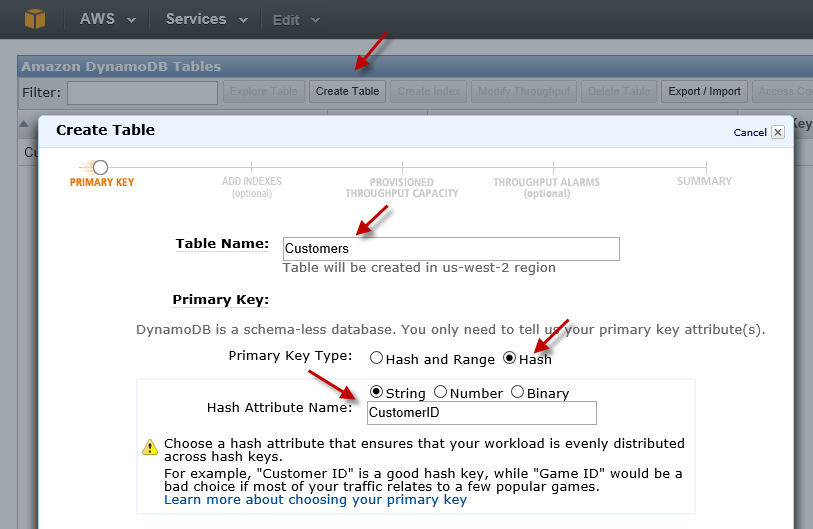

- Click on create table under DynamoDB console

- On Primary Key screen give table name (e.g. Customer), Pick Key Type=HASH, enter keyname=CustomerID and click next

- On index screen no need to enter anything for this exercise so just click next

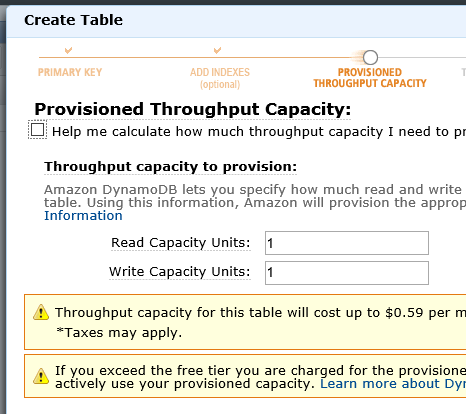

- On Read/Write throughput just enter 1 in both fields and click next

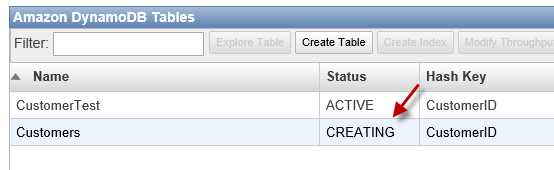

- Review and finalize table creation by clicking Create. It may take few minutes to provision DynamoDB table (Check for AVAILABLE status)

- Once you have created DynamoDB table, got Account Key (its like UserID) and Secret Key (its like password) you may proceed to next section.

Load data into DynamoDB (Column Mappings Mode)

First lets look at how to load sample Customers data into DynamoDB Table using Simple column mapping mode.

- From the SSIS toolbox drag and drop Data Flow Task on the control flow designer surface.

- Double click on the Data Flow task to see Data Flow designer surface.

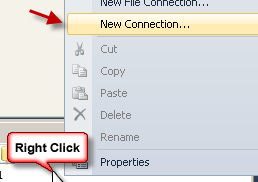

- Right Click in Connection Manager Panel and select [New Connection…] menu item.

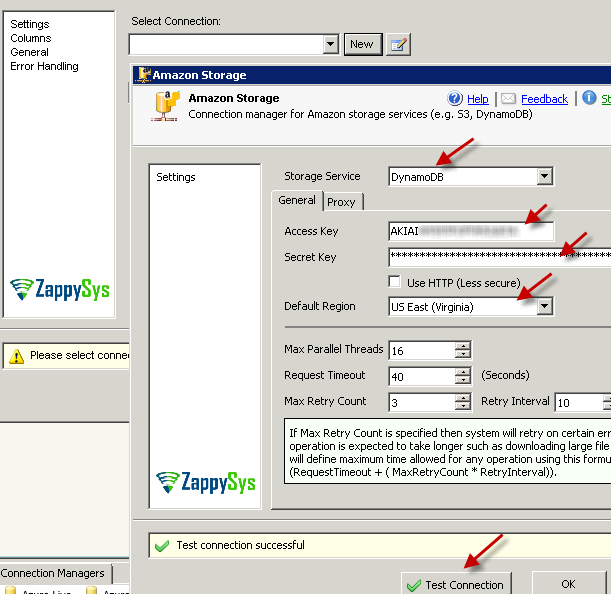

Create new SSIS connection

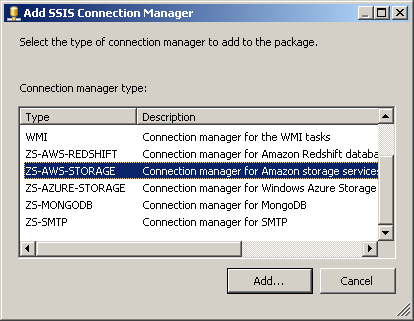

- Pick ZS-AWS-STORAGE connection type. On New Connection Dialogbox Select ServiceType from Top Dropdown, Enter AccessKey and SecrentKey and Click Test connection to verify credentials.

- Click OK to save connection information.

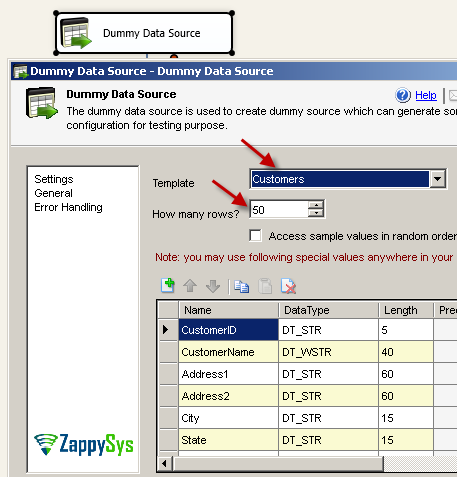

- From the SSIS toolbox drag and drop ZS Dummy Data Source on the dataflow designer surface.

- Double click Dummy Data Source to configure it.

- From Template pick Customer and enter row count=50 (For sample dataset has less than 100 unique values so lets pick small number).

- From the SSIS toolbox drag and drop Amazon DynamoDB Destination on the dataflow designer surface.

- Now single click on the Dummy Data Source, once you see blue arrow from source … connect it to DynamoDB Destination.

- Now double click Amazon DynamoDB Destination to configure it.

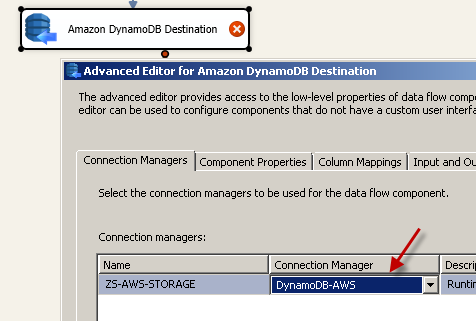

- On [Connection Manager] tab select DynamoDB connection manager.

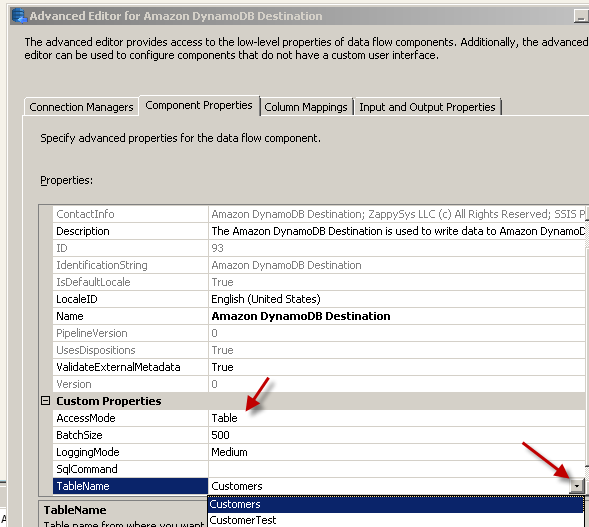

- Click on [Component Properties] tab and select AccessMode=Table and Pick DynamoDB table from Tables dropdown list.

Configure SSIS Amazon DynamoDB Destination Adapter – Component Tab

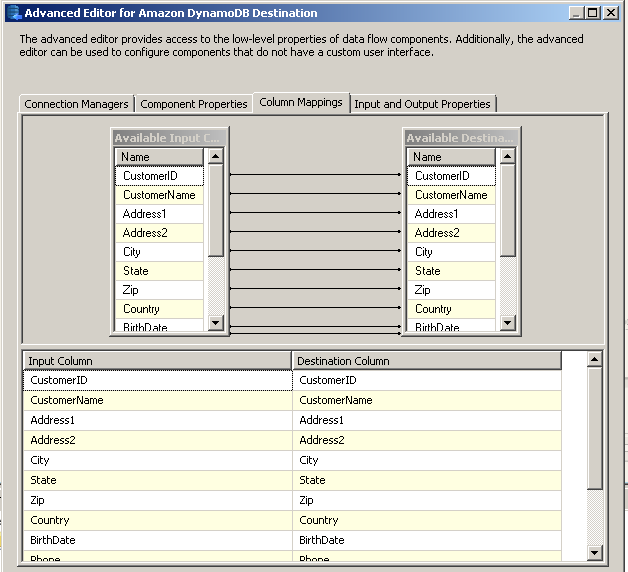

- Click on [Mappings] tab and verify mapping. If dynamoDB table is new table and its empty then all input columns will be automatically mapped. If DynamoDB table is existing table then you can manually pick mapping columns by dragging it from source list to target list.

Configure SSIS Amazon DynamoDB Destination Adapter – Mapping Tab

- Click OK to save settings.

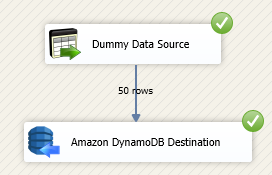

- Execute the package and verify target data by going to your AWS Console under DynamoDB section.

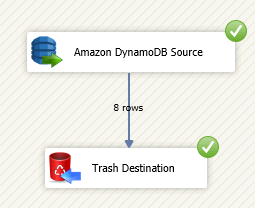

Execute SSIS Amazon DynamoDB Destination Adapter – Load Data

- That’s it. Now you can go back to your AWS COnsole and verify inserted data in DynamoDB Table.

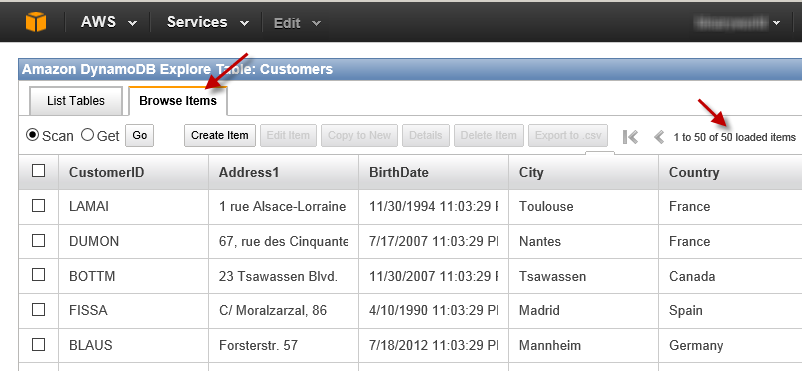

SSIS Load Test – View DynamoDB Data in AWS Console – Record Count Check

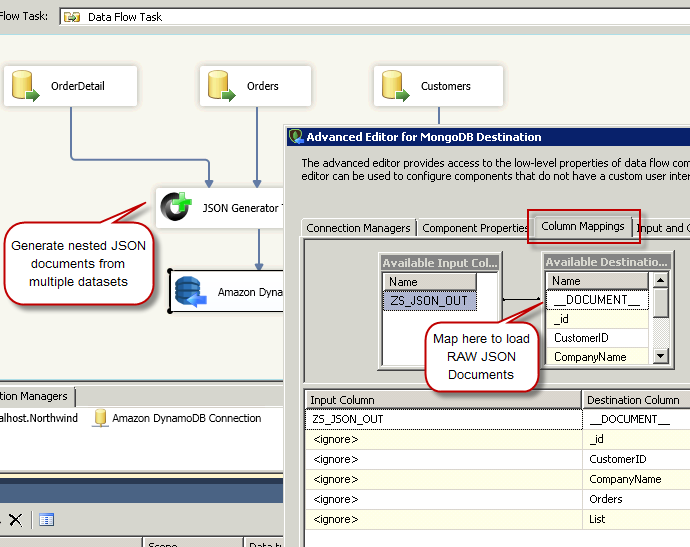

Insert JSON documents into DynamoDB (RAW Mode)

Previous simple approach can be used if you have simple JSON structure in DynamoDB (i.e. no nested array). Now let’s look at how to create nested JSON documents and load into DynamoDB using RAW loading approach. With this method you can create nested JSON from multiple SQL Server tables or other sources (e.g. File, Oracle, MySQL, DB2).

You can use JSON Generator Transform or Template Transform to generate JSON documents in Data flow and then load into DynamoDB. Check these article to learn more on JSON Generator use cases.

Read from Amazon DynamoDB in SSIS

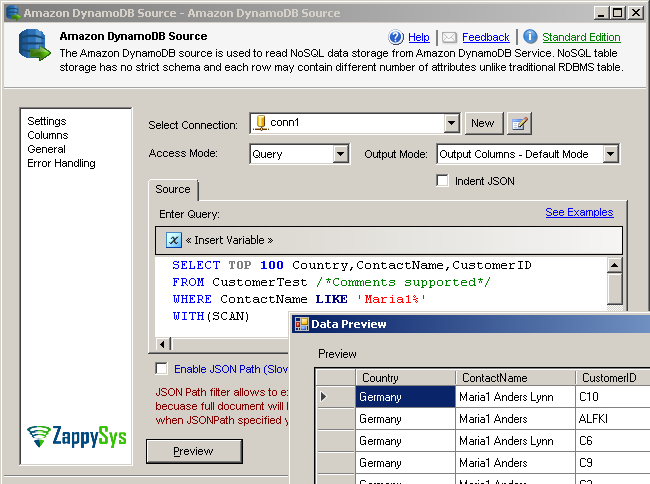

Now lets move onto the next part – How to read data from DynamoDB in SSIS. DynamoDB is NoSQL database and it doesn’t have support for SQL Query language like any other RDBMS. But no worry.. If you using SSIS DynamoDB Source Connector then it supports custom SQL query language so it turns SQL into DynamoDB native API commands. This makes it super easy for any SQL Developer to use connector in drag and drop manner saving huge amount of time.

Let’s see how to read data from DyanmoDB Table

- Drag data flow

Specifying Options in WITH Clause of DynamoDB Query:

- Specify SCAN mode using SCAN option

1select * from mytable where SomeColumn > 55 WITH(SCAN) - Specify SCAN mode with LIMIT of 1000 rows

1select * from mytable where SomeColumn > 55 WITH(SCAN, LIMIT=1000) - Specify QUERY mode using QUERY option

1select * from mytable where MyHashKeyColumn = 'XYZ' And MyRangeKey > '1223' WITH(QUERY) - Specify QUERY mode using QUERY option, and use of INDEX

1select * from mytable where MyHashKeyColumn = 'XYZ' And MyRangeKey > '1223' and Phone LIKE '111-%' WITH(QUERY, INDEX=idxPhone)

Query Amazon DynamoDB with Date/Time column

There is no Datetime datatype in DynamoDB but if you are storing your date values in ISO 8601 DateTime Format as string then your query will understand automatically if you specify >, >=, <, <= or BETWEEN Filter Condition

|

1 |

select * from Orders where OrderDate > '2015-12-31T23:59:59.000Z' WITH(SCAN) |

Using SSIS variable to query DynamoDB Date/Time column

If you want to make your query dynamic then you can use SSIS variable placeholder (use quote around it). If your variable DataType is datetime then you can format it using following way so it has ISO date format. If your variable DataType is String then make sure its correctly formatted.

|

1 |

select * from Orders where OrderDate > '{{User::vMyDate,yyyy-MM-ddTHH:mm:ssZ}}' WITH(SCAN) |

Click here to see various ISO date formats

Amazon DynamoDB Query Examples (DynamoDB Filter) :

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 |

-- Selecting all columns select * from CustomerTest -- Selecting multiple columns select CustomerID,CompanyName from CustomerTest -- Selecting multiple columns, where some attribute name has space in it select CustomerID,[Contact Title] from CustomerTest -- Find records where City attribute is missing (Missing is different than actual NULL value {City: null, Id:1} -vs- {Id:1} ) select * from CustomerTest Where City IS NULL -- Find records where City attribute is NULL (i.e. attribute is found but value is NULL e.g. {City: null, Id:1} ) select * from CustomerTest Where City = NULL -- Using Where clause with simple condition, select all columns select * from CustomerTest Where CustomerID='ALC3R' -- Limiting records returned from query using TOP clause (Similar as LIMIT found in some RDBMS query engine) select top 7 * from CustomerTest -- Using OR select * from CustomerTest Where CustomerID = 'ALFKI' OR CustomerID = 'BOLID' -- Using AND select * from CustomerTest Where CustomerID = 'ALFKI' AND Age > 3 -- Using comparison operators select * from CustomerTest Where CustomerID <> 'ALFKI' select * from CustomerTest Where CustomerID != 'ALFKI' select * from CustomerTest Where Age > 5 select * from CustomerTest Where Age >= 5 select * from CustomerTest Where Age < 5 select * from CustomerTest Where Age = 5 AND CustomerID = 'C5' |

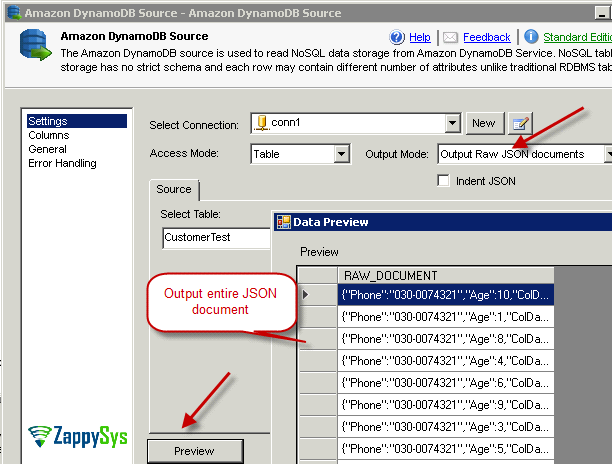

Amazon DynamoDB Source Connector Screenshots

Amazon DynamoDB Source – Configure Connection

Amazon DynamoDB Source – Query DynamoDB using SQL language

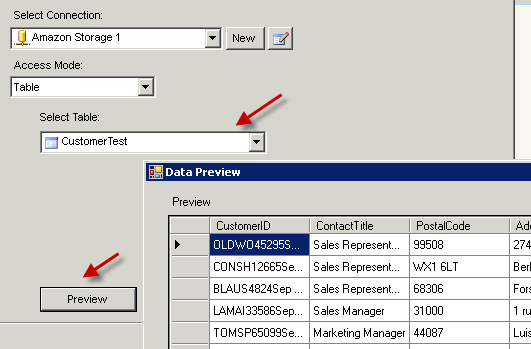

Query Amazon DynamoDB – Read from Table Mode

Query Amazon DynamoDB – Read from Table Mode

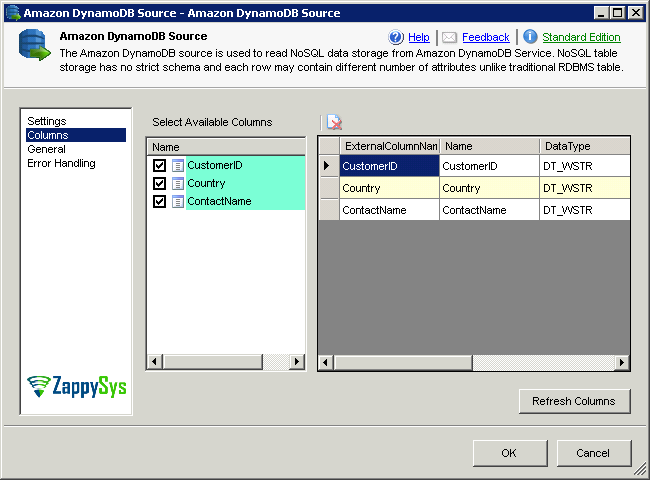

Amazon DynamoDB Source – Select Columns

Amazon DynamoDB Source – Select Columns

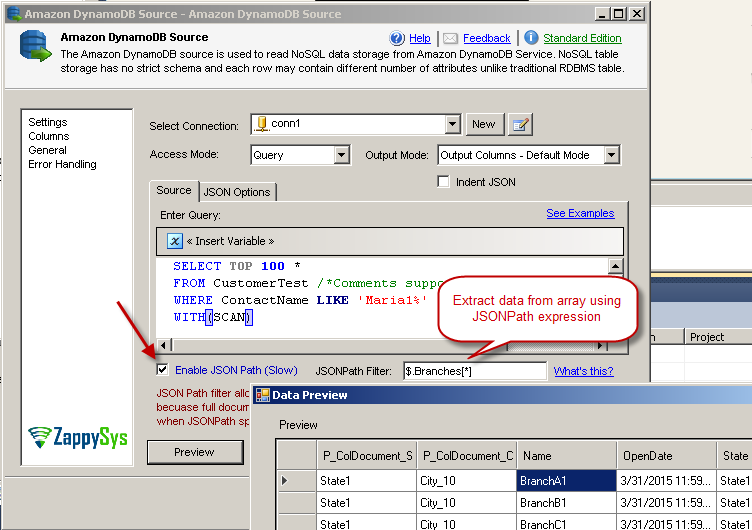

Query Amazon DynamoDB – Read from nested array using JSON Path

Query Amazon DynamoDB – Read from nested array using JSON Path

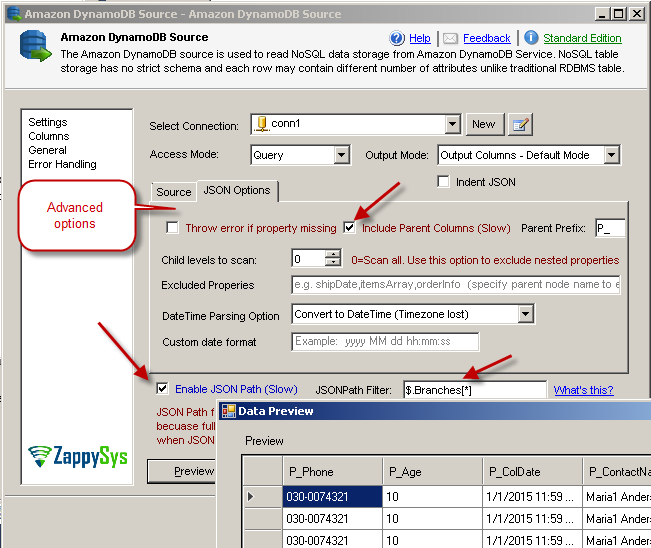

Query Amazon DynamoDB – Advanced JSON Filter Options

Query Amazon DynamoDB – Extract RAW JSON documents

Amazon DynamoDB Source – Extract data from DynamoDB

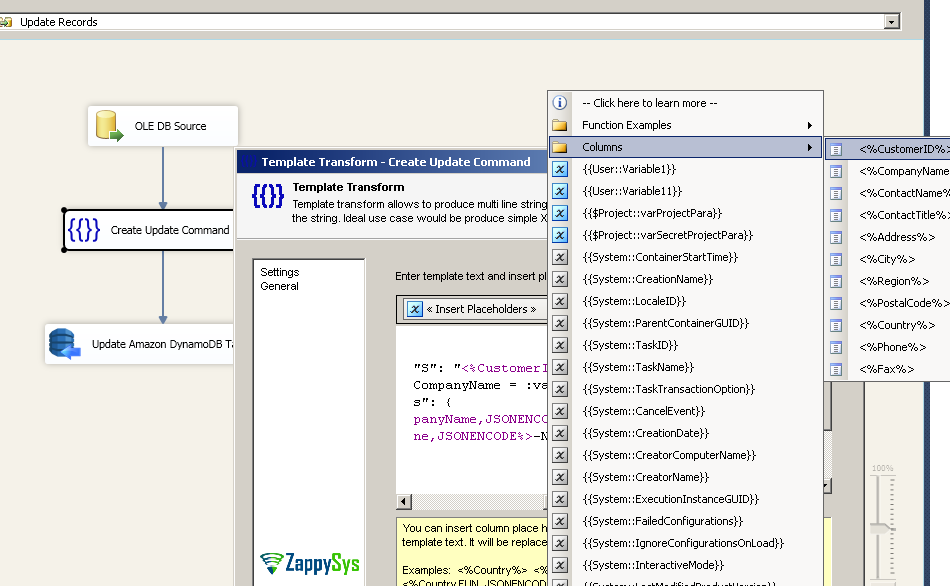

Update Documents in DynamoDB

Now let’s look at how to Update Documents in DynamoDB. Amazon DynamoDB does not allow Bulk Update so you have to do row by row processing. You have to submit UpdateItem Command as per this document. We will update Document for matching condition and update specific columns in this example. By default Document is matched By Key unless you supply additional Condition in the command (e.g. “ConditionExpression”: “LastPostedBy = :val2” ) .

- Drag ZS Dummy Data Source and configure like previous section with Customers Template. You can also use OLEDB Source for read DB data.

- Now drag ZS Template Transform. You can enter command like below to match record by Key and Update CompanyName and Phone fields (for demo we added -MY-NEW suffix in the data )

If you have multi Key then use like this (e.g. CustomerID + ProductID )123456789{"TableName": "Customers","Key": { "CustomerID": { "S": "<%CustomerID%>" } },"UpdateExpression": "set CompanyName = :val1, Phone = :val2","ExpressionAttributeValues": {":val1": {"S": "<%CompanyName,JSONENCODE%>-MY-NEW"},":val2": {"S": "<%Phone,JSONENCODE%>-MY-NEW"}}}

Also you can add additional condition on the top of Key JOIN criteria like below (e.g. Match Country too)1"Key": { "CustomerID": { "S": "<%CustomerID%>" } , "ProductID": { "S": "<%ProductID%>" } },

1234567891011{"TableName": "Customers","Key": { "CustomerID": { "S": "<%CustomerID%>" } },"UpdateExpression": "set CompanyName = :val1, Phone = :val2","ConditionExpression": "Country = :val3","ExpressionAttributeValues": {":val1": {"S": "<%CompanyName,JSONENCODE%>-MY-NEW"},":val2": {"S": "<%Phone,JSONENCODE%>-MY-NEW"},":val3": {"S": "<%Country,JSONENCODE%>"}}} - Connect Template Transform to ZS DynamoDB Destination

- Now drag ZS Amazon DynamoDB Destination

- On first tab Select Connection

- On second tab Change Action to Update, Select Table as Customers

- On Mappings tab Map TemplateOutput column to __DOCUMENT__ column. Remove any other mappings. If you dont see columns in Target just click Refresh.

- Thats it now run Data flow to Update records. AFter execution you can review updated records in DynamoDB.

Call/Execute Ad-Hoc DynamoDB commands

Sometimes you have need to call ad-hoc Amazon DynamoDB commands which are different than read/write. For that you can use Amazon DynamoDB ExecuteSQL Task.

Conclusion

Amazon DynamoDB is one of the most popular Managed NoSQL database in cloud at fraction of cost of traditional RDBMS. Extracting/Writing/Updating data into DynamoDB can be challenge without right toolset. ZappySys AWS Connectors/Tasks makes it super easy for various AWS Integration scenarios (S3, Redshift, DynamoDB, JSON, Redshift etc). Try SSIS PowerPack for FREE to uncover many more things you can do.