Introduction

REST API is becoming more and more common and with that you will see explosion in use of JSON data format. One of the questions we get a lot is “How to extract or read array from JSON data file” or “How to read multiple arrays from JSON data“. Example : Store JSON document has Customers[…] and Employees[…] arrays and lets say you want to extract both and save to target SQL server database. In this post you will learn how to make this possible and how to make it fast with some performance tips. We will use SSIS JSON Source (File, REST API Connector)

REST API is becoming more and more common and with that you will see explosion in use of JSON data format. One of the questions we get a lot is “How to extract or read array from JSON data file” or “How to read multiple arrays from JSON data“. Example : Store JSON document has Customers[…] and Employees[…] arrays and lets say you want to extract both and save to target SQL server database. In this post you will learn how to make this possible and how to make it fast with some performance tips. We will use SSIS JSON Source (File, REST API Connector)

If you want to learn how to parse Multi-Dimensional JSON arrays then check this article.

Video Tutorial – Read from multiple arrays from JSON document

Read array from JSON document

To extract subdocument or array content from JSON document you need to use JSON Path expression. Lets check following example JSON document.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 |

{ "store": { "employees": [ { "name": "bob", "hiredate": "2015-01-01" }, { "name": "sam", "hiredate": "2015-01-02" }, { "name": "ken", "hiredate": "2015-01-03" } ], "books": [ { "category": "reference", "author": "bob", "title": "hellooo1", "price": 1.95, "sections": [ "s1", "s2", "s3" ] }, { "category": "fiction", "author": "sam", "title": "hellooo2", "price": 1.96, "sections": [ "s4", "s1", "s3" ] }, { "category": "science", "author": "steve", "title": "hellooo3", "tag": "1bcd", "price": 11, "sections": [ "s9", "s2", "s3" ] } ], "location": { "street": "123 Main St.", "city": "Newyork", "state": "GA" } } } |

Below is example filter expression to extract common elements

|

1 2 3 4 5 6 7 8 9 10 |

$.store.books[*] //get all books for store $.store.employees[*] //get all employees for store $.store.books[*].sections[*] //get all sections from all books $.store.books[*].author //get all authors of all books for store $.store.books[*] //get all books for store $.store.books[2] //get 3rd book record $.store.books[:2] //get first 2 books from the top $.store.books[-2:] //get last 2 books $.store.books[?(@.price < 10)] //get books where price is less than 10 $.store.books[?(@.tag)] //filter all books with tag |

Read single array from JSON data (JSON Path expression)

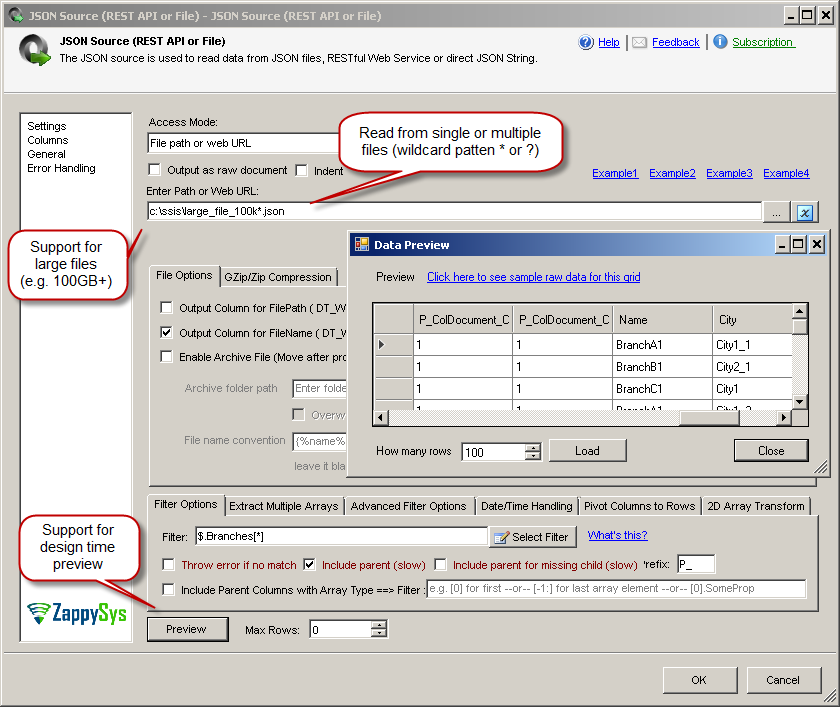

SSIS PowerPack comes with powerful REST API Connectors for JSON and XML . In below example screenshot you will see how to extract single array section from your JSON File. But if you have multiple array sections (e.g. books and employees from above example) then you have to use Multiple JSON Source Connectors for same JSON File.

Read multiple arrays from JSON data

If you have scenario to extract multiple arrays from same JSON (e.g. extract Employees and Books from store ) then you have two options. You can pick any approach which suites your need (Method-1 is recommended).

- Method-1: Use single JSON Source with [Output As Document] option and feed that to JSON Parser Transform

- Method-2: Use multiple JSON Sources with different filter expressions

- Method-3: Enable Array Flattening Option (Useful if you have smaller arrays – i.e. less than 10 items) – Version v2.7.6+

Now lets discuss each approach in detail.

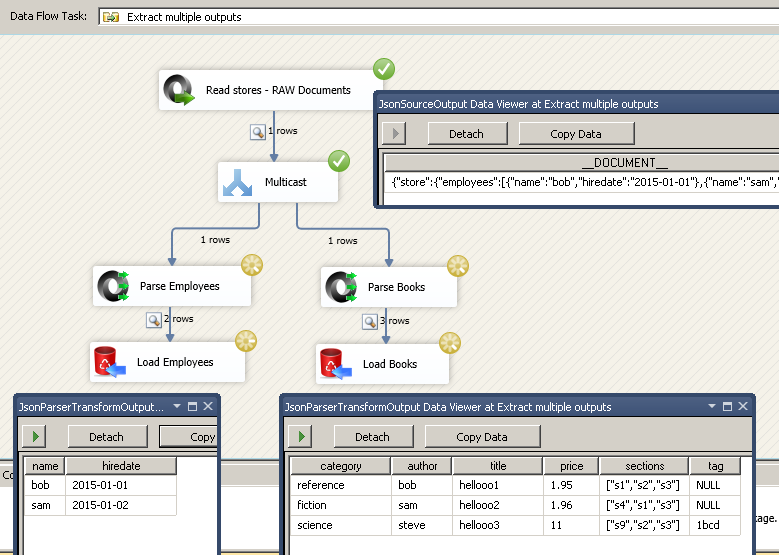

Method-1: Use single JSON Source along with JSON Parser Transform

SSIS PowerPack v2.2 released new JSON Parser Transform and XML Parser Transform which can be used to parse any input JSON/XML data coming from upstream in pipeline. It parse input string column into multiple columns how JSON/XML Source does but the only difference is JSON Parser takes String as input from existing upstream column.

Here are the steps to parse multiple JSON arrays using JSON Parser Transform. For example purpose we will use sample store json listed above.

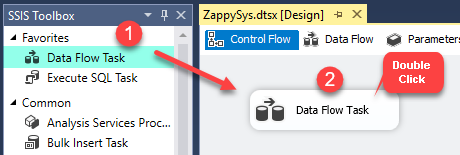

- Drag new data flow in SSIS Control Flow designer. Double click on Data flow

- Drag ZS JSON Source from SSIS Toolbox. Configure Source, Filter and other property.

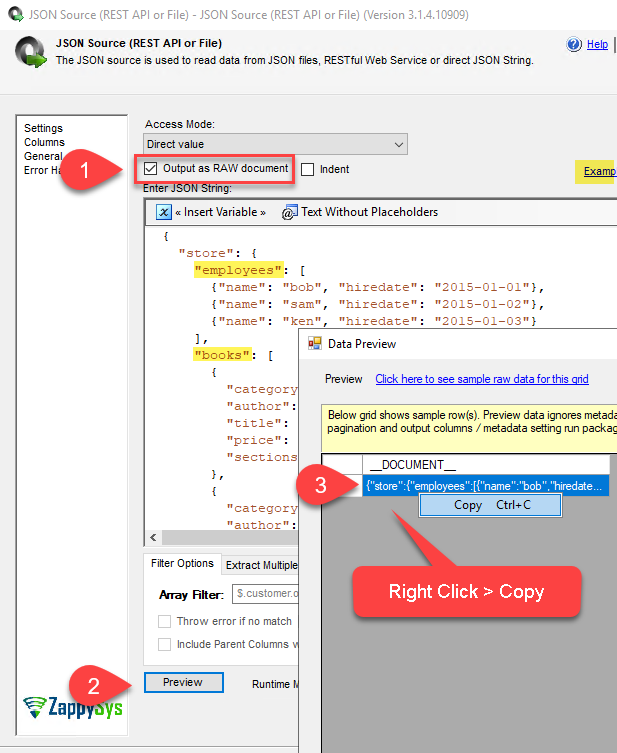

- Check [Output As Document] option (Found under Access Mode dropdown). Click preview. From grid copy one sample document from __DOCUMENT__ column. (Right click on Cell and Copy).This is raw JSON which we will use later to feed as sample in JSON Parser Transform (If you cant copy JSON then obtain some other way)

- Click OK to save JSON Source.

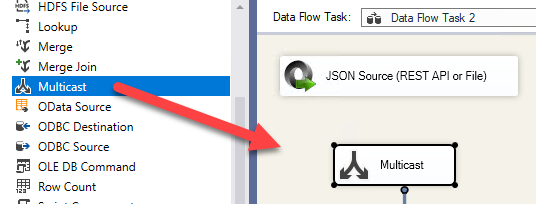

- Drag Multicast Transform (Native SSIS Transform) from Toolbox

- Connect JSON Source to Multicast Transform

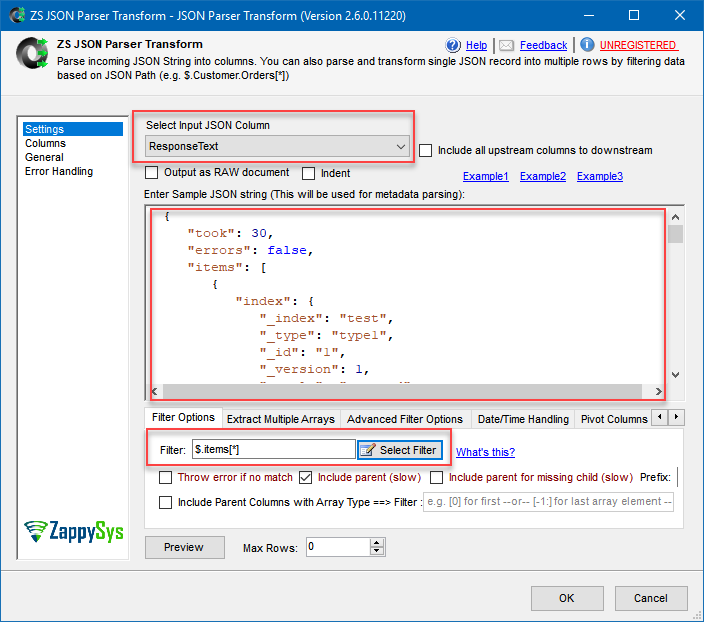

- Drag first JSON Parser Transform from Toolbox. Rename it to [Parse Employees]. Connect blue arrow coming from Multicast to JSON Parser Transform

- Drag second JSON Parser Transform from Toolbox. Rename it to [Parse Books]. Connect blue arrow coming from Multicast to JSON Parser Transform

- Double click [Parse Employees]. Select __DOCUMENT__ as upstream column. Enter sample JSON you obtained in step#3. Click on select Filter and highlight Employees node (i.e. $.store.employees[*] ). Click preview to see data.

- Very Important:Click Columns tab and verify metadata (e.g. datatype, length). If you think you need extra columns or bigger column length then change in the grid and check [Lock] option found in the last grid column. If column length is smaller than output data then you will get error message when you run package. You can review error message carefully to determine which column needs to be adjusted (Repeat this until you clear all errors).

- Click OK to save [Parse Employees].

- Double click [Parse Books]. Select __DOCUMENT__ as upstream column. Enter sample JSON you obtained in step#3. Click on select Filter and highlight Books node (i.e. $.store.books[*] ). Click ok. Click preview to see data.

- Perform same steps as #11 for [Parse Books] to set correct metadata.

- Click OK to save [Parse Books].

- Drag ZS Trash destination from Toolbox. Connect Trash destination to [Parse Employees]

- Drag ZS Trash destination from Toolbox. Connect Trash destination to [Parse Books]

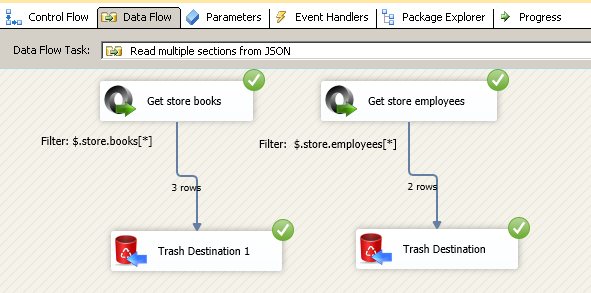

- Run the package and you will see both 2 outputs from different JSON Parser with different row count.

Here is how it will look like

Extract multiple array output from JSON file / REST API response in (SSIS JSON Source, JSON Parser Transform)

Method-2: Use multiple JSON Sources with different filter expressions

You can consider using multiple JSON Source (each source with unique filter expression). Since one JSON source can only extract one array or Document.. You have to use below technique to read multiple arrays from same JSON document.

Method-3: Use Array Flattening Option (Preferred for smaller arrays)

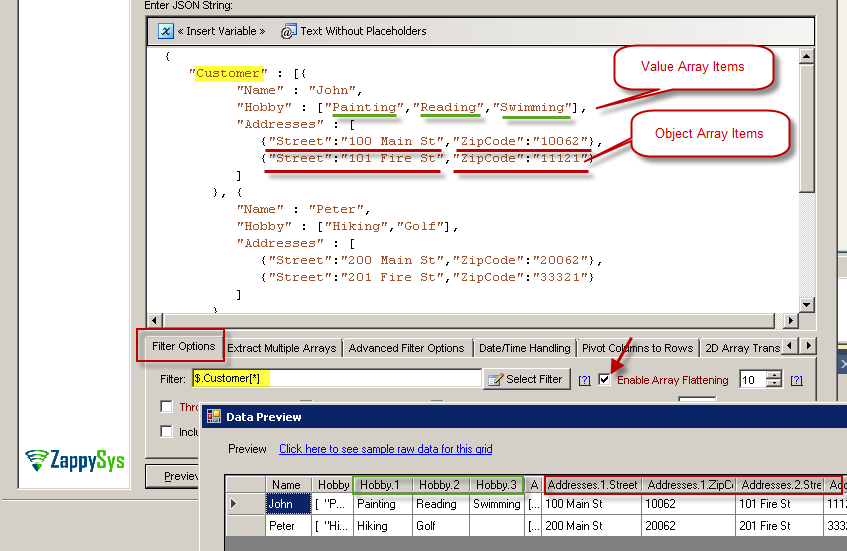

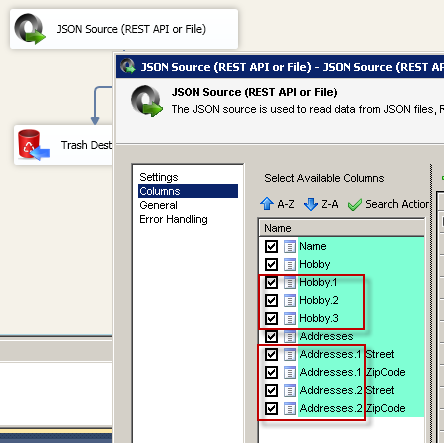

If you have array inside extracted record and you like to flatten it so each items of array becomes column then use newly introduced feature [Enable Array Flattening]

Consider JSON like below. What if you don’t like to store every detail from below JSON in just one table (i.e. rather than splitting in 3 tables Customers, CustomerHobbies , CustomerAddresses ?) . To achieve full denormalization ZappySys offers powerful yet simple one click option.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 |

{ "Customer" : [{ "Name" : "John", "Hobby" : ["Painting","Reading","Swimming"], "Addresses" : [ {"Street":"100 Main St","ZipCode":"10062"}, {"Street":"101 Fire St","ZipCode":"11121"} ] }, { "Name" : "Peter", "Hobby" : ["Hiking","Golf"], "Addresses" : [ {"Street":"200 Main St","ZipCode":"20062"}, {"Street":"201 Fire St","ZipCode":"33321"} ] } ] } |

To enable flattening check below option on Filter

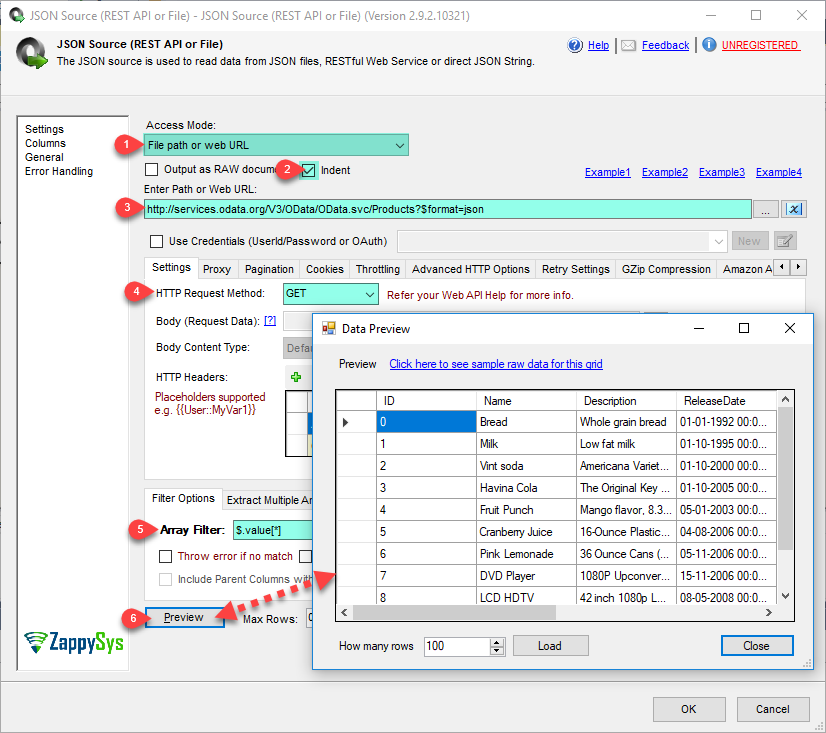

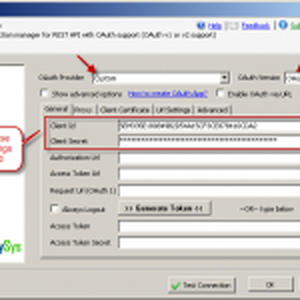

Using JSON REST API Connector to parse JSON data

If you want to access JSON data from REST API then you can use same JSON Source Connector. All you have to do is rather than local file path c:\data\xyz.json change it to URL as below and you will be able to access and filter JSON data using same technique as above.

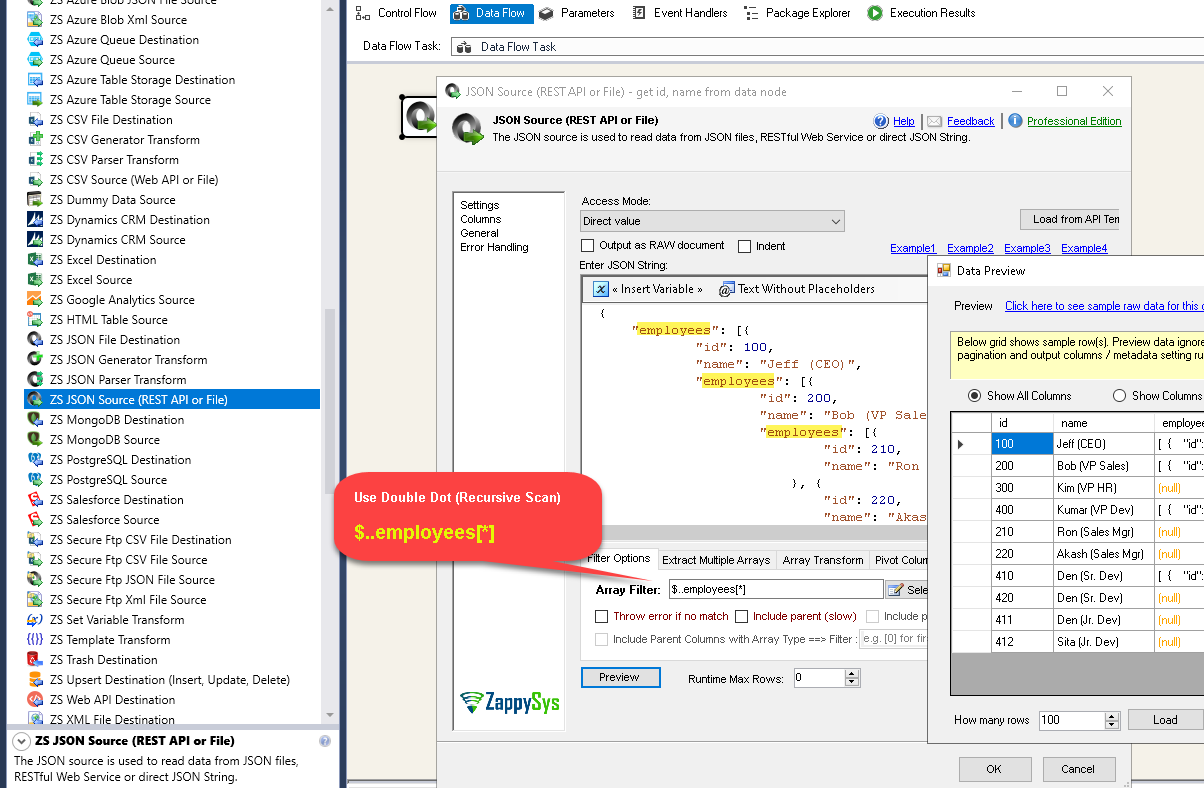

JSON Path for recursive data extract (Double dot expression)

There will be a time when you dont know how many levels deep you have to go for data extract. See below example. We will to get all employees id and name from hierarchy. For that you can use double dot like below. As you can see there are many nested levels and we want to extract id and name from each level so data can be

Expected Output

|

1 2 3 4 5 6 7 8 9 10 11 12 |

id name ------------------------- 100 Jeff (CEO) 200 Bob (VP Sales) 300 Kim (VP HR) 400 Kumar (VP Dev) 210 Ron (Sales Mgr) 220 Akash (Sales Mgr) 410 Den (Sr. Dev) 420 Den (Sr. Dev) 411 Den (Jr. Dev) 412 Sita (Jr. Dev) |

Sample Data

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 |

{ "employees": [ { "id": 100, "name": "Jeff (CEO)", "employees": [ { "id": 200, "name": "Bob (VP Sales)", "employees": [ { "id": 210, "name": "Ron (Sales Mgr)" }, { "id": 220, "name": "Akash (Sales Mgr)" } ] }, { "id": 300, "name": "Kim (VP HR)" }, { "id": 400, "name": "Kumar (VP Dev)", "employees": [ { "id": 410, "name": "Den (Sr. Dev)", "employees": [ { "id": 411, "name": "Den (Jr. Dev)" }, { "id": 412, "name": "Sita (Jr. Dev)" } ] }, { "id": 420, "name": "Den (Sr. Dev)" } ] } ] } ] } |

Configure JSON Source for Recursive Children Scan using Double Dot JSONPath

Open JSON Source and configure like below.

Set Filter as $..employees[*] (Notice how we used double dots in expression. This does recursive scan for N number of levels)

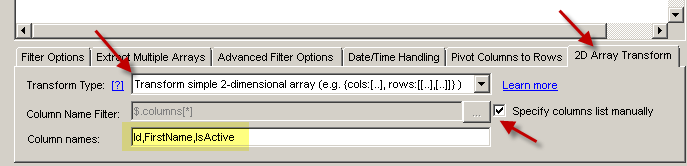

Parse Multi-Dimensional Array

If you want to learn more how to parse Multi-Dimensional JSON arrays then check this article full length article.

Sample JSON with Multi Dimensional array

|

1 2 3 4 |

{ "columns" : ["Id", "FirstName", "IsActive"], "rows" : [ [1,"bob",true], [2,"sam",false], [3,"joe",true] ] } |

Array Transformation Options for 2D array

Sample JSON with Multi Dimensional array (No columns in document)

|

1 2 3 |

{ "rows" : [ [1,"bob",true], [2,"sam",false], [3,"joe",true] ] } |

Array Transformation Options for 2D array

Performance Tips

In this section we will describe how to make your things faster.

Reduce number of requests to server by changing page size

If you are doing REST API paging to get full resultset then make sure you adjust page size to appropriate number so total requests sent to server is reduced. Each request to server adds overhead. Most of API supports page size parameter. Refer to your API documentation how to adjust page size.

Avoid multiple REST API Round trips by caching response

If you extracting multiple arrays from REST API response using above technique then we recommend you to use REST API Task so save response to File or variable first and then use cached JSON data multiple times. This approach is not as friendly as others but it can certainly reduce your total API requests.

Use GZip compression

If your REST API supports GZip compression then check Enable Gzip on Advanced tab of SSIS JSON Source (File, REST API Connector). This will compress response in Gzip format from server and when it comes to your server it will be automatically decompressed by JSON Source connector. This seeds up thing significantly if you have lots of data to download.

Uncheck include parent columns if not needed

By default JSON Source connect includes parent column (Level above last property in filter) from the Filter path you specify. For Example if you specify $.store.employees[*] and “Include parent columns” checked then It will extract all employees and then also include parent level columns for store (Tip: Parent column name start with P_ by default) . If you don’t need parent columns then uncheck from output, this reduces many steps during data extract process.

Download sample package

Click here to download SSIS 2012 Package (Will work in SSIS 2014, 2016)

Conclusion

In this post you learned how to extract data from JSON array and how to make data extract fast. Download SSIS JSON Source (File, REST API Connector) to try everything listed above.