Introduction

If you are looking around to find connectivity options to get Amazon AWS data in Power BI (e.g. Read from Amazon S3 files (CSV, JSON, XML) or get AWS API data such as Billing Data by calling REST API) then unfortunately as of now Power BI doesn’t support it natively.

If you are looking around to find connectivity options to get Amazon AWS data in Power BI (e.g. Read from Amazon S3 files (CSV, JSON, XML) or get AWS API data such as Billing Data by calling REST API) then unfortunately as of now Power BI doesn’t support it natively.

In this article, you will learn How to read Amazon S3 data in Power BI (Using S3 CSV Driver) and in later section we will also learn How to import data from AWS API Calls (e.g. Billing, EC2, S3, DynamoDB). We will use ZappySys REST / JSON Driver to access AWS data without any ETL or Programming.

In our previous article, we discussed how to access REST API data in Power BI (Read JSON / XML Web API). Now let’s learn how to connect to AWS in Power BI.

In this article, We will explore interesting scenarios such as below. It will help you to connect Amazon AWS in Power BI and import data from AWS without doing any ETL or programming.

- Import JSON file from S3 bucket in Power BI (Using Amazon S3 Driver for JSON Files).

- Read CSV file from S3 bucket in Power BI (Using Amazon S3 Driver for CSV Files).

- Read XML file from S3 bucket in Power BI (Using Amazon S3 Driver for XML Files).

- Call Amazon AWS REST API (JSON or XML) and get data in Power BI. Some examples of API calls.

- Get EC2 VM count and their Status in Power BI Dashboard

- Read data from AWS Athena Service

- Use AWS Cost / Billing API to display monthly Cost by Service

- Display summary of Redshift Clusters

- Extract response from Lambda function and display in Power BI

So let’s get started…

Requirements

- First, you will need to have Power BI Desktop (FREE) installed

- Make sure you have AWS Access Key and Secret Key to access AWS data or call API for the desired service

- On third place, our ZappySys ODBC Power Pack

Import Amazon S3 data in Power BI (CSV, JSON or XML Files)

In this section we will look at step by step approach to load Amazon S3 data in Power BI. Your file can be compressed (GZip, Zip) or un-compressed. We will use ZappySys Amazon S3 driver for CSV Files for demo but you can also use Amazon S3 JSON Driver or Amazon S3 Driver for XML Files same way for reading different file formats.

Configure ODBC DSN for Amazon S3 Files ( JSON / XML / CSV )

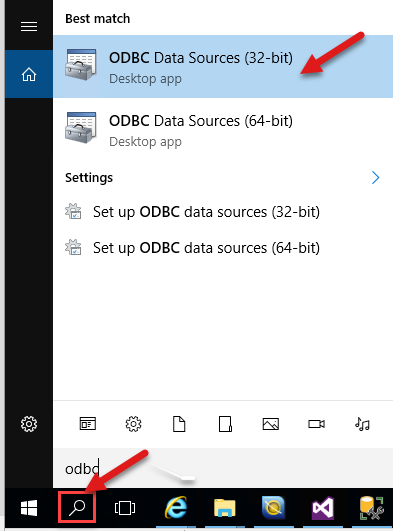

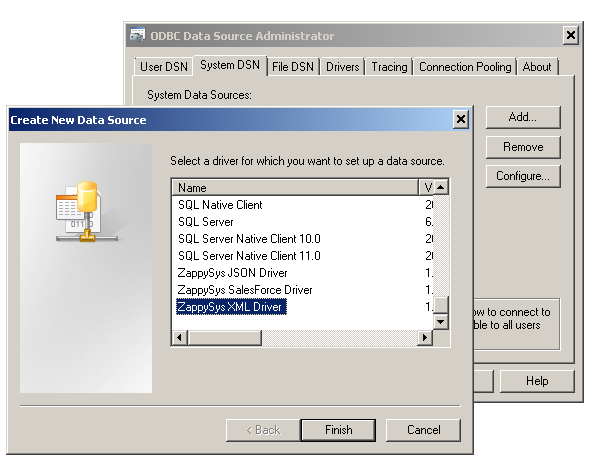

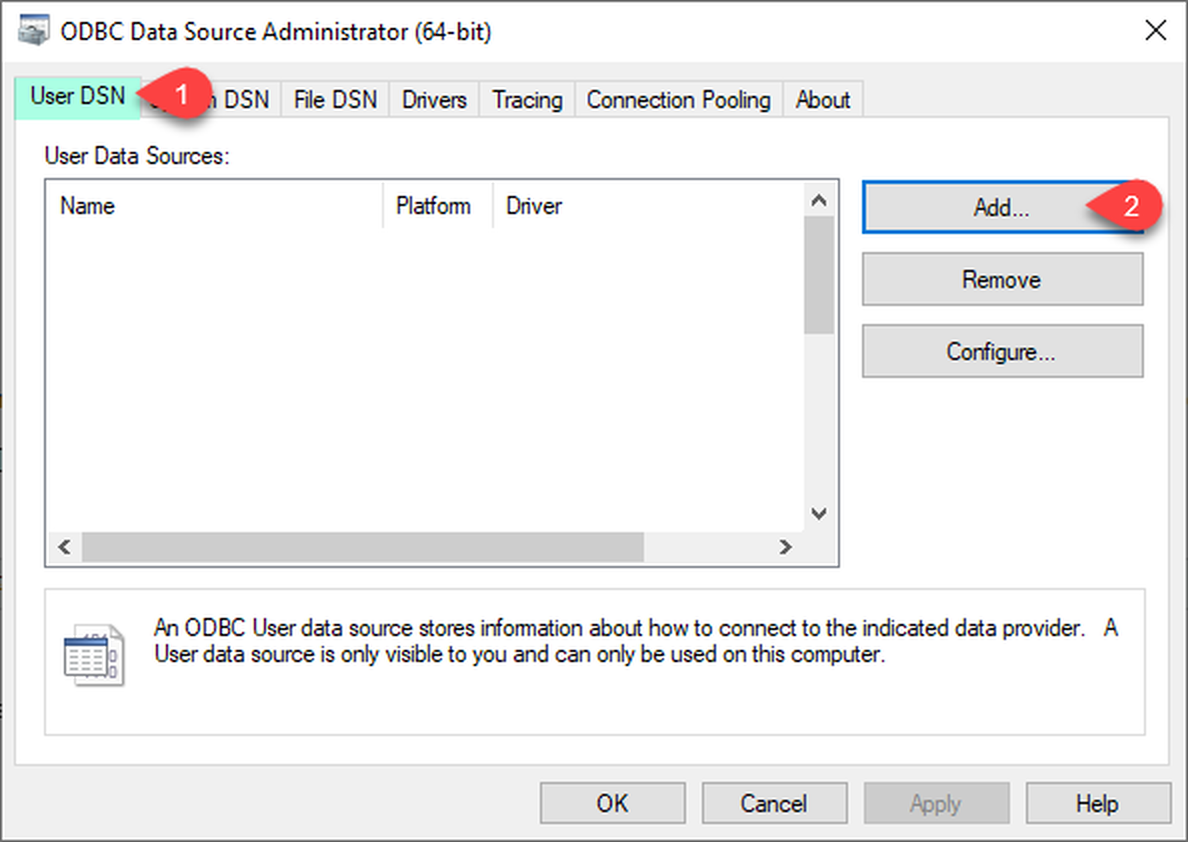

- Type odbcad32.exe in your search box and launch the DSN Config utility.

- you want access for yourself then stay of User DSN Tab. If you want grant access other users then go to System DSN tab. For SQL Server Integration go to System Tab and add new System DSN rather than User DSN. Click New button.

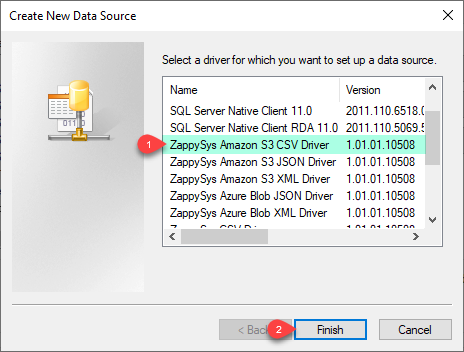

- From the Driver list Select ZappySys ODBC Driver. For this example select [ZappySys Amazon S3 CSV Driver].

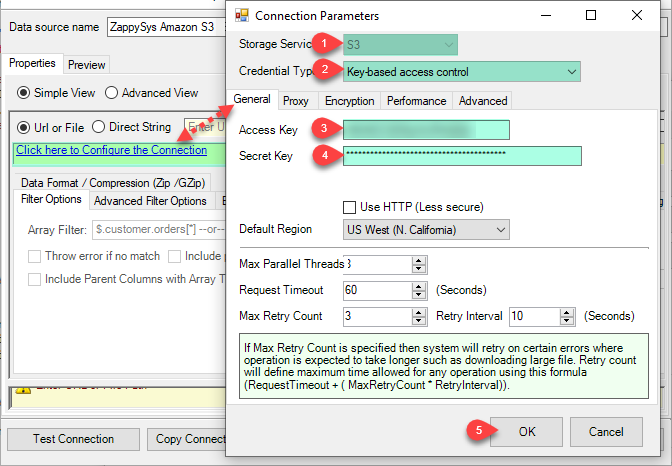

- Now, we need Amazon S3 Storage Connection. Lets create it.

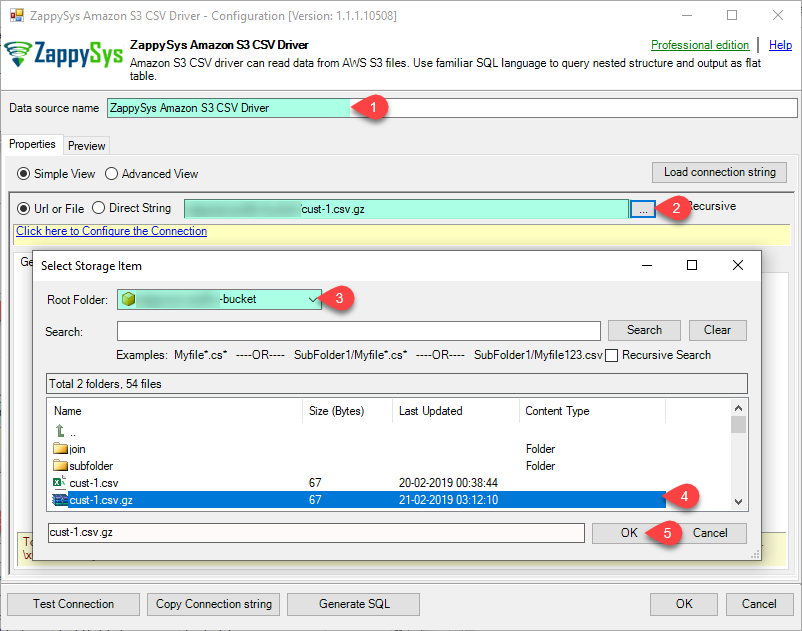

- Now, When you see DSN Config Editor with zappysys logo first thing you need to do is change default DSN Name at the top and Select your bucket and file from it.

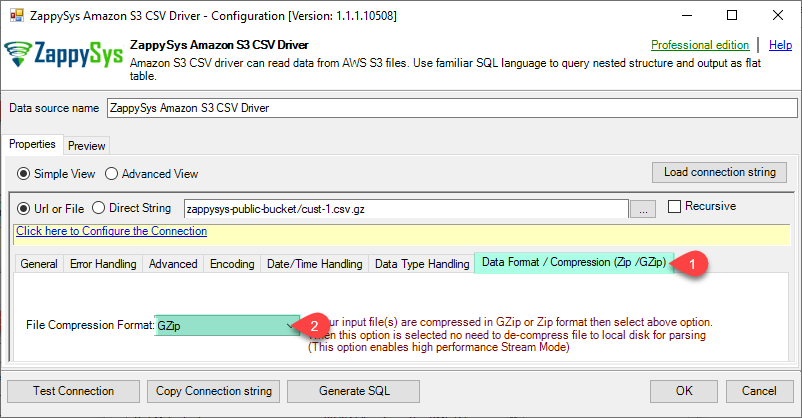

- Here, in the Compression (Zip/GZip) tab set suitable file format.

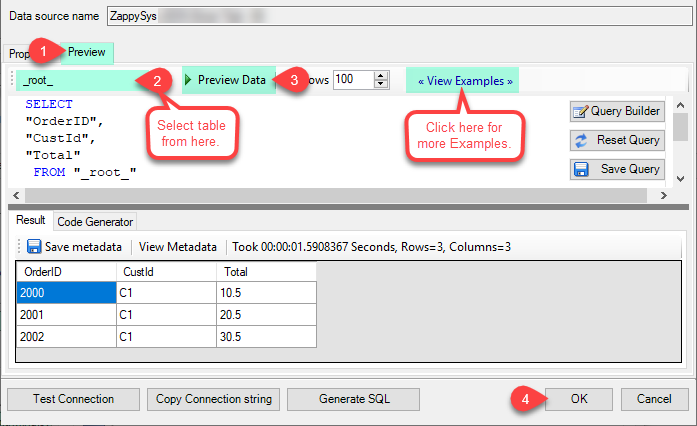

- Click on Preview Tab, Select Table from Tables Dropdown and select [value] and click Preview.

- If you are using DSN method then Click OK to save our DSN Configuration.

Load Amazon S3 Data in Power BI – using ODBC Driver Connection

In the previous section, we configured ODBC DSN for AWS API call and added the ZappySys drivers in the ODBC Driver Administrator with information to connect to AWS S3 Files. We queried S3 File using ZappySys Amazon S3 Driver for CSV Files. Now we will open Power BI and import the information from ODBC DSN we defined earlier.

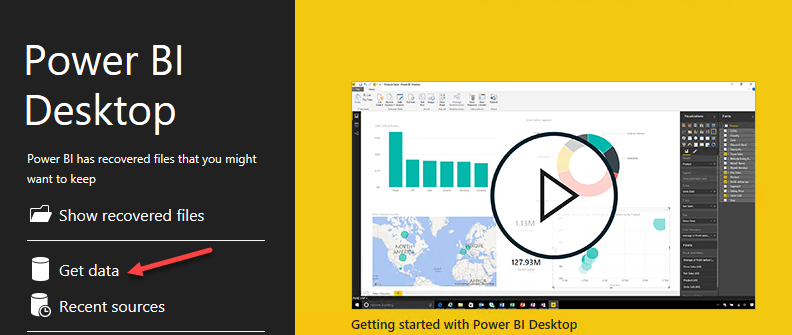

- Open Power BI Desktop and select the option Get data

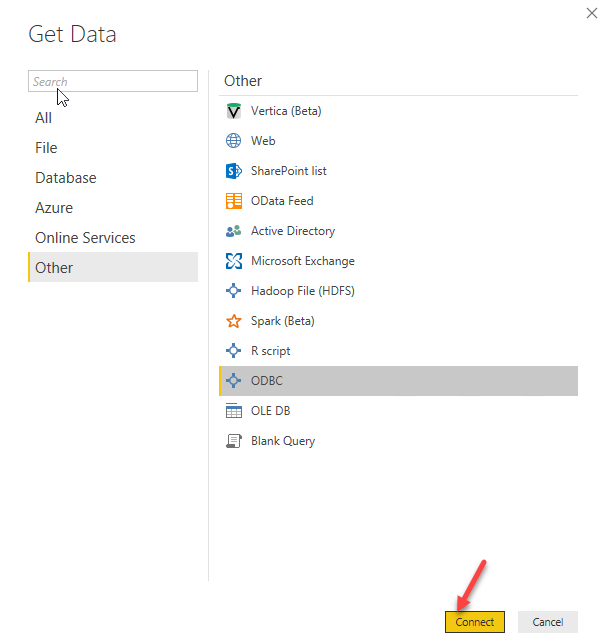

- Get Data will allow adding the ZappySys ODBC driver. Go to Other and select ODBC.

- Select ODBC DSN name from the DSN dropdown

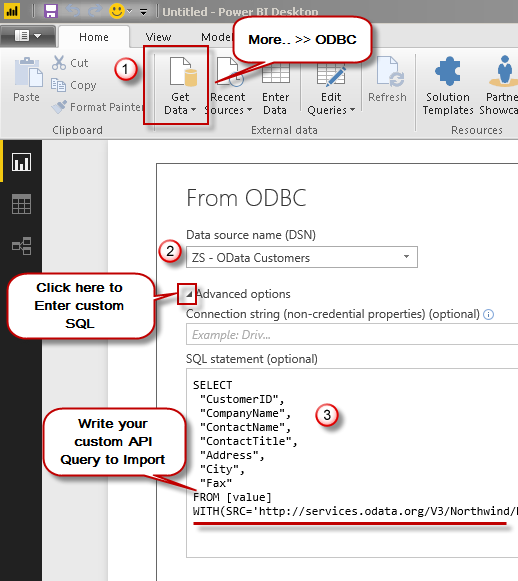

- Now its time to import data. Basically, there are two modes to import data. Table Mode and Query Mode. We will Import using Power BI Query Mode

- Select your DSN and click Advanced Option to enter custom SQL Query to Import your REST API data. You can use ODBC DSN Data sources Preview tool to generate SQL Query. For example, you can enter a query like below. WITH clause is optional but you can supply many options to override DSN UI settings (e.g. Path, Credentials ).

12345678910/*--------- Amazon S3 Driver - Read CSV File Example - Override UI credentials in WITH clause ----------*/SELECT * FROM $WITH (Src='Northwind-Bucket/Orders*.csv' --use wildcard * for pattern search-- ,AccessKey='AKIAxxxx' --not needed if you set in DSN connection-- ,SecretKey='a1wxxxxxxxxxxxxxx' --not needed if you set in DSN connection-- ,RegionName='us-west-1'-- ,ColumnDelimiter='|'-- ,HasColumnHeaderRow='False')

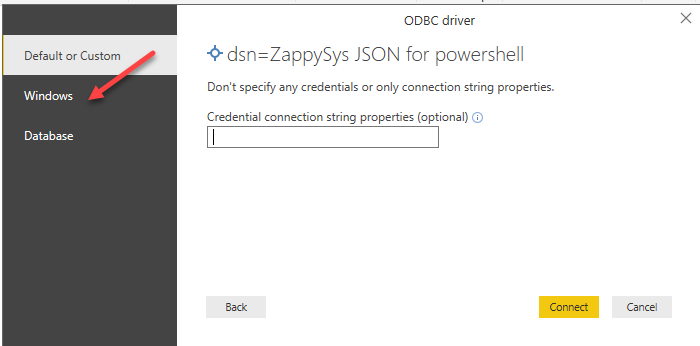

- On the next screen select Windows Authentication to continue (No userid and password).

- Click OK to import data in Power BI

- Now you can create custom Dashboard from imported Dataset.

AWS API Example – Call Amazon S3 API in Power BI

In our previous section we saw how to read Amazon S3 data using native ZappySys S3 Drivers (For CSV , JSON and XML Files), this approach is preferred way to read S3 Files for sure because it gives you UI to browse files, it gives you ability to read from multiple files and many more S3 specific features.

However in some cases you have to call REST API in a manual way using REST API driver rather than S3 Driver. In next section we will see more interesting use case of calling AWS API (e.g. Billing / Cost API).

Now lets look at steps – How to import Amazon S3 data Power BI by calling REST API manually. Steps listed below are almost identical for XML or JSON data format except for selecting Driver Type (i.e. JSON, XML, CSV).

Configure ODBC DSN for REST API ( JSON / XML / CSV )

To consume S3 data in Power BI, first we have to create ODBC DSN using ZappySys Driver (XML or JSON). Perform the following steps.

- To do this, we will first open the ODBC Data Source (32 bit):

- Use the User DSN page and press Add

- Add the ZappySys XML Driver if you are accessing XML files from S3 Bucket or calling any AWS API which returns data in XML format. Select JSON driver for JSON data (or API Access in JSON Format)

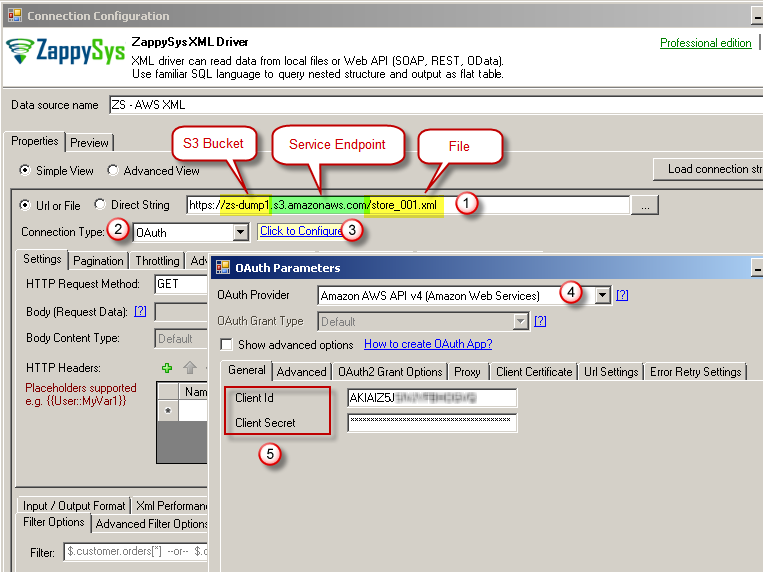

- It is time to connect with Amazon S3 File and read data. In Data Source (URL or File Path), we will use XML file URL as below. Notice that S3 URL has 3 parts (zs-dump1 is bucket name, s3.us-east-1.amazonaws.com is service endpoint for S3 (some service doesn’t require region) and store_001.xml is our file name. After you specify URL select Connection as per the screenshot.Syntax for URL : BucketName + ServiceURL + FilePath.If you are not sure what can be your service endpoint then check this table.

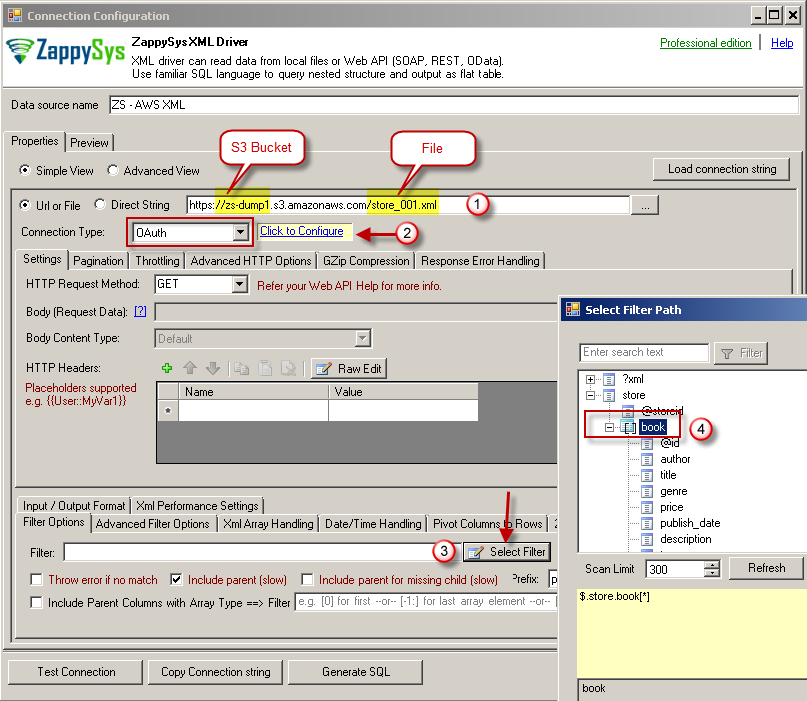

12345https://zs-dump1.s3.amazonaws.com/store_001.xml--OR--https://zs-dump1.s3.amazonaws.com/mysubfolder/store_001.xml--OR--https://zs-dump1.s3.us-east-1.amazonaws.com/mysubfolder/store_001.xmlNOTE: If a filename contains a space, e.g. “store abc.xml“, make sure to replace space with “%20” (“+” will not work). So “store abc.xml” should become “store%20abc.xml“. - Once you specify the URL and Credentials, Next select Filter (This helps to flatten the nested hierarchy of your Data). If you don’t have nested Hierarchy then skip this step.

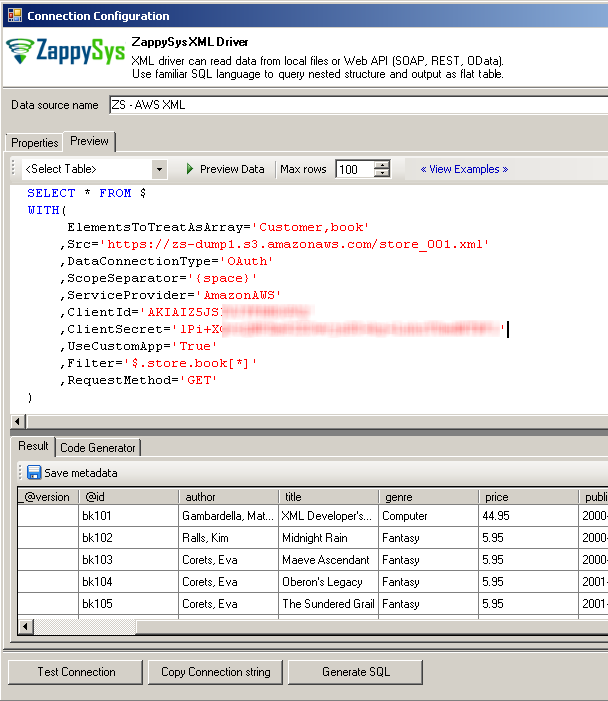

- Now go to Preview Tab and click Preview button in the toolbar. You can remove attributes in the query to use default attributes from Previous Tab. You can always override DSN level setting inside WITH clause of SQL.

- To learn more about query language click on View Examples

- Click OK to save your ODBC DSN settings.

Import AWS S3 File or AWS API data in Power BI dataset

Now let’s look at how to import Amazon S3 data in Power BI using ZappySys XML driver.

In the previous section, we configured ODBC DSN for AWS API call and added the ZappySys drivers in the ODBC Driver Administrator with information to connect to AWS REST API. We queried S3 File using ZappySys XML Driver. Now we will open Power BI and import the information from ZappySys JSON Driver via ODBC connectivity option in Power BI.

- Open Power BI Desktop and select the option Get data

- Get Data will allow adding the ZappySys ODBC driver. Go to Other and select ODBC.

- Select ODBC DSN name from the DSN dropdown

- Now its time to import data. Basically, there are two modes to import data. Table Mode and Query Mode. We will Import using Power BI Query Mode

- Select your DSN and click Advanced Option to enter custom SQL Query to Import your REST API data. You can use ODBC DSN Data sources Preview tool to generate SQL Query. For example, you can enter a query like below.

1234567SELECT "author", "title", "genre", "price", "publish_date", "description", "tag"FROM $WITH(Src='https://zs-dump1.s3.amazonaws.com/store_001.xml',Filter='$.store.book[*]',ElementsToTreatAsArray='book') - On the next screen select Windows Authentication to continue (No userid and password).

- Click OK to import data in Power BI

- Now you can create custom Dashboard from imported Dataset.

AWS API Example – Import Amazon AWS Cost / Billing data in Power BI

So far you have seen how to connect to S3 File. Now let’s look at another interesting example to call AWS API and display information in Power BI dashboard. For example purpose, we are going to call this AWS Billing and Cost Analysis API. Perform following steps.

- Create new DSN using Zappysys JSON Driver

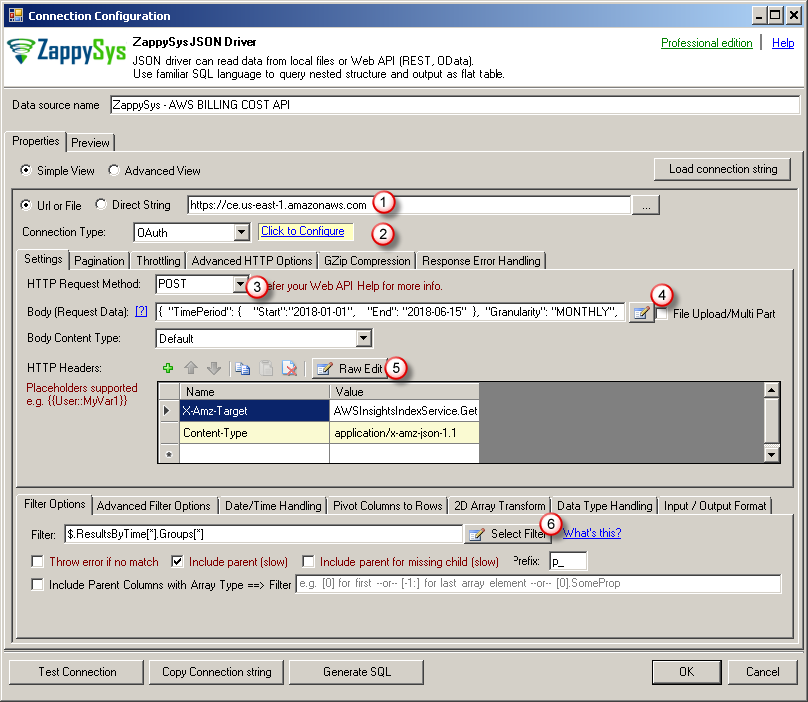

- Configure ODBC DSN as below

- Enter URL as we discussed in previous section. For example to call Billing API Service for East-1 Region we need to enter following way.

1https://ce.us-east-1.amazonaws.com - Select Connection Type as OAuth and click Configure Link.

- Select Provider as AWS API v4 and enter AWS Key and Secret. Click OK to save the connection.

- Select Method as POST

- Enter HTTP Request Headers as below (Note each Billing Cost API action will have different Header value for X-Amz-Target so refer API documentation

- Enter Request Body (Modify your report parameters). As you see in below request we are requesting billing data for 3 services for Jan-1-2018 till Jun-15-2018. If you dont know service name then simply refer to your Bill and copy Service Name in Values array.

1234567891011121314151617181920212223242526272829{"TimePeriod": {"Start":"2018-01-01","End": "2018-06-15"},"Granularity": "MONTHLY","Filter": {"Dimensions": {"Key": "SERVICE","Values": ["Amazon Simple Storage Service","Amazon DynamoDB","Amazon Redshift","AWS Key Management Service"]}},"GroupBy":[{"Type":"DIMENSION","Key":"SERVICE"},{"Type":"TAG","Key":"Environment"}],"Metrics":["BlendedCost", "UnblendedCost", "UsageQuantity"]} - Select Filter as $.ResultsByTime[*].Groups[*]

- Click Test Connection button see it shows OK.

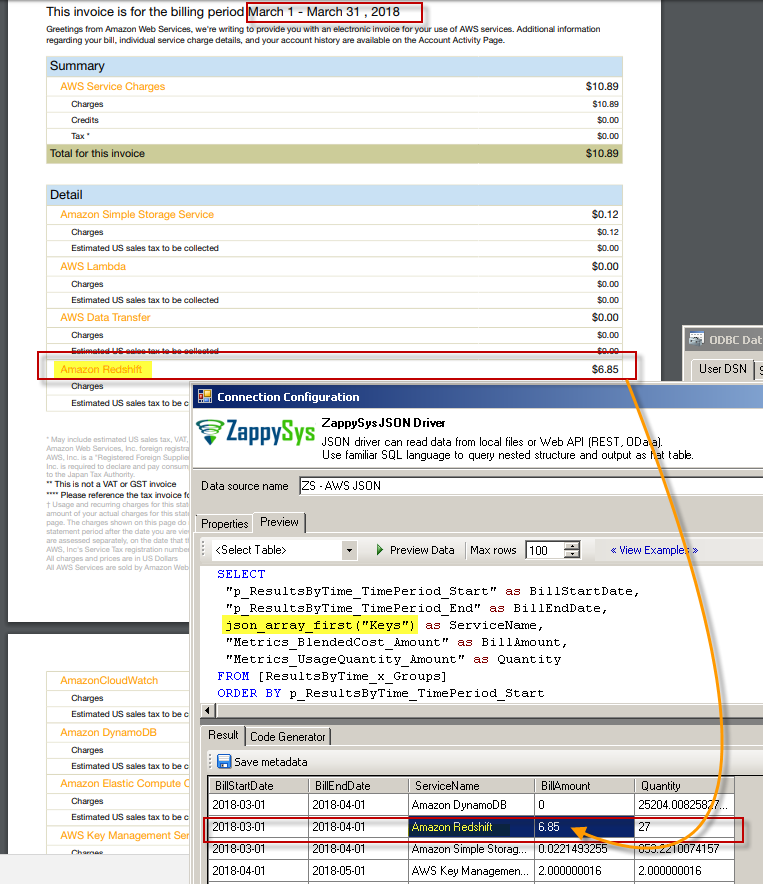

- If the connection is OK then switch to Preview Tab to test query.

- You can select Table name from the drop-down or type query as below

Simple-Query to Extract AWS Billing / Cost Information

12345678SELECT"p_ResultsByTime_TimePeriod_Start" as BillStartDate,"p_ResultsByTime_TimePeriod_End" as BillEndDate,json_array_first("Keys") as ServiceName,"Metrics_BlendedCost_Amount" as BillAmount,"Metrics_UsageQuantity_Amount" as QuantityFROM [ResultsByTime_x_Groups]ORDER BY p_ResultsByTime_TimePeriod_Start - You can also override attributes in your query using below syntax

Custom-Query to Extract AWS Billing / Cost Information (WITH clause)

12345678910111213141516171819202122232425262728293031323334353637383940414243444546474849SELECT"p_ResultsByTime_TimePeriod_Start" as BillStartDate,"p_ResultsByTime_TimePeriod_End" as BillEndDate,json_array_first("Keys") as ServiceName,"Metrics_BlendedCost_Amount" as BillAmount,"Metrics_UsageQuantity_Amount" as QuantityFROM $ORDER BY p_ResultsByTime_TimePeriod_StartWITH(Src='https://ce.us-east-1.amazonaws.com',DataConnectionType='OAuth',ScopeSeparator='{space}',ServiceProvider='AmazonAWS'--,ClientId='AKIAxxxxxxxxxxxxxxx'--,ClientSecret='lPi+XQxxxxxxxxxxxxxxxx',UseCustomApp='True',Filter='$.ResultsByTime[*].Groups[*]',RequestData='{"TimePeriod": {"Start":"2018-01-01","End": "2018-06-15"},"Granularity": "MONTHLY","Filter": {"Dimensions": {"Key": "SERVICE","Values": ["Amazon Simple Storage Service","Amazon DynamoDB","Amazon Redshift","AWS Key Management Service"]}},"GroupBy":[{"Type":"DIMENSION","Key":"SERVICE"},{"Type":"TAG","Key":"Environment"}],"Metrics":["BlendedCost", "UnblendedCost", "UsageQuantity"]}',Header='X-Amz-Target: AWSInsightsIndexService.GetCostAndUsage || Content-Type: application/x-amz-json-1.1',RequestMethod='POST') - Click Preview button to see the result.

- Click OK to save Your DSN

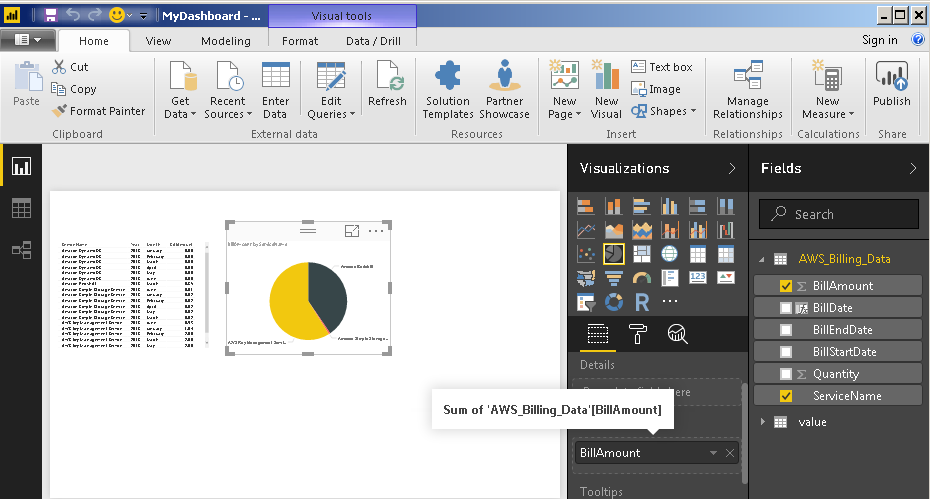

- Now you can Import Billing / Cost data in Power BI using same steps as previous section (Except use custom Query and DSN we just created for AWS API call)

- Repeat steps to import more datasets using different queries

AWS API Example – Import Data From AWS Lambda in Power BI

Now let’s look at another scenario. Assume that someone from other team has written a Lambda Function which exposes certain data in JSON format which you want to display that in your Dashboard. Refer to this Lambda API documentation to learn more about API syntax.

Here is an example query to Call Lambda Function (submit input data in JSON and receive data in JSON format)

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 |

SELECT * FROM $ WITH( Src='https://lambda.us-east-1.amazonaws.com/2015-03-31/functions/HelloWorld/invocations' ,RequestMethod='POST' ,RequestData='{"id":1}' ,RequestContentTypeCode='ApplicationJson' /*** Uncomment to override DSN settings ,DataConnectionType='OAuth' ,ScopeSeparator='{space}' ,ServiceProvider='AmazonAWS' --,ClientId='AKxxxxxxxxxxx' --,ClientSecret='lPi+Xxxxxxxxxxxxxxxxxxxxxxx' ,UseCustomApp='True' ***/ ) |

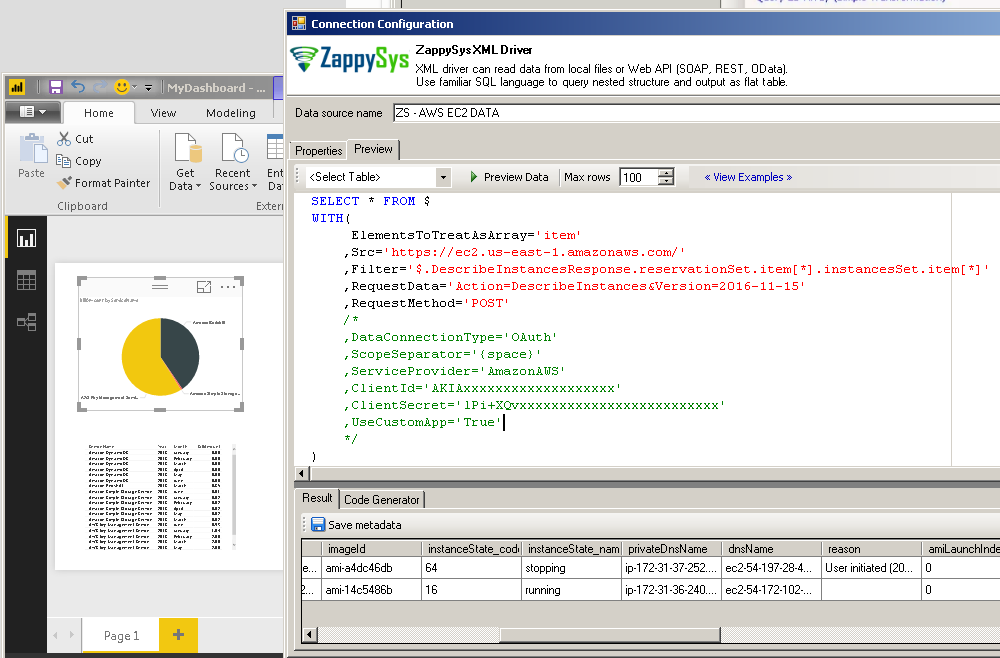

AWS API Example – Get EC2 VM information in Power BI

Now let’s look at AWS EC2 Service API (Virtual Machine Service). This API is in XML format so you have to use ZappySys XML Driver. Below query calls this EC2 API to get list of all instances.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 |

SELECT * FROM $ WITH( ElementsToTreatAsArray='item' ,Src='https://ec2.us-east-1.amazonaws.com/' ,Filter='$.DescribeInstancesResponse.reservationSet.item[*].instancesSet.item[*]' ,RequestData='Action=DescribeInstances&Version=2016-11-15' ,RequestMethod='POST' /* ,DataConnectionType='OAuth' ,ScopeSeparator='{space}' ,ServiceProvider='AmazonAWS' ,ClientId='AKIAxxxxxxxxxxxxxxxxxxx' ,ClientSecret='lPi+XQvxxxxxxxxxxxxxxxxxxxxxxxxx' ,UseCustomApp='True' */ ) |

AWS API Example – Get AWS S3 Buckets and Files Count / Size in Power BI

Now let’s look at how to Query S3 File System in Power BI. For example if you have to prepare dashboard to show Total Data Size and File count in a given Bucket then you can write following Query. For more information check Bucket List API Help

Get File Size and Count for a given S3 Bucket

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 |

SELECT count(*) as TotalFiles,sum(Size)/1024/1024 as TotalMB FROM $ WITH( ElementsToTreatAsArray='Contents' ,Src='https://zs-dump1.s3.amazonaws.com/?list-type=2&prefix=&max-keys=1000' ,Filter='$.ListBucketResult.Contents[*]' ,NextUrlAttributeOrExpr='$.ListBucketResult.NextContinuationToken' ,NextUrlSuffix='&continuation-token=<%nextlink_encoded%>' /**** Uncomment below to use override DSN values ,DataConnectionType='OAuth' ,ScopeSeparator='{space}' ,ServiceProvider='AmazonAWS' ,ClientId='AKIAxxxxxxxxxxxxxxxxxxxxxxxxxxxxx' ,ClientSecret='lPi+Xcxxxxxxxxxxxxxxxxxxxxxxxx' ,UseCustomApp='True' ,RequestMethod='GET' ***/ ) |

To list buckets you can use following query

List S3 Buckets

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 |

SELECT * FROM $ WITH( Src='https://s3.amazonaws.com' ,Filter='$.ListAllMyBucketsResult.Buckets.Bucket[*]' ,ElementsToTreatAsArray='Buckets' /**** Uncomment below to use override DSN values ,DataConnectionType='OAuth' ,ScopeSeparator='{space}' ,ServiceProvider='AmazonAWS' ,ClientId='AKIAxxxxxxxxxxxxxxxxxxxxxxxxxxxxx' ,ClientSecret='lPi+Xcxxxxxxxxxxxxxxxxxxxxxxxx' ,UseCustomApp='True' ,RequestMethod='GET' ***/ ) |

Debugging / Crafting AWS API Call Request

Check below article to learn various techniques to debug and craft AWS API Request Body / Headers and URL.

How to call Amazon AWS API using SSIS (EC2, Lambda, API Gateway, SQS)

Understanding AWS REST API Pagination in Power BI

Paginate by Response Attribute

This example shows how to paginate API calls where you need to paginate until the last page detected. In this example, next page is indicated by some attribute called nextlink (found in response). If this attribute is missing or null then it stops fetching the next page.SELECT * FROM $ WITH( SRC=@'https://zappysys.com/downloads/files/test/pagination_nextlink_inarray_1.json' ,NextUrlAttributeOrExpr = '$.nextlink' --keep reading until this attribute is missing. If attribute name contains dot then use brackets like this $.['my.attr.name'] )

Paginate by URL Parameter (Loop until certain StatusCode)

This example shows how to paginate API calls where you need to pass page number via URL. The driver keeps incrementing page number and calls next URL until the last page detected (401 error). There are few ways to indicate the last page (e.g. By status code, By row count, By response size). If you don't specify end detection then it will use the default (i.e. No records found).SELECT * FROM $ WITH ( SRC=@'https://zappysys.com/downloads/files/test/page-xml.aspx?page=1&mode=DetectBasedOnResponseStatusCode' ,PagingMode='ByUrlParameter' ,PagingByUrlAttributeName='page' ,PagingByUrlEndStrategy='DetectBasedOnResponseStatusCode' ,PagingByUrlCheckResponseStatusCode=401 ,IncrementBy=1 )

Paginate by URL Path (Loop until no record)

This example shows how to paginate API calls where you need to pass page number via URL Path. The driver keeps incrementing page number and calls next URL until the last page is detected. There are few ways to indicate the last page (e.g. By status code, By row count, By response size). If you don't specify end detection then it will use the default (i.e. No records found).SELECT * FROM $ WITH ( SRC=@'https://zappysys.com/downloads/files/test/cust-<%page%>.xml' ,PagingMode='ByUrlPath' ,PagingByUrlAttributeName='<%page%>' ,PagingByUrlEndStrategy='DetectBasedOnRecordCount' ,IncrementBy=1 )

Paginate by Header Link (RFC 5988)

API like GitHub / Wordpress use Next link in Headers (RFC 5988)SELECT * FROM $ LIMIT 25 WITH( Src='https://wordpress.org/news/wp-json/wp/v2/categories?per_page=10' ,PagingMode='ByResponseHeaderRfc5988' ,WaitTimeMs='200' --//wait 200 ms after each request )

Handling AWS Web API Error in Power BI

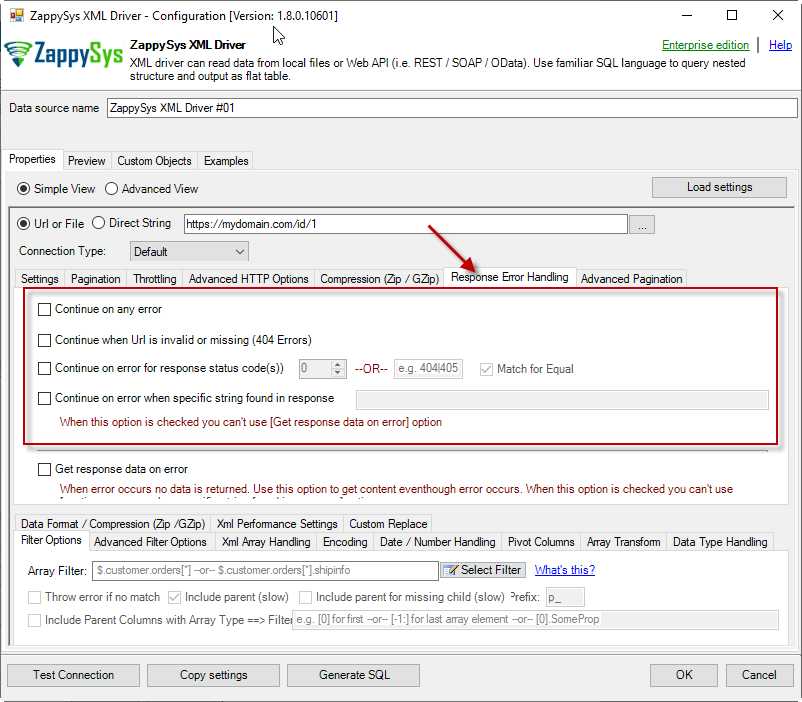

METHOD 1 - Using Error Handling Options

When to use?

You may want to use them when your source is a resource located on the Internet; e.g. a file on a website, a file on an FTP server or just a plain API HTTP response. By default, when a remote server returns an error, data retrieval is stopped, an error is raised and no data is given back to you. This might not be always desirable.Scenario 1

Imagine a scenario, that there is a web server which each day at 12 AM releases a new JSON file with that day's date as filename, e.g. http://www.some-server.com/data/2018-06-20.json. And, of course, you want to download it and use it daily in your Power BI report. But you have a problem: Power BI report data sources are refreshed each hour and you may get HTTP 404 status code (no file was found) when a file is not released yet. Which consequentially means other data sources won't be updated as well and you will see old and cached data on the report. That's where you could use Continue on any error or Continue when Url is invalid or missing (404 Errors) to avoid an error being raised and let other data sources to be updated.Scenario 2

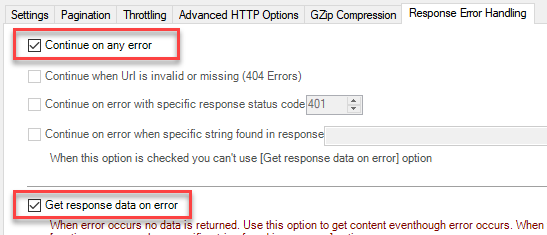

Another scenario is when you expect a web server to raise some kind of HTTP error when accessing a URL. You don't want ODBC Data Source to raise an error but instead, you want to get response data. That's where you can use Continue on any error or alike together with Get response data on error to continue on an error and get the data:

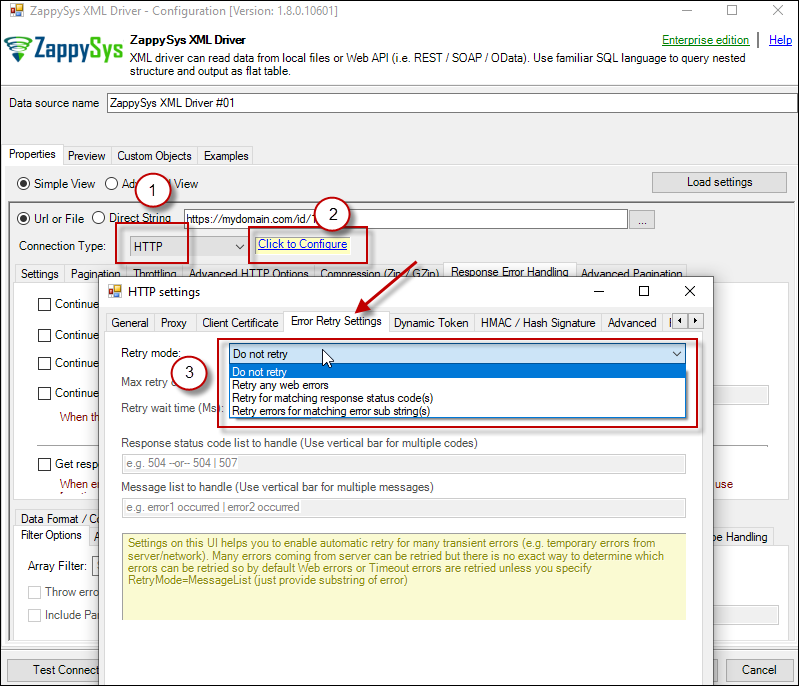

METHOD 2 - Using Connection [Retry Settings]

Another scenario you may run into is a buggy web server. You ask it to give you some file or data and it, like a snotty kid, just doesn't give it to you! You have to ask twice or thrice before it does its job. If that's the case, you have to retry HTTP requests using Connection:

Other Considerations for Calling AWS API in Power BI

API Limit / Throttling

While calling public API or other external web services one important aspect you have to check, how many requests are allowed by your API. Especially when you use API pagination options to pull many records you have to slow down based on API limits. For example, your API may allow you only 5 requests per second. Use Throttling Tab on Driver UI to set delay after each request.2D Array Transformation

If you are using JSON or XML API Driver then possible you may have to transform your data using 2D array transformation feature. Check this link for more information.Conclusion

In this article, we show how to connect to AWS API and S3 File data in Power BI. We configured ODBC DSN for AWS S3 / REST API connection and finally imported data in Power BI. We used ZappySys driver for JSON that can be used to extract data from any REST API or a JSON file. Download ODBC PowerPack to try yourself see how easy it is to consume XML / JSON / REST API and AWS data in Power BI and Say goodbye to ETL or Coding. If you need any help with your API integration feel free to contact zappysys support you will be amazed for sure how quickly your issue is resolved.