Introduction

In this post we will learn how to use ZappySys SSIS XML Source or ZappySys SSIS JSON Source to read large XML or JSON File (Process 3 Million rows in 3 minutes – 1.2 GB file).

If you use default settings to read data then it may result into OutOfMemory Exception so we will outline few techniques which will enable high performance Streaming Mode rather than In-memory load of entire file.

Prerequisites

Before we parse very large XML or JSON files using SSIS , you will need to make sure following prerequisites are met.

- SSIS designer installed. Sometimes it is referred as BIDS or SSDT (download it from Microsoft site).

- Basic knowledge of SSIS package development using Microsoft SQL Server Integration Services.

- You have at least two sample files… One file must be small dataset (less than 10 MB if possible). We will use small dataset file during design mode to get metadata and see data preview on the Component UI.

- Second XML file is the big file with full dataset you like to parse at runtime.

- Make sure SSIS PowerPack is installed. Click here to download.

Step-By-Step : Reading large XML file (SSIS XML Source)

Now let’s look at how to read large XML file (e.g. 3 Million rows or more) using ZappySys XML Source in SSIS. Below steps are identical for ZappySys JSON Source too (Except Step#7).

- Open SSIS Designer and drag Data Flow from SSIS Toolbox

- Double click Data Flow Task to switch to Data flow designer

- Now drag ZS XML Source on the surface from SSIS Toolbox.

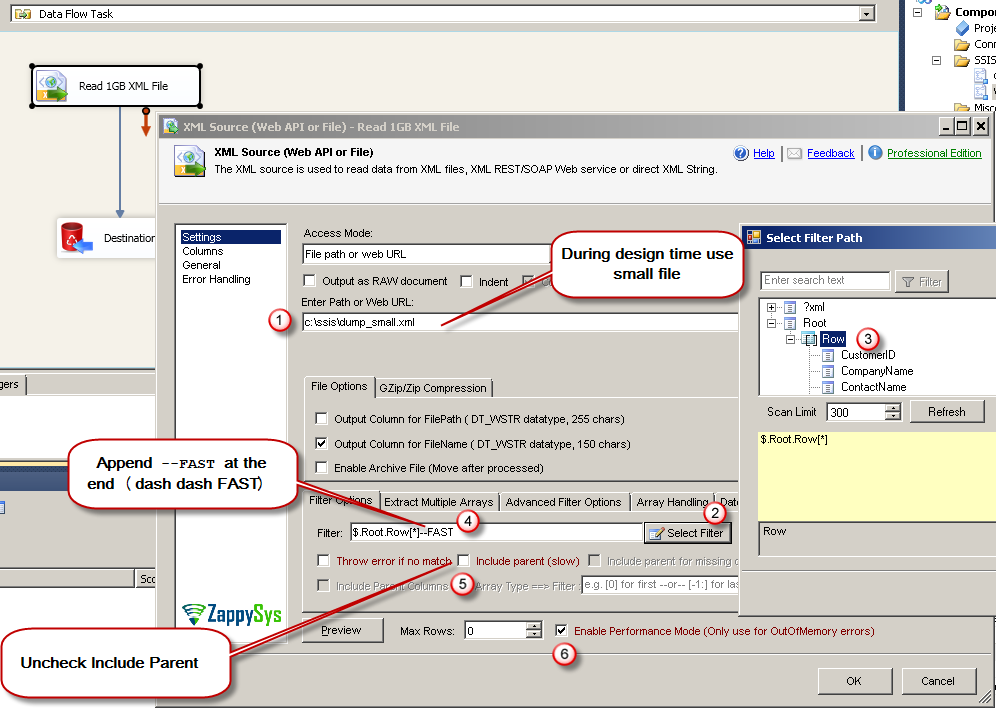

- Double click ZS XML Source and specify small dataset file path you like to parse. (e.g. c:\data\customer_small.xml )

- Click on Select Filter button to find Node which will be treated as Array. Once you close the Filter Browse Dialog. Append

--FAST Your Expression may look like below.

$.Root.Row--FAST - Now uncheck Include Parent Columns option

- Check

Enable Performance Option (For JSON Source Skip this step)NOTE: Try to enable Performance mode setting after you Select Filter (by using smaller dataset file). Once filter is set you can check enable performance mode and make sure following two settings are correctly set (Option#2 needs new version – SSIS v4.1+ / ODBC v1.4+).

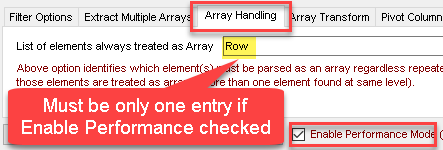

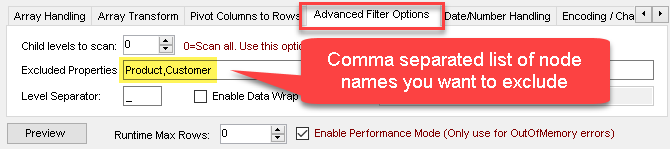

(1) On Array Handling Tab – Set Array node name (must be only one entry). For example your Filter is $.DATA.ORDER[*] then you can enter ORDER (2) On Advanced Filter Options Tab – Enter all unwanted Tag names you like to Skip. For example if you have other arrays like PRODUCT or CUSTOMER then enter PRODUCT,CUSTOMER

- Your setting may look like below

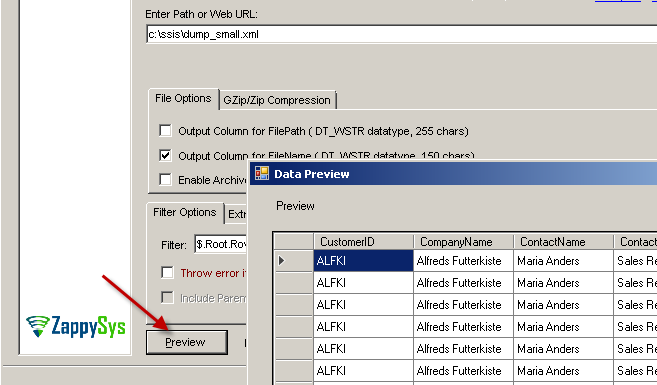

- Click Preview to verify data (Adjust Filter if needed to extract correct Hierarchy)

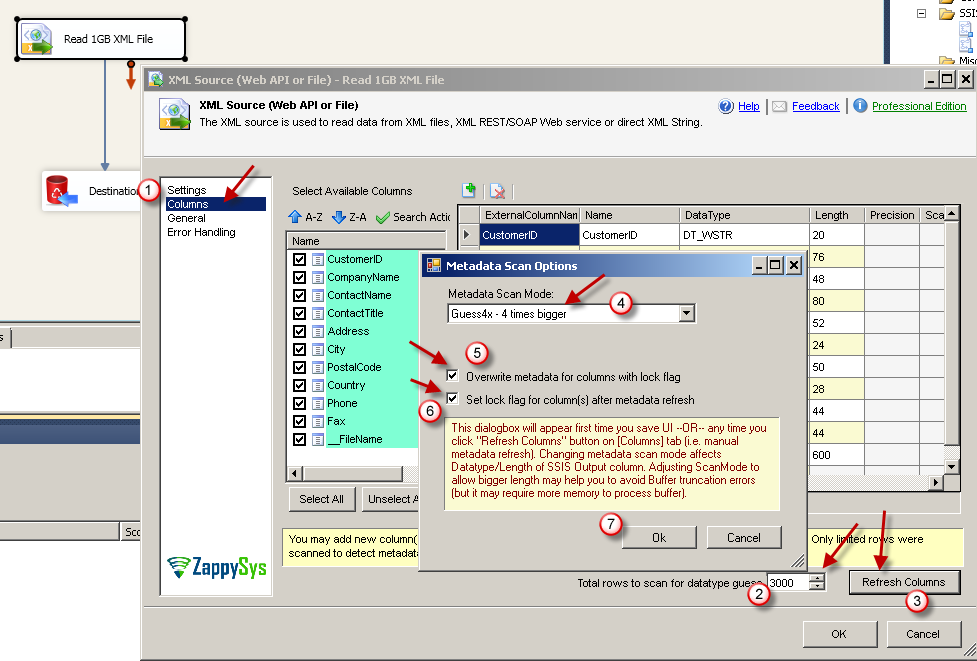

- Click on Columns Tab

- Change Scan Row count to 3000 or more and Click on Refresh Column .

- Select Guess 4x , Check Lock, Check Reset and Click OK like below. At runtime if you ever get error about Data Type Issue you can always adjust this later on too. Make sure Lock column is set to avoid setting reset for manually changed columns. For more information on metadata changes check this article

- Click OK to save settings.

- Now Right click on XML Component > Click Properties. Change DirectPath property to original file path (large file) (e.g. c:\data\customers_large.xml ). Save Package.

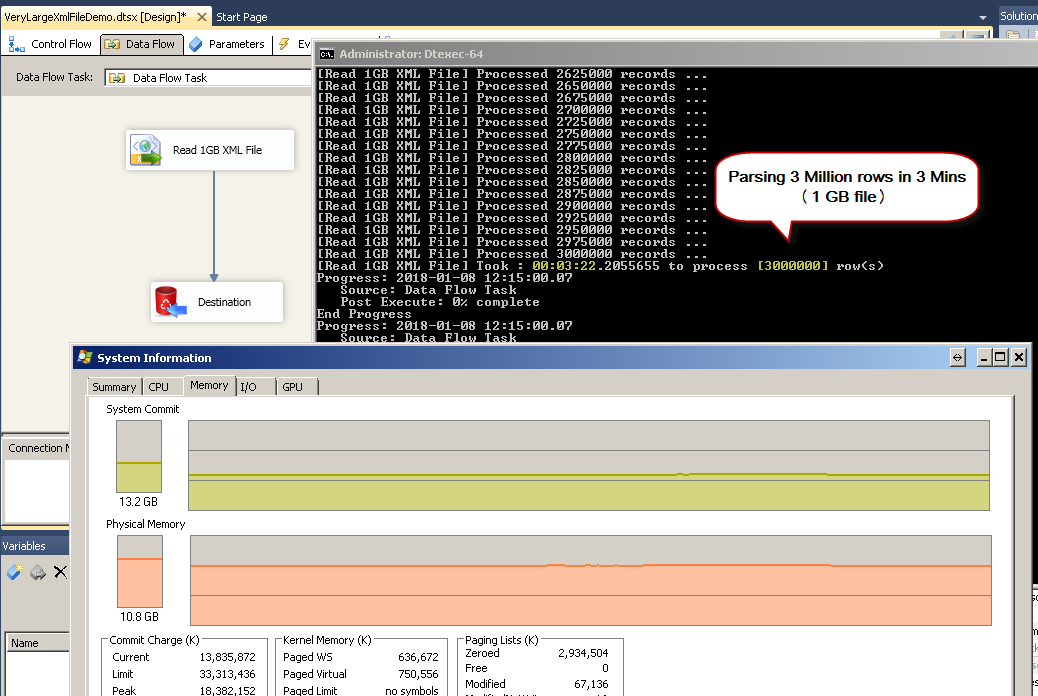

- Now you can run your SSIS Package from Designer or Command line. As you see in the below screenshot that there is virtually no memory pressure when you enable stream mode. Thanks to ZappySys unique XML / JSON Parsing Engine. When streaming mode is enabled file is not loaded into memory for parsing rather than that it only reads record by record to process very large JSON or XML file.In our below example we used Windows 7 Desktop, 16GB RAM, 4 Core i7 64 bit CPU. It took around 3 Minutes to Parse 3 Million Records (1.2 GB big XML file) . If you Parse JSON file then it can be even faster due to compact size.

Step-By-Step : Reading very large JSON file (SSIS JSON Source)

Reading very large JSON file using ZappySys JSON Source has exact same steps described in above section except two changes. You have to use ZS JSON Source and skip Step#7 (Check Enable Performance Mode – This option is not available JSON Source).

Parsing very large XML File with Multiple Arrays

Now let’s discuss a scenario which can result in OutOfMemory Exception unless you tweak some extra options. Assume you have file structure like below.

NOTE: This will only work in version 4.1.0 or later (in SSIS PowerPack) or 1.4.0 or later for ODBC PowerPack

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 |

<?xml version="1.0" encoding="UTF-8"?> <Data> <Product>....<Product> <Product>....<Product> .... many more.... <Product>....<Product> <Customer>....<Customer> <Customer>....<Customer> .... many more.... <Customer>....<Customer> <Row>....<Row> <Row>....<Row> .... many more.... <Row>....<Row> </Data> |

Notice that in above XML it has 3 different nodes (For Product, Customer and Order). If you try to extract Orders it might fail with OutOfMemory Exception because it needs to scan large XML before it can hit First Order node. To solve this issue you can adjust following 2 settings.

- On Array Handling Tab you have following

1Row - On Advanced Filter Options tab enter Following two nodes which we dont want to extract.

1Product,Customer

Thats it. This will avoid excessive Memory pressure to find very first node before it can start Stream.

Conclusion

As you saw in this article that ZappySys SSIS PowerPack is designed to handle very large dataset in JSON or XML. We also support very large CSV and Excel files too which are not covered in this article. Download SSIS PowerPack to explore 70+ more components by yourself to make your ETL simple and Fast.