Introduction

In our previous blog post we saw how to read JSON REST API / XML SOAP in Informatica using ZappySys ODBC Drivers. Now lets look at API Write scenario. We will learn how to to POST data to API in Informatica (Insert / Update). We will use SQL Transformation to fire ODBC Driver Queries to create / update or delete records in Target System by calling Web API (i.e. REST or SOAP).

In our previous blog post we saw how to read JSON REST API / XML SOAP in Informatica using ZappySys ODBC Drivers. Now lets look at API Write scenario. We will learn how to to POST data to API in Informatica (Insert / Update). We will use SQL Transformation to fire ODBC Driver Queries to create / update or delete records in Target System by calling Web API (i.e. REST or SOAP).

For example purpose we will use JSON API but if you are calling XML / SOAP API then use ZappySys XML Driver instead of JSON Driver.

Requirements

This article assumes that you have full filled following basic requirements.

- Download Install ZappySys ODBC PowerPack (Drivers for JSON and REST API)

- Install Informatica PowerCenter Client Tools (e.g. Workflow and Mapping Designers)

- Access to a Relational database such as SQL Server (or use any of your choice e.g. Oracle, MySQL, DB2 ). If nothing available then you can use flat file target.

Video Tutorial – POST data to API in Informatica

Here is the full Video Tutorial with many API tips / tricks to show how to POST data to REST / SOAP API in Informatica PowerCenter. It also covers how to Upload File to Web API.

Getting Started

So let’s get started with basic setup on how to POST data to your API to create new records or update / delete records. In the following demo we are trying to create new Customer records by calling POST API.

For that we will use following setup.

Create ODBC Connection for API

Check this section from previous article to learn how to configure API Connection using ZappySys ODBC Driver for JSON / XML / CSV.

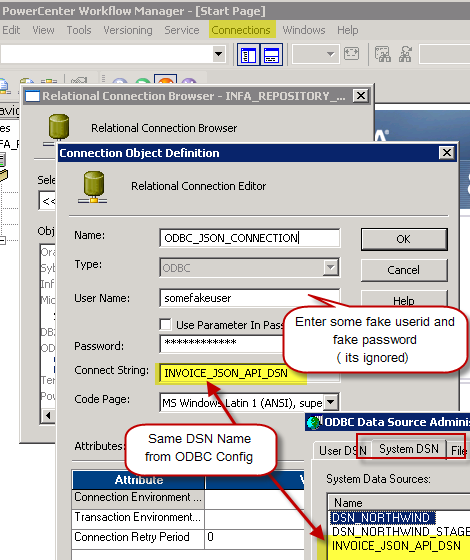

Create Relational Connection in Workflow Designer

Check this section from previous article to learn how to create and configure Relational Connection of ODBC Type which points to DSN (ZappySys JSON Driver).

Configure JSON connection in Informatica for REST API / JSON File connectivity – Using ZappySys JSON ODBC Driver

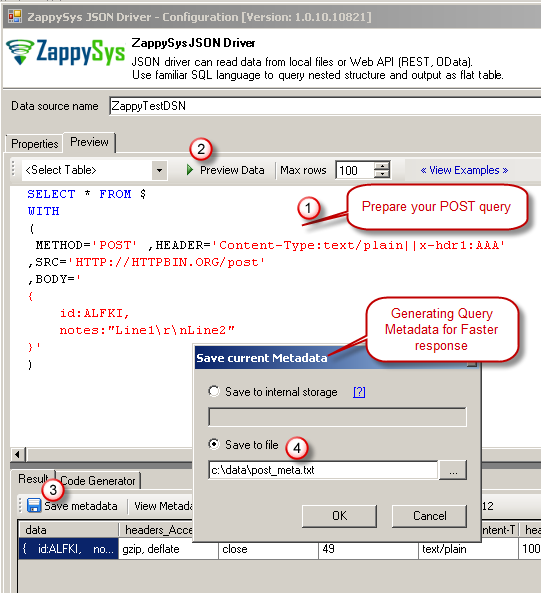

Prepare POST Query and generate Metadata File

Before you can call POST API in Informatica Mapping, you need to generate Metadata file using ODBC Data Source UI. Metadata file creation is important to avoid double calls (One for Metadata and One for Getting Data). When you dont Supply Metadata file to ZappySys Driver then it will try to call API twice (First time read 300 rows to guess datatypes / response columns and then call API again to read all remaining rows from response).

Its OK to allow two reads for the same request if its GET request (Read scenario) but its not OK if you are creating a New records because same request is sent twice and you may end up creating duplicate records.

Obtaining metadata is simple using Query Preview UI.

- Go to ODBC Data Source and setup properties on Driver UI (e.g. URL, Connection, Filter, Headers etc)

- Once everything is configured go to Preview Tab and click Preview Button to see data.

- Once Data is returned as per your need you can click on Save Metadata. Copy SQL Query for later use.

Create Informatica Mapping to POST data to REST API / SOAP Web Service

Once Metadata File is we can proceed to Informatica Mapping designer. For making instructions simple we will skip few details (e.g. Importing Source /Target Definitions). Refer to Previous article to read instructions in depth.

Created Now lets look at how to read data from relational source (E.g. SQL Server, Oracle) and send data to REST API (Call POST API).

- Drag Source into Mapping Designer

- Drag Expression Transform into Mapping Designer and drop desired columns on Expression Transform as Pass through Columns.

- Create one additional Output Port for API URL as below (You may not need this if API URL is not Dynamic. Skipping URL or any other setting in ODBC SQL Query will use DSN Settings you configured in previous section)

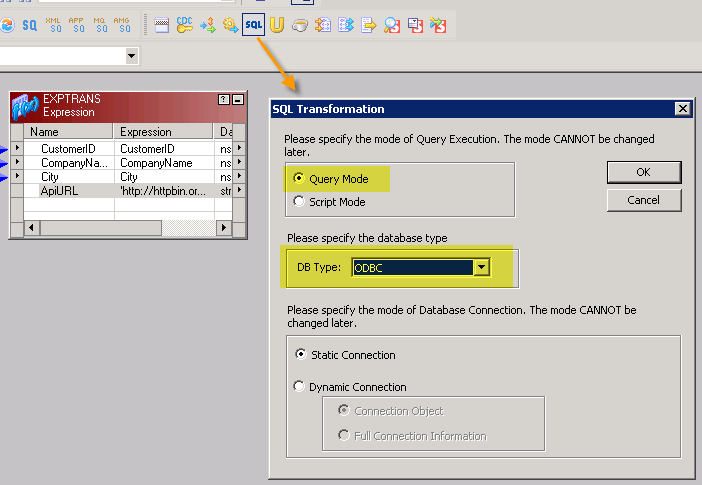

- Drag SQL Transform and select following Option (Mode = Query, Connection Type =ODBC)

- Now connect input columns from Expression Transform to SQL Transformation. These input columns will be used as parameters in API POST Body and SQL Query WITH Clause to craft JSON / XML API request.

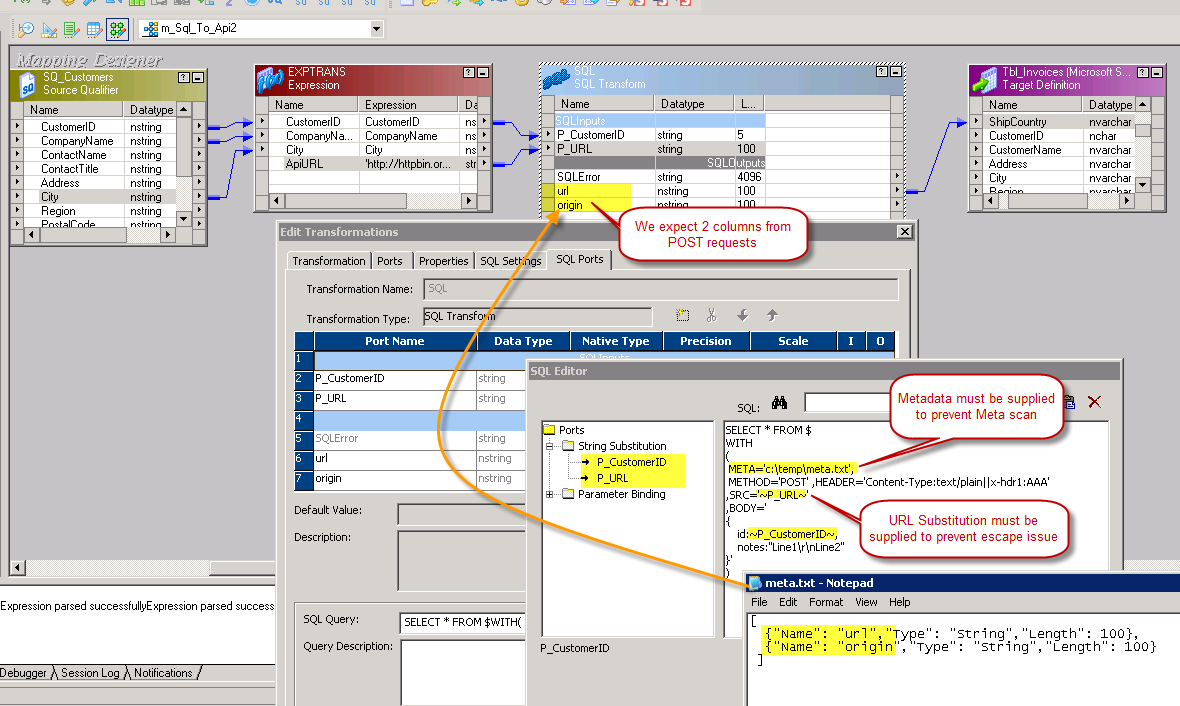

- Now double click on SQL Transform and go to SQL Ports Tab

- Click somewhere in the Output Columns Group and Add desired columns (Tip: Add same number of columns you define in the Metadata File in Previous section. For Demo purpose you can remove some columns from Metadata file so you have only hadful columns to deal with. When you remove columns from Metadata File make sure JSON is Valid. Many times user forget to remove last comma)

- Once you define Input and Output columns for your SQL Transform you can Edit SQL Query button and configure your SQL Query as below. In below example query we have supplied URL, Body, HTTP Headers. Also notice that Body JSON is crafted by using Input Columns from Upstream. We used only CustomerID as an example but in real words you may add many more in your JSON Body.

For Old Version of PowerCenter- V8.5 or lower: It’s important to Pass URL via Substitution else you may get error (http://abc.com is output as http:/abc.com – only one slash)Here is a sample Query for SQL Transform (Added String Substitutions)Few things to notice:

– Output Ports defined in SQL Transform must match order and column names of SQL query. Best thing is to avoid SELECT * FROM and use explicit column names like below. If you already took care column orders in Metadata file then you can use * to shorten your SQL statement.

– Slash (i.e. \ ) is treated as escape character so replace slash with double slash anywhere in SQL text(e.g. c:\temp\meta.txt should be replaced with c:\\temp\\meta.txt).

– If you use ? in URL (e.g. http://myapi/?id=111) then replace ? with \? because ? has special meaning in SQL Transform SQL Text. ? is used in Parameter Binding (e.g. ?my_input_port?) .

– You may use Format Specifiers <<~P_URL~,FUN_JSONENC>> if you want to encode special characters in your data (e.g. Tabs, Double quotes, New lines). Check this link

– Avoid using string specifier before back slash (e.g. c:\\data\\~FileName~ ). because \~ cause issue with escape sequence triggered by \ . To solve this issue Use Expression Transform before SQL Transform to build Dynamic Path or other type of string.

1234567891011121314SELECT url,origin FROM $WITH(META='c:\\temp\\meta.txt',METHOD='POST',HEADER='Content-Type:text/plain||x-hdr1:AAA',SRC='~P_URL~',BODY='{"ID": "~P_CustomerID~","Link": "<<~P_URL~,FUN_JSONENC>>","Notes": "Line1\\r\\nLine2"}') - In the above Screenshot Notice Following items in SQL Query.

- We have specified META property (Use the same Metadata File we generated in the previous section. Meta Property can take direct file path, meta name (settings stored in DSN) or supply direct JSON for Metadata. Notice we escaped \ with \\

12345META='[ {"Name": "MyCol1", "Type": "String", "Length": 100}, {"Name": "MyCol2", "Type": "String", "Length": 100} ...... ]'-- OR --META='c:\\meta\\my_post_query_metadata.txt'-- OR --META='MyMetaName' - Second thing you will Notice that in the Body we have used String Substitution in SRC and BODY. If your URL is not Dynamic and you like to use setting defined in DSN then omit SRC attribute from WITH clause. Substitution must start and end with tild (~). For example Input column P_URL can be used as ~P_URL~ and at runtime it will be replaced. Make sure to escape single quote with double quote (We replaced ‘ with ” in Sam”s) in Input Parameter Values else SQL Query will fail. You can use Expression Transform to escape data if needed using REPLACE function. We also replaced \r\n with \\r\\n

and1SRC='~P_URL~'

12345BODY='{"ID": "~P_CustomerID~","Notes": "Line1\\r\\nLine2, Sam''s Club"}'

- We have specified META property (Use the same Metadata File we generated in the previous section. Meta Property can take direct file path, meta name (settings stored in DSN) or supply direct JSON for Metadata. Notice we escaped \ with \\

- Now connect your SQL Transformation output Ports to Target (e.g. Flat File Target or Relational Target)

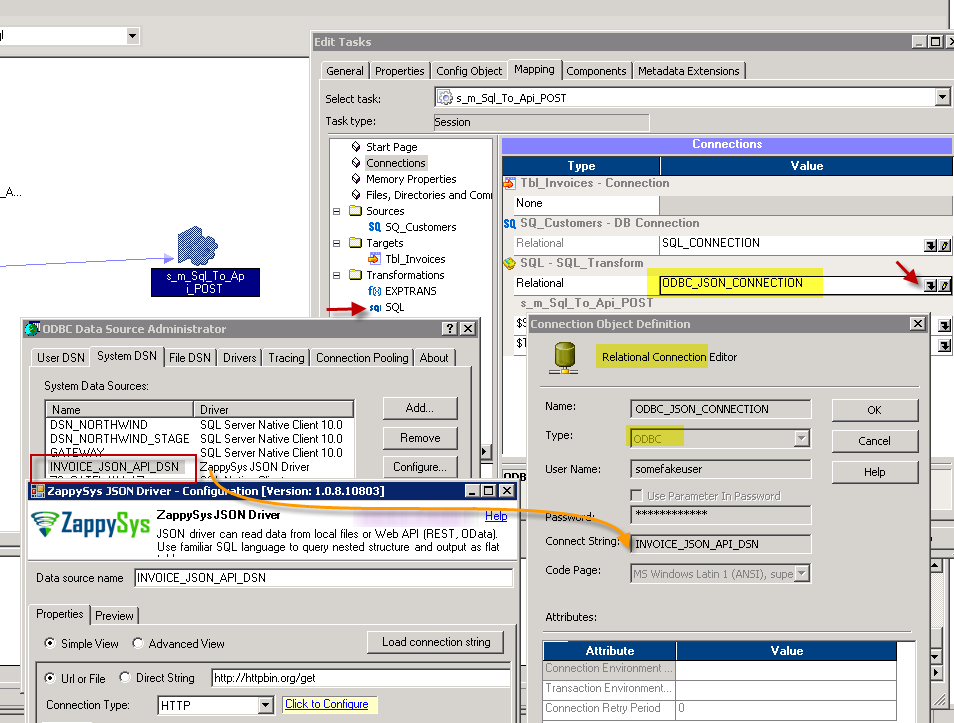

- Create new Workflow and Session using this Mapping

- Go to Session properties and on the Mapping Tab make sure to change COnnection Type for SQL Transformation to Relational Connection.

- In our example we changed Target to File Writer but you can save SQL Transform output to some relational table too.

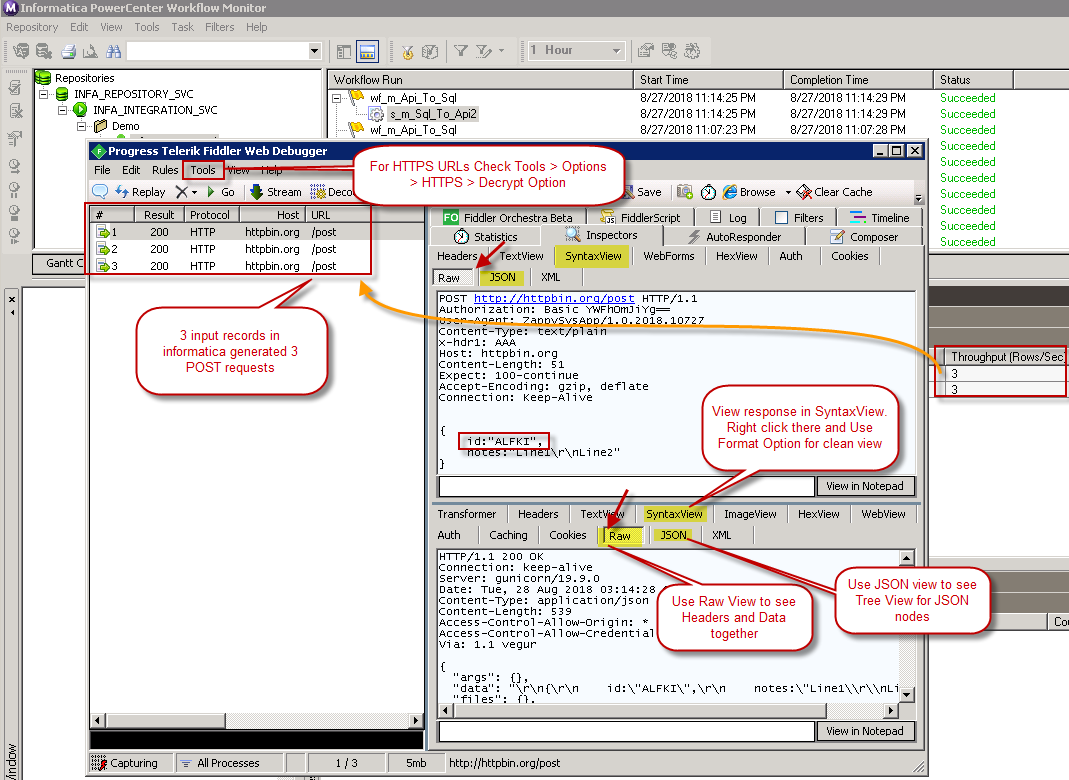

- Now you can execute Workflow and check your session log for any error. You can also debug web requests in Fiddler (Must be installed on Server where actual Job is running).

Upload File to API (Read Body from Local File)

Now let’s look at scenario to POST Body content from File (i.e. Upload File to API). Below simple query will Upload file content to API server (POST request where Body is coming from file)

|

1 2 3 4 5 6 7 8 9 10 |

SELECT url,origin FROM $ WITH ( META='c:\\temp\\meta.txt' ,METHOD='POST' ,HEADER='Content-Type:text/plain||x-hdr1:AAA' ,SRC='~P_URL~' ,BODY='@~P_FilePath~' ,IsMultiPart='True' ) |

Notice how we have used IsMultiPart = ‘True’ and used @ before FilePath in Body. Our File Path is coming from String Substitution (i.e. Input Port ~P_FilePath~)

Debugging REST / SOAP API using Fiddler

Check this article on how to debug REST / SOAP API calls using fiddler. You can also enable Log to Debug Mode on Advanced Tab of ODBC DSN UI.

Conclusion

In this post we saw how to call API POST requests in Informatica and send data from relational system to Web API (Create / Update / Delete Records using JSON / XML API calls). You can explore many other API related scenarios using ZappySys ODBC Drives for JSON / XML / CSV. Click here to try ZappySys ODBC Drivers for FREE and check out how easy it is to read / write JSON / XML API in Informatica or any other ODBC Compliant Apps (e.g Power BI, SSIS, Qlik).