Apache Spark Connector for Talend Studio

Apache Spark Connector lets you connect to Apache Spark, a unified engine for large-scale data analytics.

In this article you will learn how to quickly and efficiently integrate Apache Spark data in Talend Studio without coding. We will use the high-performance Apache Spark Connector to easily connect to Apache Spark and then access the data inside Talend Studio.

Let's follow the steps below to see how we can accomplish that!

Apache Spark Connector for Talend Studio is based on the ZappySys JDBC Bridge Driver, which is part of ODBC PowerPack, a collection of high-performance ODBC drivers that enable you to integrate data in SQL Server, SSIS, a programming language, or any other ODBC-compatible application. ODBC PowerPack supports various file formats, sources and destinations, including REST/SOAP API, SFTP/FTP, storage services, and plain files, to mention a few.

Prerequisites

Before we begin, make sure you meet the following prerequisite: a Java Runtime Environment (JRE) or Java Development Kit (JDK) must be installed on your system.

- Minimum required version: Java 8

- Recommended Java version: Java 21

If your JDBC driver targets a newer Java version (e.g., 11, 17, or 21), install that version or a newer one.
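To see which Java version you actually have, you can run java -version in a command prompt. The sketch below does the same check from Java itself: it reads the standard java.specification.version system property (which reports "1.8" on Java 8 and "11", "17", "21" on newer releases) and compares it with the minimum and recommended versions above. The class name JavaVersionCheck is hypothetical, and the result only describes the java executable you run it with, which is not necessarily the one the JDBC Bridge uses.

```java
// Minimal sketch: report whether the running JVM meets the minimum (8)
// and recommended (21) Java versions mentioned in this article.
final class JavaVersionCheck {

    static final int MINIMUM = 8;
    static final int RECOMMENDED = 21;

    // Converts a java.specification.version value to a major version:
    // Java 8 reports "1.8", Java 9 and newer report "9", "11", "17", "21", ...
    static int majorVersion(String specVersion) {
        String v = specVersion.trim();
        if (v.startsWith("1.")) {
            v = v.substring(2);
        }
        int dot = v.indexOf('.');
        if (dot >= 0) {
            v = v.substring(0, dot);
        }
        return Integer.parseInt(v);
    }

    public static void main(String[] args) {
        int major = majorVersion(System.getProperty("java.specification.version"));
        System.out.println("Detected Java " + major);
        if (major < MINIMUM) {
            System.out.println("Too old: install Java " + MINIMUM + " or newer.");
        } else if (major < RECOMMENDED) {
            System.out.println("Supported, but Java " + RECOMMENDED + " is recommended.");
        } else {
            System.out.println("Meets the recommended version.");
        }
    }
}
```

Compile and run it with the same Java installation you plan to use, e.g. javac JavaVersionCheck.java followed by java JavaVersionCheck.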

Download Apache Spark JDBC driver

To connect to Apache Spark in Talend Studio, you will have to download a JDBC driver for it, which we will use in later steps. Let's perform these small steps right away:

- Visit MVN Repository.

- Download the JDBC driver and save it locally, e.g., to D:\Drivers\JDBC\hive-jdbc-standalone.jar.

- Make sure to download the standalone version of the Apache Hive JDBC driver (commonly used to connect to Spark) to avoid Java library dependency errors, e.g., hive-jdbc-4.0.1-standalone.jar.

- Done! That was easy, wasn't it? Let's proceed to the next step.
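Before moving on, you may want to confirm that the downloaded file really is a Hive JDBC driver. A JAR is a ZIP archive, so the sketch below simply looks for the class file of org.apache.hive.jdbc.HiveDriver, the driver class shipped in Apache Hive JDBC jars. The class name DriverJarCheck is hypothetical, and the default path is just the example path used above.

```java
import java.io.File;
import java.io.IOException;
import java.util.zip.ZipFile;

// Sketch: check that a downloaded JAR contains the Hive JDBC driver class.
final class DriverJarCheck {

    // Driver class shipped in Apache Hive JDBC jars.
    static final String HIVE_DRIVER = "org.apache.hive.jdbc.HiveDriver";

    // "org.apache.hive.jdbc.HiveDriver" -> "org/apache/hive/jdbc/HiveDriver.class"
    static String toEntryName(String className) {
        return className.replace('.', '/') + ".class";
    }

    // A JAR is a ZIP archive, so a class is present if its entry exists.
    static boolean containsClass(File jar, String className) throws IOException {
        try (ZipFile zip = new ZipFile(jar)) {
            return zip.getEntry(toEntryName(className)) != null;
        }
    }

    public static void main(String[] args) throws IOException {
        // Example path from this article; pass another path as the first argument.
        File jar = new File(args.length > 0 ? args[0] : "D:\\Drivers\\JDBC\\hive-jdbc-standalone.jar");
        if (!jar.isFile()) {
            System.out.println("File not found: " + jar);
            return;
        }
        System.out.println(containsClass(jar, HIVE_DRIVER)
                ? "Found " + HIVE_DRIVER
                : "Driver class not found; is this the right JAR?");
    }
}
```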

Create Data Source in Data Gateway based on ZappySys JDBC Bridge Driver

In this section we will create a data source for Apache Spark in Data Gateway. Let's follow these steps to accomplish that:

-

Download and install ODBC PowerPack.

-

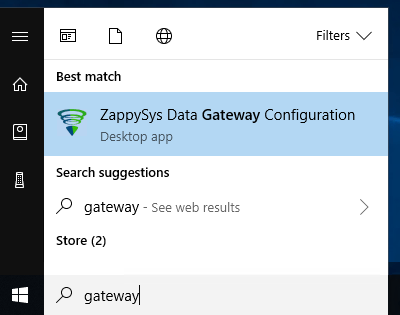

Search for

gatewayin Windows Start Menu and open ZappySys Data Gateway Configuration:

-

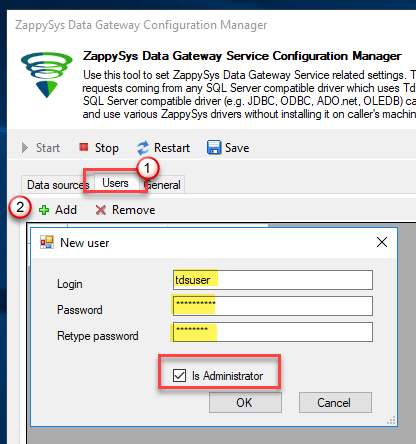

Go to Users tab and follow these steps to add a Data Gateway user:

- Click Add button

-

In Login field enter username, e.g.,

john - Then enter a Password

- Check Is Administrator checkbox

- Click OK to save

-

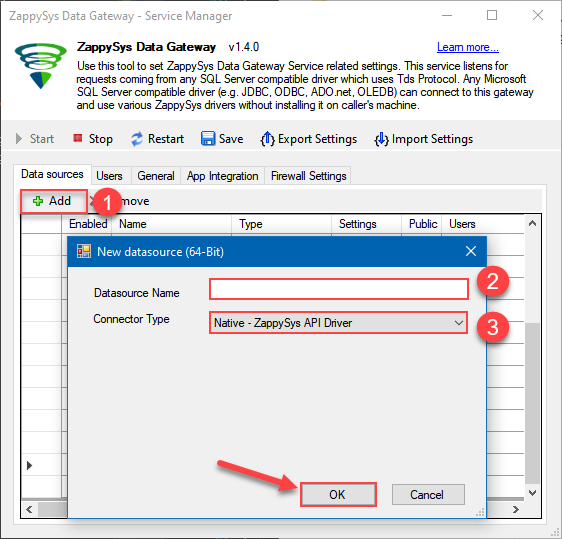

Now we are ready to add a data source:

- Click Add button

- Give Datasource a name (have it handy for later)

- Then select Native - ZappySys JDBC Bridge Driver

- Finally, click OK

ApacheSparkDSNZappySys JDBC Bridge Driver

-

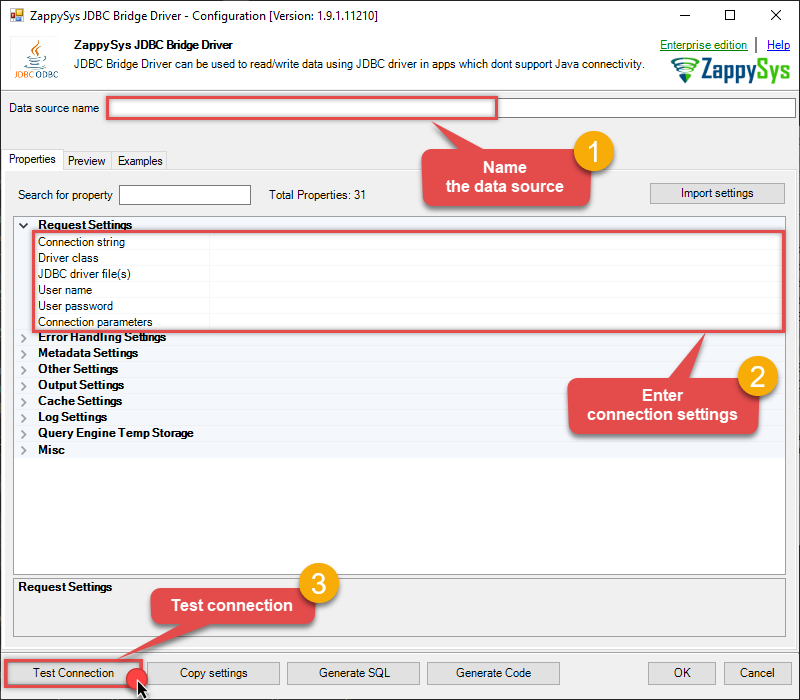

Now, we need to configure the JDBC connection in the new ODBC data source. Simply enter the Connection string, credentials, configure other settings, and then click Test Connection button to test the connection:

ApacheSparkDSNjdbc:hive2://spark-thrift-server-host:10000D:\Drivers\JDBC\hive-jdbc-standalone.jar[]

Use these values when setting parameters:

- Connection string: jdbc:hive2://spark-thrift-server-host:10000

- JDBC driver file(s): D:\Drivers\JDBC\hive-jdbc-standalone.jar

- Connection parameters: []
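Conceptually, a connection test loads the driver class from the JDBC driver file(s) and opens a connection with the connection string and credentials. The plain-JDBC sketch below approximates that outside Data Gateway; it is not how the ZappySys JDBC Bridge Driver is implemented. It assumes the standalone Hive driver (driver class org.apache.hive.jdbc.HiveDriver) and uses placeholder credentials; the class and helper names are hypothetical. It calls Driver.connect on a driver loaded through a URLClassLoader instead of using DriverManager.getConnection, because DriverManager only hands out drivers visible to the calling code's class loader.

```java
import java.io.File;
import java.net.URL;
import java.net.URLClassLoader;
import java.sql.Connection;
import java.sql.Driver;
import java.sql.SQLException;
import java.util.Properties;

// Sketch of a connection test over plain JDBC (not the ZappySys implementation).
final class SparkConnectionTest {

    static final String DRIVER_CLASS = "org.apache.hive.jdbc.HiveDriver";

    // Builds a HiveServer2-style URL, e.g. jdbc:hive2://host:10000 or jdbc:hive2://host:10000/default
    static String hive2Url(String host, int port, String database) {
        String url = "jdbc:hive2://" + host + ":" + port;
        return (database == null || database.isEmpty()) ? url : url + "/" + database;
    }

    // Loads the driver class from the JAR file and opens a connection.
    // The class loader is intentionally left open: the connection keeps using its classes.
    static Connection connect(File driverJar, String url, Properties props) throws Exception {
        URLClassLoader loader = new URLClassLoader(new URL[] {driverJar.toURI().toURL()});
        Driver driver = (Driver) Class.forName(DRIVER_CLASS, true, loader)
                .getDeclaredConstructor()
                .newInstance();
        Connection conn = driver.connect(url, props); // null means the URL was not accepted
        if (conn == null) {
            throw new SQLException("Driver does not accept URL: " + url);
        }
        return conn;
    }

    public static void main(String[] args) throws Exception {
        File jar = new File("D:\\Drivers\\JDBC\\hive-jdbc-standalone.jar");
        String url = hive2Url("spark-thrift-server-host", 10000, null);
        Properties props = new Properties();
        props.setProperty("user", "your-user");         // placeholder credentials
        props.setProperty("password", "your-password");
        try (Connection conn = connect(jar, url, props)) {
            System.out.println("Connection test successful: "
                    + conn.getMetaData().getDatabaseProductName());
        }
    }
}
```

Any extra driver properties would go into the same Properties object; the sketch leaves them out, matching the empty connection parameters above.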


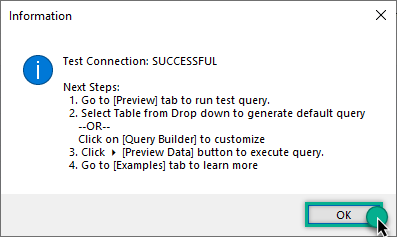

You should see a message saying that the connection test is successful:

Otherwise, if you get an error, check out our Community for troubleshooting tips.

-

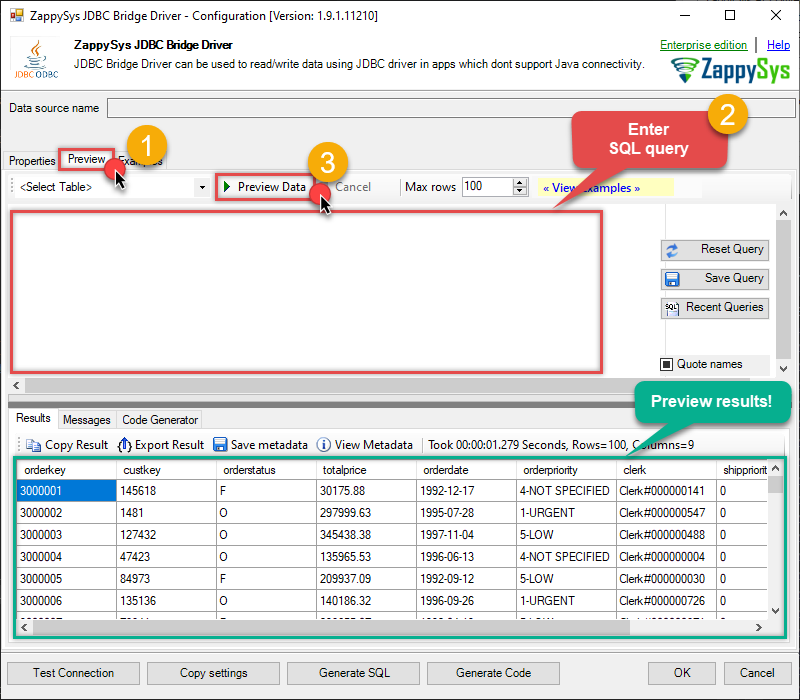

We are at the point where we can preview a SQL query. For more SQL query examples visit JDBC Bridge documentation:

ApacheSparkDSN-- Basic SELECT with a WHERE clause SELECT id, name, salary FROM employees WHERE department = 'Sales';

-- Basic SELECT with a WHERE clause SELECT id, name, salary FROM employees WHERE department = 'Sales';You can also click on the <Select Table> dropdown and select a table from the list.The ZappySys JDBC Bridge Driver acts as a transparent intermediary, passing SQL queries directly to the JDBC driver, which then handles the query execution. This means the Bridge Driver simply relays the SQL query without altering it.
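Because the Bridge Driver passes SQL through unchanged, the same statement can also be tried directly over JDBC. The sketch below runs the example query as a PreparedStatement on an already-open java.sql.Connection, binding 'Sales' as a parameter instead of embedding it, and shows how a table preview query might be built. The employees table, the class name, and the previewSql helper are hypothetical; the backtick quoting follows Spark SQL identifier syntax.

```java
import java.sql.Connection;
import java.sql.PreparedStatement;
import java.sql.ResultSet;
import java.sql.SQLException;

// Sketch: run the example query over plain JDBC and build a table preview query.
final class SparkQueryExample {

    // The example query, with the department bound as a parameter.
    static final String SALES_QUERY =
            "SELECT id, name, salary FROM employees WHERE department = ?";

    // Builds "SELECT * FROM `db`.`table` LIMIT n", quoting each dot-separated part
    // with backticks as Spark SQL does for identifiers.
    static String previewSql(String qualifiedTable, int limit) {
        if (qualifiedTable.indexOf('`') >= 0) {
            throw new IllegalArgumentException("Backticks in names are not handled by this sketch");
        }
        StringBuilder sb = new StringBuilder("SELECT * FROM ");
        String[] parts = qualifiedTable.split("\\.");
        for (int i = 0; i < parts.length; i++) {
            if (i > 0) {
                sb.append('.');
            }
            sb.append('`').append(parts[i]).append('`');
        }
        return sb.append(" LIMIT ").append(limit).toString();
    }

    // Runs SALES_QUERY on an open connection and prints each row tab-separated.
    static void printEmployees(Connection conn, String department) throws SQLException {
        try (PreparedStatement ps = conn.prepareStatement(SALES_QUERY)) {
            ps.setString(1, department);
            try (ResultSet rs = ps.executeQuery()) {
                while (rs.next()) {
                    System.out.println(rs.getString("id") + "\t" + rs.getString("name")
                            + "\t" + rs.getString("salary"));
                }
            }
        }
    }

    public static void main(String[] args) {
        System.out.println(previewSql("employees", 10));
    }
}
```

Calling printEmployees(conn, "Sales") should return the same rows as the preview above, provided such a table exists.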

Some JDBC drivers don't support INSERT/UPDATE/DELETE statements, so you may get an error saying "action is not supported" or something similar. Please be aware that this is not a limitation of the ZappySys JDBC Bridge Driver, but of the specific JDBC driver you are using.
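If you run statements from your own code, one option is to flag write statements up front and catch java.sql.SQLFeatureNotSupportedException, the standard JDBC exception for unsupported features. This is a sketch with a hypothetical class name: the keyword check is deliberately naive (it looks only at the first keyword after blank lines and leading -- comments), and some drivers report unsupported operations with a plain SQLException or a driver-specific message instead.

```java
import java.sql.Connection;
import java.sql.SQLException;
import java.sql.SQLFeatureNotSupportedException;
import java.sql.Statement;
import java.util.Arrays;
import java.util.HashSet;
import java.util.Locale;
import java.util.Set;

// Sketch: warn about write statements and report unsupported operations gracefully.
final class WriteStatementGuard {

    static final Set<String> WRITE_KEYWORDS =
            new HashSet<>(Arrays.asList("INSERT", "UPDATE", "DELETE", "MERGE"));

    // Naive check: true if the first keyword (after blank lines and
    // "--" comment lines) is a write keyword.
    static boolean isWriteStatement(String sql) {
        for (String line : sql.split("\\R")) {
            String trimmed = line.trim();
            if (trimmed.isEmpty() || trimmed.startsWith("--")) {
                continue;
            }
            String first = trimmed.split("\\s+", 2)[0].toUpperCase(Locale.ROOT);
            return WRITE_KEYWORDS.contains(first);
        }
        return false;
    }

    static void execute(Connection conn, String sql) throws SQLException {
        if (isWriteStatement(sql)) {
            System.out.println("Note: write statement; the JDBC driver may not support it.");
        }
        try (Statement st = conn.createStatement()) {
            boolean hasResultSet = st.execute(sql);
            System.out.println(hasResultSet
                    ? "Statement returned a result set."
                    : "Update count: " + st.getUpdateCount());
        } catch (SQLFeatureNotSupportedException e) {
            // Standard JDBC signal for unsupported features; other drivers may throw a plain SQLException.
            System.out.println("Not supported by the JDBC driver: " + e.getMessage());
        }
    }

    public static void main(String[] args) {
        System.out.println(isWriteStatement("INSERT INTO employees VALUES (1, 'Ann', 100)"));
    }
}
```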

Click OK to finish creating the data source.

-

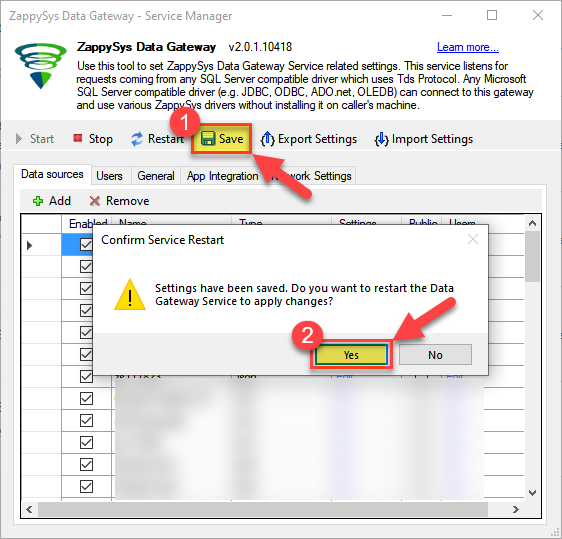

Very important step. Now, after creating or modifying the data source make sure you:

- Click the Save button to persist your changes.

- Hit Yes, once asked if you want to restart the Data Gateway service.

This will ensure all changes are properly applied:

Skipping this step may result in the new settings not taking effect and, therefore you will not be able to connect to the data source.


Read Apache Spark data in Talend Studio

To read Apache Spark data in Talend Studio, we'll need to complete several steps. Let's get through them all right away!

Create connection for input

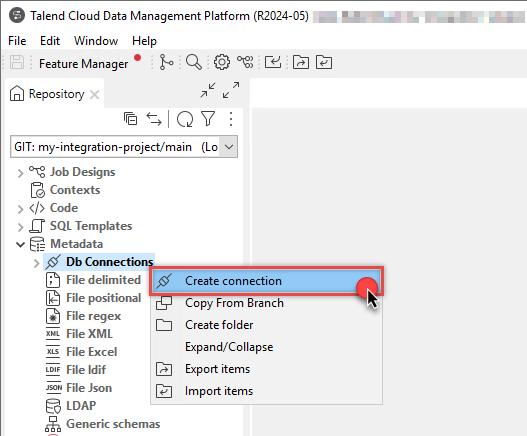

- First of all, open Talend Studio

-

Create a new connection:

-

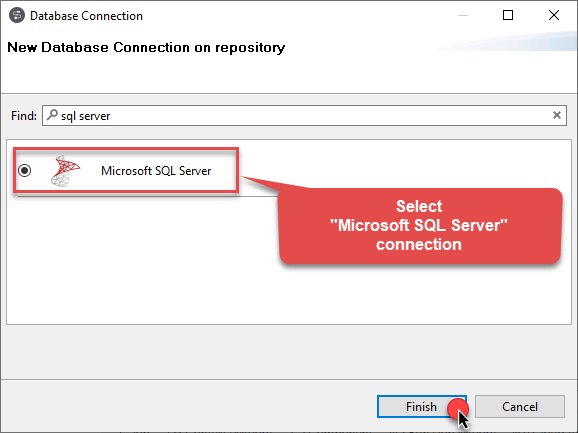

Select Microsoft SQL Server connection:

-

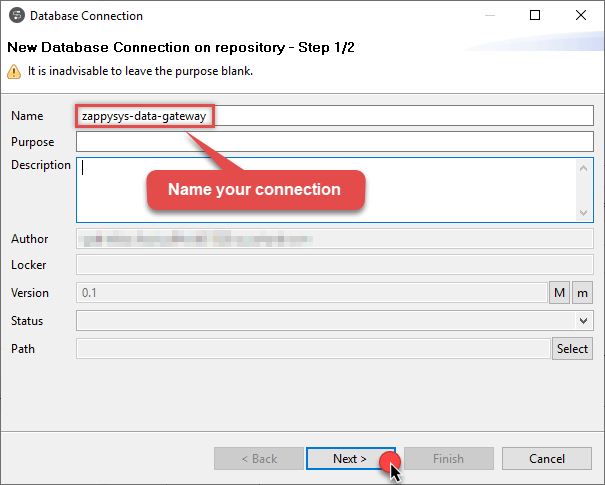

Name your connection:

-

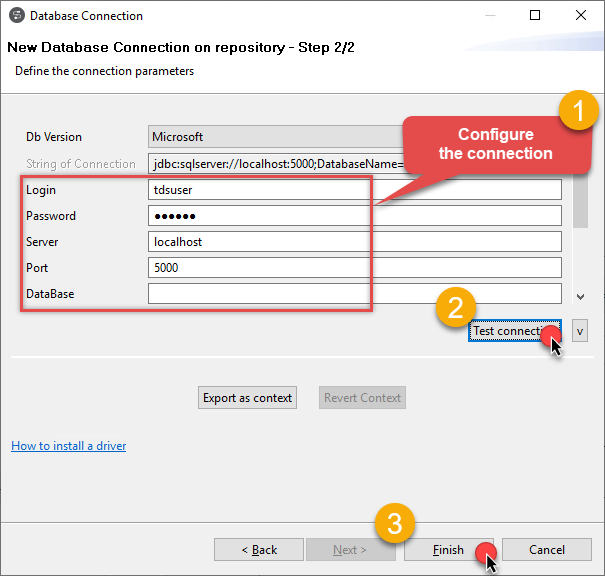

Fill-in connection parameters and then click Test connection:

ApacheSparkDSN
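Since Talend reaches Data Gateway through a SQL Server connection, the parameters above amount to an ordinary SQL Server JDBC URL. The sketch below builds one in the format used by the Microsoft JDBC Driver for SQL Server (jdbc:sqlserver://host:port;databaseName=...). Port 5000 is an assumption, so use the port your Data Gateway actually listens on. The encrypt=false setting is added because recent versions of the Microsoft driver encrypt by default. Talend may also use a different SQL Server driver (e.g., jTDS) with its own URL format, and the class name is hypothetical.

```java
// Sketch: build a Microsoft JDBC Driver for SQL Server URL that points at ZappySys Data Gateway.
final class GatewayJdbcUrl {

    // Assumed port; replace with the port your Data Gateway listens on.
    static final int ASSUMED_GATEWAY_PORT = 5000;

    // databaseName carries the Data Gateway data source name (e.g. ApacheSparkDSN).
    // encrypt=false: recent Microsoft driver versions encrypt by default, which can
    // fail if the gateway endpoint is not set up for TLS.
    static String sqlServerUrl(String host, int port, String dataSourceName) {
        return "jdbc:sqlserver://" + host + ":" + port
                + ";databaseName=" + dataSourceName
                + ";encrypt=false";
    }

    public static void main(String[] args) {
        System.out.println(sqlServerUrl("localhost", ASSUMED_GATEWAY_PORT, "ApacheSparkDSN"));
    }
}
```

With the Microsoft driver on the classpath, such a URL could be passed to DriverManager.getConnection(url, "john", password) using the Data Gateway user created earlier.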

-

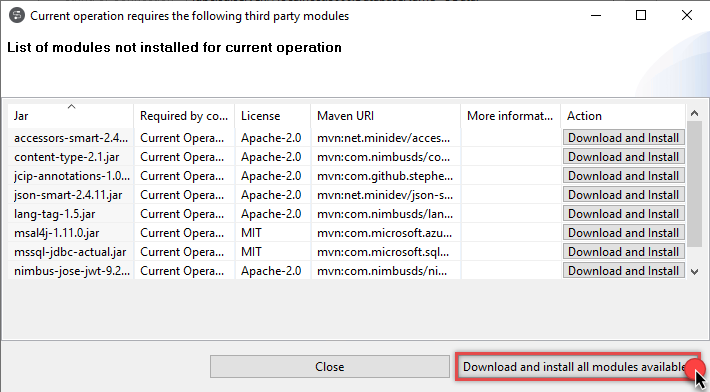

If the List of modules not installed for this operation window shows up, then download and install all of them:

Review and accept all additional module license agreements during the process

Review and accept all additional module license agreements during the process -

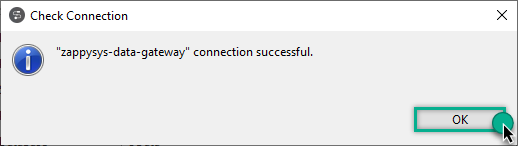

Finally, you should see a successful connection test result at the end:

Add input

-

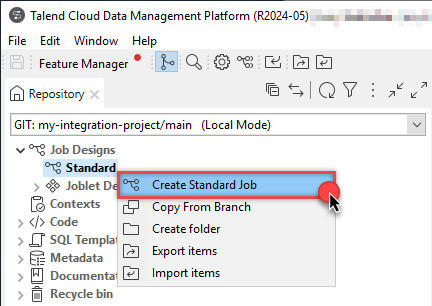

Once we have a connection to ZappySys Data Gateway created, we can proceed by creating a job:

-

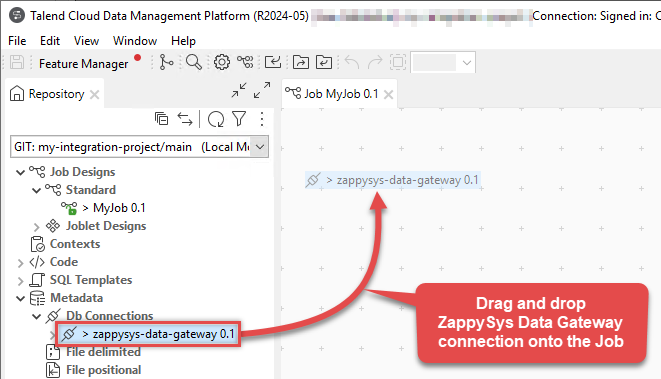

Simply drag and drop ZappySys Data Gateway connection onto the job:

-

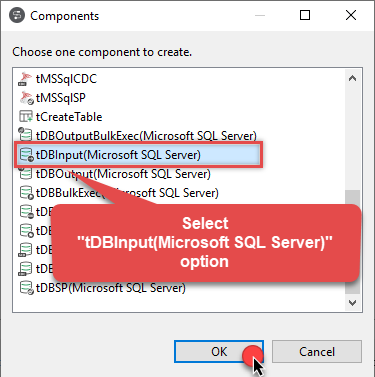

Then create an input based on ZappySys Data Gateway connection:

-

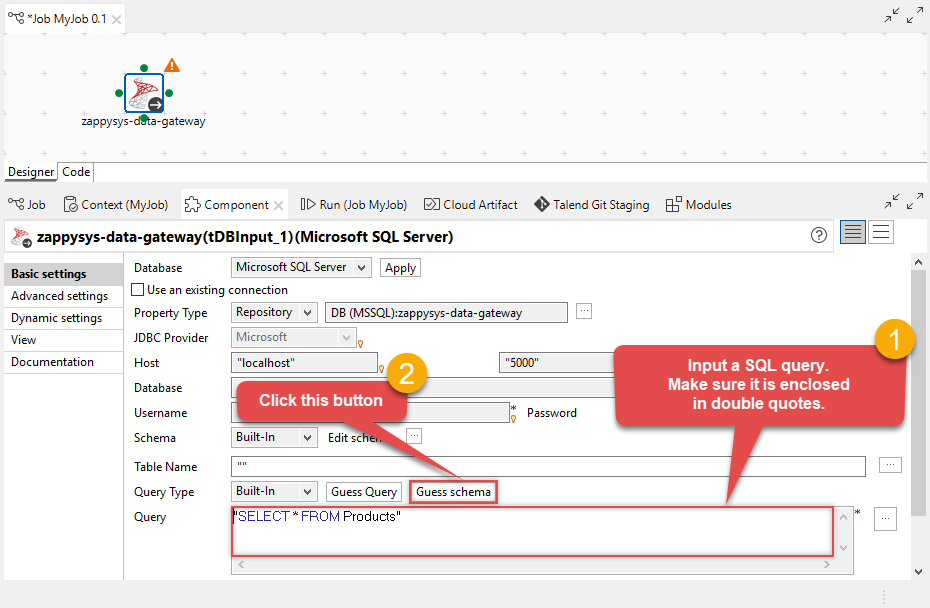

Continue by configuring a SQL query and click Guess schema button:

-

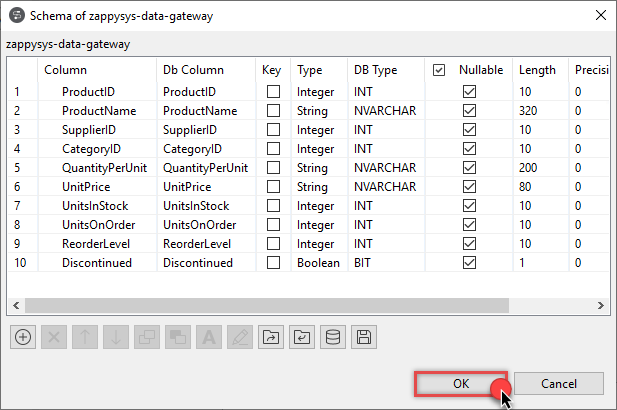

Finish by configuring the schema, for example:
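Guess schema works from the column metadata of the query result. As a rough illustration of that idea (not Talend's actual implementation), the sketch below reads java.sql.ResultSetMetaData and maps each JDBC type to a Talend-style type ID such as id_String. The mapping table and the class name are assumptions, so always review the schema Talend proposes.

```java
import java.sql.ResultSetMetaData;
import java.sql.SQLException;
import java.sql.Types;
import java.util.ArrayList;
import java.util.List;

// Illustrative sketch of schema guessing from JDBC metadata (not Talend's actual code).
final class SchemaGuess {

    // Maps a java.sql.Types constant to a Talend-style type ID (assumed mapping).
    static String talendType(int sqlType) {
        switch (sqlType) {
            case Types.CHAR:
            case Types.VARCHAR:
            case Types.LONGVARCHAR:
            case Types.NCHAR:
            case Types.NVARCHAR:
                return "id_String";
            case Types.TINYINT:
            case Types.SMALLINT:
            case Types.INTEGER:
                return "id_Integer";
            case Types.BIGINT:
                return "id_Long";
            case Types.REAL:
                return "id_Float";
            case Types.FLOAT:
            case Types.DOUBLE:
                return "id_Double";
            case Types.DECIMAL:
            case Types.NUMERIC:
                return "id_BigDecimal";
            case Types.BIT:
            case Types.BOOLEAN:
                return "id_Boolean";
            case Types.DATE:
            case Types.TIME:
            case Types.TIMESTAMP:
                return "id_Date";
            default:
                return "id_Object";
        }
    }

    // Lists "column : type" pairs for every column of a query result.
    static List<String> describe(ResultSetMetaData md) throws SQLException {
        List<String> columns = new ArrayList<>();
        for (int i = 1; i <= md.getColumnCount(); i++) {
            columns.add(md.getColumnLabel(i) + " : " + talendType(md.getColumnType(i)));
        }
        return columns;
    }

    public static void main(String[] args) {
        System.out.println(talendType(Types.VARCHAR));
    }
}
```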

Add output

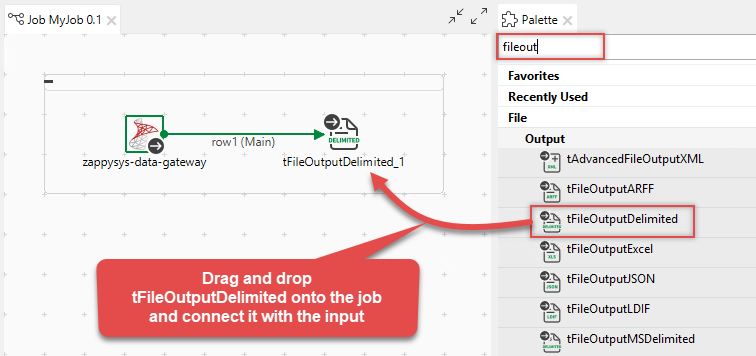

We are ready to add an output. From the Palette, drag and drop a tFileOutputDelimited component and connect it to the input:
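In its basic form, tFileOutputDelimited writes each row as the field values joined by a field separator, followed by a row separator. The sketch below reproduces that behavior in plain Java with a semicolon separator, which we believe is the component's default (check its Basic settings). The file name, sample rows, and class name are hypothetical, and unlike the component's CSV options, the sketch does no quoting or escaping.

```java
import java.io.IOException;
import java.io.Writer;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Arrays;
import java.util.List;

// Sketch of basic delimited output: fields joined by a separator, one row per line.
final class DelimitedWriter {

    // Joins fields with the separator; null values become empty fields.
    // No quoting or escaping: values containing the separator would break the format.
    static String toLine(List<String> fields, String separator) {
        StringBuilder sb = new StringBuilder();
        for (int i = 0; i < fields.size(); i++) {
            if (i > 0) {
                sb.append(separator);
            }
            String field = fields.get(i);
            sb.append(field == null ? "" : field);
        }
        return sb.toString();
    }

    static void write(Path file, List<List<String>> rows, String separator) throws IOException {
        try (Writer out = Files.newBufferedWriter(file, StandardCharsets.UTF_8)) {
            for (List<String> row : rows) {
                out.write(toLine(row, separator));
                out.write("\n"); // row separator
            }
        }
    }

    public static void main(String[] args) throws IOException {
        // Hypothetical sample rows; in the Talend job they come from the input component.
        List<List<String>> rows = Arrays.asList(
                Arrays.asList("id", "name", "salary"),
                Arrays.asList("1", "Alice", "5000"));
        write(Paths.get("employees.csv"), rows, ";");
    }
}
```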

Run the job

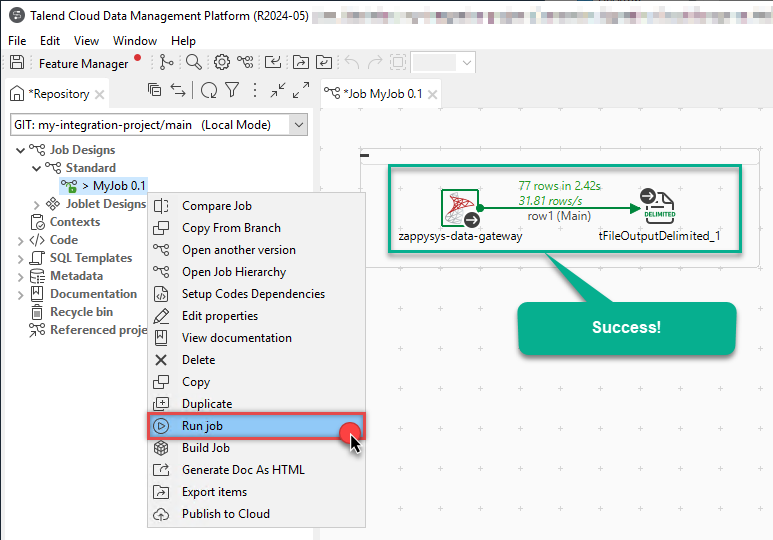

Finally, run the job and integrate your Apache Spark data:

Troubleshooting & resources (JDBC Bridge Driver)

Below are some useful community articles to help you troubleshoot and configure the ZappySys JDBC Bridge Driver:

- How to combine multiple JAR files
Learn how to merge multiple .jar dependencies when your JDBC driver requires more than one file.

- How to fix JBR error: “Data lake is not available / Unable to verify trust for server certificate chain”
Resolve SSL or certificate validation issues encountered during JDBC connections.

- System Exception: “Java is not installed or not accessible”
Fix Java path or environment issues that prevent the JDBC Bridge from launching Java.

- JDBC Bridge Driver disconnect from Java host error
Troubleshoot unexpected disconnection problems between SSIS and the Java process.

- Error: Could not open jvm.cfg while using JDBC Bridge Driver
Resolve JVM configuration path errors during driver initialization.

- How to enable JDBC Bridge Driver logging
Enable detailed driver logging for better visibility during troubleshooting.

- How to pass JDBC connection parameters (not by URL)
Learn how to specify connection properties programmatically instead of embedding them in the JDBC URL.

- How to fix JDBC Bridge error: “No connection could be made because the target machine actively refused it”
Troubleshoot firewall or local port binding issues preventing communication with the Java host.

- How to use JDBC Bridge options (System Property for Java command line, e.g., classpath, proxy)
Configure custom Java options like classpath and proxy using JDBC Bridge system properties.

Conclusion

In this article we showed you how to connect to Apache Spark in Talend Studio and integrate data without any coding, saving you time and effort. It's worth noting that the ZappySys JDBC Bridge Driver allows you to connect not only to Apache Spark, but to any data source that provides a JDBC driver (just use a different JDBC driver and configure it appropriately).

We encourage you to download Apache Spark Connector for Talend Studio and see how easy it is to use it for yourself or your team.

If you have any questions, feel free to contact the ZappySys support team.
