Google BigQuery Connector for SSIS

Read and write Google BigQuery data inside your app without coding, using an easy-to-use, high-performance API Connector

In this article you will learn how to quickly and efficiently integrate Google BigQuery data in SSIS without coding. We will use the high-performance Google BigQuery Connector to connect to Google BigQuery and then access the data inside SSIS.

Let's follow the steps below to see how we can accomplish that!

Google BigQuery Connector for SSIS is based on the ZappySys API Connector Framework, which is part of SSIS PowerPack. SSIS PowerPack is a collection of high-performance SSIS connectors that enable you to integrate data with virtually any data provider supported by SSIS, including SQL Server. It supports various file formats, sources, and destinations, including REST/SOAP APIs, SFTP/FTP, storage services, and plain files, to mention a few (if you are new to SSIS and SSIS PowerPack, find out more on how to use them).

Video Tutorial - Integrate Google BigQuery data in SSIS

This video covers the following topics and more, so please watch carefully. After watching the video, follow the steps outlined in this article:

- How to download and install the required PowerPack for Google BigQuery integration in SSIS

- How to configure the connection for Google BigQuery

- Features of the ZappySys API Source (Authentication / Query Language / Examples / Driver UI)

- How to use Google BigQuery in SSIS

Prerequisites

Before we begin, make sure the following prerequisites are met:

- SSIS designer installed. It is sometimes referred to as BIDS or SSDT (download it from Microsoft).

- Basic knowledge of SSIS package development using Microsoft SQL Server Integration Services.

- SSIS PowerPack is installed (if you are new to SSIS PowerPack, then get started!).

Read data from Google BigQuery in SSIS (Export data)

In this section we will learn how to configure and use the Google BigQuery Connector in the API Source to extract data from Google BigQuery.

-

Begin with opening Visual Studio and Create a New Project.

-

Select Integration Service Project and in new project window set the appropriate name and location for project. And click OK.

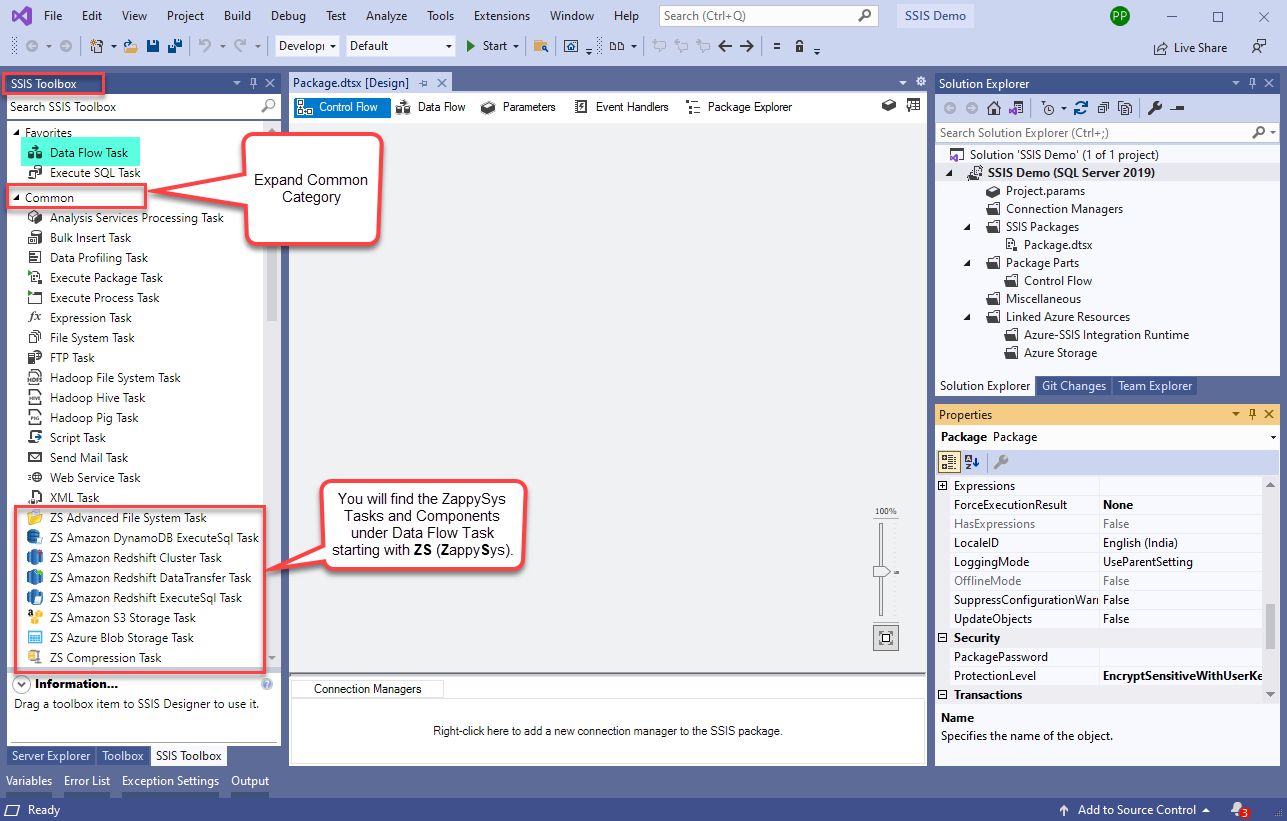

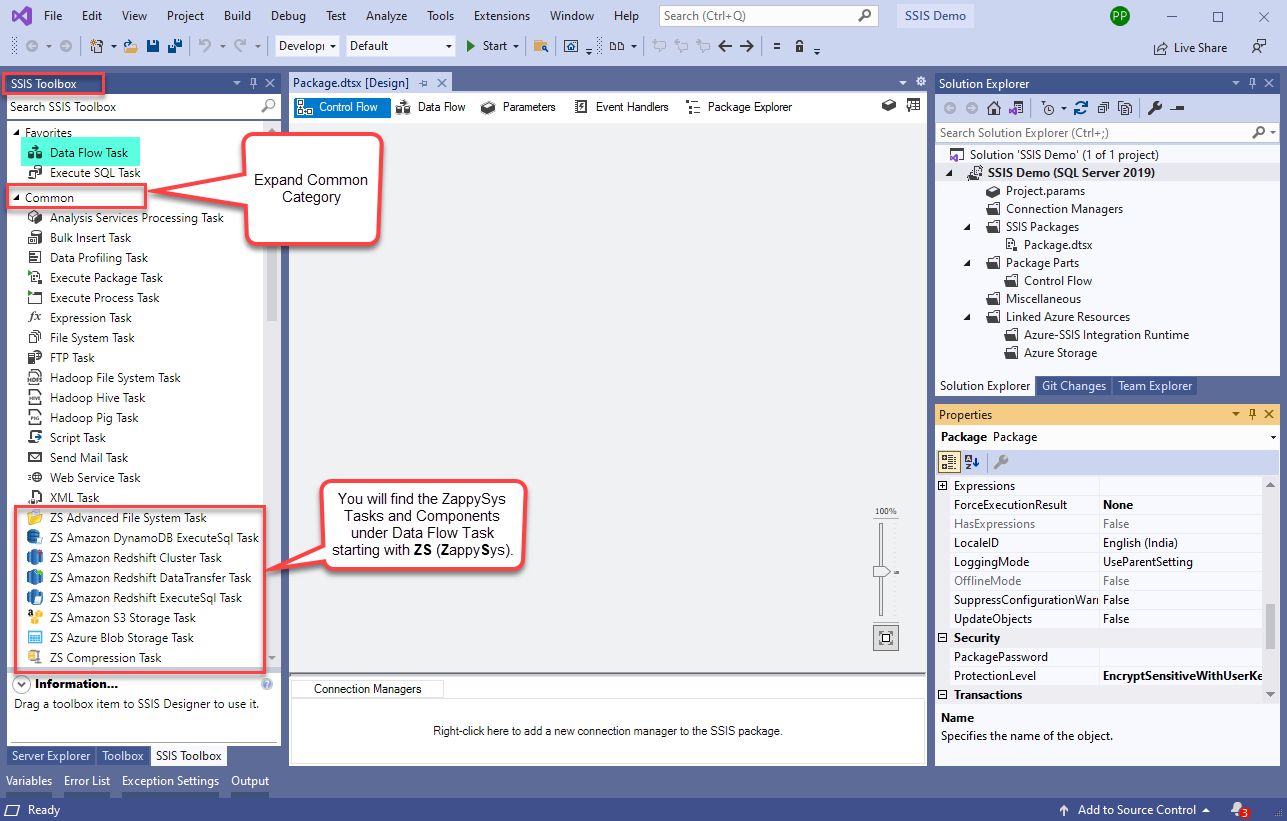

In the new SSIS project screen you will find the following:

- SSIS ToolBox on left side bar

- Solution Explorer and Property Window on right bar

- Control flow, data flow, event Handlers, Package Explorer in tab windows

- Connection Manager Window in the bottom

Note: If you don't see ZappySys SSIS PowerPack Task or Components in SSIS Toolbox, please refer to this help link.

Note: If you don't see ZappySys SSIS PowerPack Task or Components in SSIS Toolbox, please refer to this help link. -

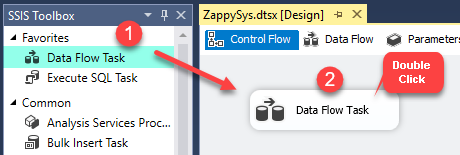

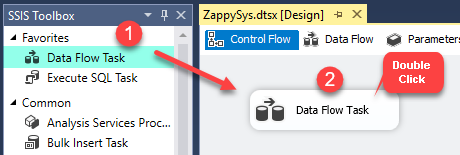

Now, Drag and Drop SSIS Data Flow Task from SSIS Toolbox. Double click on the Data Flow Task to see Data Flow designer.

-

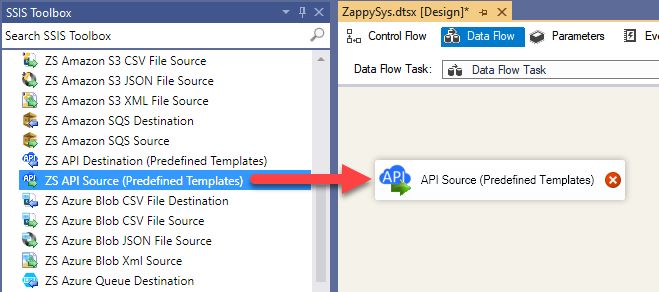

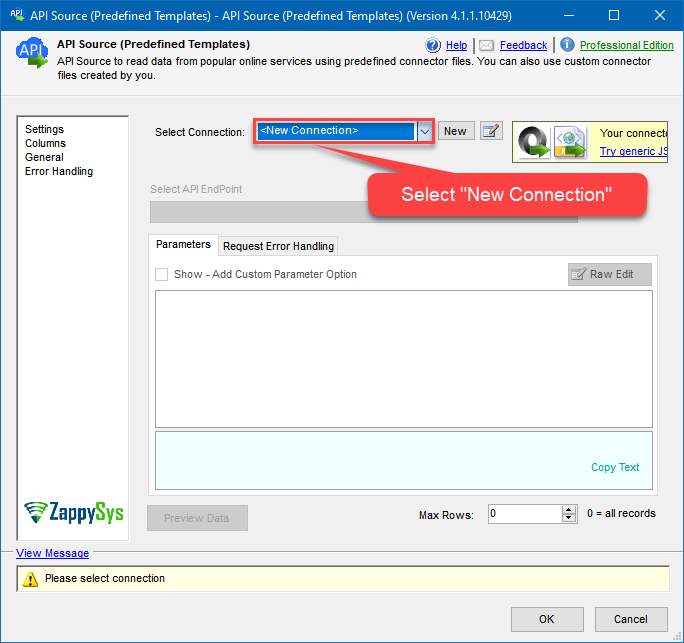

From the SSIS toolbox drag and API Source (Predefined Templates) on the data flow designer surface, and double click on it to edit it:

-

Select New Connection to create a new connection:

-

Use a preinstalled Google BigQuery Connector from Popular Connector List or press Search Online radio button to download Google BigQuery Connector. Once downloaded simply use it in the configuration:

Google BigQuery

-

Now it's time to configure authentication. Firstly, configure authentication settings in Google BigQuery service and then proceed by configuring API Connection Manager. Start by expanding an authentication type:

Google BigQuery authentication: User Account [OAuth]

User accounts represent a developer, administrator, or any other person who interacts with Google APIs and services. User accounts are managed as Google Accounts, either with Google Workspace or Cloud Identity. They can also be user accounts that are managed by a third-party identity provider and federated with Workforce Identity Federation. [API reference]

Follow these steps to create Client Credentials (User Account principal) to authenticate and access the BigQuery API in an SSIS package or ODBC data source:

WARNING: If you are planning to automate processes, we recommend that you use the Service Account authentication method. If you still need to use a User Account, make sure you use a system/generic account (e.g. automation@my-company.com). If you use a personal account tied to a specific employee profile and that employee leaves the company, the token may become invalid and any automated processes using that token will start to fail.

Step-1: Create project

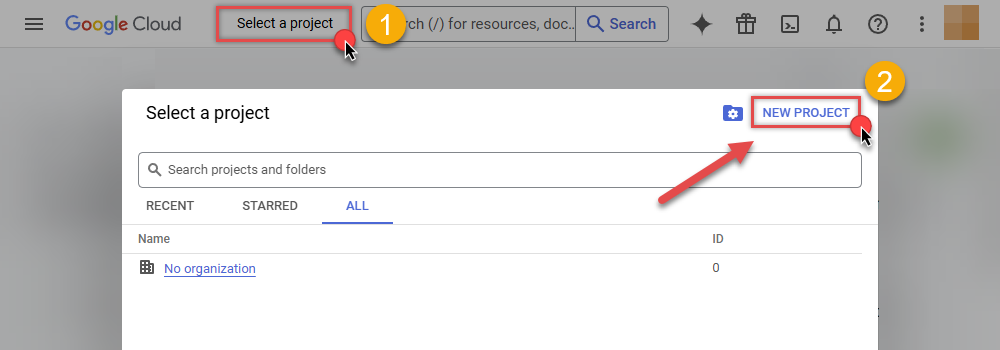

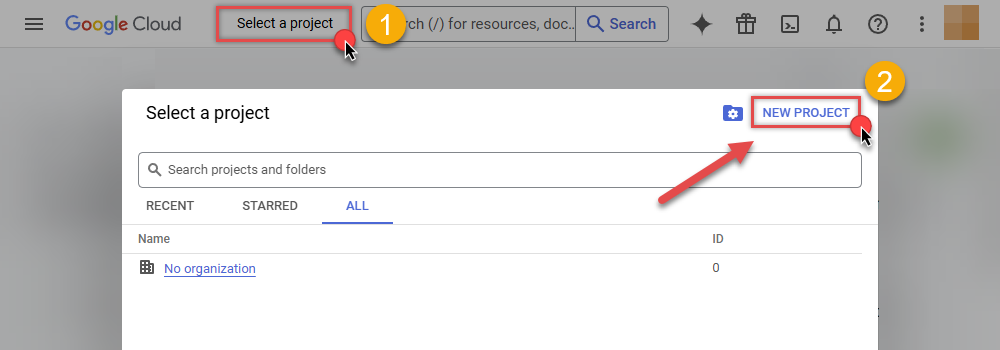

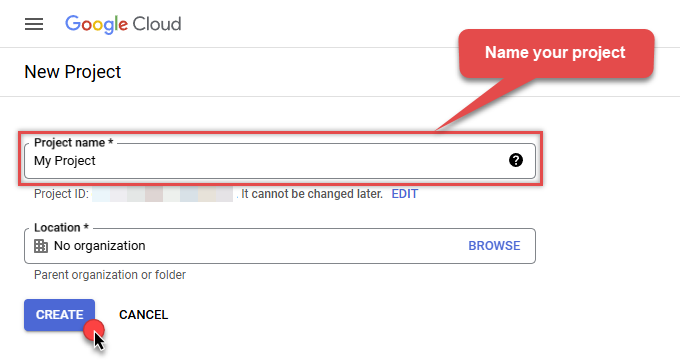

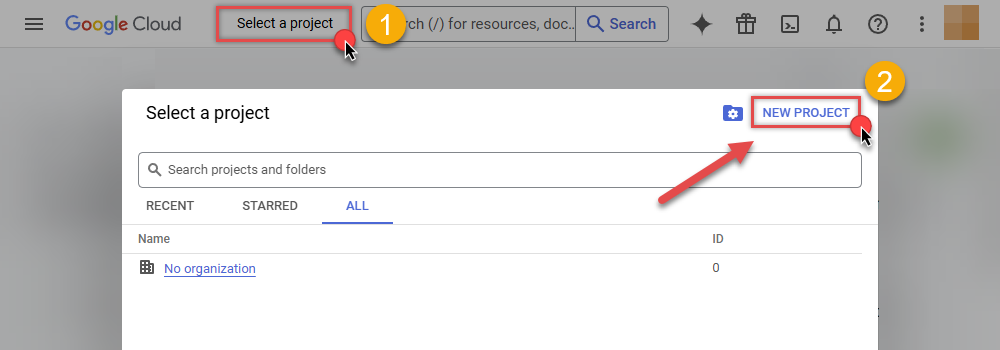

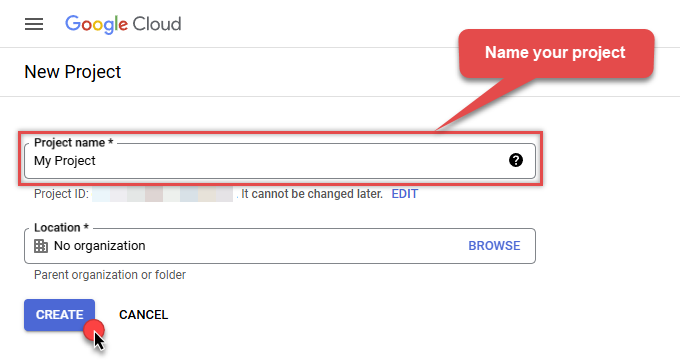

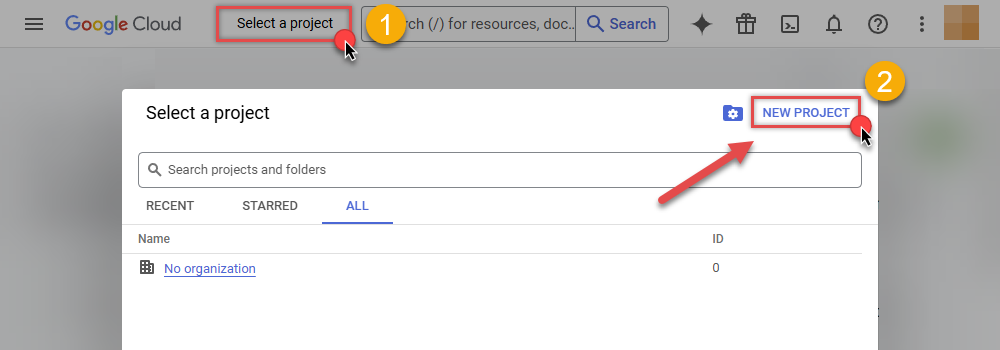

This step is optional, if you already have a project in Google Cloud and can use it. However, if you don't, proceed with these simple steps to create one:

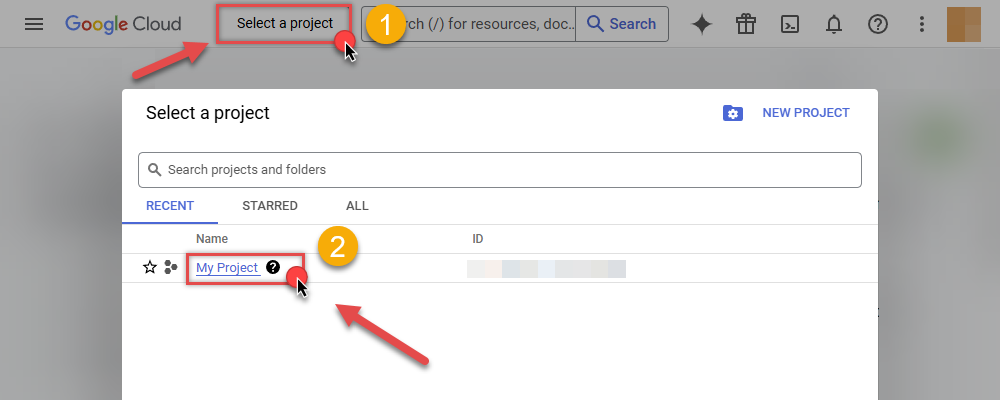

-

First of all, go to Google API Console.

-

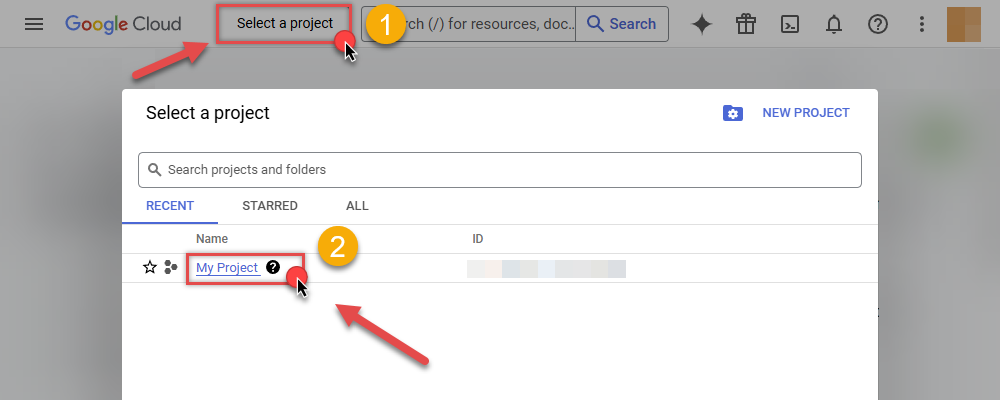

Then click Select a project button and then click NEW PROJECT button:

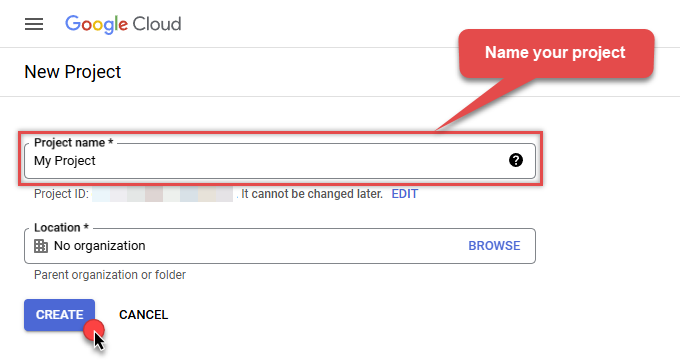

-

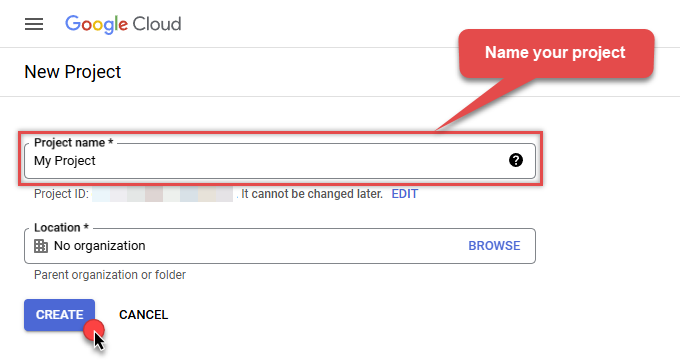

Name your project and click CREATE button:

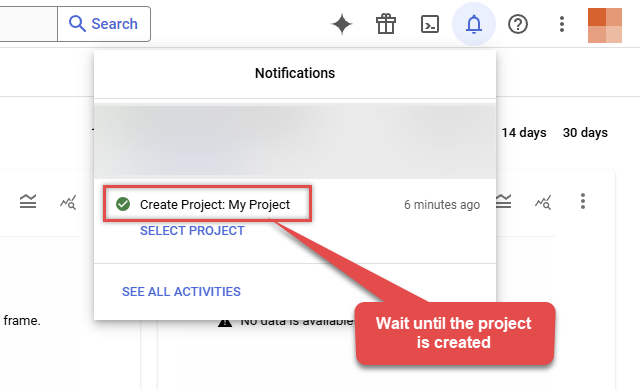

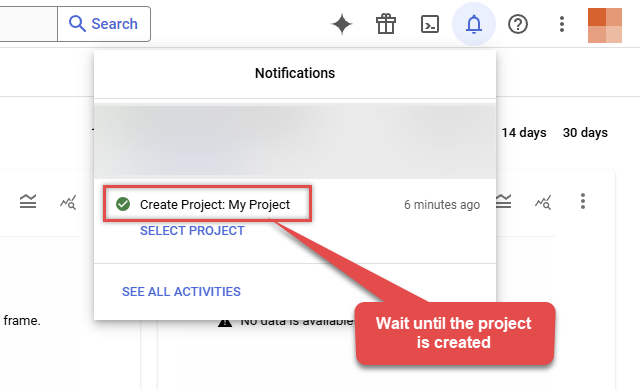

-

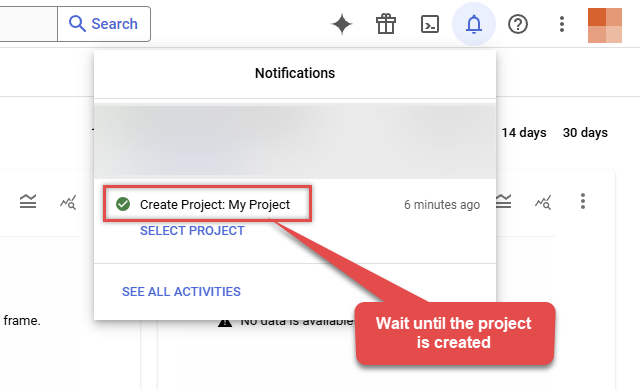

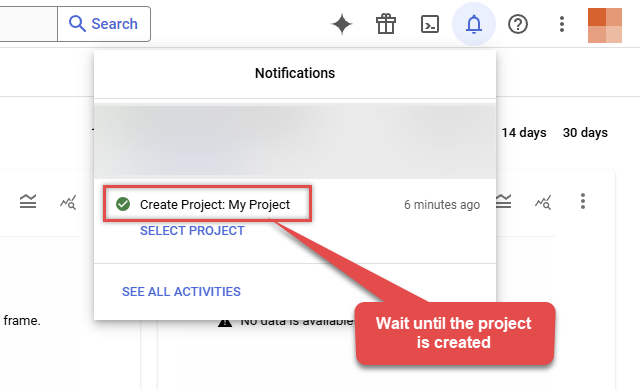

Wait until the project is created:

- Done! Let's proceed to the next step.

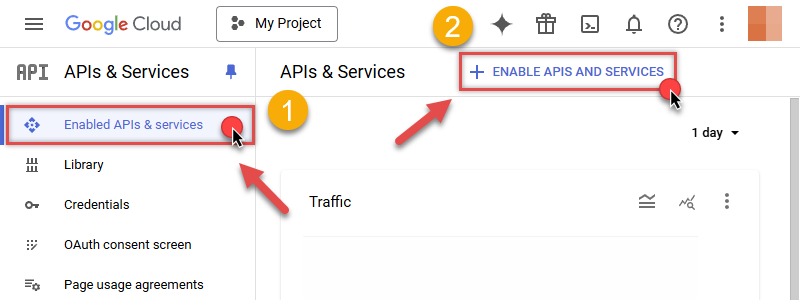

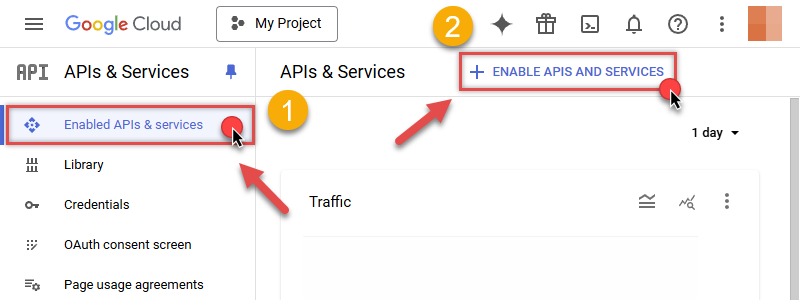

Step-2: Enable Google Cloud APIs

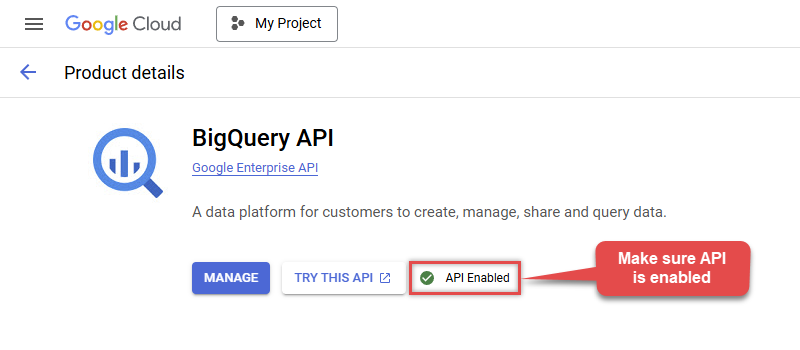

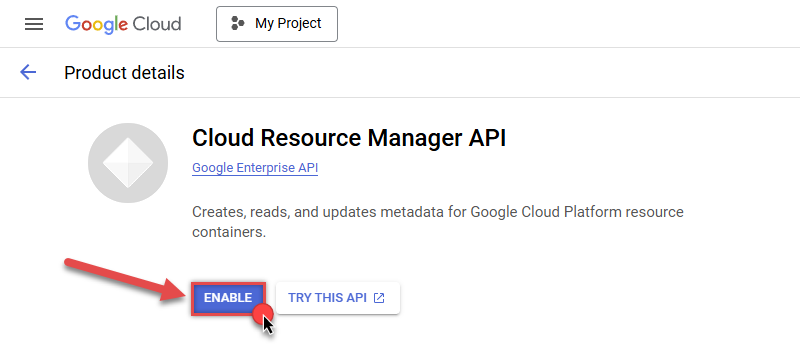

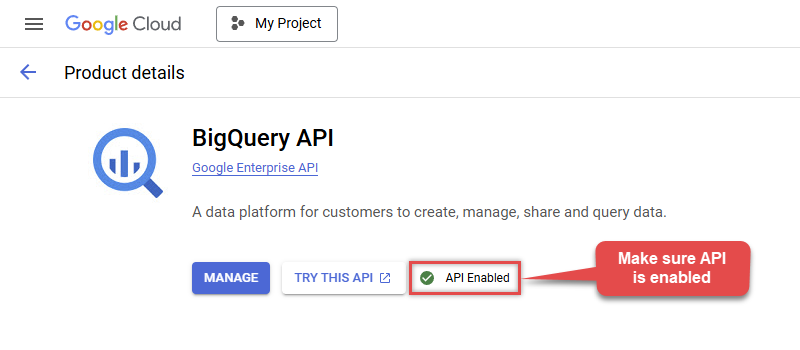

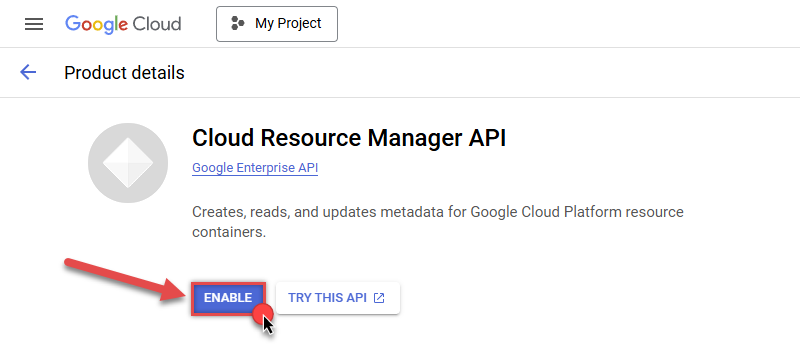

In this step we will enable BigQuery API and Cloud Resource Manager API:

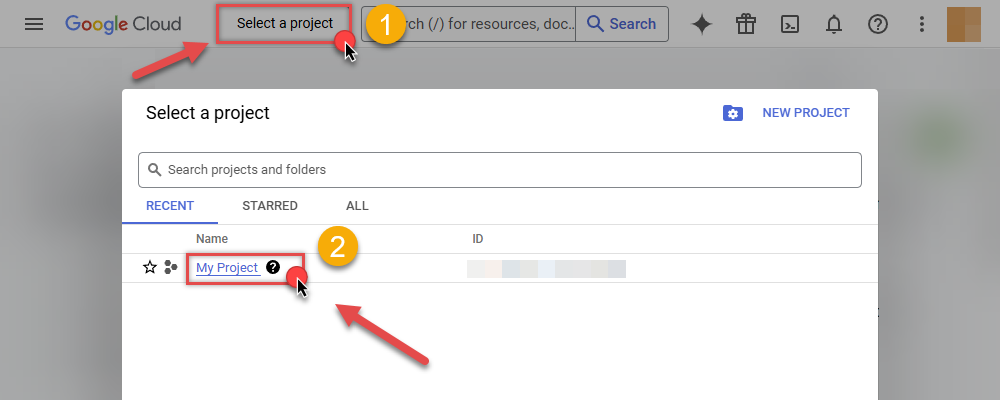

-

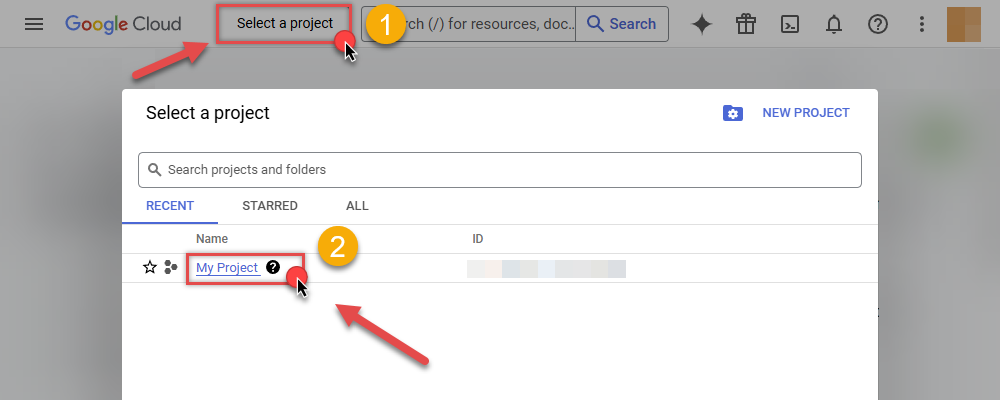

Select your project on the top bar:

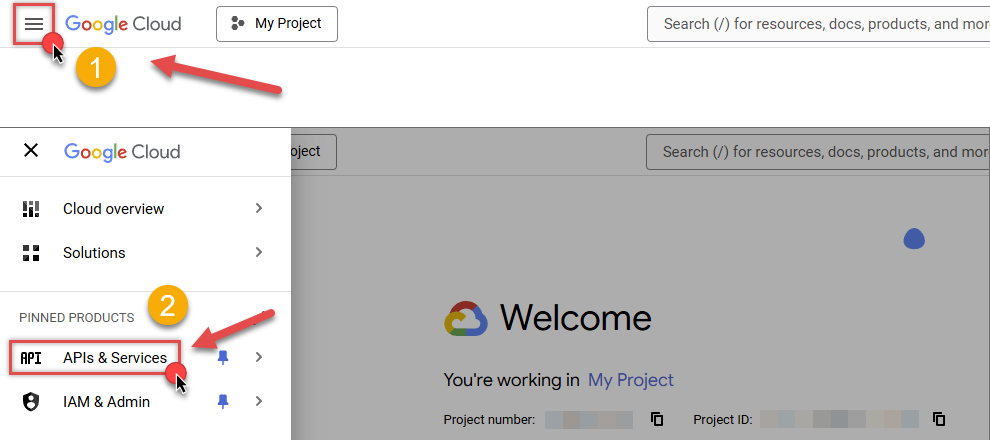

-

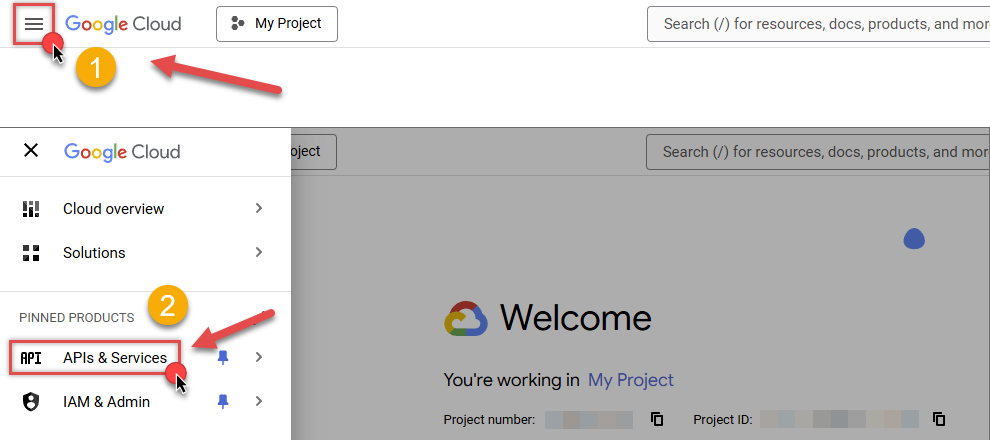

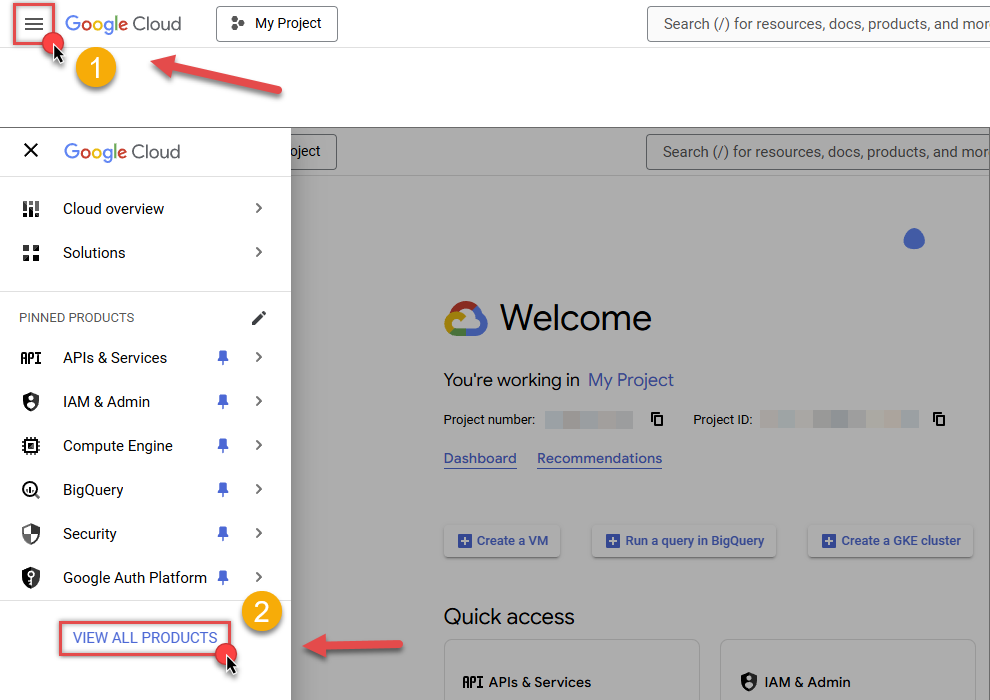

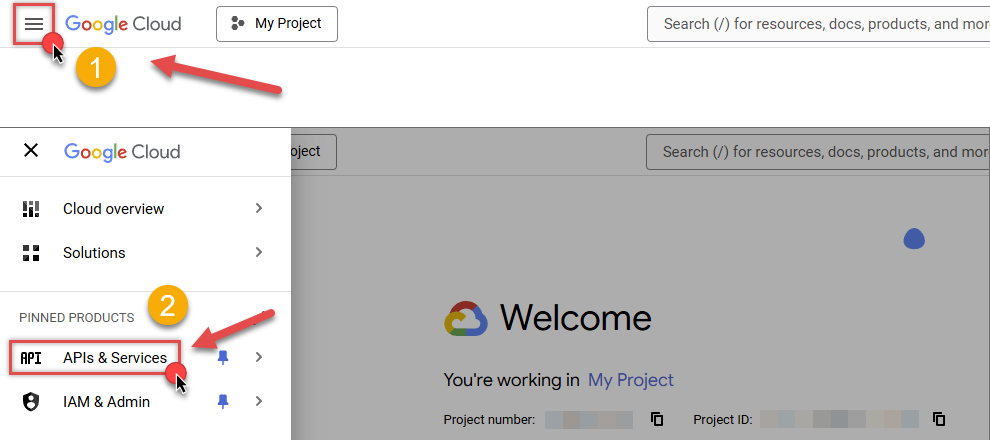

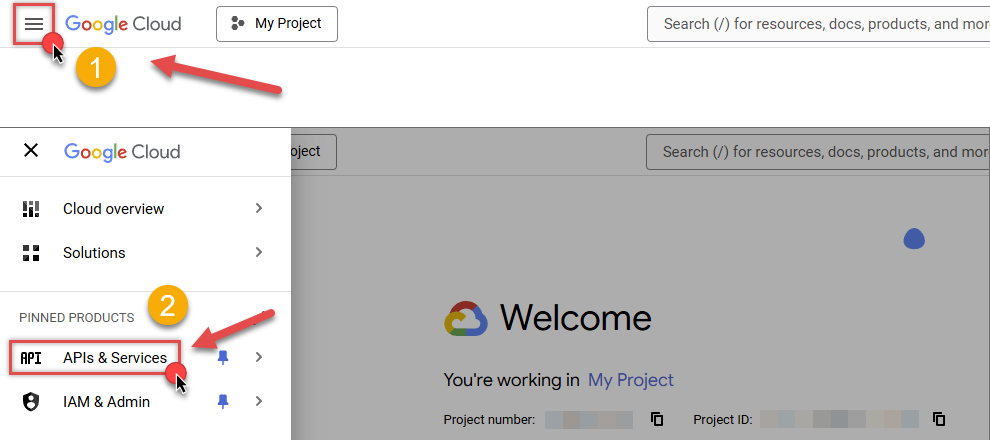

Then click the "hamburger" icon on the top left and access APIs & Services:

-

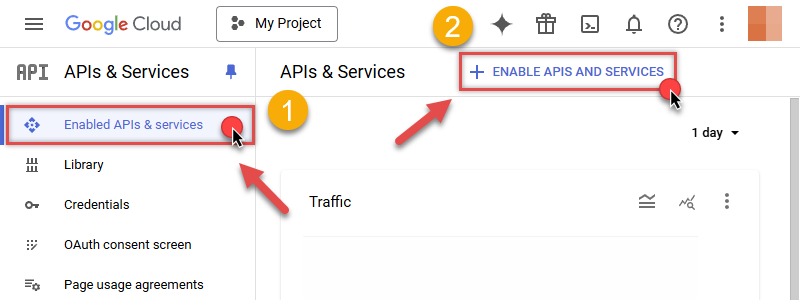

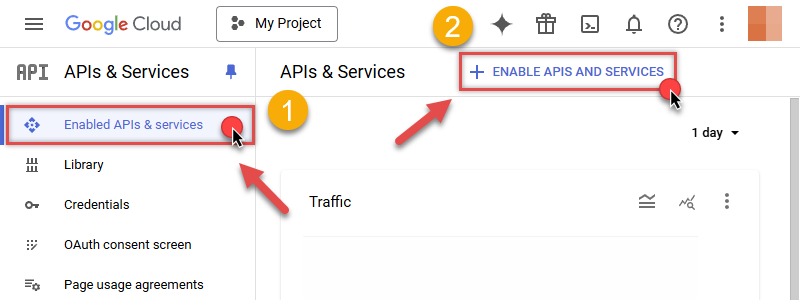

Now let's enable several APIs by clicking ENABLE APIS AND SERVICES button:

-

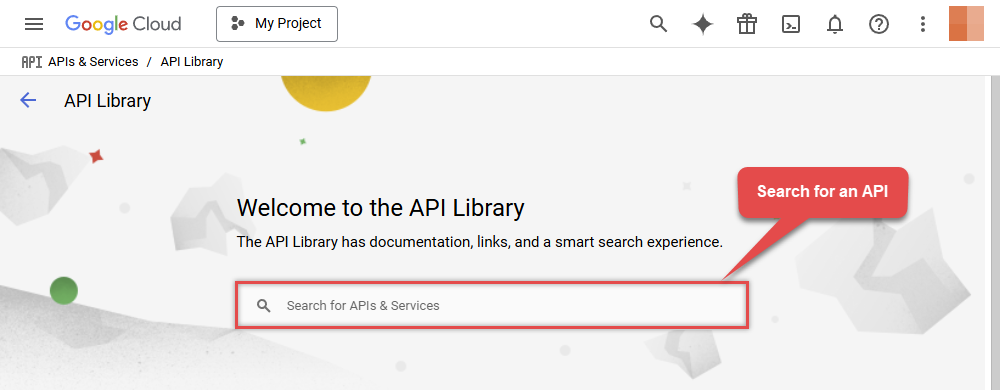

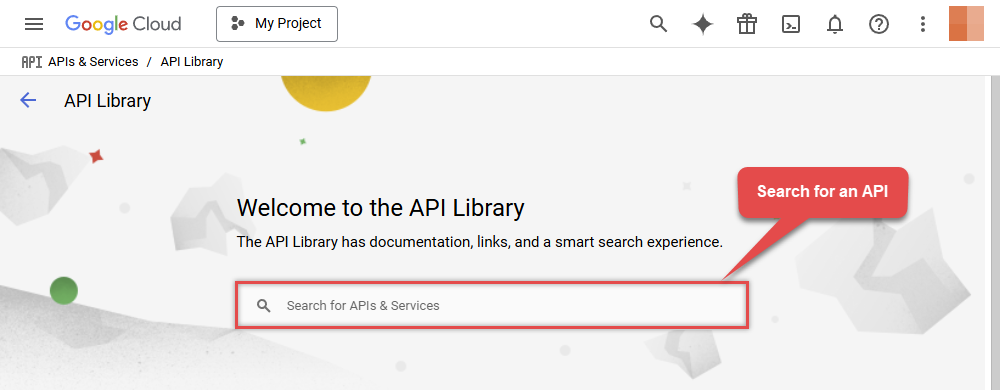

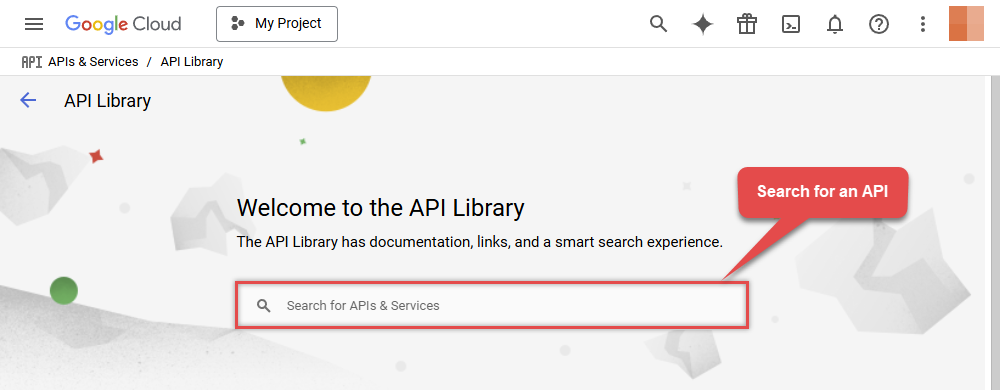

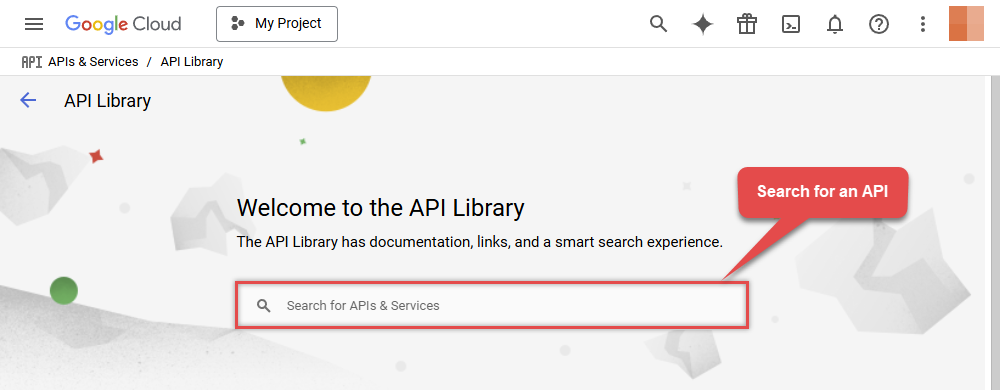

In the search bar search for

bigquery apiand then locate and select BigQuery API:

-

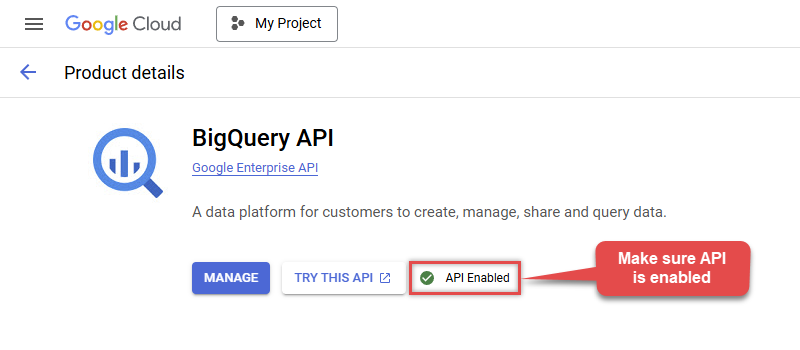

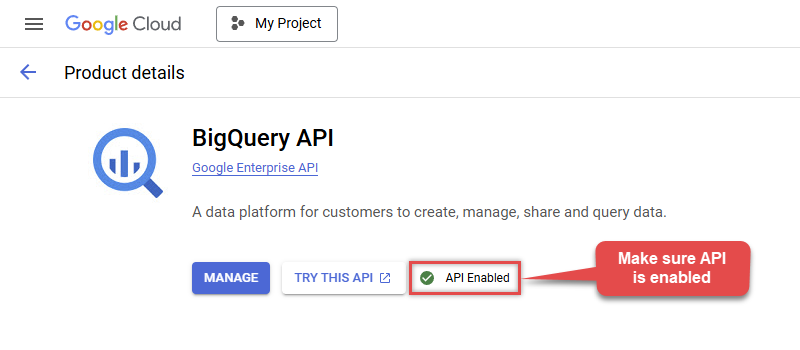

If BigQuery API is not enabled, enable it:

-

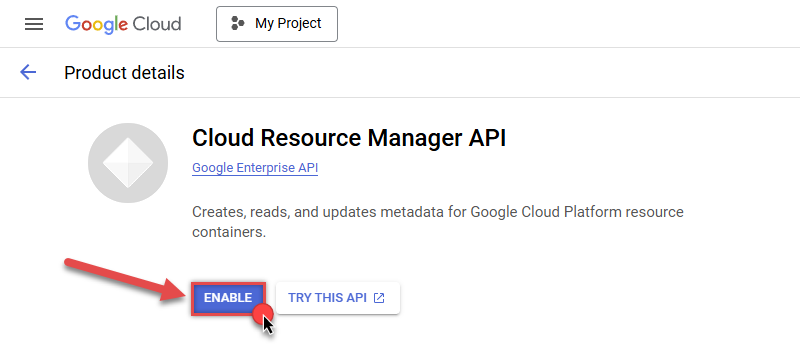

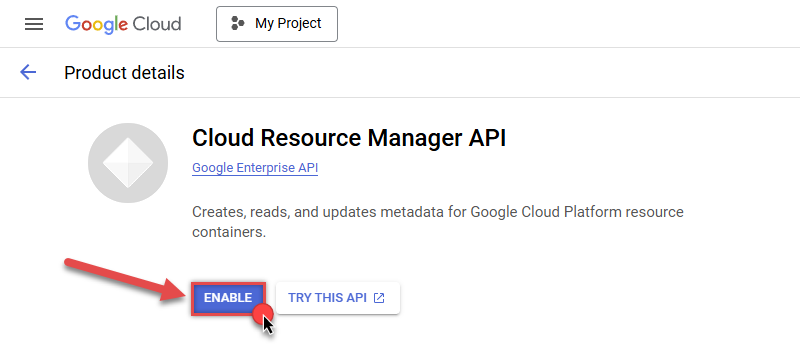

Then repeat the step and enable Cloud Resource Manager API as well:

- Done! Let's proceed to the next step.

Step-3: Create OAuth application

-

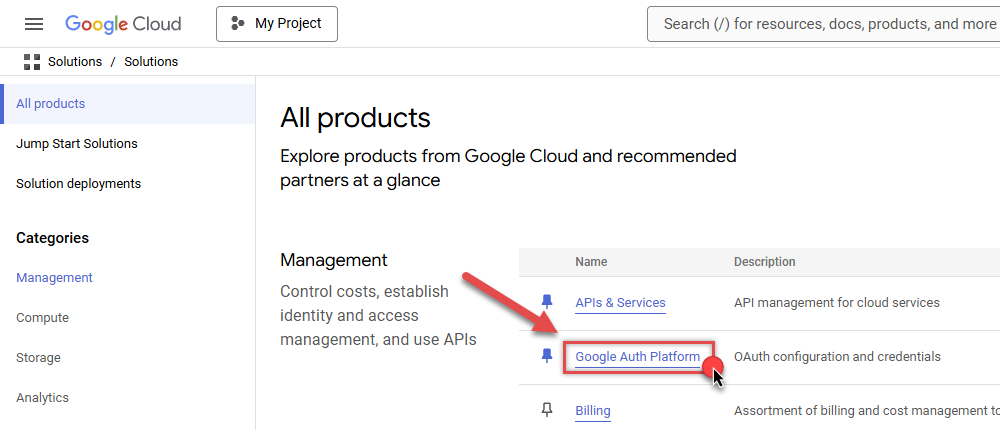

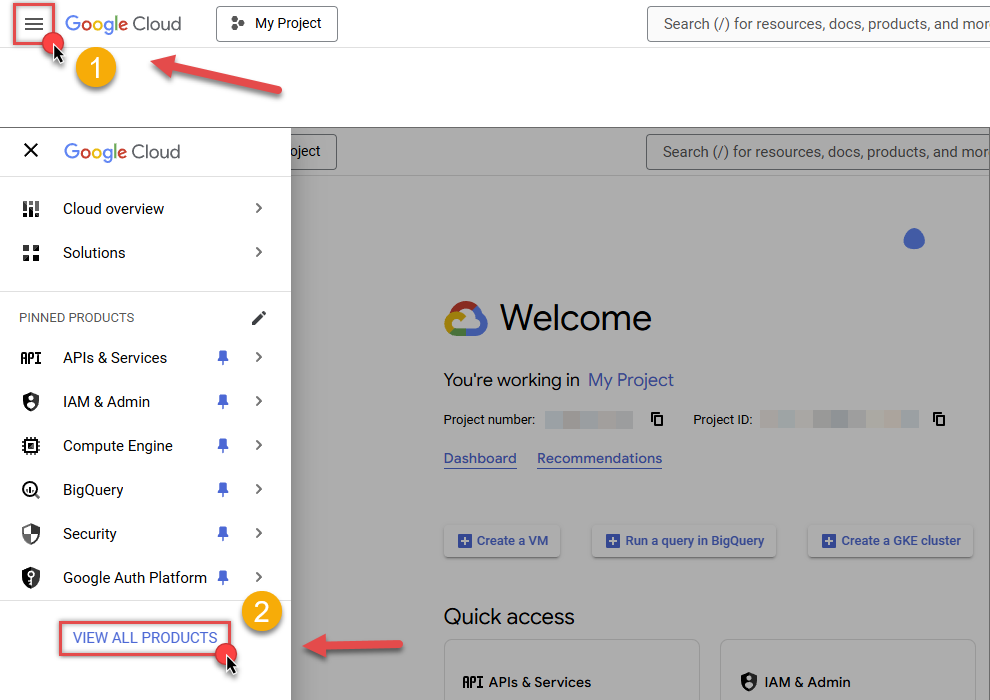

First of all, click the "hamburger" icon on the top left and then hit VIEW ALL PRODUCTS:

-

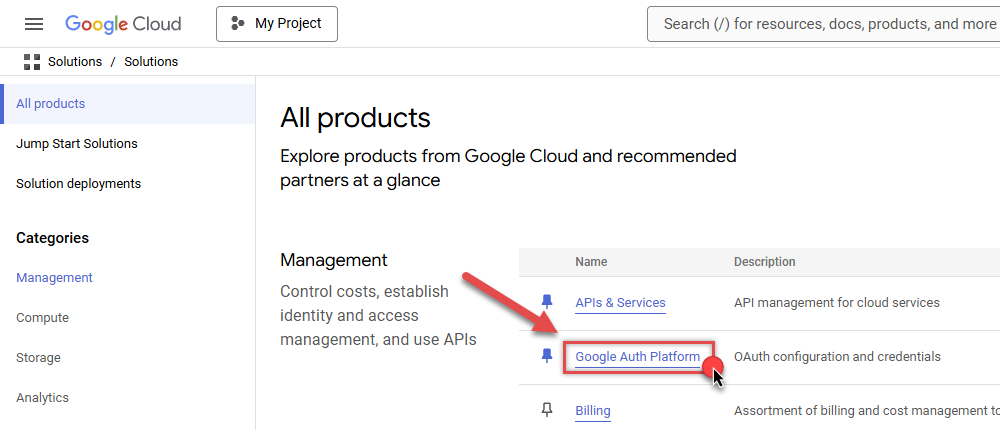

Then access Google Auth Platform to start creating an OAuth application:

-

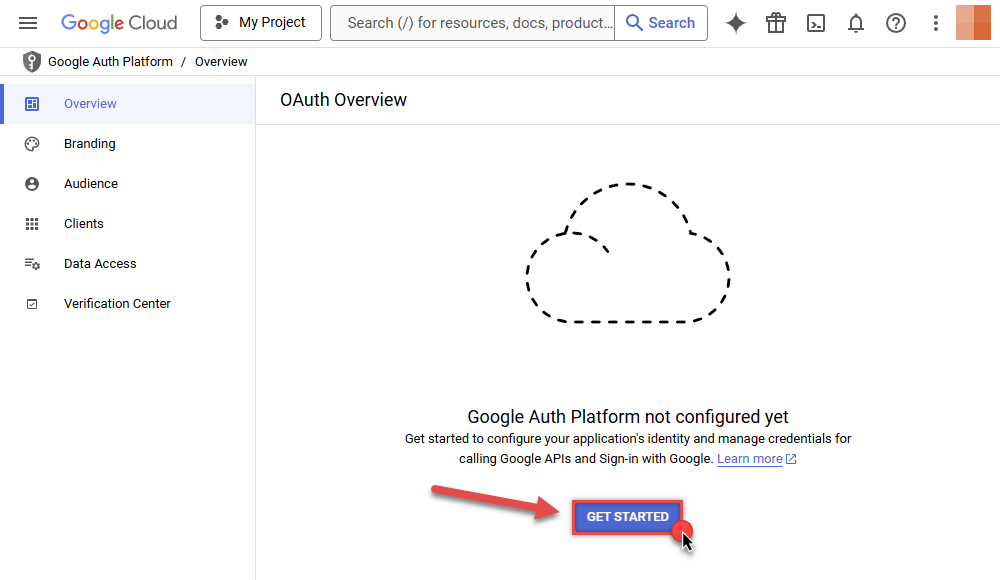

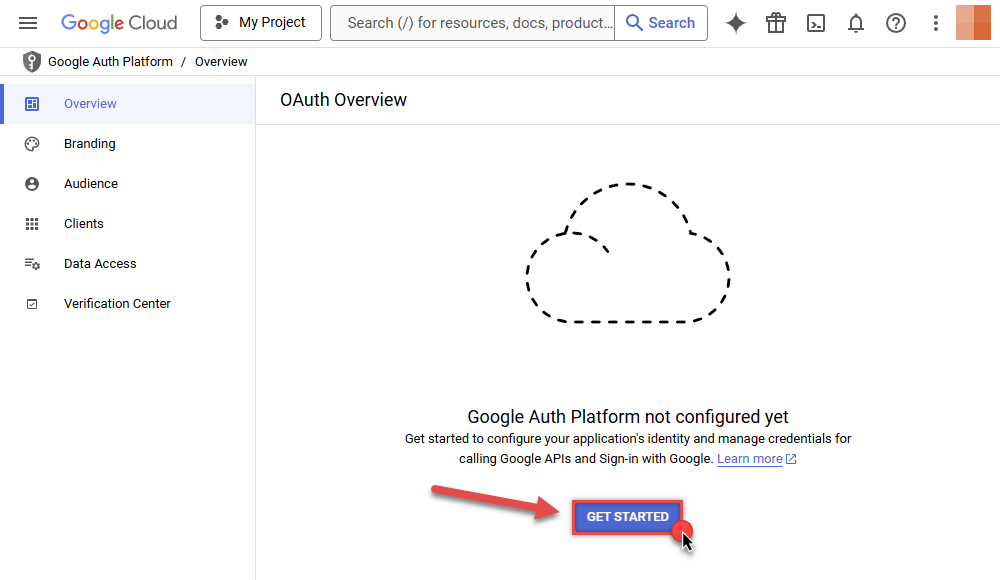

Start by pressing GET STARTED button:

-

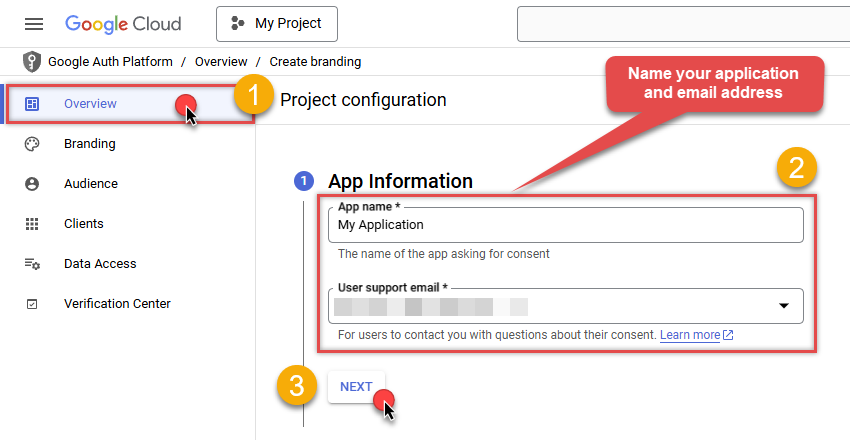

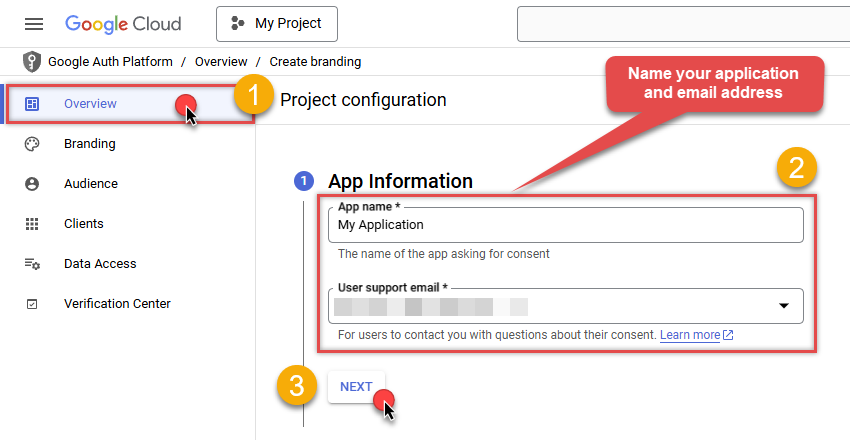

Next, continue by filling in App name and User support email fields:

-

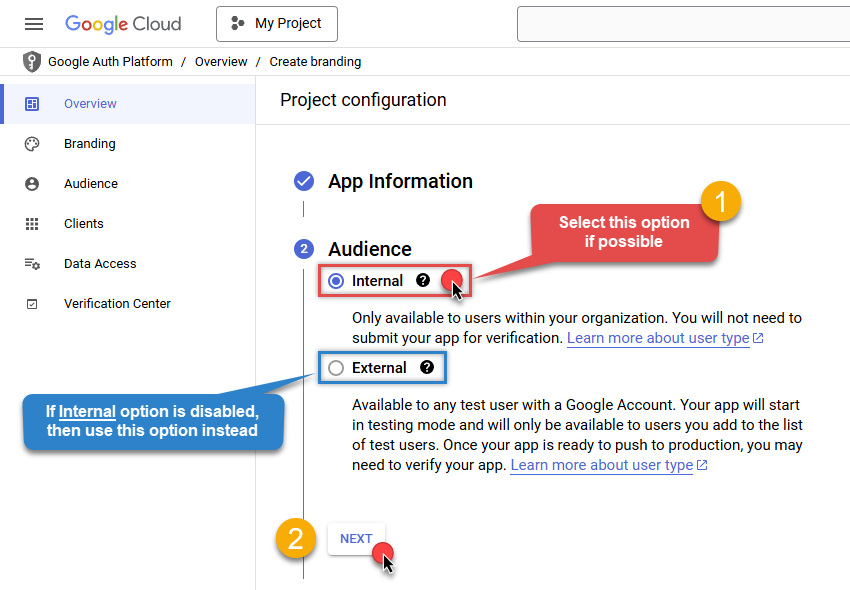

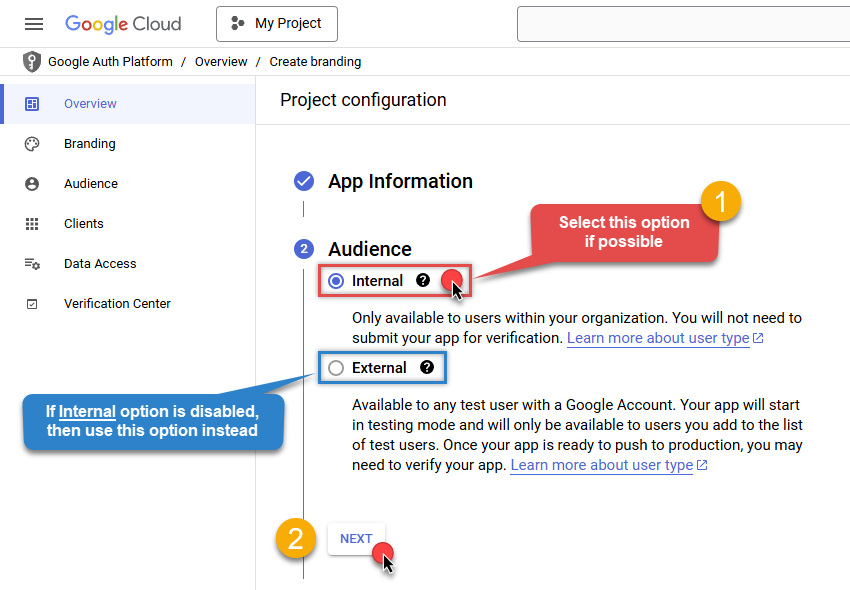

Choose Internal option, if it's enabled, otherwise select External:

-

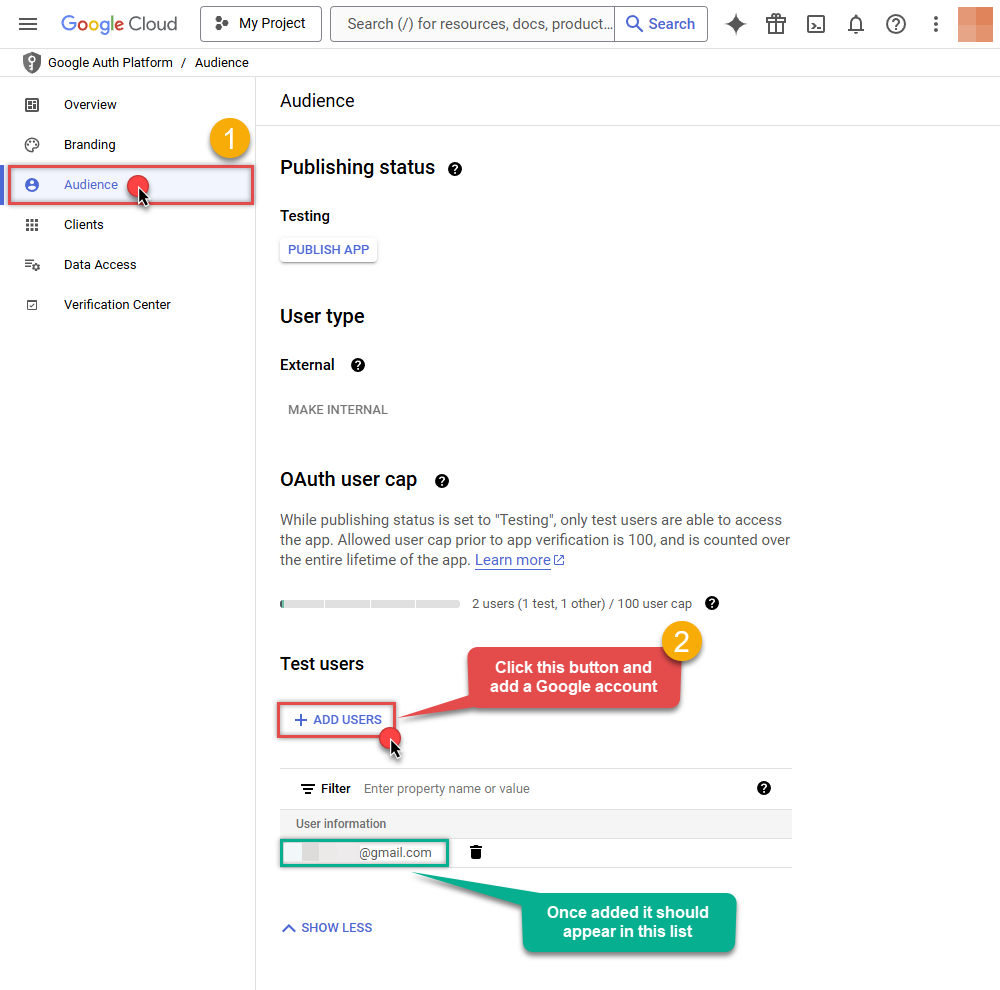

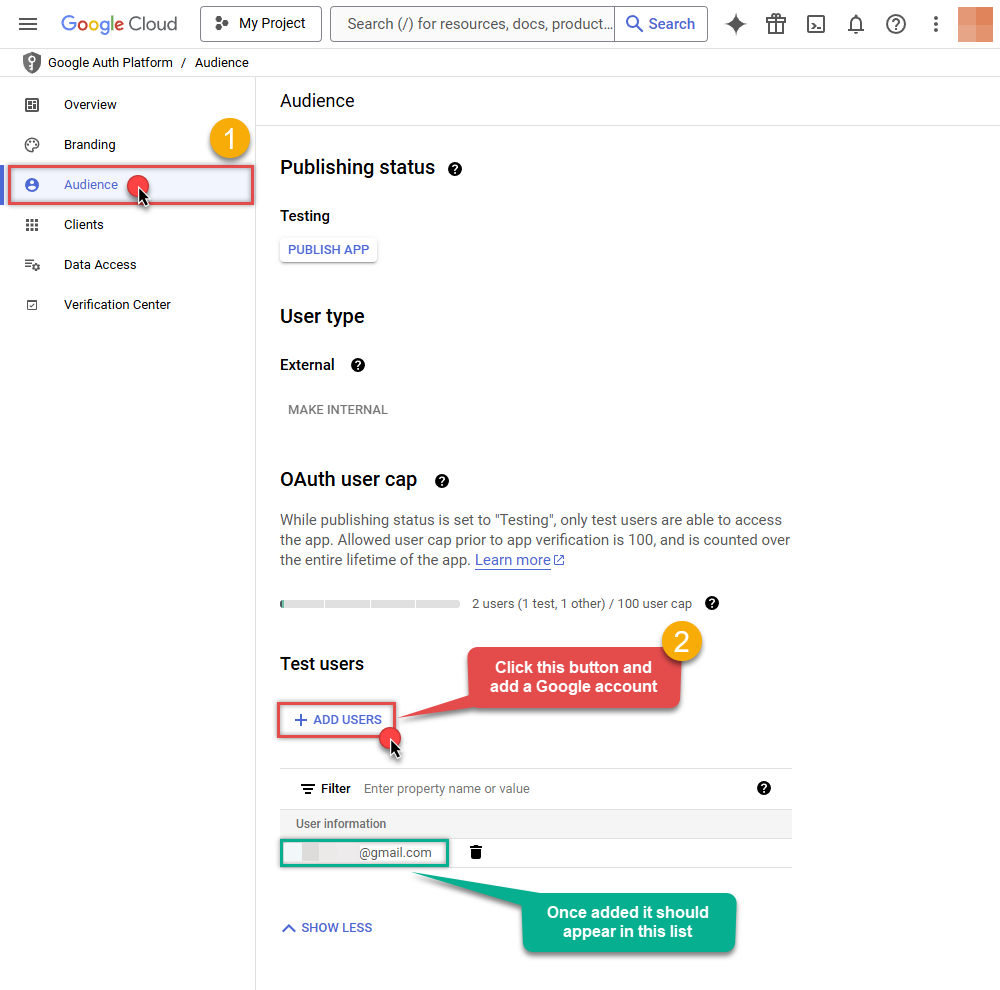

Optional step if you used

Internaloption in the previous step. Nevertheless, if you had to useExternaloption, then click ADD USERS to add a user:

-

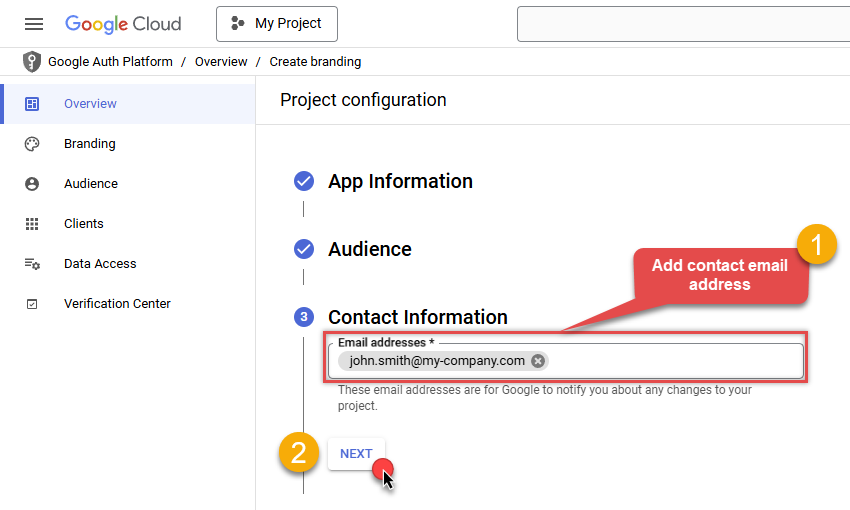

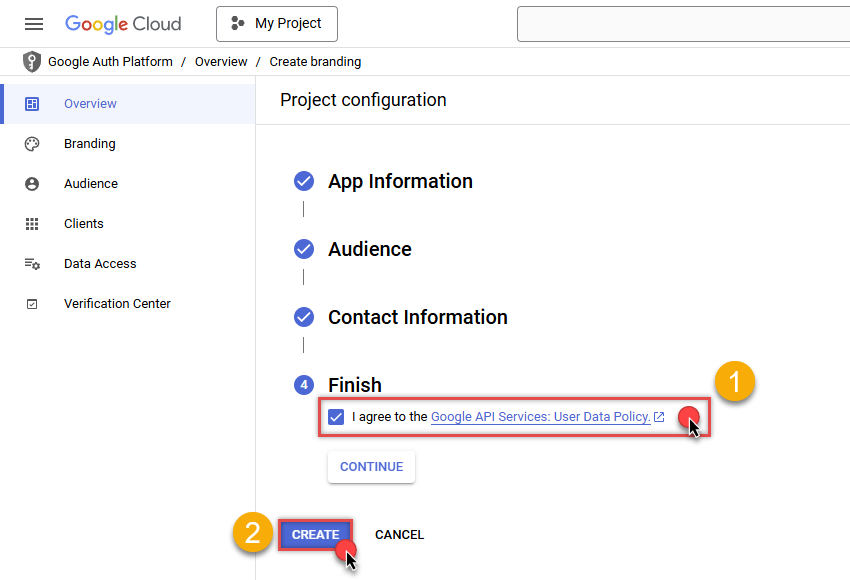

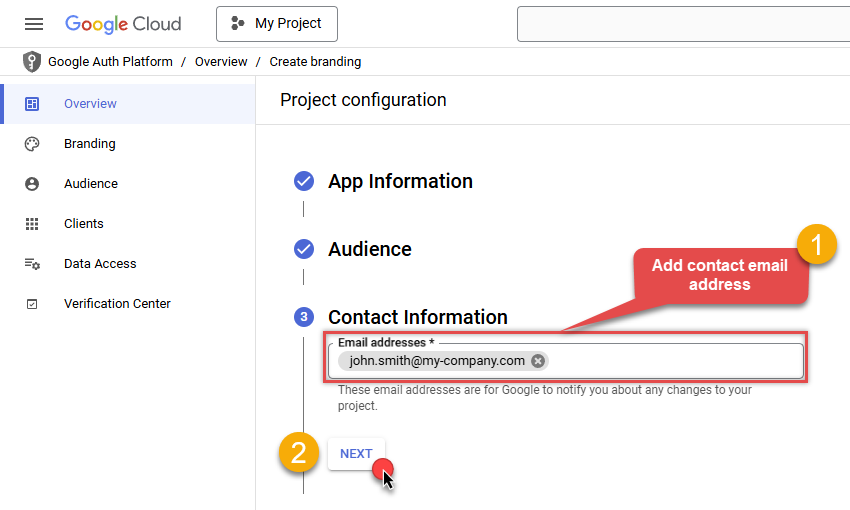

Then add your contact Email address:

-

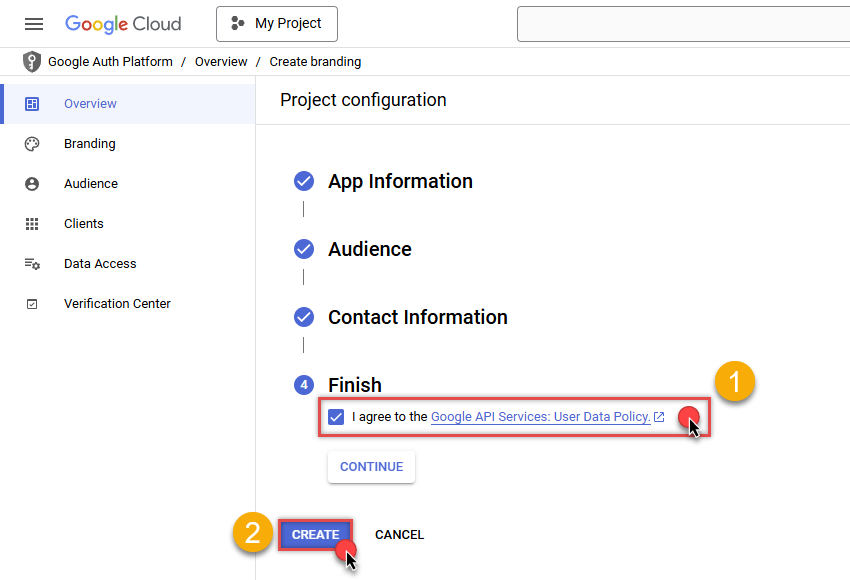

Finally, check the checkbox and click CREATE button:

- Done! Let's create Client Credentials in the next step.

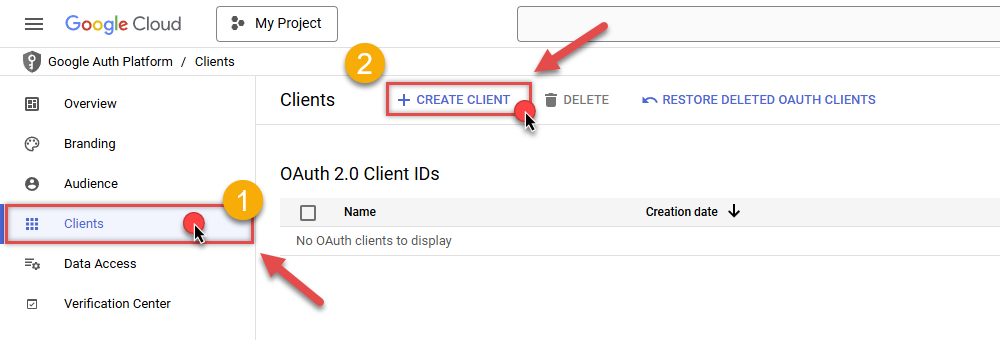

Step-4: Create Client Credentials

-

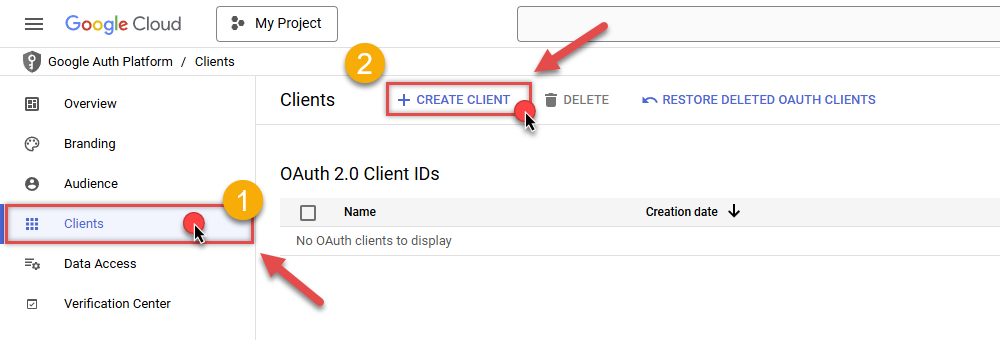

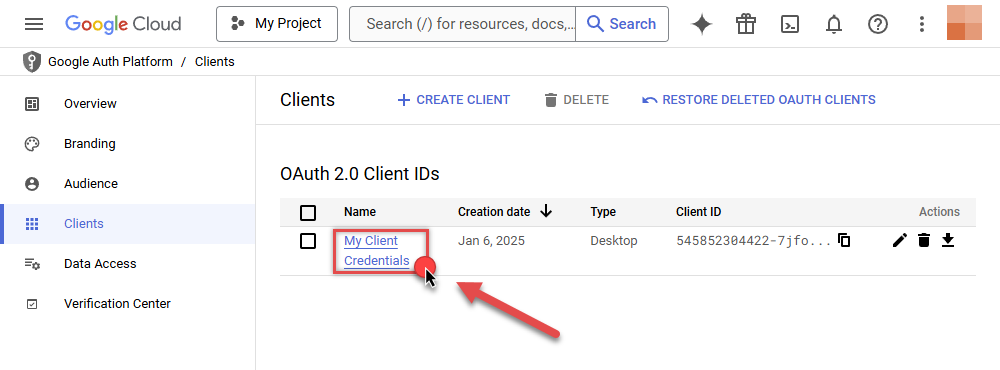

In Google Auth Platform, select Clients menu item and click CREATE CLIENT button:

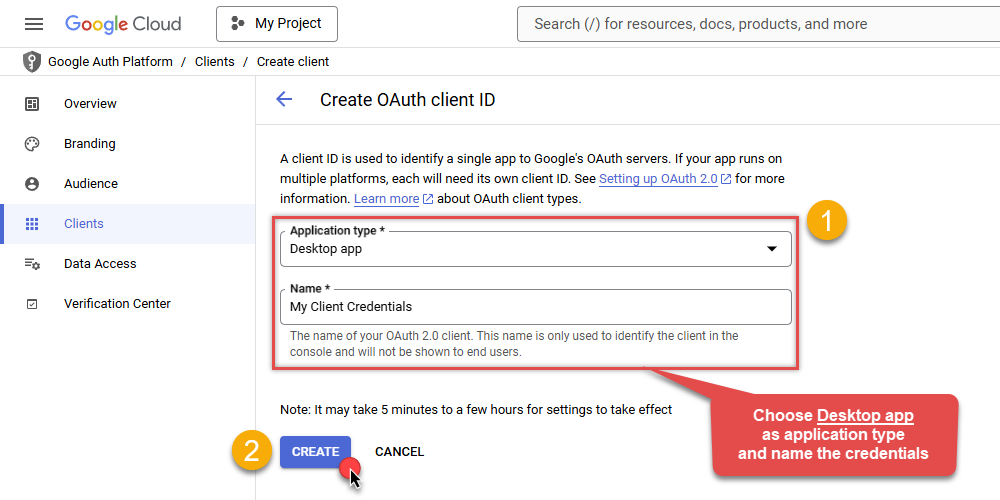

-

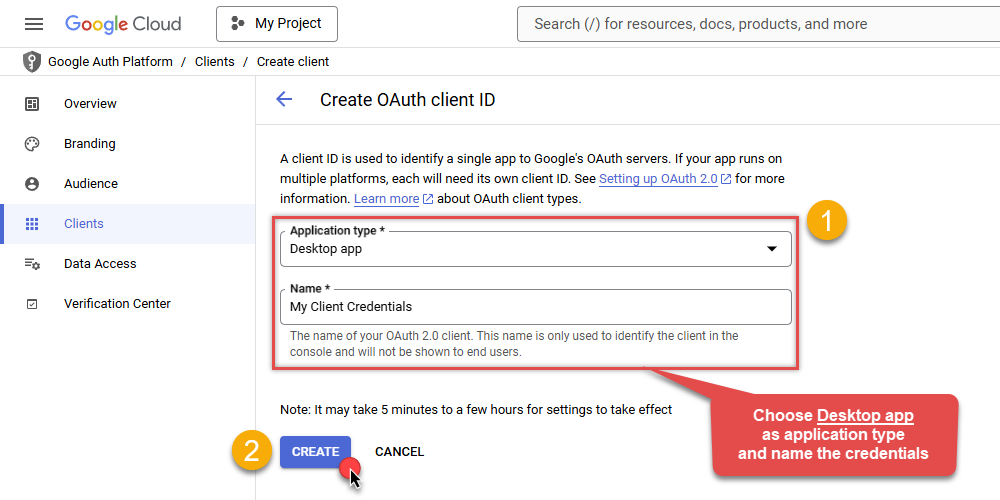

Choose

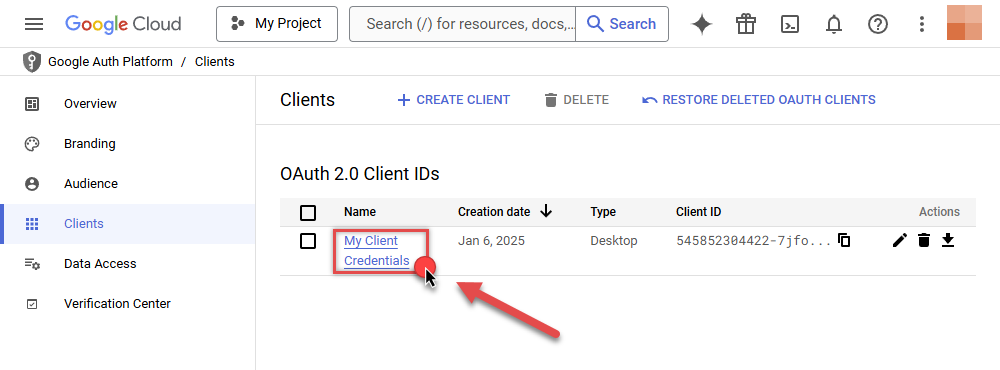

Desktop appas Application type and name your credentials:

-

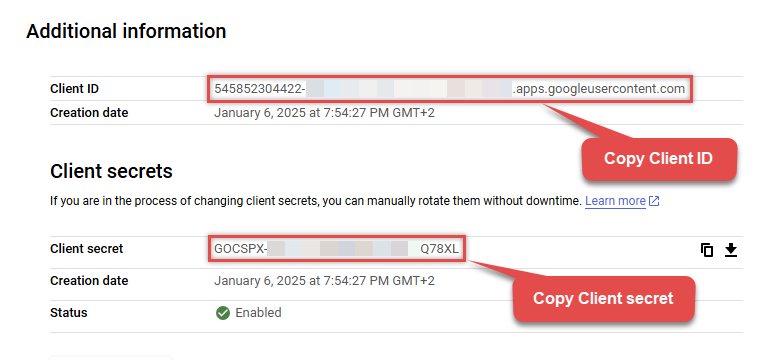

Continue by opening the created credentials:

-

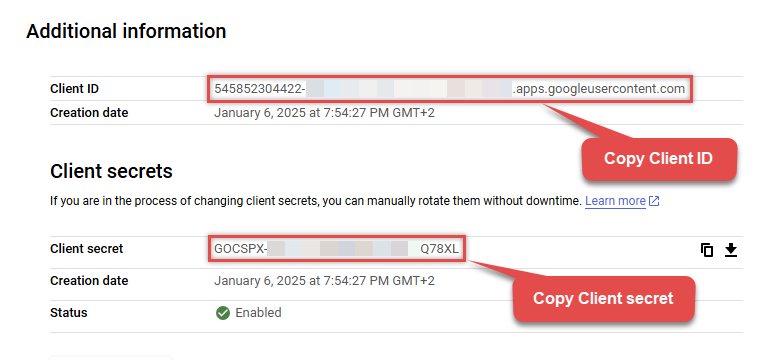

Finally, copy Client ID and Client secret for the later step:

-

Done! We have all the data needed for authentication, let's proceed to the last step!

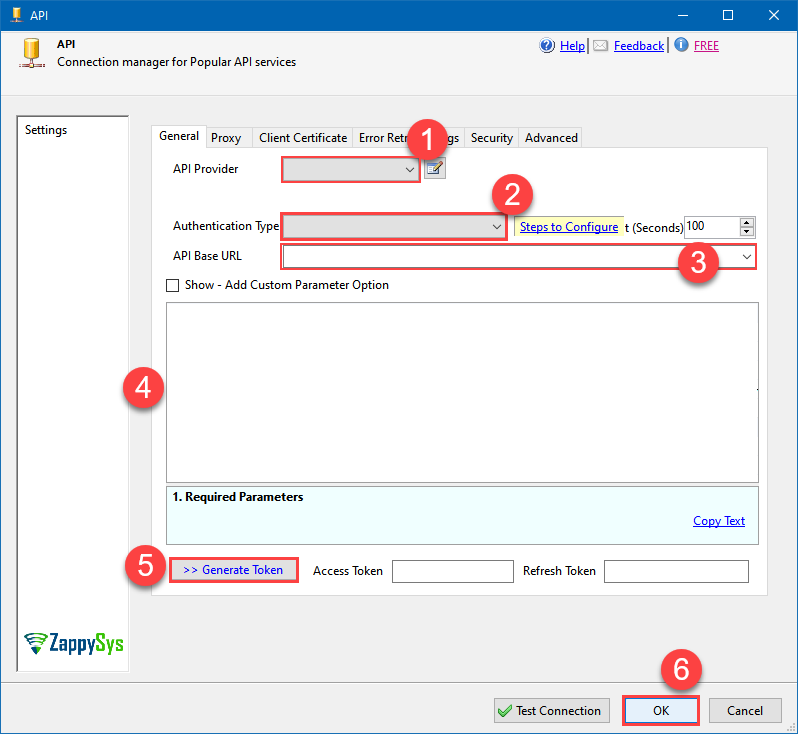

Step-5: Configure connection

-

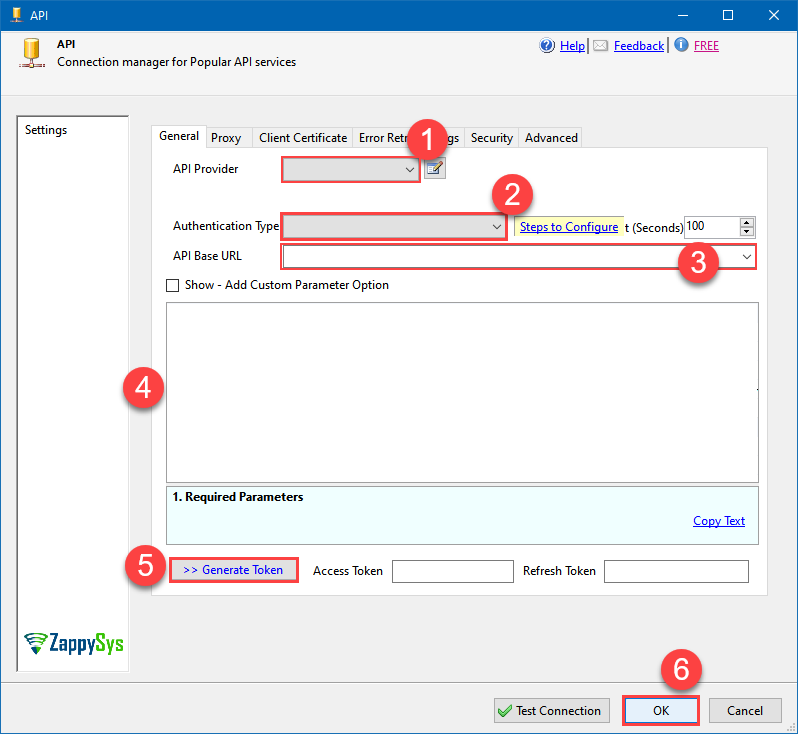

Now go to SSIS package or ODBC data source and use previously copied values in User Account authentication configuration:

- In the ClientId field paste the Client ID value.

- In the ClientSecret field paste the Client secret value.

-

Press Generate Token button to generate Access and Refresh Tokens.

-

Then choose ProjectId from the drop down menu.

-

Continue by choosing DatasetId from the drop down menu.

-

Finally, click Test Connection to confirm the connection is working.

-

Done! Now you are ready to use Google BigQuery Connector!
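For readers curious about what Generate Token does with the Client ID and Client secret, the sketch below shows the standard Google OAuth 2.0 flow for desktop apps: build a consent URL, then exchange the returned authorization code for tokens. It is an illustration under assumptions, not the connector's code. The loopback redirect URI and the helper names are hypothetical; access_type=offline is the Google parameter that requests a refresh token.

```python
from urllib.parse import urlencode

# Google's OAuth 2.0 endpoints for installed (desktop) applications.
AUTH_ENDPOINT = "https://accounts.google.com/o/oauth2/v2/auth"
TOKEN_ENDPOINT = "https://oauth2.googleapis.com/token"

# Assumption: a loopback redirect URI that a desktop client could listen on.
REDIRECT_URI = "http://127.0.0.1:8080"

BIGQUERY_SCOPE = "https://www.googleapis.com/auth/bigquery"


def build_authorization_url(client_id: str, scopes: list[str]) -> str:
    """Return the consent-screen URL the user opens in a browser."""
    params = {
        "client_id": client_id,
        "redirect_uri": REDIRECT_URI,
        "response_type": "code",
        "scope": " ".join(scopes),  # multiple scopes are space-separated
        "access_type": "offline",   # ask Google to issue a refresh token too
        "prompt": "consent",
    }
    return AUTH_ENDPOINT + "?" + urlencode(params)


def build_token_request(client_id: str, client_secret: str, code: str) -> bytes:
    """Form-encoded body to POST to TOKEN_ENDPOINT to swap the code for tokens."""
    body = {
        "code": code,
        "client_id": client_id,
        "client_secret": client_secret,
        "redirect_uri": REDIRECT_URI,
        "grant_type": "authorization_code",
    }
    return urlencode(body).encode("ascii")
```

For example, `build_authorization_url("my-client-id.apps.googleusercontent.com", [BIGQUERY_SCOPE])` returns the URL a browser would open. The refresh token obtained this way is what lets the connection keep working unattended, which is also why the warning above about personal accounts matters.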

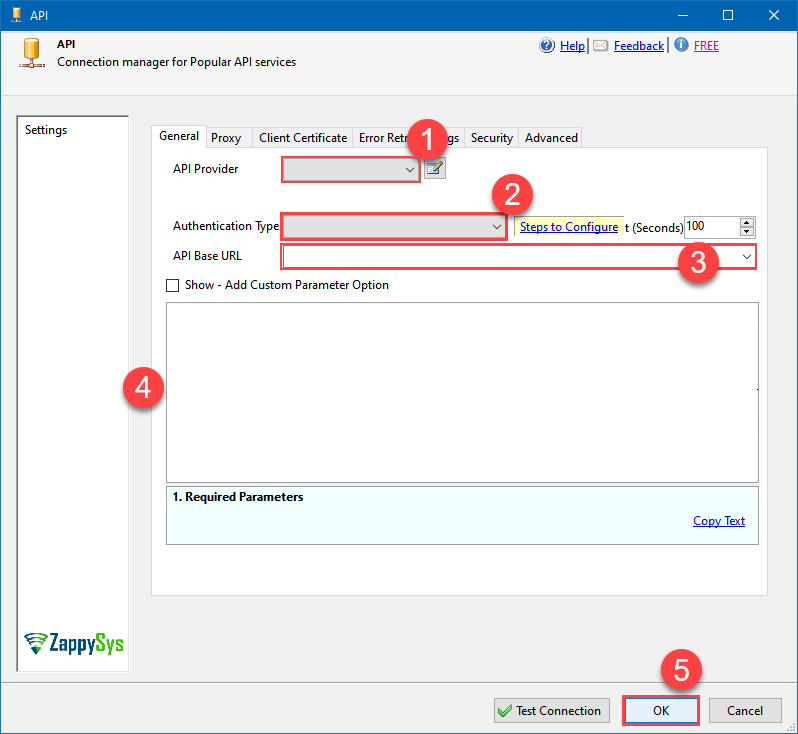

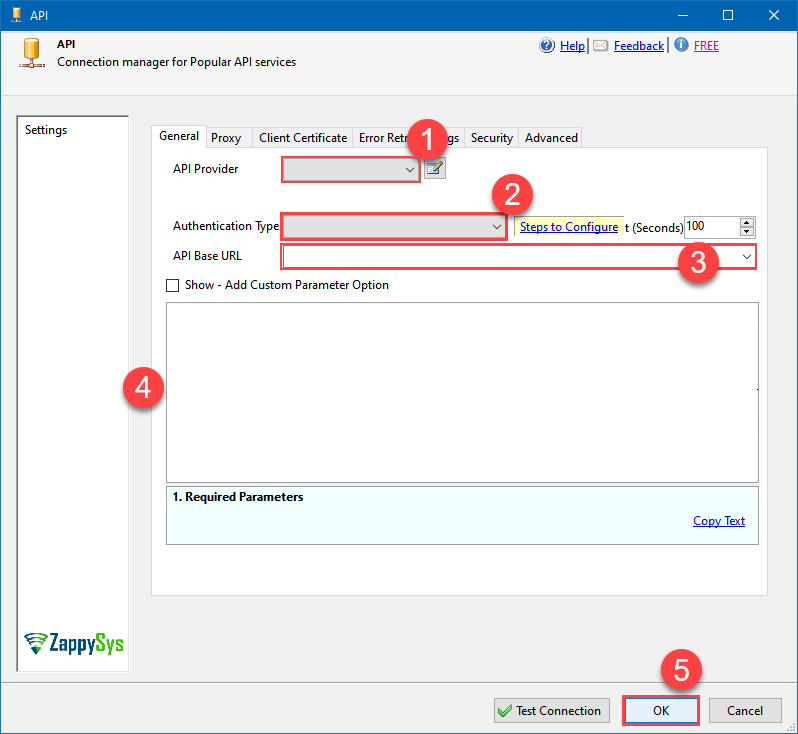

API Connection Manager configuration

Just perform these simple steps to finish the authentication configuration:

- Set Authentication Type to User Account [OAuth].

- Optional step. Modify the API Base URL if needed (in most cases the default will work).

- Fill in all the required parameters and set optional parameters if needed.

- Click the Generate Token button to generate the tokens.

- Finally, click the OK button:

Connector: Google BigQuery

Authentication Type: User Account [OAuth]

API Base URL: https://www.googleapis.com/bigquery/v2

Required Parameters:

- UseCustomApp: Fill in the parameter...

- ProjectId (Choose after [Generate Token] clicked): Fill in the parameter...

- DatasetId (Choose after [Generate Token] clicked and ProjectId selected): Fill in the parameter...

Optional Parameters:

- ClientId

- ClientSecret

- Scope: https://www.googleapis.com/auth/bigquery https://www.googleapis.com/auth/bigquery.insertdata https://www.googleapis.com/auth/cloud-platform https://www.googleapis.com/auth/cloud-platform.read-only https://www.googleapis.com/auth/devstorage.full_control https://www.googleapis.com/auth/devstorage.read_only https://www.googleapis.com/auth/devstorage.read_write

- RetryMode: RetryWhenStatusCodeMatch

- RetryStatusCodeList: 429|503

- RetryCountMax: 5

- RetryMultiplyWaitTime: True

- Job Location

- Redirect URL (Only for Web App)
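The retry parameters above (RetryMode RetryWhenStatusCodeMatch, RetryStatusCodeList 429|503, RetryCountMax 5, RetryMultiplyWaitTime True) describe a retry-with-backoff policy. The sketch below shows one way such a policy could behave; it is not ZappySys's implementation. The 1-second base wait and doubling the wait on each retry are our assumptions about what "multiply wait time" means.

```python
import time
from typing import Callable

# Values mirroring the connection's optional retry parameters.
RETRY_STATUS_CODES = {int(code) for code in "429|503".split("|")}  # RetryStatusCodeList
RETRY_COUNT_MAX = 5          # RetryCountMax
MULTIPLY_WAIT_TIME = True    # RetryMultiplyWaitTime
BASE_WAIT_SECONDS = 1.0      # assumption: the initial wait is not documented here


def wait_before_retry(attempt: int) -> float:
    """Seconds to wait before retry number `attempt` (1-based)."""
    if MULTIPLY_WAIT_TIME:
        return BASE_WAIT_SECONDS * (2 ** (attempt - 1))  # 1, 2, 4, 8, 16 ...
    return BASE_WAIT_SECONDS


def call_with_retry(send: Callable[[], int],
                    sleep: Callable[[float], None] = time.sleep) -> int:
    """Call `send` (returns an HTTP status code), retrying only on listed codes."""
    status = send()
    attempt = 0
    while status in RETRY_STATUS_CODES and attempt < RETRY_COUNT_MAX:
        attempt += 1
        sleep(wait_before_retry(attempt))
        status = send()
    return status
```

Retrying only on 429 (Too Many Requests) and 503 (Service Unavailable) keeps genuine errors such as 400 or 404 from being retried pointlessly, while the growing wait gives BigQuery's rate limits time to recover.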

Google BigQuery authentication: Service Account

Service accounts are accounts that do not represent a human user. They provide a way to manage authentication and authorization when a human is not directly involved, such as when an application needs to access Google Cloud resources. Service accounts are managed by IAM. [API reference]

Follow these steps to create a Service Account to authenticate and access the BigQuery API in an SSIS package or ODBC data source:

Step-1: Create project

This step is optional if you already have a project in Google Cloud that you can use. If you don't, follow these simple steps to create one:

- First, go to the Google API Console.

- Then click the Select a project button, and then click the NEW PROJECT button:

- Name your project and click the CREATE button:

- Wait until the project is created:

- Done! Let's proceed to the next step.

Step-2: Enable Google Cloud APIs

In this step we will enable the BigQuery API and the Cloud Resource Manager API:

- Select your project on the top bar:

- Then click the "hamburger" icon on the top left and go to APIs & Services:

- Now let's enable several APIs by clicking the ENABLE APIS AND SERVICES button:

- In the search bar, search for "bigquery api", then locate and select BigQuery API:

- If the BigQuery API is not enabled, enable it:

- Then repeat the step and enable the Cloud Resource Manager API as well:

- Done! Let's proceed to the next step and create a service account.

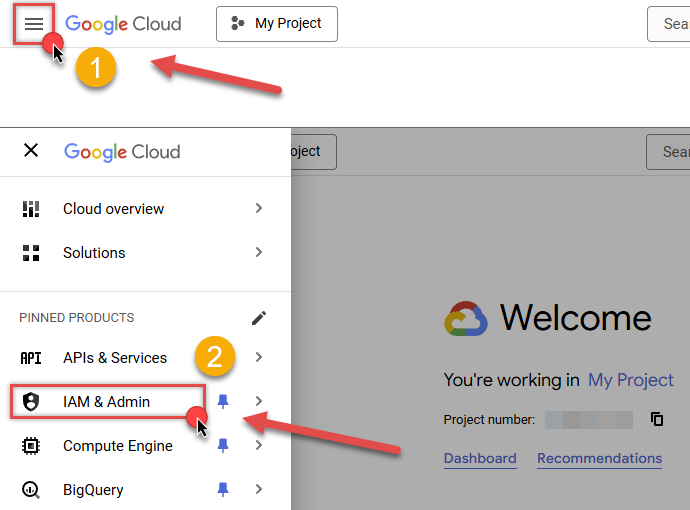

Step-3: Create Service Account

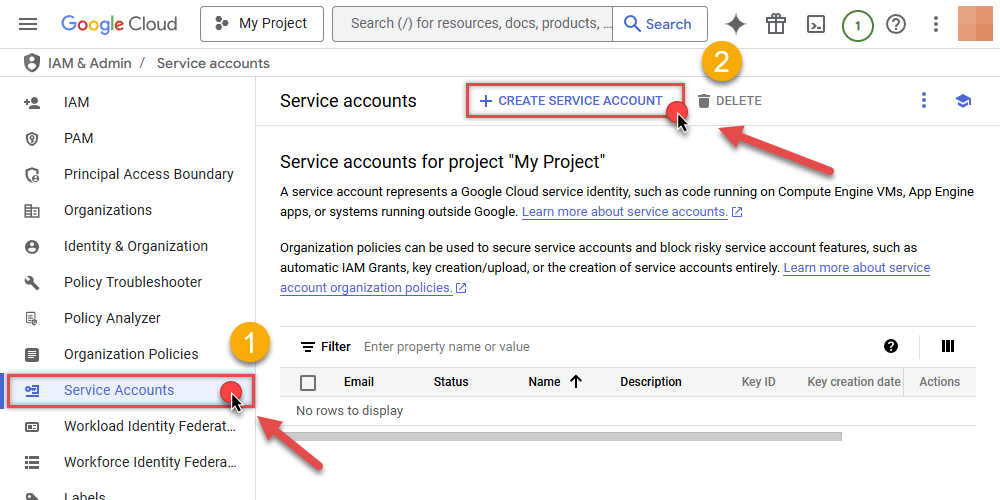

Use the steps below to create a Service Account in Google Cloud:

-

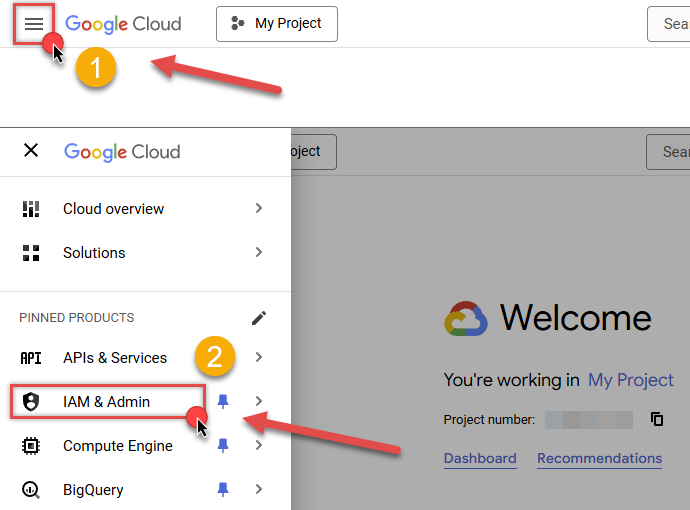

First of all, go to IAM & Admin in Google Cloud console:

-

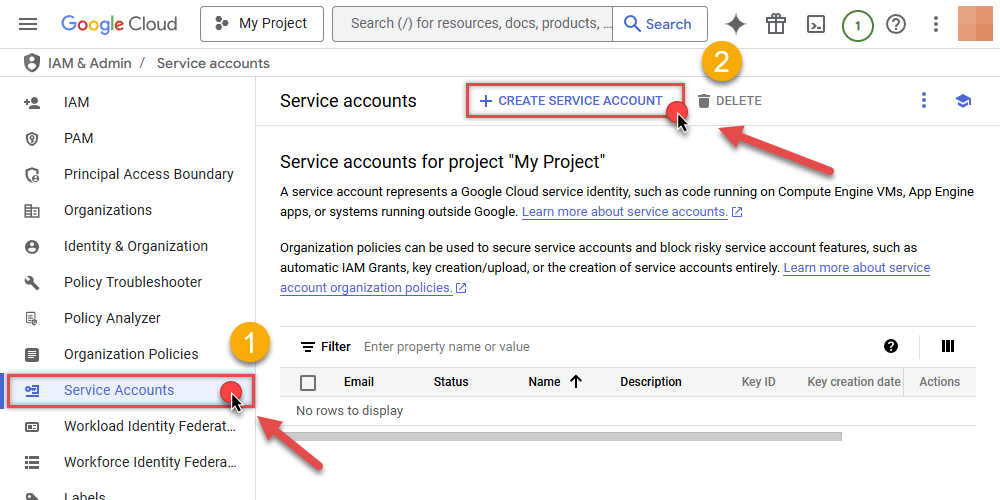

Once you do that, click Service Accounts on the left side and click CREATE SERVICE ACCOUNT button:

-

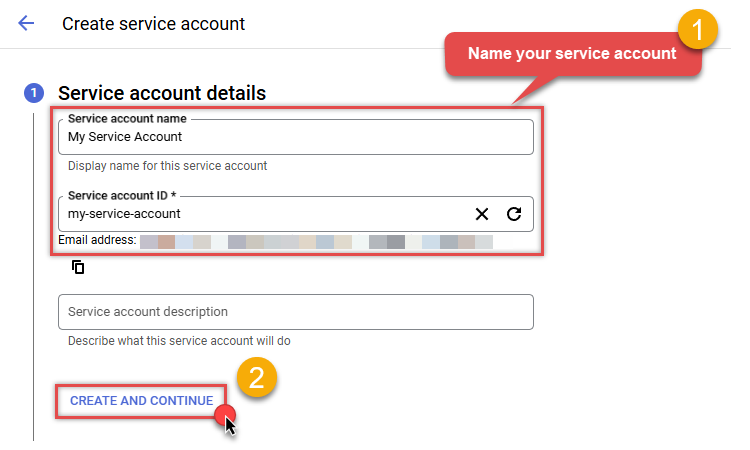

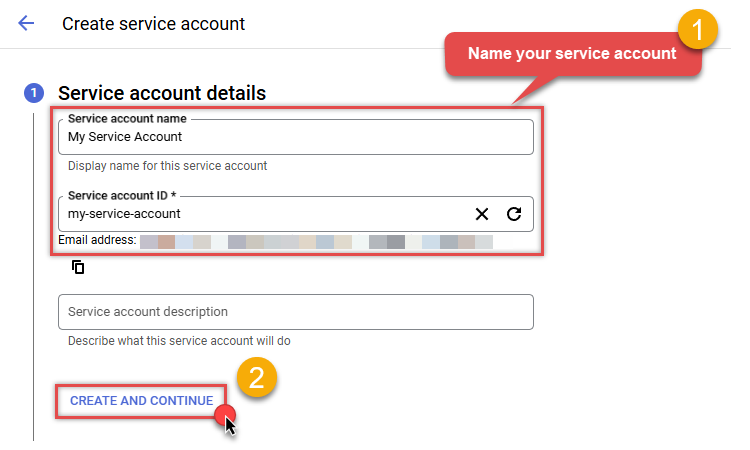

Then name your service account and click CREATE AND CONTINUE button:

-

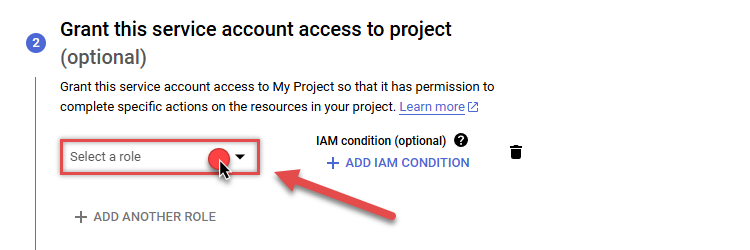

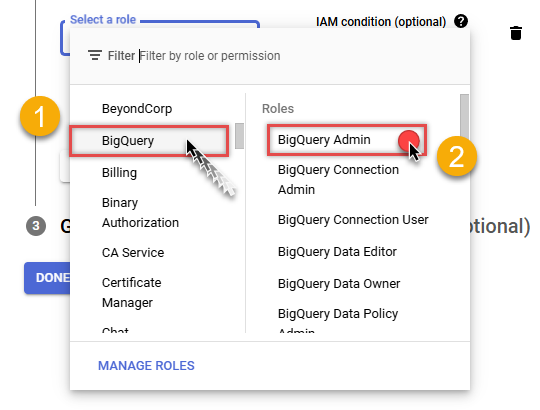

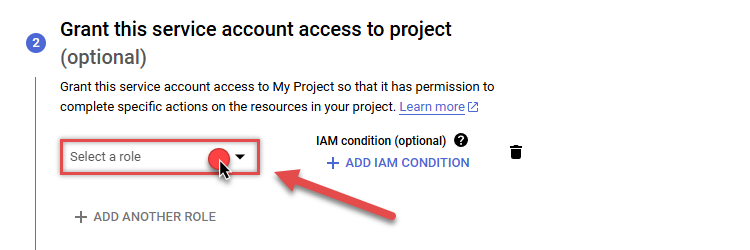

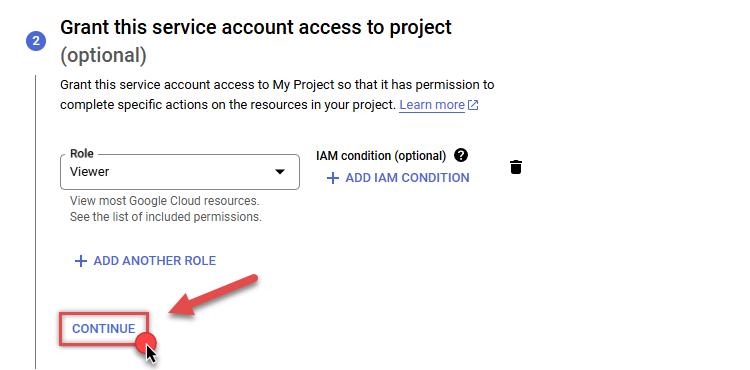

Continue by clicking Select a role dropdown and start granting service account BigQuery Admin and Project Viewer roles:

-

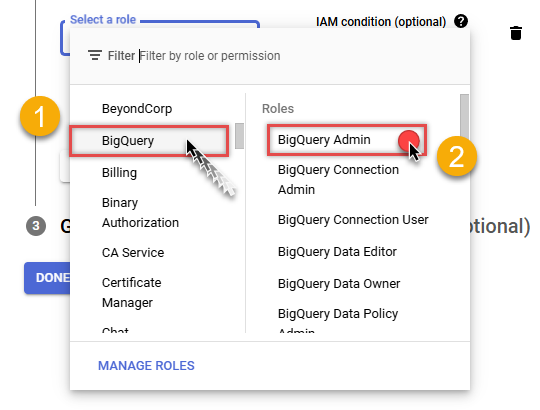

Find BigQuery group on the left and then click on BigQuery Admin role on the right:

-

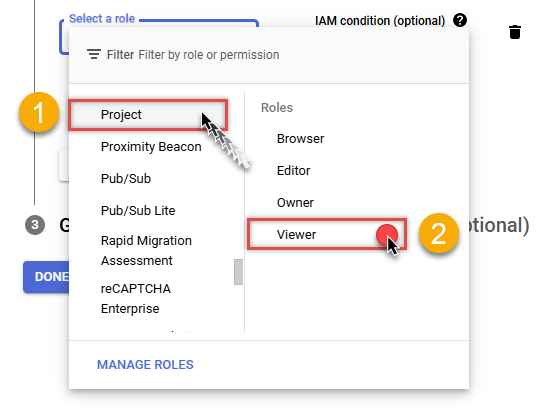

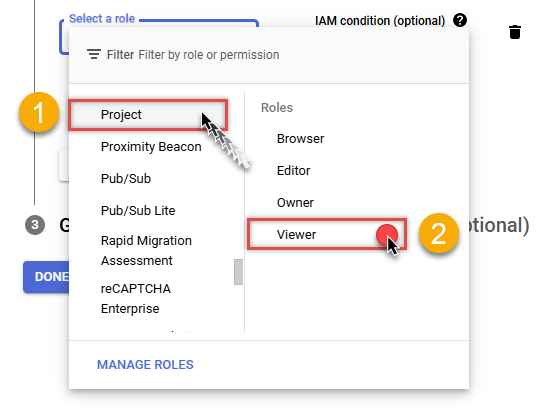

Then click ADD ANOTHER ROLE button, find Project group and select Viewer role:

-

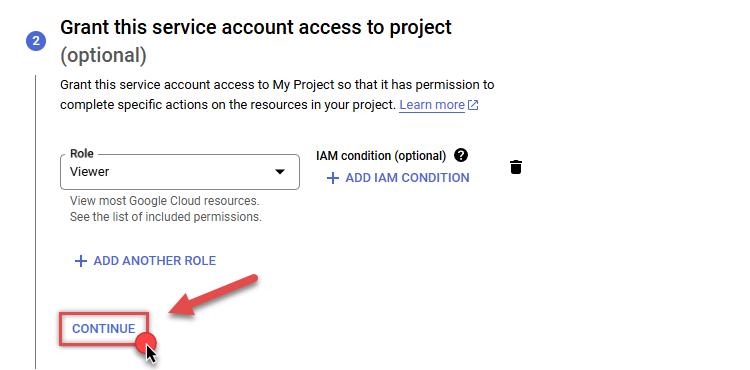

Finish adding roles by clicking CONTINUE button:

You can always add or modify permissions later in IAM & Admin.

You can always add or modify permissions later in IAM & Admin. -

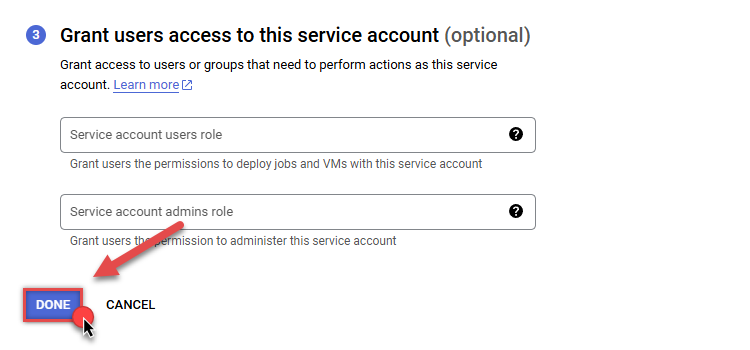

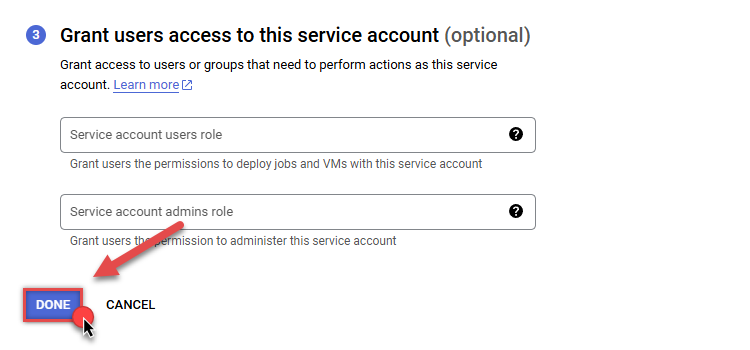

Finally, in the last step, just click button DONE:

-

Done! We are ready to add a Key to this service account in the next step.

Step-4: Add Key to Service Account

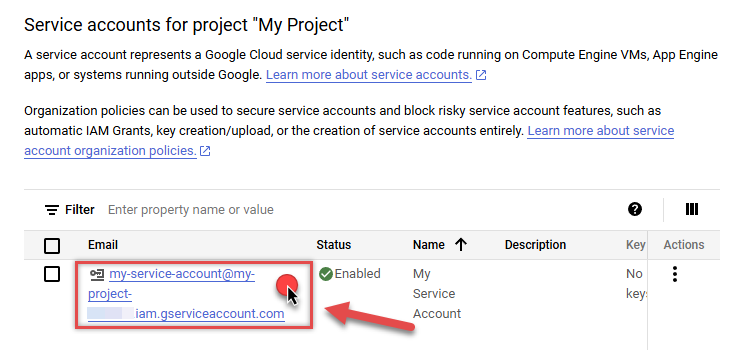

We are ready to add a Key (JSON or P12 key file) to the created Service Account:

-

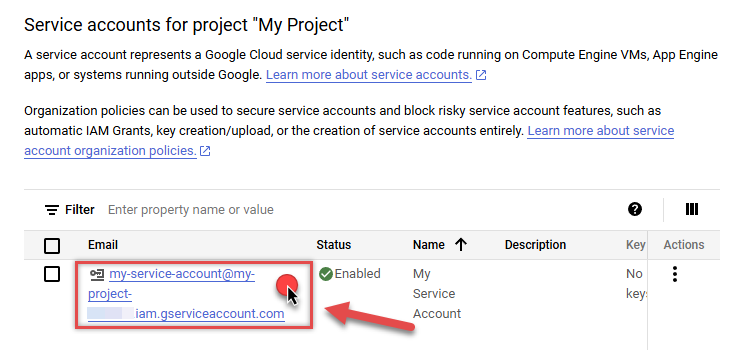

In Service Accounts open newly created service account:

-

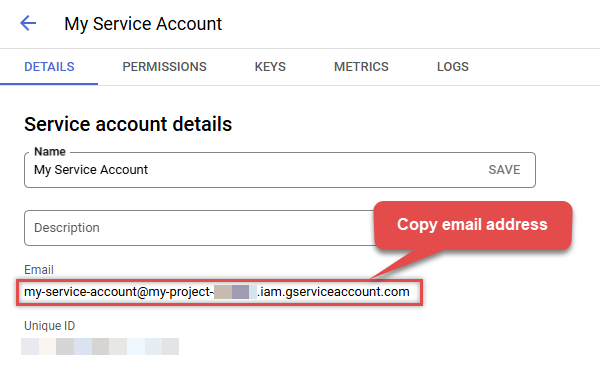

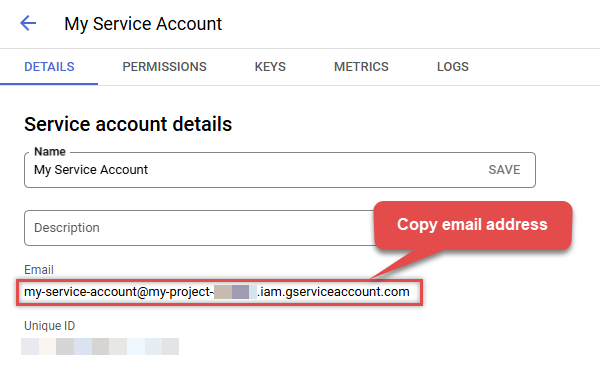

Next, copy email address of your service account for the later step:

-

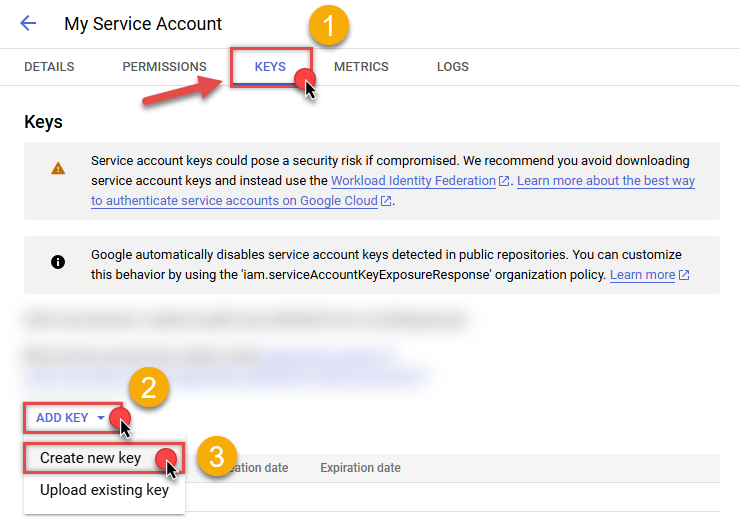

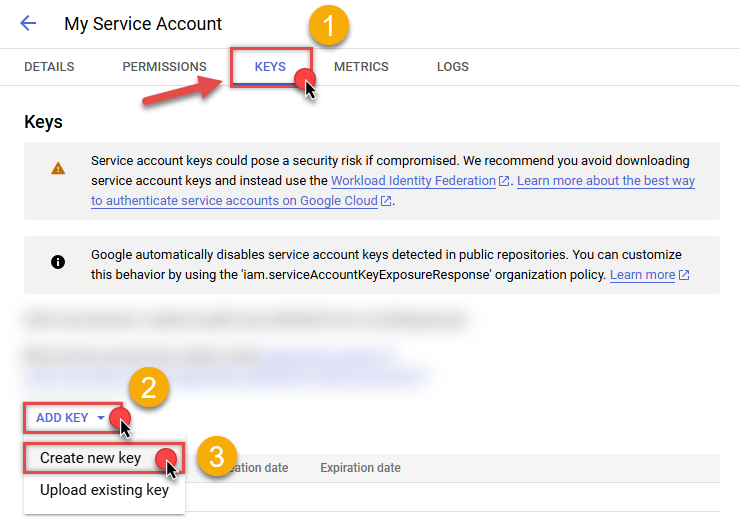

Continue by selecting KEYS tab, then press ADD KEY dropdown, and click Create new key menu item:

-

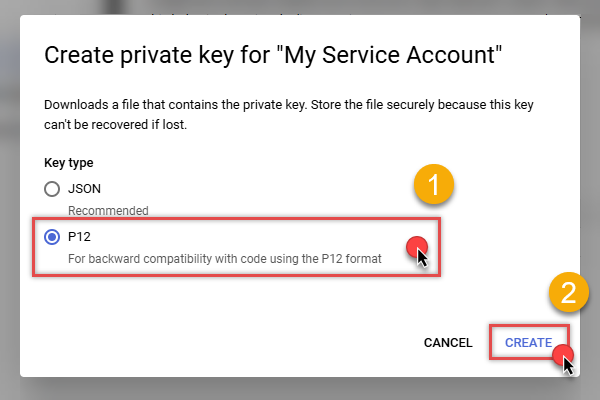

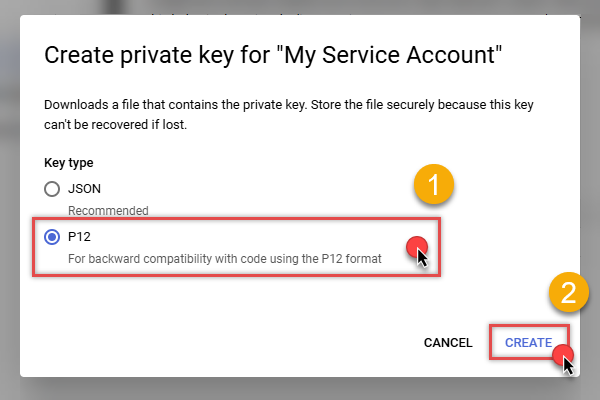

Finally, select JSON (Engine v19+) or P12 option and hit CREATE button:

- Key file downloads into your machine. We have all the data needed for authentication, let's proceed to the last step!

Step-5: Configure connection

- Now go to the SSIS package or ODBC data source and configure these fields in the Service Account authentication configuration:

- In the Service Account Email field, paste the service account email address you copied in the previous step.

- In the Service Account Private Key Path (i.e. *.json OR *.p12) field, enter the file path of the downloaded key file.

- Done! Now you are ready to use the Google BigQuery Connector!

API Connection Manager configuration

Just perform these simple steps to finish the authentication configuration:

- Set Authentication Type to Service Account (Using *.json OR *.p12 key file) [OAuth].

- Optional step. Modify the API Base URL if needed (in most cases the default will work).

- Fill in all the required parameters and set optional parameters if needed.

- Finally, click the OK button:

Connector: Google BigQuery

Authentication Type: Service Account (Using *.json OR *.p12 key file) [OAuth]

API Base URL: https://www.googleapis.com/bigquery/v2

Required Parameters:

- Service Account Email: Fill in the parameter...

- Service Account Private Key Path (i.e. *.json OR *.p12): Fill in the parameter...

- ProjectId: Fill in the parameter...

- DatasetId (Choose after ProjectId): Fill in the parameter...

Optional Parameters:

- Scope: https://www.googleapis.com/auth/bigquery https://www.googleapis.com/auth/bigquery.insertdata https://www.googleapis.com/auth/cloud-platform https://www.googleapis.com/auth/cloud-platform.read-only https://www.googleapis.com/auth/devstorage.full_control https://www.googleapis.com/auth/devstorage.read_only https://www.googleapis.com/auth/devstorage.read_write

- RetryMode: RetryWhenStatusCodeMatch

- RetryStatusCodeList: 429

- RetryCountMax: 5

- RetryMultiplyWaitTime: True

- Job Location

- Impersonate As (Enter Email Id)
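A service-account connection typically authenticates with Google's OAuth 2.0 JWT-bearer flow: the client builds a JWT whose iss claim is the Service Account Email, signs it with the private key from the key file, and exchanges it at https://oauth2.googleapis.com/token for an access token. The sketch below builds the unsigned JWT and the token request body with the Python standard library only. Assumptions: RS256 signing is omitted (it needs a crypto library such as cryptography or google-auth), P12 keys are not handled, and mapping the optional Impersonate As parameter to the JWT sub claim (Google's domain-wide delegation mechanism) is our guess at what the connector does.

```python
import base64
import json
import time
from typing import Optional
from urllib.parse import urlencode

TOKEN_URI = "https://oauth2.googleapis.com/token"


def b64url(data: bytes) -> str:
    """Base64url-encode without '=' padding, as the JWT format requires."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def load_key_file(path: str) -> dict:
    """Read a downloaded *.json key; it contains client_email and private_key."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def jwt_signing_input(client_email: str, scope: str,
                      impersonate: Optional[str] = None,
                      now: Optional[int] = None) -> str:
    """Return the unsigned 'header.claims' part of the JWT assertion."""
    iat = int(time.time()) if now is None else now
    header = {"alg": "RS256", "typ": "JWT"}
    claims = {
        "iss": client_email,   # the Service Account Email
        "scope": scope,        # space-separated OAuth scopes
        "aud": TOKEN_URI,
        "iat": iat,
        "exp": iat + 3600,     # Google accepts a lifetime of at most one hour
    }
    if impersonate:
        # Assumption: "Impersonate As" maps to the 'sub' claim (domain-wide delegation).
        claims["sub"] = impersonate
    return b64url(json.dumps(header).encode()) + "." + b64url(json.dumps(claims).encode())


def token_request_body(signed_jwt: str) -> bytes:
    """Form body to POST to TOKEN_URI once the JWT has been RS256-signed."""
    return urlencode({
        "grant_type": "urn:ietf:params:oauth:grant-type:jwt-bearer",
        "assertion": signed_jwt,
    }).encode("ascii")
```

In practice you would read `client_email` from `load_key_file(...)`, sign the string returned by `jwt_signing_input` with the key's `private_key`, append `"." + b64url(signature)`, and POST `token_request_body(...)`. No user interaction is involved, which is why this method suits automated packages.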

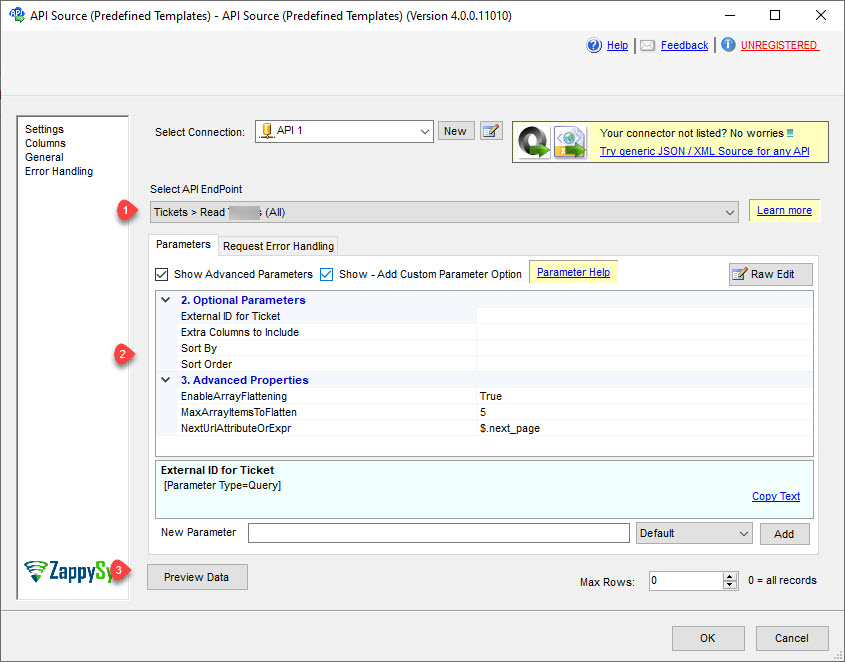

-

-

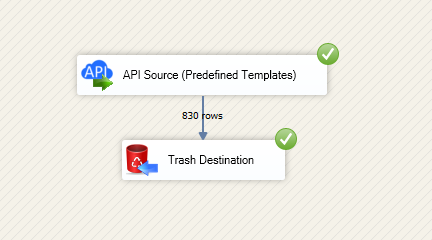

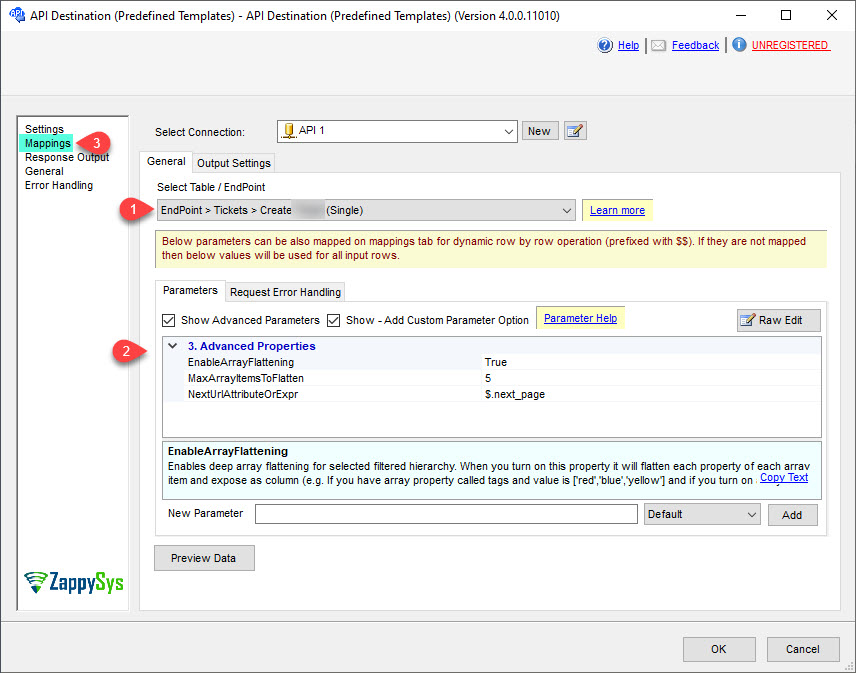

Select the desired endpoint, change/pass the properties values, and click on Preview Data button to make the API call.
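Behind Preview Data, the connector issues BigQuery REST API calls against the API Base URL. As an illustration, the sketch below reads table rows with the BigQuery tabledata.list method and follows its pageToken paging. It assumes you already hold an access token (wrapped in the hypothetical `fetch` callback) and know the table's column names, for example from Get Table Schema. The endpoint the connector actually calls for each action may differ.

```python
from typing import Callable, Iterator, Optional
from urllib.parse import quote, urlencode

BASE_URL = "https://www.googleapis.com/bigquery/v2"  # default API Base URL


def table_data_url(project: str, dataset: str, table: str,
                   page_token: Optional[str] = None, max_results: int = 1000) -> str:
    """URL for the tabledata.list REST method."""
    path = (f"{BASE_URL}/projects/{quote(project)}/datasets/{quote(dataset)}"
            f"/tables/{quote(table)}/data")
    params = {"maxResults": max_results}
    if page_token:
        params["pageToken"] = page_token
    return path + "?" + urlencode(params)


def read_table_rows(fetch: Callable[[str], dict], project: str, dataset: str,
                    table: str, columns: list[str]) -> Iterator[dict]:
    """Yield rows as dicts, following pageToken until the last page.

    `fetch` is any function that GETs a URL with a valid access token
    and returns the parsed JSON response body.
    """
    token = None
    while True:
        page = fetch(table_data_url(project, dataset, table, token))
        for row in page.get("rows", []):
            # BigQuery returns each row as {"f": [{"v": value}, ...]} in column order.
            yield {name: cell["v"] for name, cell in zip(columns, row["f"])}
        token = page.get("pageToken")
        if not token:
            break
```

Paging matters for large tables: each response carries at most one page of rows, and a missing pageToken marks the last page.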


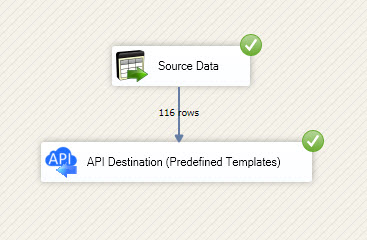

That's it! In just a few clicks we configured the call to Google BigQuery using the Google BigQuery Connector.

You can load the source data into your desired destination using the Upsert Destination, which supports SQL Server, PostgreSQL, and Amazon Redshift. We also offer other destinations such as CSV, Excel, Azure Table, Salesforce, and more. You can check out our SSIS PowerPack tasks and components for more options. (*In this example, the data is loaded into a Trash Destination.)

Write data to Google BigQuery using SSIS (Import data)

In this section we will learn how to configure and use the Google BigQuery Connector in the API Destination to write data to Google BigQuery.

Video tutorial

This video covers the following topics and more, so please watch carefully. After watching the video, follow the steps described in this article:

- How to download SSIS PowerPack for Google BigQuery integration in SSIS

- How to configure the connection for Google BigQuery

- How to write data to or look up data in Google BigQuery

- Features of the SSIS API Destination

- Using Google BigQuery Connector in SSIS

Step-by-step instructions

In the section above we learned how to read data; now we will learn how to configure Google BigQuery in the API Destination to POST data to Google BigQuery.

- Begin by opening Visual Studio and creating a new project.

- Select Integration Services Project, set an appropriate name and location for the project in the New Project window, and click OK.

In the new SSIS project screen you will find the following:

- SSIS Toolbox on the left sidebar

- Solution Explorer and Properties window on the right sidebar

- Control Flow, Data Flow, Event Handlers, and Package Explorer in tab windows

- Connection Manager window at the bottom

Note: If you don't see ZappySys SSIS PowerPack tasks or components in the SSIS Toolbox, please refer to this help link.

- Now drag and drop a Data Flow Task from the SSIS Toolbox. Double-click the Data Flow Task to open the Data Flow designer.

- Read the data from the source using any source component you like. You can even make an API call using the ZappySys JSON/XML/API Source and read data from there. In this example, we will use an OLE DB Source component to read real-time data from a SQL Server database.

-

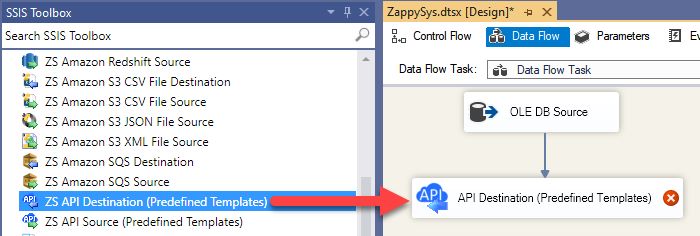

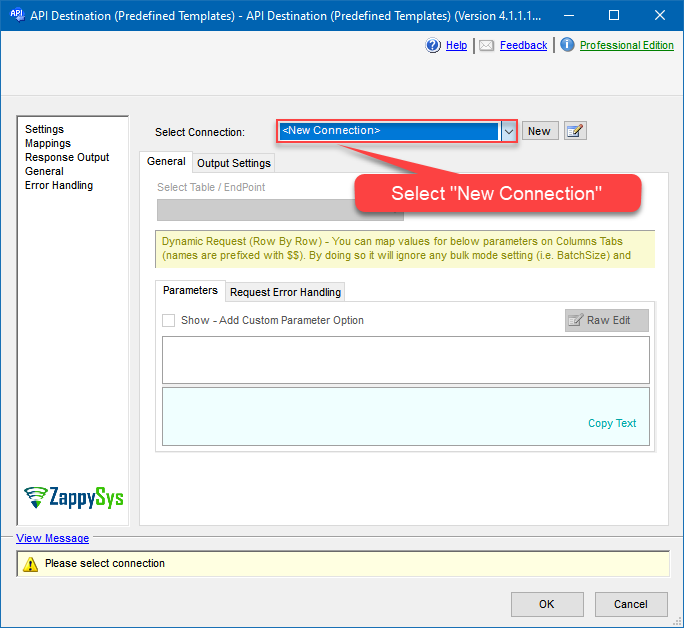

From the SSIS Toolbox drag and drop API Destination (Predefined Templates) on the Data Flow Designer surface and connect source component with it, and double click to edit it.

-

Select New Connection to create a new connection:

API Destination - Google BigQueryRead / write Google BigQuery data inside your app without coding using easy to use high performance API Connector

-

To configure the Google BigQuery connector, choose one of the following methods:

- Choose from Popular Connector List: Select a pre-installed service directly from the dropdown menu.

- Search Online: Use this to find and download a new connector file to your computer.

- Use Saved/Downloaded File: Once the file is downloaded, browse your local drive to load it into the configuration.

After that, just click Continue >>:

Google BigQuery

-

Proceed with selecting the desired Authentication Type. Then select API Base URL (in most cases default one is the right one). Finally, fill in all the required parameters and set optional parameters if needed. You may press a link Steps to Configure which will help set certain parameters. More info is available in Authentication section.


-

-

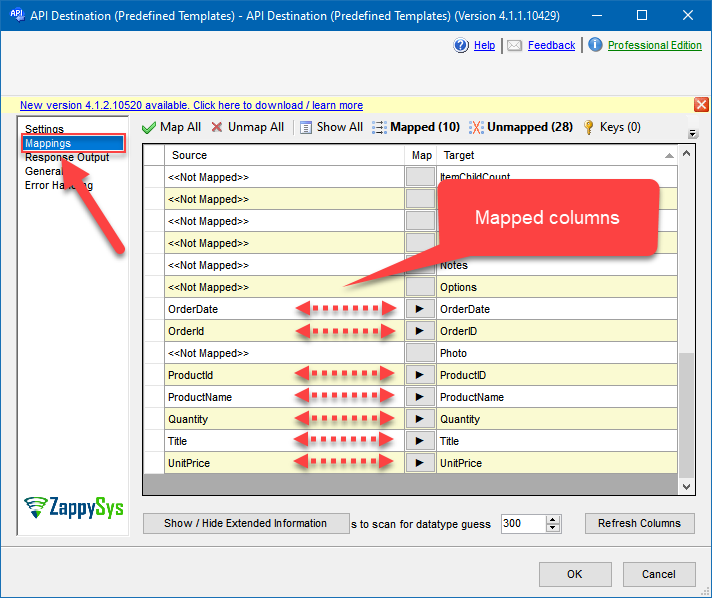

Select the desired endpoint, change/pass the properties values, and go to the Mappings tab to map the columns.

- Finally, map the desired columns:
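When rows are written, each mapped input row becomes a JSON object. The sketch below shows what a streaming insert with BigQuery's tabledata.insertAll REST method could look like; we assume, without confirmation from the connector documentation, that the Insert Table Data action works along these lines. The `key_column` argument and the batch size of 500 rows per request are illustrative choices, not connector parameters.

```python
import json
from typing import Iterable, Iterator

BASE_URL = "https://www.googleapis.com/bigquery/v2"


def insert_all_url(project: str, dataset: str, table: str) -> str:
    """Endpoint of the tabledata.insertAll REST method (streaming insert)."""
    return f"{BASE_URL}/projects/{project}/datasets/{dataset}/tables/{table}/insertAll"


def insert_all_bodies(rows: Iterable[dict], key_column: str,
                      batch_size: int = 500) -> Iterator[str]:
    """Yield JSON request bodies, each holding up to `batch_size` mapped rows."""
    batch = []
    for row in rows:
        # insertId enables best-effort de-duplication if a request is retried.
        batch.append({"insertId": str(row[key_column]), "json": row})
        if len(batch) == batch_size:
            yield json.dumps({"rows": batch})
            batch = []
    if batch:  # flush the final, partially filled batch
        yield json.dumps({"rows": batch})
```

Each body would be POSTed to `insert_all_url(...)` with a bearer token. Batching keeps the number of HTTP round trips low when the upstream source (for example, the OLE DB Source) delivers many rows.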


That's it! We successfully configured the POST API call. In a few clicks we configured the Google BigQuery API call using the ZappySys Google BigQuery Connector.

Load Google BigQuery data into SQL Server using Upsert Destination (Insert or Update)

Once you have configured the data source, you can load Google BigQuery data into SQL Server using Upsert Destination.

Upsert Destination can merge or synchronize source data with the target table.

It supports Microsoft SQL Server, PostgreSQL, and Redshift databases as targets.

Upsert Destination also supports very fast bulk upsert operations along with bulk delete.

Upsert operation - a database operation that performs INSERT or UPDATE SQL commands based on whether a record already exists in the target table.

Upsert Destination supports INSERT, UPDATE, and DELETE operations, so it is similar to SQL Server's MERGE command, except that it can be used directly in an SSIS package.

-

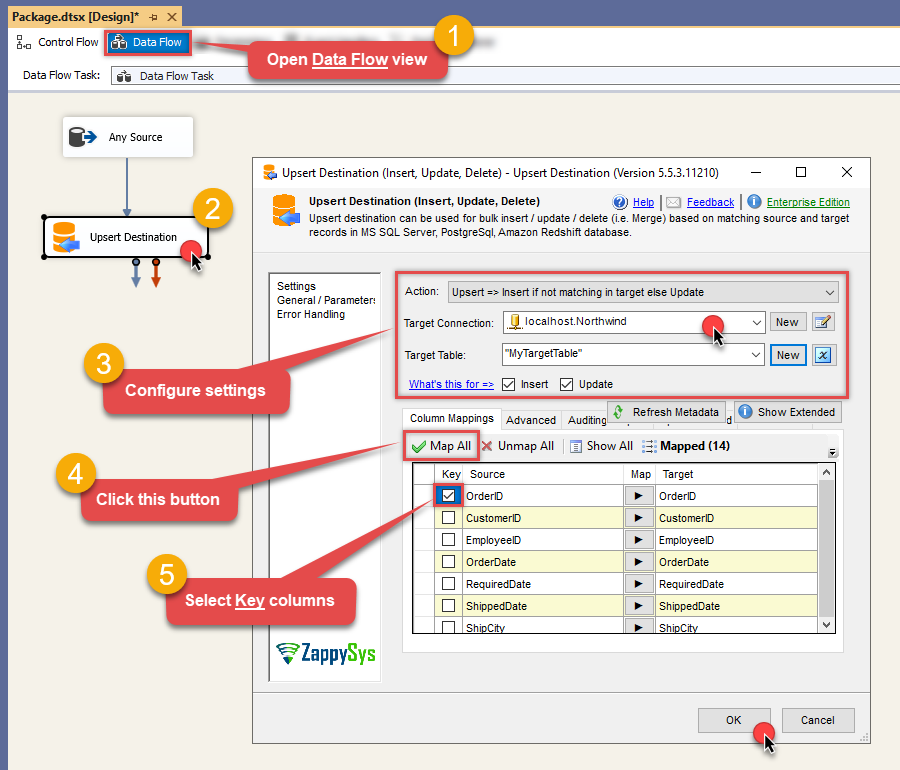

From the SSIS Toolbox drag-and-drop Upsert Destination component onto the Data Flow designer background.

-

Connect your SSIS source component to Upsert Destination.

-

Double-click on Upsert Destination component to open configuration window.

-

Start by selecting the Action from the list.

-

Next, select the desired target connection or create one by clicking <New [provider] Connection> menu item from the Target Connection dropdown.

-

Then select a table from the Target Table list or click New button to create a new table based on the source columns.

-

Continue by checking Insert and Update options according to your scenario (e.g. if Update option is unchecked, no updates will be made).

-

Finally, click Map All button to map all columns and then select the Key columns to match the columns on:

-

Click OK to save the configuration.

-

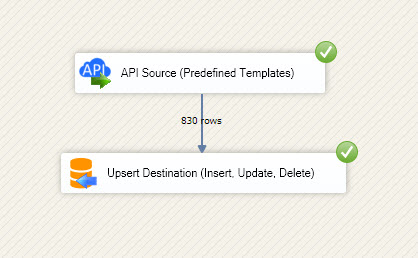

Run the package and Google BigQuery data will be merged with the target table in SQL Server, PostgreSQL, or Redshift:

-

Done!
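Conceptually, the Insert and Update options and the Key columns described above combine as in the in-memory sketch below. It only illustrates upsert semantics; Upsert Destination itself runs bulk SQL operations against the target database, and the function and argument names here are hypothetical.

```python
from typing import Iterable


def upsert(target: dict, source_rows: Iterable[dict], key_columns: list[str],
           do_insert: bool = True, do_update: bool = True) -> dict:
    """Merge source rows into `target`, a dict keyed by key-column tuples.

    Returns counts of inserted, updated, and skipped rows.
    """
    counts = {"inserted": 0, "updated": 0, "skipped": 0}
    for row in source_rows:
        key = tuple(row[c] for c in key_columns)  # match on the Key columns
        if key in target:
            if do_update:
                target[key] = {**target[key], **row}  # UPDATE the matched record
                counts["updated"] += 1
            else:
                counts["skipped"] += 1                # Update option unchecked
        elif do_insert:
            target[key] = dict(row)                   # INSERT a new record
            counts["inserted"] += 1
        else:
            counts["skipped"] += 1                    # Insert option unchecked
    return counts
```

This mirrors the note in the steps above: with the Update option unchecked, rows whose keys already exist are left untouched.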

Deploy and schedule SSIS package

After you finish creating the SSIS package, you will most likely want to deploy it to the SQL Server Catalog and run it periodically. Just follow the instructions in this article:

Running SSIS package in Azure Data Factory (ADF)

To use SSIS PowerPack in ADF, you must first prepare an Azure-SSIS Integration Runtime. Follow this link for detailed instructions:

Actions supported by Google BigQuery Connector

Learn how to perform common Google BigQuery actions directly in SSIS with these how-to guides:

- [Dynamic Endpoint]

- Create Dataset

- Delete Dataset

- Delete Table

- Get Query Schema (From SQL)

- Get Table Schema

- Insert Table Data

- List Datasets

- List Projects

- List Tables

- Post Dynamic Endpoint

- Read Data using SQL Query -OR- Execute Script (i.e. CREATE, SELECT, INSERT, UPDATE, DELETE)

- Read Table Rows

- Make Generic API Request

- Make Generic API Request (Bulk Write)

Centralized data access via Data Gateway

In some situations, you may need to provide Google BigQuery data access to multiple users or services. Configuring the data source on a Data Gateway creates a single, centralized connection point for this purpose.

This configuration provides two primary advantages:

- Centralized data access: the data source is configured once on the gateway, eliminating the need to set it up individually on each user's machine or application. This significantly simplifies the management process.

- Centralized access control: since all connections route through the gateway, access can be governed or revoked from a single location for all users.

| | Data Gateway | Local ODBC data source |
|---|---|---|
| Simple configuration | | |
| Installation | Single machine | Per machine |
| Connectivity | Local and remote | Local only |
| Connections limit | Limited by License | Unlimited |
| Central data access | Yes | No |
| Central access control | Yes | No |
| More flexible cost | | |

If you need any of these capabilities, you will have to create a data source in Data Gateway to connect to Google BigQuery, and then create an ODBC data source that SSIS uses to connect to Data Gateway.

Let's not wait and get going!

Creating Google BigQuery data source in Gateway

In this section we will create a data source for Google BigQuery in Data Gateway. Let's follow these steps to accomplish that:

-

Download and install ODBC PowerPack.

-

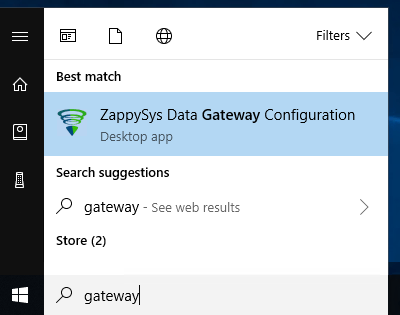

Search for

gatewayin Windows Start Menu and open ZappySys Data Gateway Configuration:

-

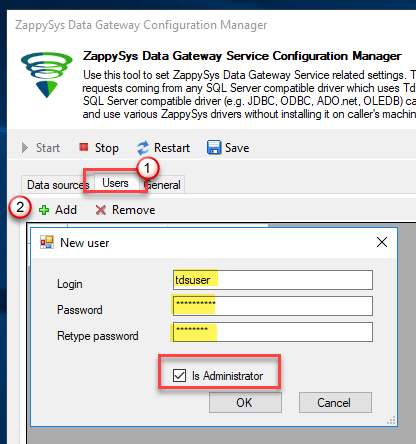

Go to Users tab and follow these steps to add a Data Gateway user:

- Click Add button

-

In Login field enter username, e.g.,

john - Then enter a Password

- Check Is Administrator checkbox

- Click OK to save

-

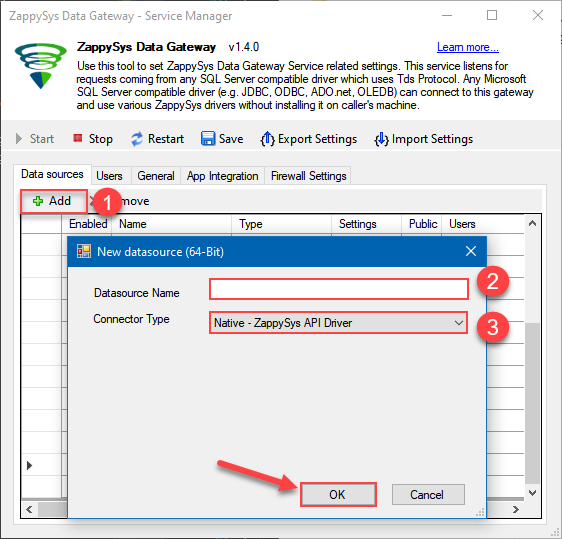

Now we are ready to add a data source:

- Click Add button

- Give Datasource a name (have it handy for later)

- Then select Native - ZappySys API Driver

- Finally, click OK

GoogleBigqueryDSNZappySys API Driver

-

When the ZappySys API Driver configuration window opens, configure the Data Source the same way you configured it in ODBC Data Sources (64-bit), in the beginning of this article.

-

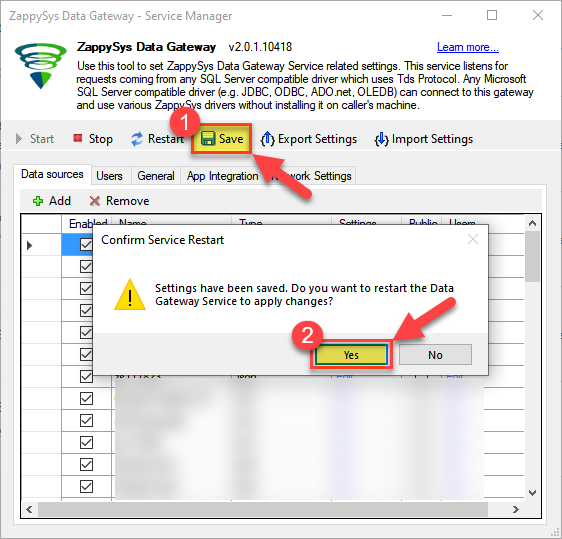

Very important step. Now, after creating or modifying the data source make sure you:

- Click the Save button to persist your changes.

- Hit Yes, once asked if you want to restart the Data Gateway service.

This will ensure all changes are properly applied:

Skipping this step may result in the new settings not taking effect and, therefore you will not be able to connect to the data source.

Skipping this step may result in the new settings not taking effect and, therefore you will not be able to connect to the data source.

Creating ODBC data source for Data Gateway

In this part we will create ODBC data source to connect to Data Gateway from SSIS. To achieve that, let's perform these steps:

-

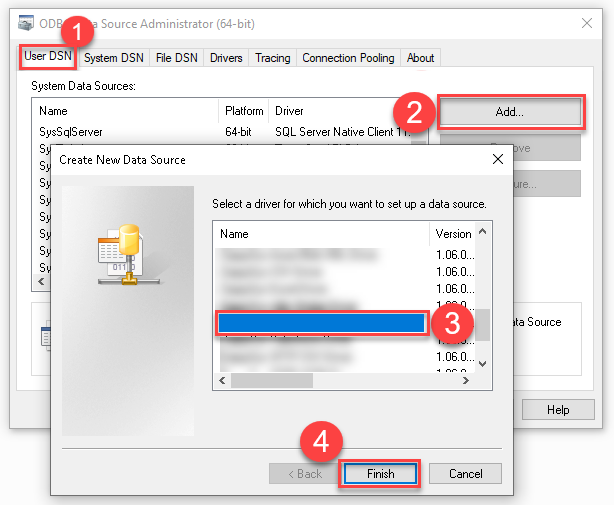

Open ODBC Data Sources (x64):

-

Create a User data source (User DSN) based on ODBC Driver 17 for SQL Server:

ODBC Driver 17 for SQL Server If you don't see ODBC Driver 17 for SQL Server driver in the list, choose a similar version driver.

If you don't see ODBC Driver 17 for SQL Server driver in the list, choose a similar version driver. -

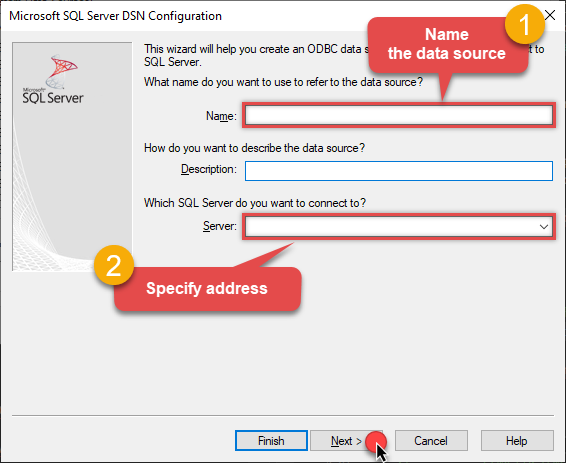

Then set a Name of the data source (e.g.

Gateway) and the address of the Data Gateway:GatewayDSNlocalhost,5000 Make sure you separate the hostname and port with a comma, e.g.

Make sure you separate the hostname and port with a comma, e.g.localhost,5000. -

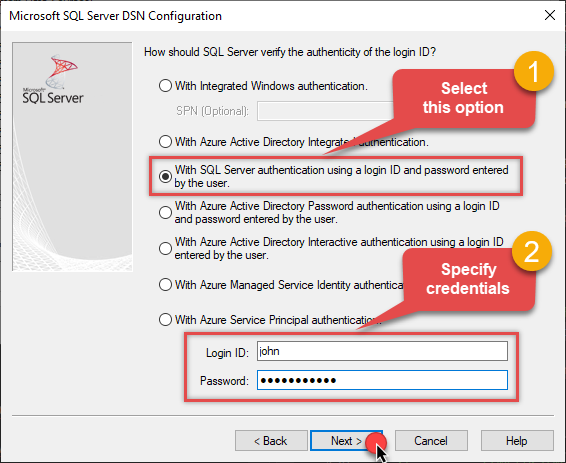

Proceed with authentication part:

- Select SQL Server authentication

-

In Login ID field enter the user name you used in Data Gateway, e.g.,

john - Set Password to the one you configured in Data Gateway

-

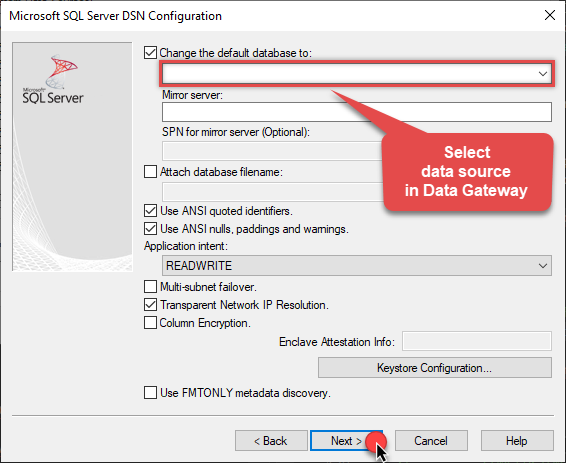

Then set the default database property to

GoogleBigqueryDSN(the one we used in Data Gateway):GoogleBigqueryDSN

-

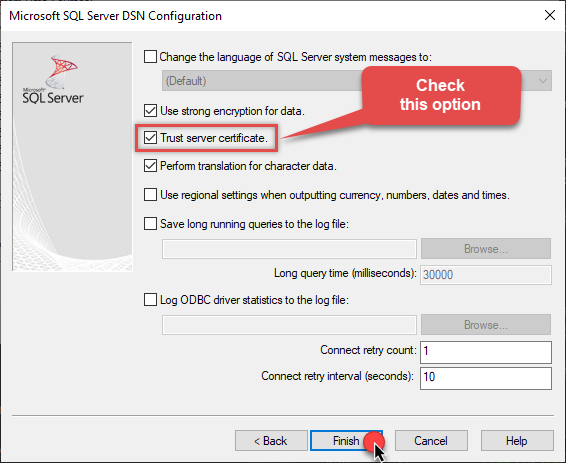

Continue by checking Trust server certificate option:

-

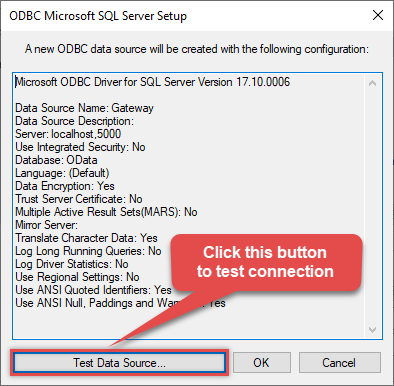

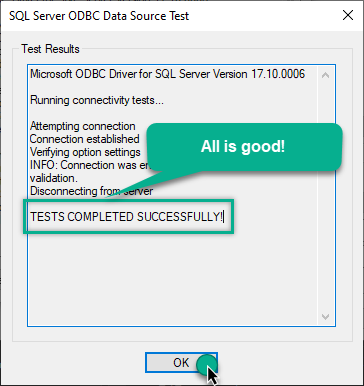

Once you do that, test the connection:

-

If connection is successful, everything is good:

-

Done!

We are ready to move to the final step. Let's do it!

Accessing data in SSIS via Data Gateway

Finally, we are ready to read data from Google BigQuery in SSIS via Data Gateway. Follow these final steps:

-

Go back to SSIS.

-

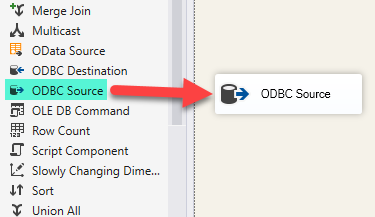

From the SSIS toolbox drag and drop ODBC Source on the dataflow designer surface:

-

Double-click on ODBC Source component to configure it.

-

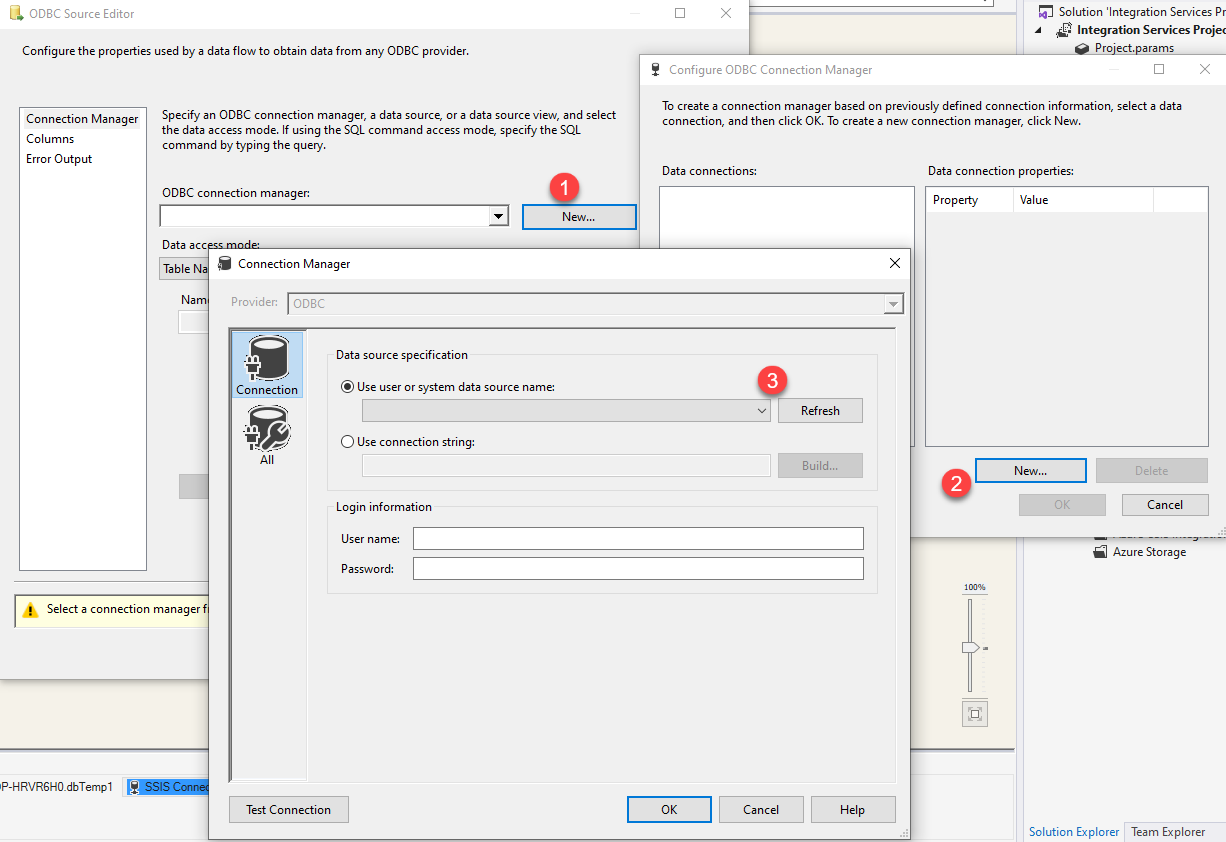

Click on New... button, it will open Configure ODBC Connection Manager window. Once it opens, click on New... button to create a new ODBC connection to Google BigQuery ODBC data source:

-

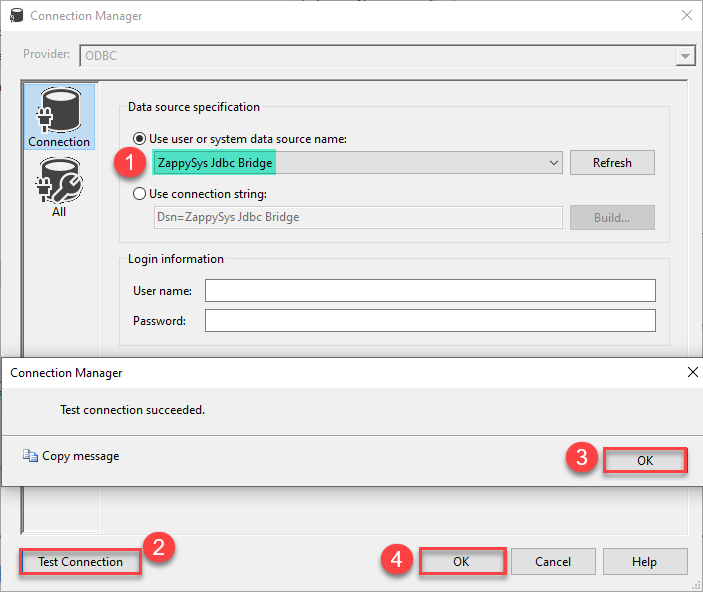

Then choose the data source from the list and click Test Connection button. If the connection test is successful, close the window, and then click OK button to finish the configuration:

GatewayDSN

-

Read the data the same way we discussed at the beginning of this article.

-

That's it!

Now you can connect to Google BigQuery data in SSIS via the Data Gateway.

john and your password.

Conclusion

In this article we showed you how to connect to Google BigQuery in SSIS and integrate data without any coding, saving you time and effort.

We encourage you to download Google BigQuery Connector for SSIS and see how easy it is to use it for yourself or your team.

If you have any questions, feel free to contact the ZappySys support team.
