SSIS Amazon Redshift Data Transfer TaskAmazon Redshift Data Transfer Task can be used for bulk import data to Amazon Redshift. Source data can be Flat File, ODBC, OLEDB or ADO.net source. This task uses multiple threads to upload data in parallel and optionally compress data files to speedup process. Features

|

|

| Download Help File Buy | View All Tasks Like This |

Drag and Drop ZS Amazon Redshift Data Transfer Task from SSIS Toolbox

Drag and Drop ZS Amazon Redshift Data Transfer Task from SSIS Toolbox

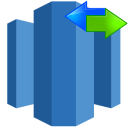

SSIS Redshift data transfer task UI – Source from file

SSIS Amazon AWS Redshift Data Transfer Task – Load from local file

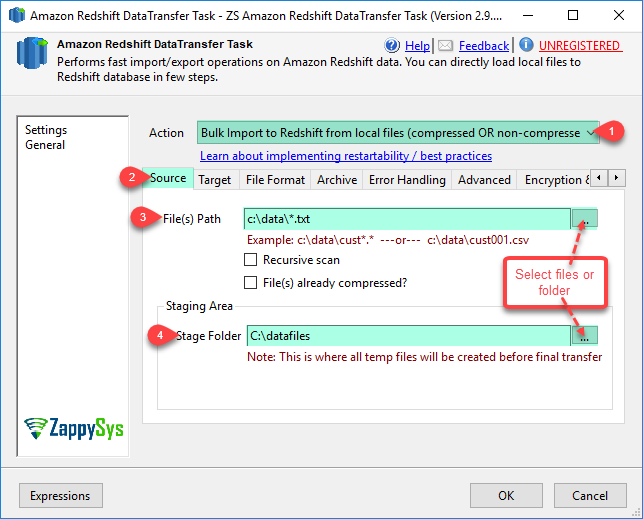

SSIS Redshift data transfer task UI – Source from relational database using sql query (i.e. ODBC, OLEDB, ADO.net)

SSIS Amazon AWS Redshift Data Transfer Task – SQL Server or MySQL Table

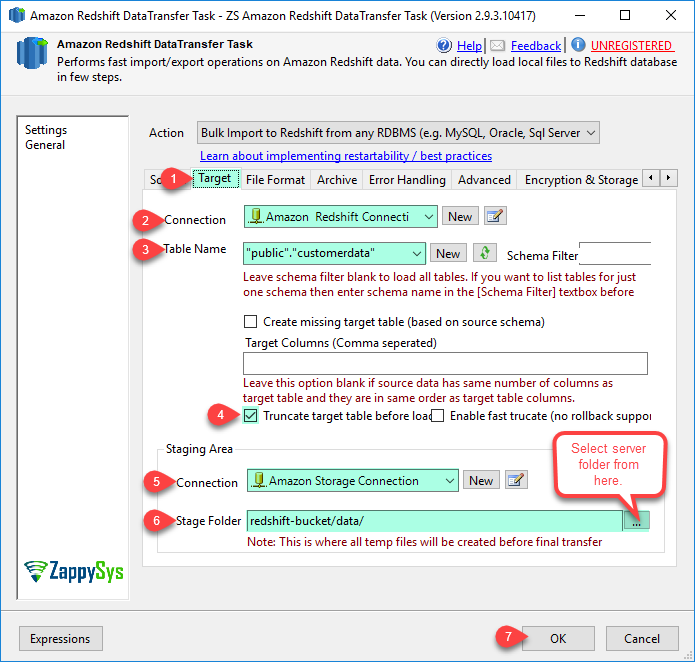

SSIS Redshift data transfer task UI – Target database

SSIS Amazon AWS Redshift Data Transfer Task

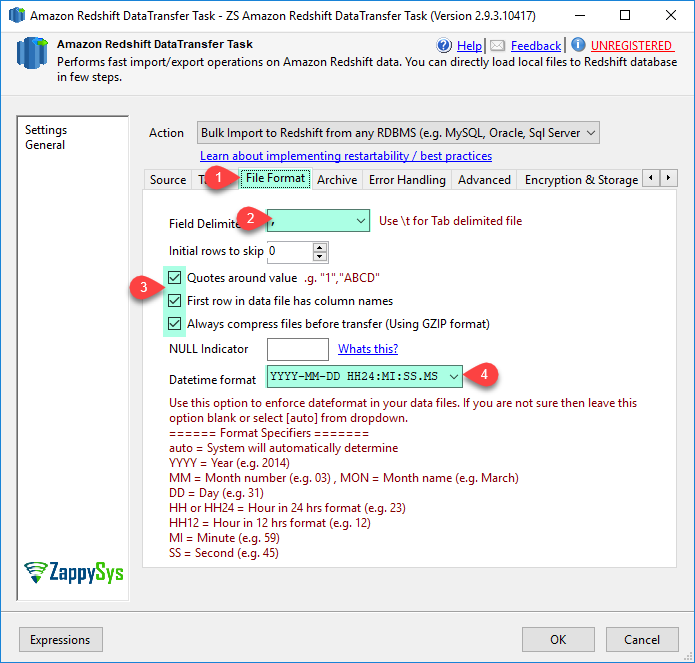

SSIS Redshift data transfer task UI – Select file format and datetime handling

SSIS Amazon AWS Redshift Data Transfer Task

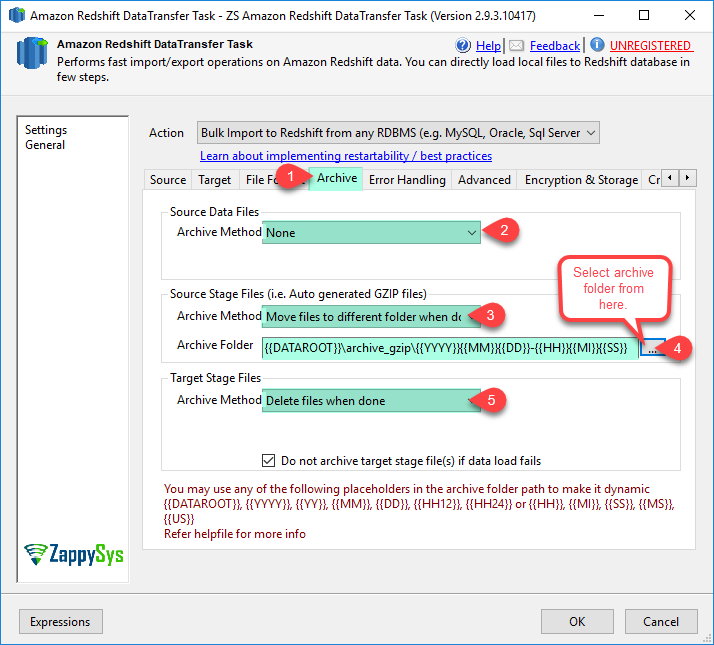

SSIS Redshift data transfer task UI – Select archive options for source, target and staging files (i.e. Delete, Move or None)

SSIS Amazon AWS Redshift Data Transfer Task

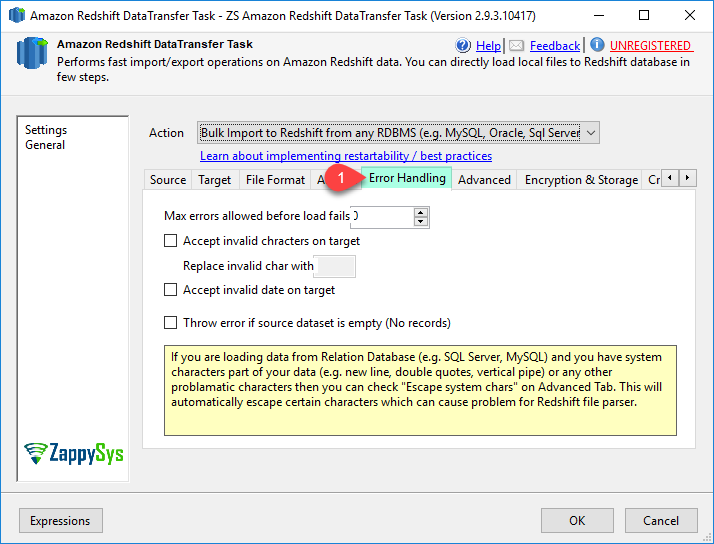

SSIS Redshift data transfer task UI – Error handling options

SSIS Amazon AWS Redshift Data Transfer Task

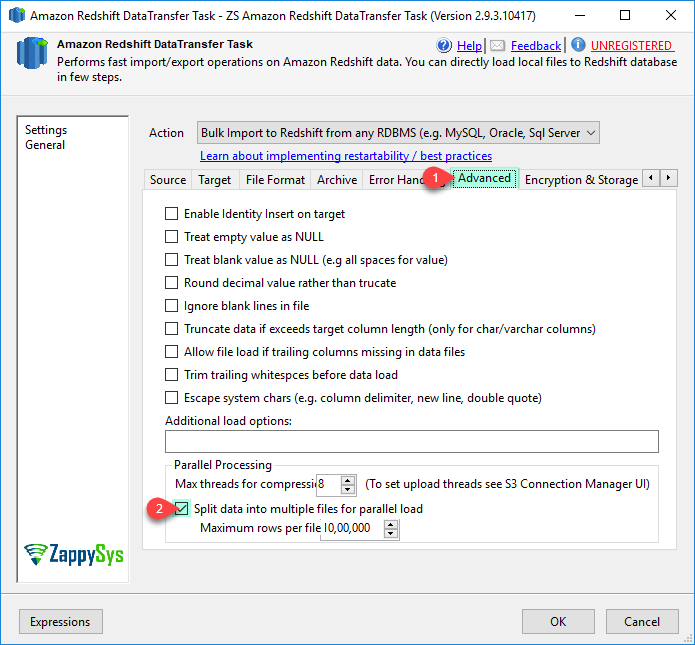

SSIS Redshift data transfer task UI – Advanced options for Redshift

SSIS Amazon AWS Redshift Data Transfer Task

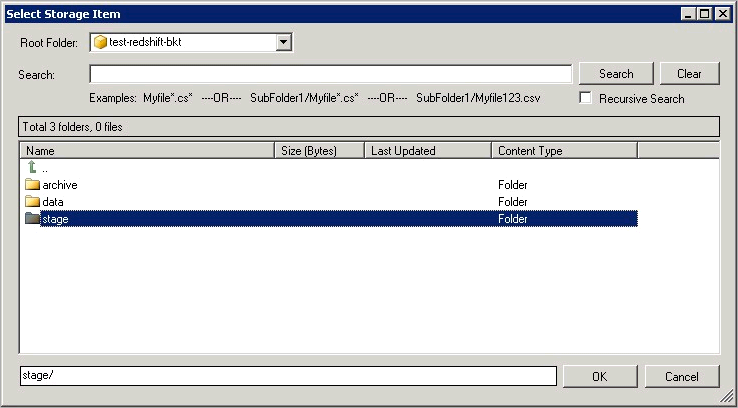

SSIS Redshift data transfer task UI – Select S3 bucket/folder location for staging area

SSIS Amazon AWS Redshift Data Transfer Task – From AWS S3 Staging

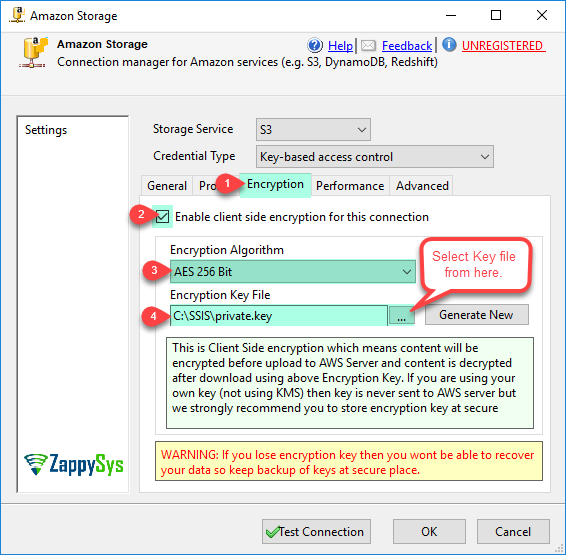

Load Encrypted files to Redshift using Client Side Encryption (Customer supplied encryption key)

Load Encrypted files to Redshift using Client Side Encryption

Articles / Tutorials

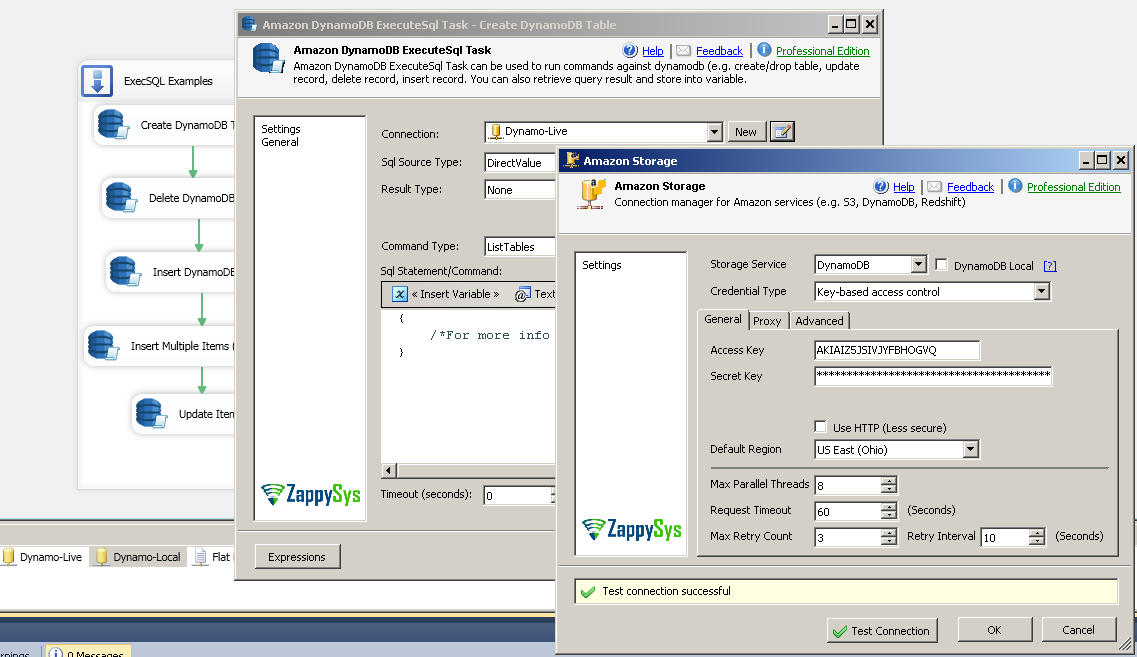

How to Read / Write DynamoDB data in SSIS (Query, Insert, Update, Delete)

How to Read / Write Amazon DynamoDB in SSISIntroduction In this article we will look at how to Read / Write Amazon DynamoDB in SSIS. ZappySys developed many AWS related components but in this article we will look at 3 Tasks/Components for DynamoDB Integration Scenarios (Read, Write, Update, Bulk Insert, Create / Drop Table etc.). We will discuss how to use SSIS DynamoDB Source […] |

Articles/Posts

MySQL to Redshift Data Load Using SSIS

Introduction – MySQL to Redshift Load Before we talk about data load from MySQL to Redshift using SSIS, first lets talk what is Amazon Redshift (or AWS Redshift). Amazon Redshift is a Cloud based Data warehouse service. This type of system also referred as MPP (Massively Parallel Processing). Amazon Redshift uses highly modified version of […]

SQL Server to Redshift Data Load Using SSIS

Introduction – SQL Server to Redshift Load Before we talk data load from SQL Server to Redshift using SSIS lets talk what is Amazon Redshift (or sometimes referred as AWS Redshift). Amazon Redshift is a Cloud based Data warehouse service. This type of system also referred as MPP (Massively Parallel Processing). Amazon Redshift uses highly […]

References

See Also

SSIS package "TestAll.dtsx" starting.

LICENSE Found: You are running Standard Edition. License for [Company=ZS,User=ZappySys].

Exporting data from sql query to ...[C:\datafiles]

Executing query - select top 500 ROW_NUMBER()Over(order by a.CustomerID) Id , a.*,b.*,c.OrderID,c.OrderDate,c.Freight from customers a,products b,orders c

Writing data to : C:\datafiles\public_customerdatabQCW_xcx0E6KdOIts4YZdQ.txt.001.part

Total 500 records written to : C:\datafiles\public_customerdatabQCW_xcx0E6KdOIts4YZdQ.txt.001.part

Export data completed. Took 0.115 seconds for 500 records, 1 files

Compressing data files...[Stage path: C:\datafiles]

Compress data files completed. Took 0.017 seconds

Uploading data files to S3 using total [32] threads...

Creating manifest file

Uploading Stream ...[119 bytes]

public_customerdatabQCW_xcx0E6KdOIts4YZdQ.manifest Transferred 100% (119 bytes)

New file created at bwtest-west2/public_customerdatabQCW_xcx0E6KdOIts4YZdQ.manifest, Size=119 bytes

Uploading C:\datafiles\public_customerdatabQCW_xcx0E6KdOIts4YZdQ.txt.001.part.gz ...[8.63 KB]

C:\datafiles\public_customerdatabQCW_xcx0E6KdOIts4YZdQ.txt.001.part.gz Transferred 100% (8837 bytes)

Deleted local file C:\datafiles\public_customerdatabQCW_xcx0E6KdOIts4YZdQ.txt.001.part.gz because DeleteWhenDone option is enabled

Transfer Summary:

Total items : 1

Successful : 1

Failed : 0

Canceled : 0

Skipped : 0

Time taken : 00:00:00.497

Max threads : 4

Transferred : 8.63 KB

Speed : 17.4 Kbps

Upload data files completed. Took 1.228 seconds

Truncating target table "public"."customerdata" because TruncateTargetBeforeLoad option is turned on

Starting redshift bulk insert...

Executing following command:

COPY "public"."customerdata" FROM 's3://bwtest-west2/public_customerdatabQCW_xcx0E6KdOIts4YZdQ.manifest' credentials 'aws_access_key_id=xxxxxxx;aws_secret_access_key=yyyyyyyy' GZIP DELIMITER '|' DATEFORMAT 'YYYY-MM-DD HH24:MI:SS' TIMEFORMAT 'YYYY-MM-DD HH24:MI:SS' IGNOREHEADER 1 MANIFEST REGION 'us-west-2'

Completed redshift bulk insert. Took 2.823 seconds

Loaded total 500 rows, 1 files, total 501 lines scanned

Archiving cloud storage stage files...

Successfully deleted total 2 item(s)

Deleted cloud storage files because Archive method is set to Delete files

Cloud storage stage files archived. Took 0.672 seconds

RedShift Table loaded: UploadData data took total 5.1182928 sec

==========================================================

Summary: Data Transfer took total .....5.12 sec.

Source file creation .........0.12 sec. 2.3% of total time

compress file ................0.02 sec. 0.4% of total time

Upload file ..................1.23 sec. 24.0% of total time

truncate target table ........0.14 sec. 2.7% of total time

Load data to target table ....2.94 sec. 57.4% of total time

Archive target stage files ...0.67 sec. 13.1% of total time

==========================================================

Total files uploaded .........1 (0.01 MB)

Actual files loaded ..........1

Total records loaded .........500

SSIS package "TestAll.dtsx" finished: Success.