| Property Name |

Description |

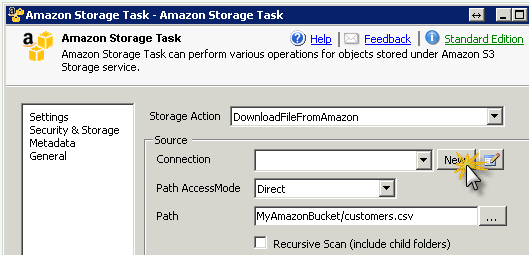

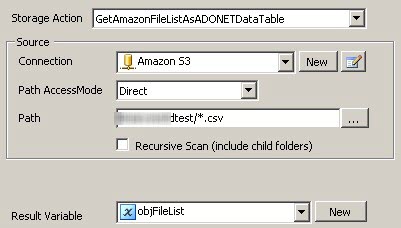

| StorageAction |

This property determines what action needs to be performed on Amazon S3 object(s).

Available Options

|

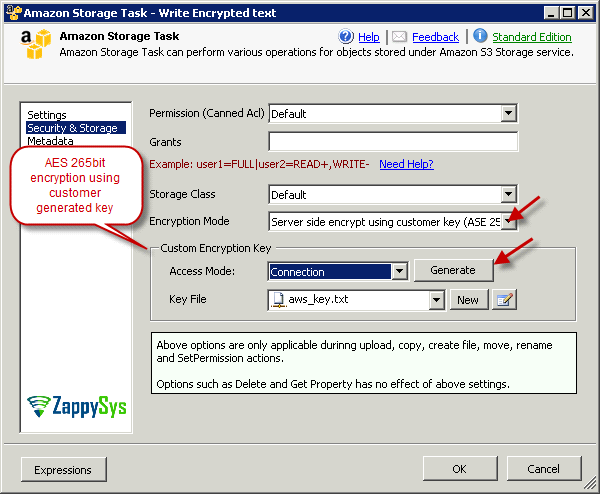

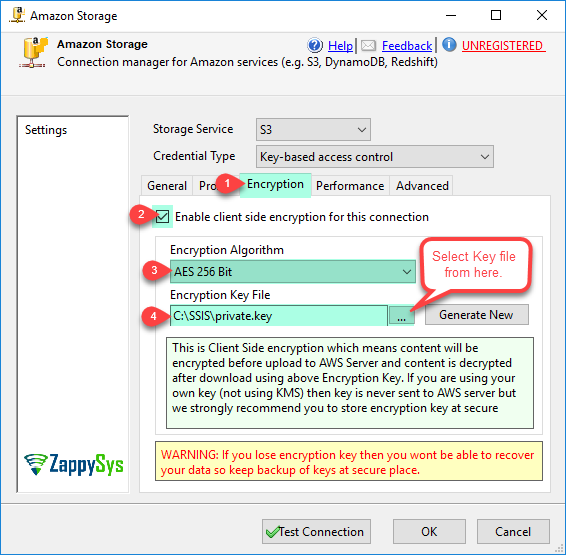

| Permission |

This property determines which permission is applied to new objects. Permissions are only applied when you perform Upload, Copy, Move, Rename or Create File actions.

Available Options

- Default : No permission is applied. Under the default option, all S3 files are created or updated with the NoACL setting (see next option).

- NoACL : Owner gets FULL_CONTROL. No one else has access rights (default).

- Private : Owner gets FULL_CONTROL. No one else has access rights (default).

- PublicRead : Owner gets FULL_CONTROL and the anonymous principal is granted READ access. If this policy is used on an object, it can be read from a browser with no authentication.

- PublicReadWrite : Owner gets FULL_CONTROL, the anonymous principal is granted READ and WRITE access. This can be a useful policy to apply to a bucket, but is generally not recommended.

- AuthenticatedRead : Owner gets FULL_CONTROL, and any principal authenticated as a registered Amazon S3 user is granted READ access.

- BucketOwnerRead : Object Owner gets FULL_CONTROL, Bucket Owner gets READ. This ACL applies only to objects and is equivalent to private when used with PUT Bucket. You use this ACL to let someone other than the bucket owner write content (get full control) in the bucket but still grant the bucket owner read access to the objects.

- BucketOwnerFullControl : Object Owner gets FULL_CONTROL, Bucket Owner gets FULL_CONTROL. This ACL applies only to objects and is equivalent to private when used with PUT Bucket. You use this ACL to let someone other than the bucket owner write content (get full control) in the bucket but still grant the bucket owner full rights over the objects.

- LogDeliveryWrite : The LogDelivery group gets WRITE and READ_ACP permissions on the bucket.

|

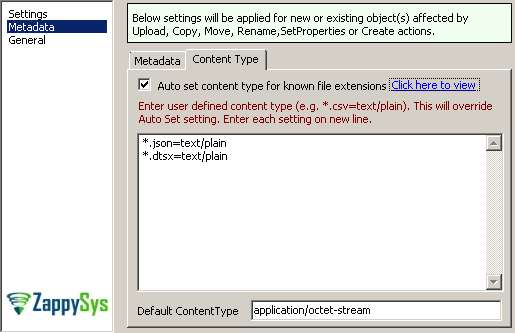

| AutoSetContentType |

When this option is True (default is False), the content type of the file is determined automatically during Upload, Create, Copy and Move operations. The default content type of any new file is application/octet-stream. |

| UserDefinedContentTypes |

If the content type is not set correctly for a certain file extension, you may provide your own list of content types. Use the following format, with each content type on a new line:

*.csv=text/plain

*.dtsx=text/plain

*.dtsconfig=text/plain

.....more entries

.....more entries

|

| DefaultContentType |

By default, every new file on Amazon S3 is set to content type application/octet-stream. If this is not the default content type you want, you may provide UserDefinedContentTypes or set this property to your own content type. The system sets the content type in the following order:

1. Check if UserDefinedContentTypes is set (if the extension of the file being processed matches an entry in the user-defined content type list, use that content type).

2. Check if AutoSetContentType is set (if True, look up the predefined list and set the file content type).

3. Check if DefaultContentType is set (if it is set, use it for the file being processed).

|
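The lookup order above can be sketched as follows. This is a minimal illustration and not the task's actual implementation: the function names are hypothetical, Python's `mimetypes` module stands in for the task's predefined list, and falling through to the next step when a lookup finds nothing is an assumption.

```python
import fnmatch
import mimetypes


def parse_user_defined(text):
    """Parse 'pattern=content/type' lines into (pattern, type) pairs."""
    pairs = []
    for line in text.splitlines():
        line = line.strip()
        if not line or "=" not in line:
            continue  # skip blank or malformed lines
        pattern, content_type = line.split("=", 1)
        pairs.append((pattern.strip().lower(), content_type.strip()))
    return pairs


def resolve_content_type(file_name, user_defined="", auto_set=False, default_type=""):
    """Hypothetical helper mirroring the documented three-step order."""
    name = file_name.lower()
    # 1. UserDefinedContentTypes: first matching pattern wins.
    for pattern, content_type in parse_user_defined(user_defined):
        if fnmatch.fnmatchcase(name, pattern):
            return content_type
    # 2. AutoSetContentType: predefined list (stand-in: mimetypes).
    if auto_set:
        guessed, _ = mimetypes.guess_type(name)
        if guessed:
            return guessed
    # 3. DefaultContentType, if one was provided.
    if default_type:
        return default_type
    # Documented fallback for any new file.
    return "application/octet-stream"


user_list = "*.csv=text/plain\n*.dtsx=text/plain\n*.dtsconfig=text/plain"
csv_type = resolve_content_type("report.csv", user_defined=user_list)
```

Here `csv_type` comes from the user-defined list, so neither the predefined lookup nor the default is consulted for `.csv` files.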

| ThrowErrorIfSourceNotFound |

If this option is set to True (default is True), an exception is thrown when the source path is not found on Amazon S3. If this option is False, no error is thrown when the file is not found. |

| DeleteSourceWhenDone |

If this option is set to True (default is False), the source file is deleted after a successful upload or download operation. This option is applicable only to Upload and Download actions. |

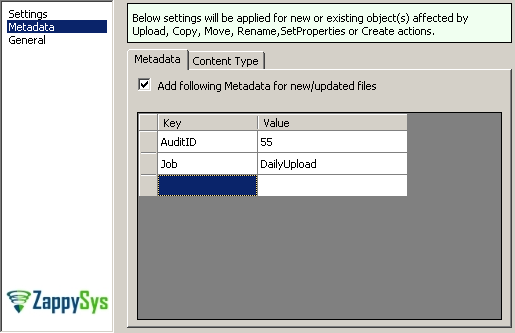

| MetadataKeyValue |

For any new file, you may specify metadata as Key/Value pairs on the Metadata tab of the task UI. To set it from an expression, use the following syntax for multiple Key/Value pairs of metadata. This option is ignored if EnableMetadataUpdate=False (see next option).

<MetaData><User>SamW</User><Host>BW001-DEV</Host></MetaData>

|
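If the metadata string is generated outside the task (for example by a script that feeds an expression variable), it could be built along these lines. This is a sketch only: `build_metadata_xml` is a hypothetical helper, and it assumes each key is a valid XML element name.

```python
import xml.etree.ElementTree as ET


def build_metadata_xml(pairs):
    """Build a <MetaData> string with one child element per key/value pair."""
    root = ET.Element("MetaData")
    for key, value in pairs.items():
        # Each key becomes an element name; the value becomes its text.
        ET.SubElement(root, key).text = value
    # encoding="unicode" returns a str without an XML declaration.
    return ET.tostring(root, encoding="unicode")


metadata_value = build_metadata_xml({"User": "SamW", "Host": "BW001-DEV"})
```

Building the string through an XML library rather than by concatenation means characters such as `&` in a value are escaped automatically.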

| EnableMetadataUpdate |

If this option is True (default is False), the MetadataKeyValue property is processed (see previous option). |

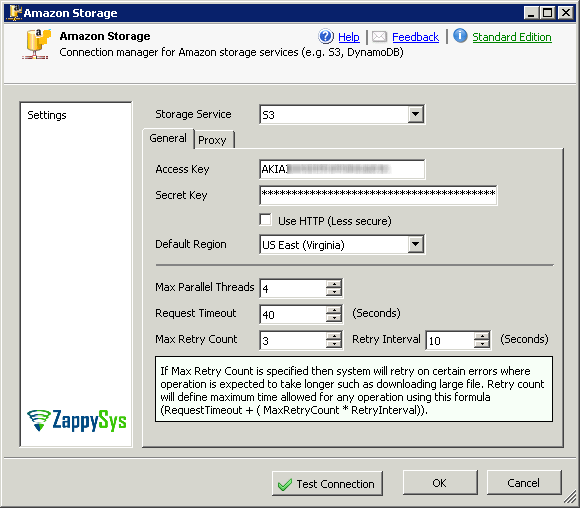

| SourceStorageConnection |

Connection Name for Source S3 Connection (Not applicable for UploadFileToAmazon and CreateNewAmazonFile operations) |

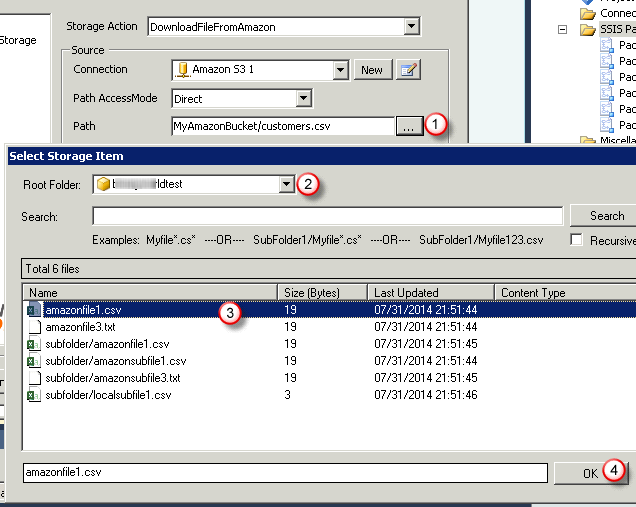

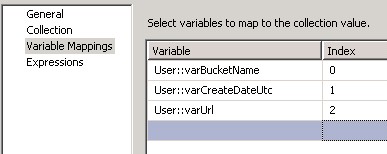

| SourcePathAccessMode |

Specifies how source path will be provided

Available Options

- Direct (default) : Direct value is provided.

- Variable : Value is read from specified variable at runtime.

- Connection : Value is read from path specified by file connection. This option is only applicable when Source is local File system.

|

| SourcePathValue |

Direct static value (only applicable when SourcePathAccessMode=Direct) |

| SourcePathVariable |

Variable Name (only applicable when SourcePathAccessMode=Variable) |

| SourcePathConnection |

Connection Name or ID (only applicable when SourcePathAccessMode=Connection) |

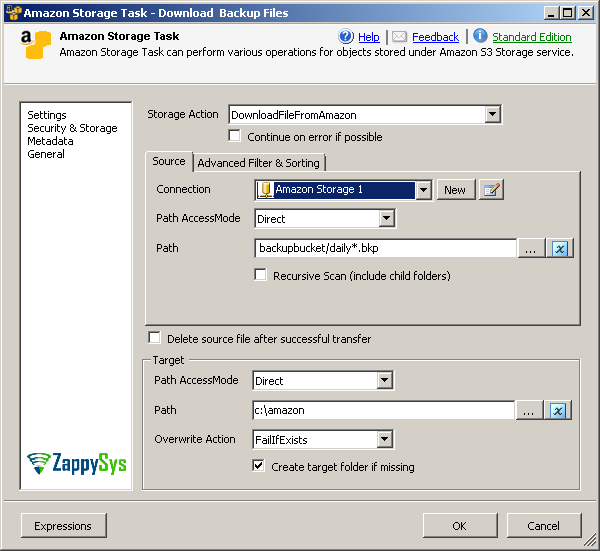

| Recursive |

Specifies whether the source path pattern should also scan subfolders (default False) |

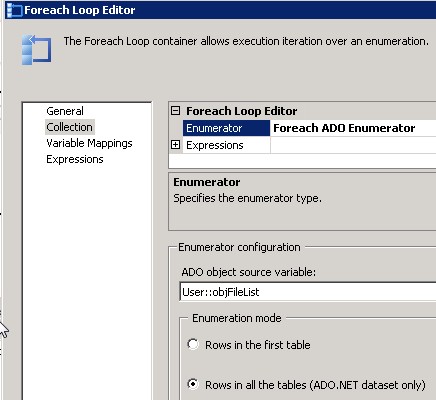

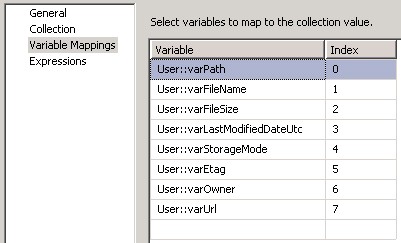

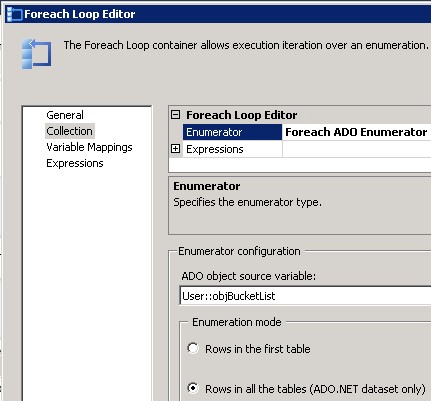

| ResultVariable |

Variable name which will hold result from S3 Action (default false) |

| TargetStorageConnection |

Connection Name for target S3 Connection (Only applicable for UploadFileToAmazon, CopyAmazonFileToAmazon, MoveAmazonFileToAmazon and CreateNewAmazonFile operations) |

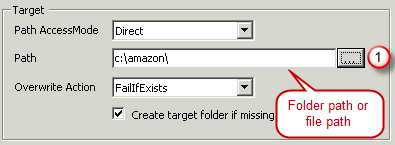

| TargetPathAccessMode |

Specifies how target path will be provided

Available Options

- Direct (default) : Direct value is provided.

- Variable : Value is read from specified variable at runtime.

- Connection : Value is read from path specified by file connection. This option is only applicable when target is local File system.

|

| TargetPathValue |

Direct static value (only applicable when TargetPathAccessMode=Direct) |

| TargetPathConnection |

Connection Name or ID which points to target file path (only applicable when TargetPathAccessMode=Connection) |

| TargetPathVariable |

Variable Name (only applicable when TargetPathAccessMode=Variable) |

| OverWriteOption |

Specifies the action to take when the target file already exists

Available Options

- Direct (default) : Direct value is provided.

- Variable : Value is read from specified variable at runtime.

- Connection : Value is read from path specified by file connection. This option is only applicable when target is local File system.

|

| CreateMissingTargetFolder |

Creates the target folder if it is missing (default True). This option is applicable to DownloadAmazonFileToLocal. |

| ExcludeRegXPattern |

Regular expression pattern to exclude items from the selection. For example, if you apply the MyFile*.* filter to the source path and set ExcludeRegXPattern=(.msi|.exe), all files with a matching name are included except *.msi and *.exe files. |

| IncludeRegXPattern |

Regular expression pattern to include items in the selection. For example, if you apply the MyFile*.* filter to the source path and set IncludeRegXPattern=(.txt|.csv), only txt and csv files with a matching name pattern are included. |
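The interaction between the source path wildcard and the two regular expression properties can be pictured with the sketch below. It is an illustration under assumptions: `select_files` is a hypothetical helper, and applying the patterns as a case-insensitive search over the file name is a guess at the task's behaviour. Because an unescaped `.` in a regular expression matches any character, the sketch uses the stricter forms `\.(msi|exe)$` and `\.(txt|csv)$`.

```python
import fnmatch
import re


def select_files(names, wildcard, include_regex=None, exclude_regex=None):
    """Apply the wildcard first, then the include and exclude patterns."""
    selected = []
    for name in names:
        # Source path filter such as MyFile*.* (compared case-insensitively).
        if not fnmatch.fnmatchcase(name.lower(), wildcard.lower()):
            continue
        # Include pattern: keep only names that match it.
        if include_regex and not re.search(include_regex, name, re.IGNORECASE):
            continue
        # Exclude pattern: drop names that match it.
        if exclude_regex and re.search(exclude_regex, name, re.IGNORECASE):
            continue
        selected.append(name)
    return selected


files = ["MyFile1.txt", "MyFile2.csv", "MyFile3.msi", "MyFile4.exe", "Other.txt"]
without_installers = select_files(files, "MyFile*.*", exclude_regex=r"\.(msi|exe)$")
only_text = select_files(files, "MyFile*.*", include_regex=r"\.(txt|csv)$")
```

With this sample list both calls keep `MyFile1.txt` and `MyFile2.csv`; `Other.txt` is dropped by the wildcard before either pattern is evaluated.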

| SortBy |

Property by which you want to sort result

Available Options

| Option |

Description |

| Size |

File Size in Bytes |

| LastModifiedDate |

File Last Modified DateTime |

| CreationDate |

File Creation DateTime |

| AgeInDays |

File Age in Days |

| LastEditInDays |

Last Edit in Days |

| Content |

File Content |

| Exists |

File Exists Flag |

| FileCount |

File Count |

| FolderPath |

File Directory Path |

| FilePath |

File Path |

| FileName |

File Name |

| FileExtension |

File Extension |

| FileEncoding |

File Encoding |

|

| SortDirection |

Sort order (e.g. Ascending or Descending)

Available Options

| Option |

Description |

| Asc |

Asc |

| Desc |

Desc |

|

| WhereClause |

Where clause expression to filter items. (e.g. Size>100 and Extension IN ('.txt','.csv') ) |

| EnableSort |

Sort items by specified attribute |

| MaxItems |

Maximum items to return (e.g. TOP) |
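WhereClause, EnableSort/SortBy/SortDirection and MaxItems together behave much like a filter, an ORDER BY and a TOP clause. The sketch below shows that combination over an in-memory list. The order of operations (filter, then sort, then limit), the `query_items` helper, the sample items, and treating `max_items=0` as "no limit" are all assumptions made for illustration, not documented behaviour.

```python
def query_items(items, where=None, sort_by=None, descending=False, max_items=0):
    """Filter, optionally sort, then keep at most max_items rows."""
    # WhereClause stand-in: a Python predicate instead of an expression string.
    rows = [item for item in items if where is None or where(item)]
    # EnableSort + SortBy + SortDirection.
    if sort_by:
        rows.sort(key=lambda item: item[sort_by], reverse=descending)
    # MaxItems: 0 means "no limit" in this sketch (an assumption).
    if max_items > 0:
        rows = rows[:max_items]
    return rows


items = [
    {"FileName": "a.txt", "FileExtension": ".txt", "Size": 250},
    {"FileName": "b.csv", "FileExtension": ".csv", "Size": 90},
    {"FileName": "c.csv", "FileExtension": ".csv", "Size": 400},
    {"FileName": "d.exe", "FileExtension": ".exe", "Size": 900},
]


def big_text_files(item):
    """Rough equivalent of: Size>100 and Extension IN ('.txt','.csv')."""
    return item["Size"] > 100 and item["FileExtension"] in (".txt", ".csv")


largest = query_items(items, where=big_text_files, sort_by="Size",
                      descending=True, max_items=1)
```

In this example `largest` holds the single biggest text or CSV file over 100 bytes, which is the kind of "top N by attribute" selection these properties are meant to express.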

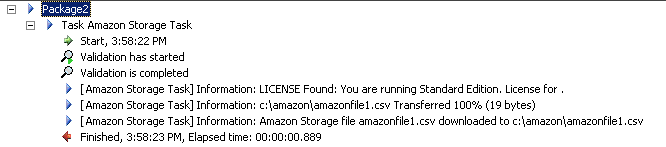

| ContinueOnError |

This option prevents task failure on errors such as a failed transfer of one or more files or a delete operation that failed for a few files.

|

| LoggingMode |

Determines the logging detail level. Available options are Normal, Medium, Detailed and Debugging.

|