Amazon S3 ODBC Driver (for CSV Files)Amazon S3 ODBC Driver for CSV files can be used to read delimited files (e.g. CSV / TSV ) stored in AWS S3 Buckets. Using this driver you can easily integrate AWS S3 data inside SQL Server (T-SQL) or your BI / ETL / Reporting Tools / Programming Languages. Write familiar SQL queries to read data without any coding effort. This driver supports latest security standards, and optimized for large data files. It also supports reading compressed files (e.g. GZip /Zip). This driver is using same high performance data processing engine which was originally developed for CSV Connector in SSIS PowerPack. ODBC PowerPack and SSIS PowerPack, both products share many UI elements and concepts. We wrote many articles to explain various features in one product but concepts are mostly same in both products so hope you can reuse steps explained in different articles even though screenshots /steps may be slightly different. Feature Summary

|

|

| Download Help File Buy | View All Drivers |

Featured Articles

|

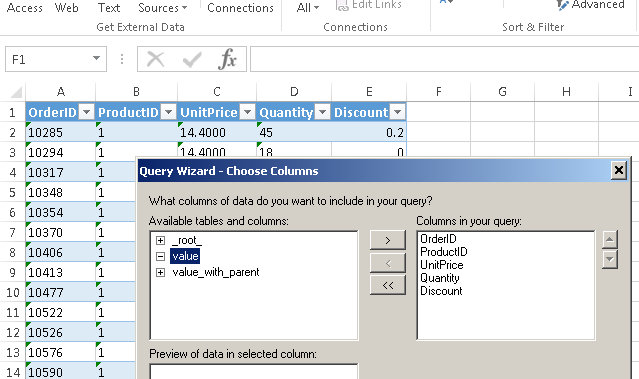

Amazon S3 CSV ODBC Driver UI

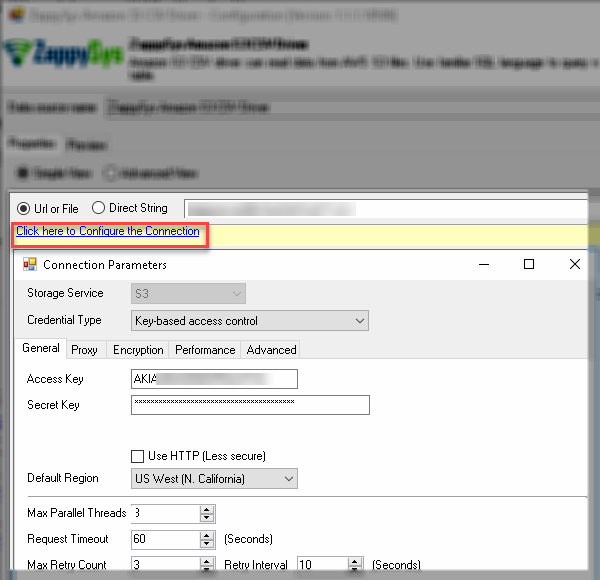

Configure Amazon S3 Connection – Amazon S3 ODBC Driver for CSV Files

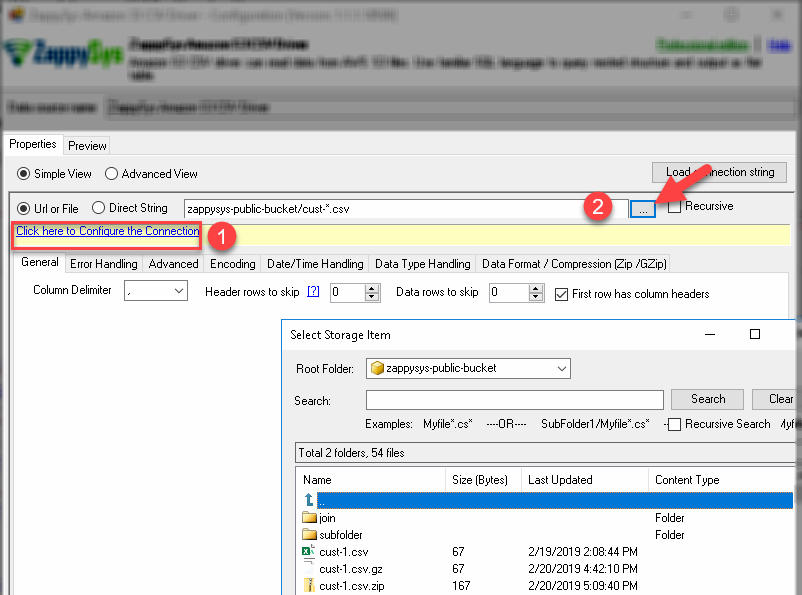

Browse File(s) – Amazon S3 File Selection

Integration Scenarios (Reporting / ETL / BI / Programming)

ZappySys ODBC Drivers built using ODBC standard which is widely adopted by industry for a long time. Which mean the majority of BI Tools / Database Engines / ETL Tools already there will support native / 3rd party ODBC Drivers. Below is the small list of most popular tools / programming languages our Drivers support. If your tool / programming language doesn’t appear in the below list, which means we have not documented use case but as long as your tool supports ODBC Standard, our drivers should work fine.

ZappySys Drivers for REST API, JSON, XML – Integrate with Power BI, Tableau, QlikView, QlikSense, Informatica PowerCenter, Talend, SQL Server, SSIS, SSAS, SSRS, Visual Studio / WinForm / WCF, Python, C#, JAVA, VB.net, PHP. PowerShell

|

BI / Reporting Tools |

|

ETL Tools |

|

|

Programming Languages |

|

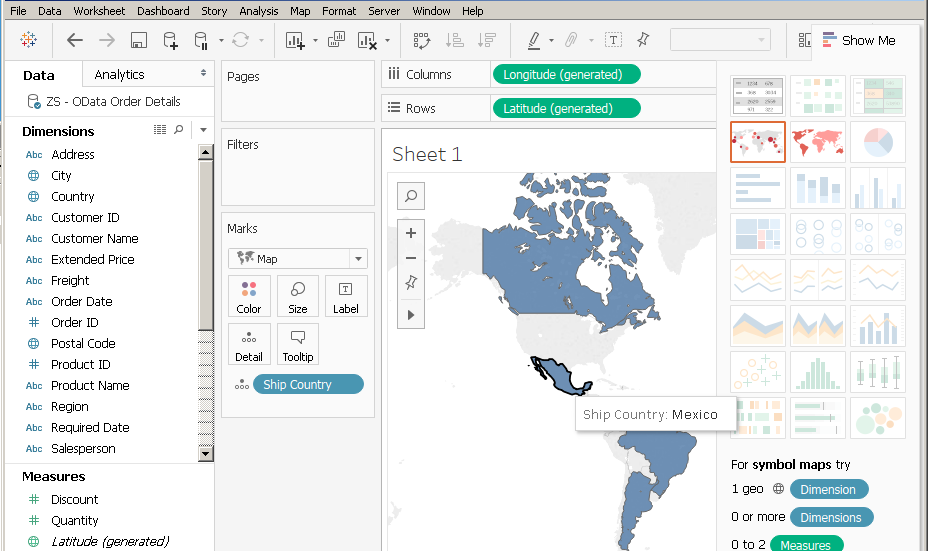

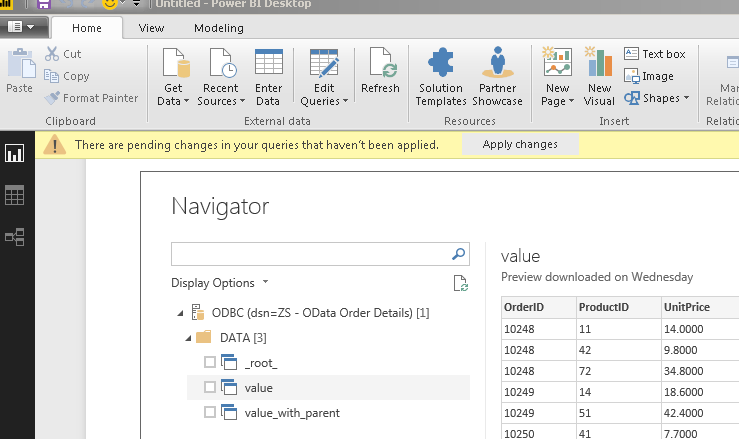

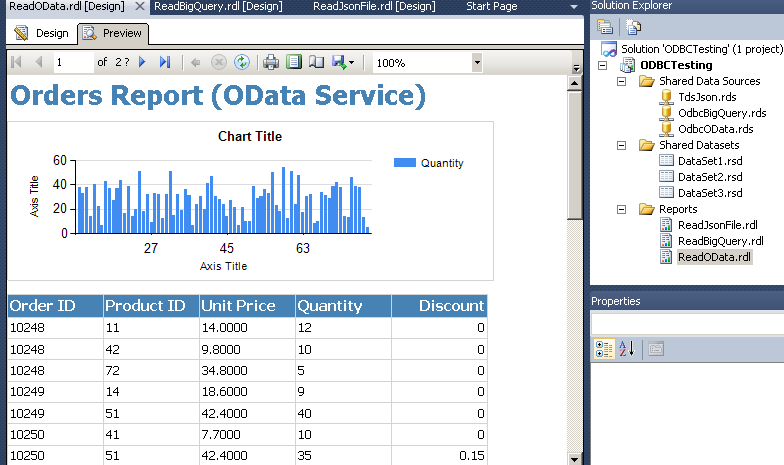

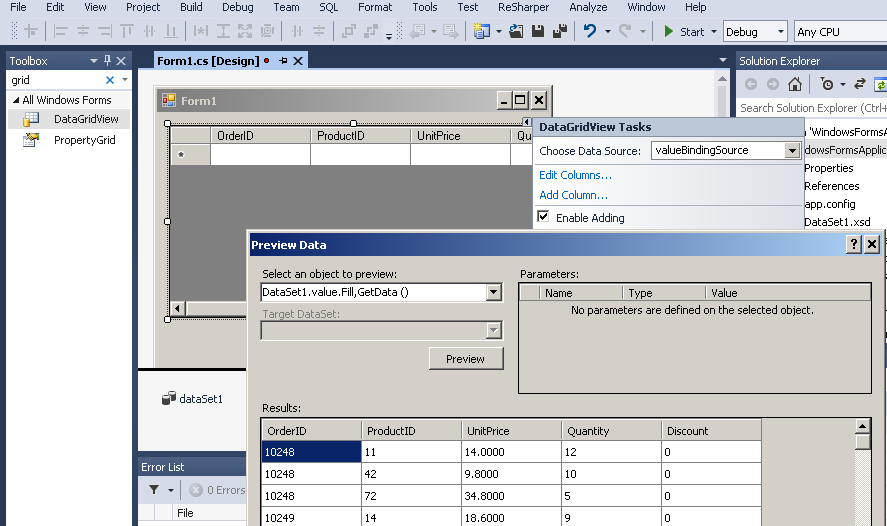

ODBC Integration Screenshots in various tools

SQL Query Examples – Amazon S3 ODBC Driver for CSV Files

/*--------- Basic Read (API, File, Embedded) Query Single File ---------*/ SELECT * FROM $ WITH (SRC='zappysys-public-bucket/cust-1.csv')

/*--------- Basic Read (API, File, Embedded) Query Multiple Files ---------*/ SELECT * FROM $ WITH ( SRC='zappysys-public-bucket/cust*-?.csv' --,RECURSIVE='True' --Include files from sub folder )

/*--------- URL JOIN Read unstructured data and pass to API call ---------*/ SELECT c.title,o.url,o.data FROM $ WITH ( Src='http://google.com' ,EnableRawOutputModeSingleRow='True' ,RawOutputFilterExpr='<title>([^<]*)<\/title>{{0,1}}||content="([^"]*)"{{0,1}}' --can be Regex, JsonPath or XPath. Use double pipe to split multiple columns ,RawOutputDataRowTemplate@='{title:"[$1]", content:"[$2]" }' --must be Json template. Comment this line to generate default columns e.g. col1, col2...If you comment this then use c.col1 and c.col2 instread of c.title and c.content in Select and join1_xxxxx attributes ,RawOutputExtractMode='Regex' --can be Regex, Xml, Json or None. -- Comment this and RawOutputFilterExpr, RawOutputDataRowTemplate to extract Raw data without column parsing (i.e. returns raw text of URL/Path) ,Alias='c' ,join1_RequestMethod='GET' ,join1_src='mybucket/myservice/[$c.title$]/log.ext' ,join1_RequestContentTypeCode= 'TextPlain' ,join1_alias= 'o' ,join1_EnableRawOutputModeSingleRow='False' )

/*--------- Functions String Manipulation ---------*/ SELECT substr('ZappySys', 1, 5) ,printf('%s %s %.2f', 'v1', CompanyName, CompanyId) ,('Str1' ||CompanyName || 'Str2' as ConcatExample , upper('ZappySys') , lower('ZappySys') , replace('ZappySys', 'Sys', 'XYZ') , instr( 'ZappySys', 'Sys') , trim ( ' Zappy Sys ') trim1 , trim ( '@@@ZappySys@@@', '@') trim2 , rtrim( ' ZappySys ') , ltrim( ' ZappySys ') , length ( CompanyName ) FROM $ WITH ( DATA ='CompanyId,CompanyName 1000,ZappySys 1001,Microsoft 1002,Amazon ')

/*--------- Functions Control flow ---------*/ SELECT COALESCE (10,20) coalesce1 --returns first non-null arg ,COALESCE(null, 20) coalesce2 --returns first non-null arg ,COALESCE(null, 20, 30, 40) coalesce3 --returns first non-null arg ,IFNULL(null,20) --returns first non-null arg. Same function as COALESCE but simple (just two arguments allowed) ,NULLIF (20,20) nullif1 --returns null if both argument same ,NULLIF (10,20) nullif2 --returns null if both argument same FROM $ WITH ( DATA='CompanyId,CompanyName 1000,ZappySys 1001,Microsoft 1002,Amazon ')

/*--------- Functions Date/Time ---------*/ SELECT DATE('now') date_now ,STRFTIME('%Y-%m-%dT%H:%M:%f','now') formatted_Date_time ,DATETIME('now') datetime_now ,DATE('now','localtime') datetime_now_local ,TIME('now') time_now ,JULIANDAY('now') ,DATE('now', 'start of month', '+1 month' , '-1 day' ) dt_modify1 ,DATE('now', '+5 day') dt_modify2 FROM $ WITH ( DATA='CompanyId,CompanyName 1000,ZappySys 1001,Microsoft 1002,Amazon ')

/*--------- Functions Math ---------*/ SELECT abs(-500) ,random() ,round(1000.236, 2) FROM $ WITH ( DATA = 'CompanyId,CompanyName 1000,ZappySys 1001,Microsoft 1002,Amazon ' )

/*--------- Language Features Group By / Limit / Order By ---------*/ SELECT Country AS Invoice_Country , SUM(UnitPrice * Quantity) AS Invoice_Total FROM $ WHERE Discount > 0 --AND OrderDate<=DATETIME('1997-12-31 00:00:00') -- OR use DATE('1997-12-31') DateTime column can be queried this way. You must wrap DATETIME function around it. Also must use 'yyyy-MM-dd' or 'yyyy-MM-dd HH:mm:ss' or 'yyyy-MM-dd HH:mm:ss.fff' format (where fff is milliseconds) GROUP BY Country HAVING SUM(UnitPrice * Quantity) > 1000 ORDER BY Invoice_Total DESC --,DATETIME(OrderDate) LIMIT 3 WITH (SRC='zappysys-public-bucket/invoices.csv')

/*--------- Language Features Case Statement ---------*/ SELECT Name , CASE Age WHEN 55 THEN 55+1 WHEN 51 THEN 51+1 ELSE 0 END ThisIsCaseColumn FROM $ WITH (DATA='Name|Age|Income|Hiredate Bob|55|5000.00|2018-01-01 Sam|51|2000.00|2016-02-01 John|45|3000.00|2014-07-01 ' ,ColumnDelimiter='|' )

/*--------- Language Features UNION ALL / UNION Statement ---------*/ SELECT * into #tbl1 FROM $ WITH ( --enter path in SRC or use static value in DATA --SRC='zappysys-public-bucket/api/data.csv' --SRC='zappysys-public-bucket/some*.csv' --SRC='zappysys-public-bucket/somefile.csv' DATA='Name|Age|Income|Hiredate Bob|55|5000.00|2018-01-01 Sam|51|2000.00|2016-02-01' ,ColumnDelimiter='|' ); SELECT * into #tbl2 FROM $ WITH (DATA='Name|Age|Income|Hiredate John|45|3000.00|2014-07-01' ,ColumnDelimiter='|' ); select * from #tbl1 UNION ALL select * from #tbl2;

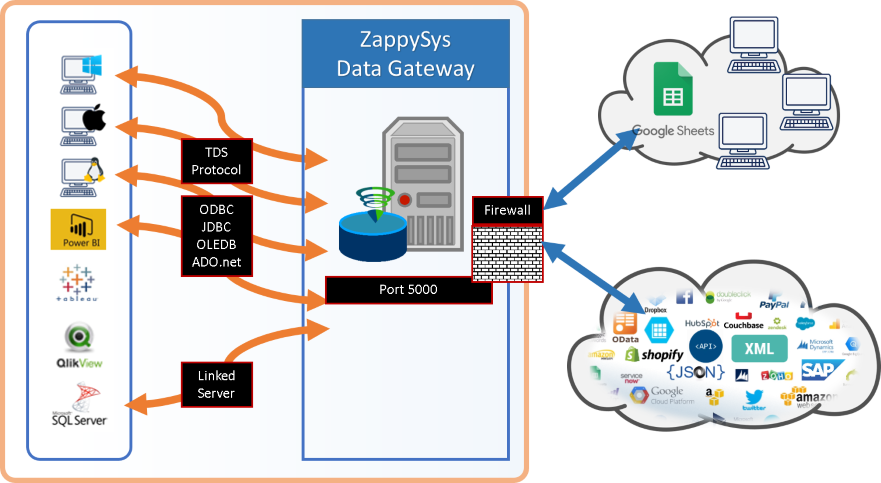

ZappySys Data Gateway (ODBC Bridge for SQL Server / JAVA / Linux / Mac)

ZappySys has developed a unique bridge called ZappySys Data Gateway Service (ZSDG) which can help to access our Drivers in SQL Server or JAVA based Apps or Non-Windows OS (e.g. Mac, Linux). ZappySys Data Gateway service can run in the cloud (VM Exposed to internet) or you can install locally on-premises.

Client application can connect to Data Gateway Service using any Microsoft SQL Server compatible driver (i.e. SQL Server ODBC, OLEDB, ADO.net or JDBC Driver or Linked Server in SQL Server). Data Gateway can be installed on the central server where you can have many users who can connect to Data Gateway to use ZappySys Drivers without installing anything on their machine. Data Gateway Service understands TDS Protocol and Client App can be running on any machine or operating system (MacOS, Linux, Windows).

ZappySys Data Gateway – Connect to JSON, XML, OData, REST API, SOAP data sources using TDS protocol compatible drivers (or any SQL Server ODBC, JDBC, OLEDB, ADO.net driver )

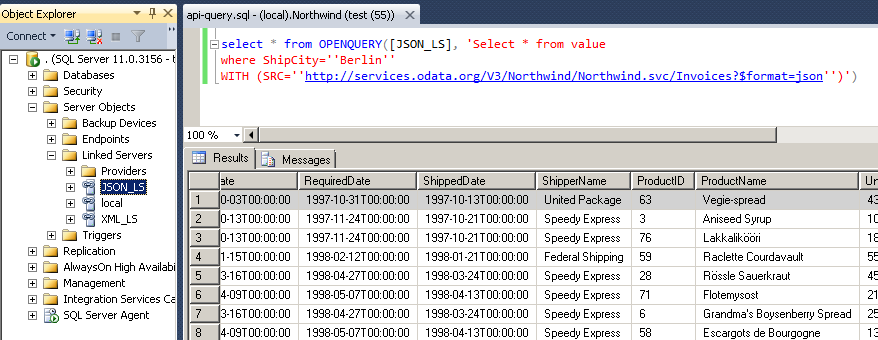

Microsoft SQL Server Integration using Data Gateway Service (T-SQL)

Here is one possible use case of using Data Gateway Service. Any DBA or Non-Programmer can start writing T-SQL queries to use ZappySys Drivers (e.g. REST API, JSON, XML, CSV data source) right inside your usual T-SQL code (You can access data from Salesforce, REST API, JSON, XML, CSV inside Views, Functions or SQL Stored Procedures).

This approach can eliminate any possible ETL work needed to extract data outside of SQL Server, you can start using your existing SQL Skill to achieve previously hard to achieve scenarios without coding.

For many other possible use case of Data Gateway click here.

SQL Server Integration Example – Query REST API / JSON Files / XML Files inside SQL Server using ZappySys Data Gateway Service (Use of Linked Server / OPENQUERY Feature in T-SQL Code / SSMS)

Video Tutorial – Calling ZappySys Drivers inside SQL Server (JSON / REST Driver use case)

Here is a short video to demonstrate a use case of Data Gateway. With this approach you can import data from REST API or any other data source for which ZappySys offers Drivers (e.g. Amazon S3, Azure, SFTP, Salesforce, XML , CSV)

Programming Language Examples

Most programming languages come with out of the box support for ODBC. Which means you can use ZappySys ODBC drivers inside your favorite language. Here are few languages which already support ODBC. We have used JSON Driver / SQL query as an example but concept is same for other drivers too. Refer to help file to learn more about Driver specific Connection String and SQL Query.

using (OdbcConnection conn = new OdbcConnection("DRIVER ={ZappySys Amazon S3 CSV Driver};")) { conn.Open(); cmd = new OdbcCommand( @"SELECT Country as Invoice_Country, SUM(UnitPrice * Quantity) Total FROM value GROUP BY Country ORDERBY Total DESC", conn); var rdr = cmd.ExecuteReader(); while (rdr.Read()) { Console.WriteLine("---- Fetching Row -------"); for (i = 0; i < rdr.FieldCount; i++) { Console.Write("Field {0}={1} ", i, rdr[i]); } Console.WriteLine(""); } }

//Assuming the Microsoft SQL Server JDBC Driver is in below folder //C:\Program Files\Microsoft JDBC Driver 6.0 for SQL Server\sqljdbc_6.0\enu\auth\x64 private static final String jdbcDriver = "com.microsoft.sqlserver.jdbc.SQLServerDriver"; //The JDBC connection URL to connect to ZappySys Data Gateway Service using SQL Server driver private static final String jdbcURL = "jdbc:sqlserver://localhost:5000;databasename=master;user=tdsuser;password=tds123;"; //Connect to the database Connection databaseConnection = DriverManager.getConnection(jdbcURL); System.out.println("Connected to ZappySys Data Gateway Service using Microsoft SQL Server JDBC driver"); //declare the statement object Statement sqlStatement = databaseConnection.createStatement(); ResultSet rs = sqlStatement.executeQuery("SELECT Country , SUM(UnitPrice * Quantity) Total " + "FROM value " + "GROUP BY Country " + "WITH (SRC='https://services.odata.org/V3/Northwind/Northwind.svc/Invoices?$format=json')"); while (rs.next()) { System.out.println("-----Fetching new row----\n"); System.out.println(rs.getString("Country") + "\n"); //System.out.println(rs.getString("Total") + "\n"); }

#Example of using ODBC driver inside Python using pyodbc library (Read more info about pyodbc from below) #https://github.com/mkleehammer/pyodbc/wiki import pyodbc #connect to api service using ZappySys ODBC driver for JSON #Use DSN #conn = pyodbc.connect(r'DSN=MyZappyDsnName;') # OR Use direct connection string conn = pyodbc.connect( r'DRIVER={ZappySys Amazon S3 CSV Driver};' ) cursor = cnxn.cursor() #execute query to fetch data from API service cursor.execute("SELECT * FROM value ORDER BY Country WITH (SRC='https://services.odata.org/V3/Northwind/Northwind.svc/Invoices?$format=json')") row = cursor.fetchone() while row: print row[0] row = cursor.fetchone()

echo "Example of using ZappySys ODBC Driver in PHP\n"; $conn = odbc_connect("DRIVER={ZappySys Amazon S3 CSV Driver};", "", ""); $sql = "SELECT * FROM value ORDER BY Country WITH (SRC='https://services.odata.org/V3/Northwind/Northwind.svc/Invoices?$format=json')"; $rs = odbc_exec($conn,$sql); echo "Fetching first row....\n"; odbc_fetch_row($rs); echo "Country=" . odbc_result($rs,"Country") . "\n"; echo "Closing connection ....\n"; odbc_close($conn);

$conn = New-Object System.Data.Odbc.OdbcConnection $conn.ConnectionString = "DRIVER={ZappySys Amazon S3 CSV Driver}" #--OR-- Use DSN name #$conn.connectionstring = "DSN=MyDSNName" $conn.Open() # ------------------------------------------------------------------------------- # In powershell $ is special char so we used `$ in below string to escape it. # Also We used multi string start with "@<new line> and ends with <new line>"@ # ------------------------------------------------------------------------------- $sql = @" SELECT * FROM value WITH (SRC='https://services.odata.org/V3/Northwind/Northwind.svc/Customers?`$format=json') "@ $cmd = $conn.CreateCommand() $cmd.CommandText = $sql $dataset = New-Object System.Data.DataSet #Load data in DataSet (New-Object System.Data.Odbc.OdbcDataAdapter($cmd)).Fill($dataSet) #Export datatable to file in CSV format $dataset.Tables[0] | ConvertTo-csv -NoTypeInformation -Delimiter "`t" | Out-File "c:\temp\dump.csv" -fo Write-Host "Total rows $($dataSet.Tables[0].Rows.Count)" $conn.Close()