Cosmos DB Connector for Azure Data Factory (Pipeline)

Connect to your Azure Cosmos DB databases to read, query, create, update, and delete documents and more!

In this article you will learn how to quickly and efficiently integrate Cosmos DB data in Azure Data Factory (Pipeline) without coding. We will use the high-performance Cosmos DB Connector to connect to Cosmos DB and then access the data inside Azure Data Factory (Pipeline).

Let's follow the steps below to see how we can accomplish that!

Cosmos DB Connector for Azure Data Factory (Pipeline) is based on ZappySys API Driver, which is part of ODBC PowerPack. ODBC PowerPack is a collection of high-performance ODBC drivers that enable you to integrate data in SQL Server, SSIS, a programming language, or any other ODBC-compatible application. It supports various file formats, sources and destinations, including REST/SOAP API, SFTP/FTP, storage services, and plain files, to mention a few.

Create ODBC Data Source (DSN) based on ZappySys API Driver

Step-by-step instructions

To get data from Cosmos DB using Azure Data Factory (Pipeline) we first need to create a DSN (Data Source) which will access data from Cosmos DB. We will later be able to read data using Azure Data Factory (Pipeline). Perform these steps:

-

Download and install ODBC PowerPack.

-

Open ODBC Data Sources (x64):

-

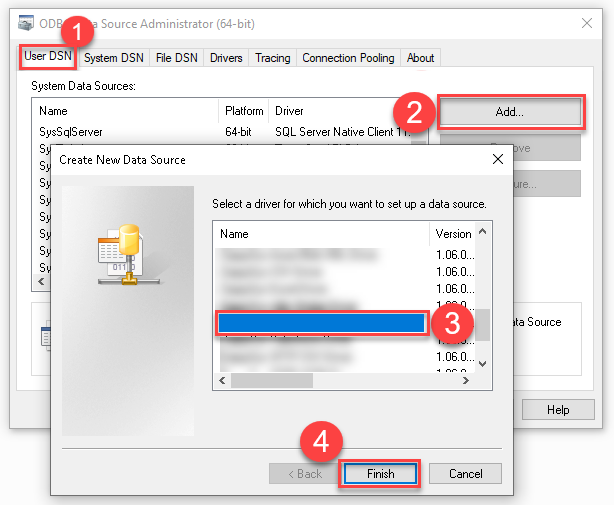

Create a User data source (User DSN) based on ZappySys API Driver:

ZappySys API Driver

-

Create and use User DSN

if the client application is run under a User Account.

This is an ideal option

in design-time , when developing a solution, e.g. in Visual Studio 2019. Use it for both type of applications - 64-bit and 32-bit. -

Create and use System DSN

if the client application is launched under a System Account, e.g. as a Windows Service.

Usually, this is an ideal option to use

in a production environment . Use ODBC Data Source Administrator (32-bit), instead of 64-bit version, if Windows Service is a 32-bit application.

Azure Data Factory (Pipeline) uses a Service Account, when a solution is deployed to production environment, therefore for production environment you have to create and use a System DSN. -

Create and use User DSN

if the client application is run under a User Account.

This is an ideal option

-

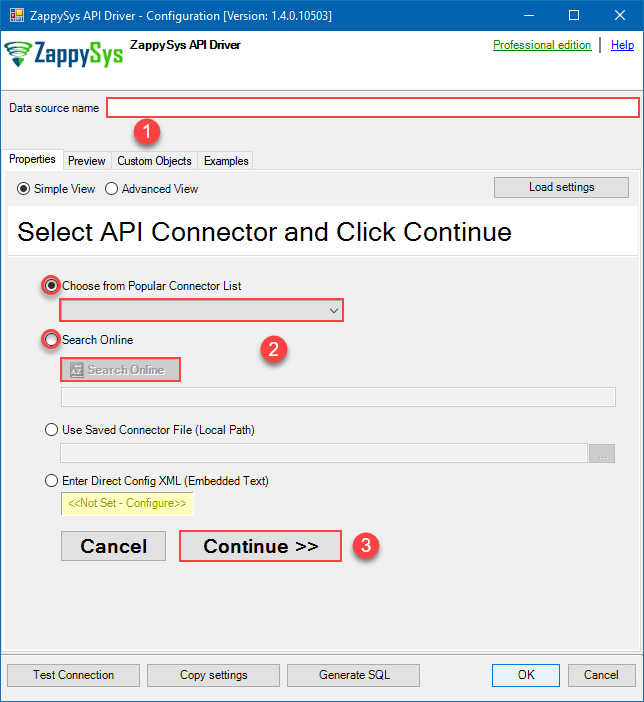

When the Configuration window appears give your data source a name if you haven't done that already, then select "Cosmos DB" from the list of Popular Connectors. If "Cosmos DB" is not present in the list, then click "Search Online" and download it. Then set the path to the location where you downloaded it. Finally, click Continue >> to proceed with configuring the DSN:

CosmosDbDSNCosmos DB

-

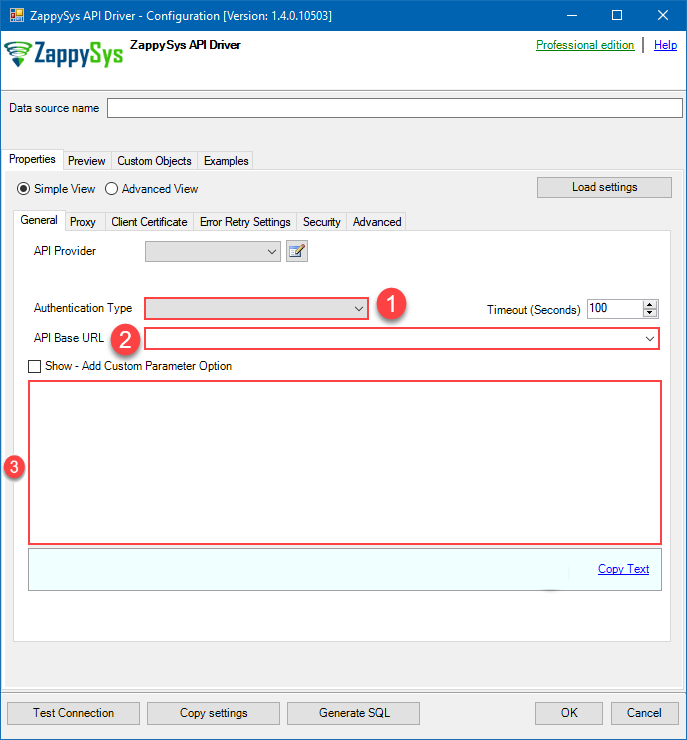

Now it's time to configure the Connection Manager. Select Authentication Type, e.g. Token Authentication. Then select API Base URL (in most cases, the default one is the right one). More info is available in the Authentication section.

Cosmos DB authentication

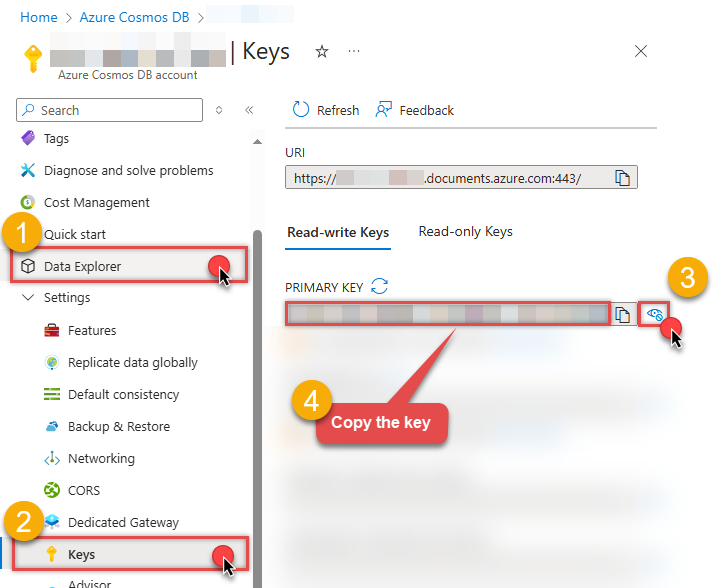

Connecting to your Azure Cosmos DB data requires you to authenticate your REST API access. Follow the instructions below:

- Go to your Azure portal homepage: https://portal.azure.com/.

- In the search bar at the top of the homepage, enter Azure Cosmos DB. In the dropdown that appears, select Azure Cosmos DB.

- Click on the name of the database account you want to connect to (also copy the name of the database account for later use).

- On the next page, where you can see all of the database account information, look along the left side and select Keys.

- On the Keys page, you will have two tabs: Read-write Keys and Read-only Keys. If you are going to write data to your database, you need to remain on the Read-write Keys tab. If you are only going to read data from your database, you should select the Read-only Keys tab.

- On the Keys page, copy the PRIMARY KEY value and paste it somewhere for later use (the SECONDARY KEY value may also be copied and used).

- Now go to your ODBC data source and use this PRIMARY KEY in the API Key authentication configuration.

- Enter the primary or secondary key you copied from the Keys page into the Primary or Secondary Key field.

- Then enter the database account name you copied earlier into the Database Account field.

- Next, enter or select the default database you want to connect to using the Default Database field.

- Continue by entering or selecting the default table (i.e. container/collection) you want to connect to using the Default Table (Container/Collection) field.

- Select the Test Connection button at the bottom of the window to verify proper connectivity with your Azure Cosmos DB account.

- If the connection test succeeds, select OK.

- Done! Now you are ready to use the Cosmos DB Connector!
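The driver performs the authentication for you once the key is entered, so no code is needed here. For readers who want to see what the key is used for, the following is a minimal sketch of the master-key token scheme described in the Cosmos DB REST API documentation. The function name `cosmos_auth_token`, the placeholder key, and the `x-ms-version` value are assumptions made for illustration; they are not taken from the connector.

```python
import base64
import hashlib
import hmac
import urllib.parse
from email.utils import formatdate


def cosmos_auth_token(verb, resource_type, resource_link, date, master_key):
    """Build a master-key authorization token for one Cosmos DB REST request."""
    # String to sign: verb, resource type and date are lowercased,
    # the resource link keeps its original case, and the payload
    # ends with an empty line.
    payload = "{}\n{}\n{}\n{}\n\n".format(
        verb.lower(), resource_type.lower(), resource_link, date.lower()
    )
    # The key shown on the Keys page is base64 text; decode it before signing.
    key = base64.b64decode(master_key)
    digest = hmac.new(key, payload.encode("utf-8"), hashlib.sha256).digest()
    signature = base64.b64encode(digest).decode("utf-8")
    # The whole token is URL-encoded before it goes into the header.
    return urllib.parse.quote("type=master&ver=1.0&sig=" + signature, safe="")


# Placeholder key for illustration only; use the PRIMARY KEY from the Keys page.
fake_key = base64.b64encode(b"not-a-real-key").decode("ascii")
date = formatdate(usegmt=True)  # RFC 1123 date, e.g. "Tue, 01 Nov 1994 08:12:31 GMT"

# Headers for a hypothetical "list databases" request (GET /dbs).
headers = {
    "authorization": cosmos_auth_token("GET", "dbs", "", date, fake_key),
    "x-ms-date": date,
    "x-ms-version": "2018-12-31",  # assumed API version
}
print(headers["authorization"])
```

Because the token is derived from the key, a read-only key is enough when you only read data, which matches the Read-only Keys advice above.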

API Connection Manager configuration

Just perform these simple steps to finish authentication configuration:

- Set Authentication Type to API Key [Http].

- Optional step. Modify API Base URL if needed (in most cases the default, https://[$Account$].documents.azure.com, will work).

- Fill in all the required parameters and set optional parameters if needed:

  - Primary or Secondary Key (required)

  - Account Name (required, case-sensitive)

  - Database Name (required, case-sensitive; keep blank to use default)

  - API Version (required)

  - Default Table (optional; needed to invoke #DirectSQL)

- Finally, hit the OK button.

-

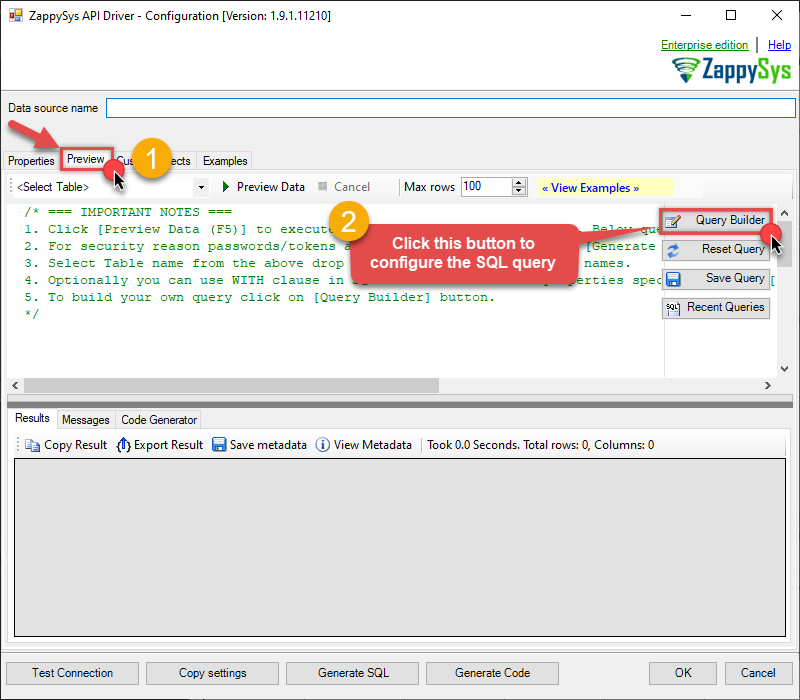

Once the data source connection has been configured, it's time to configure the SQL query. Select the Preview tab and then click Query Builder button to configure the SQL query:

ZappySys API Driver - Cosmos DBConnect to your Azure Cosmos DB databases to read, query, create, update, and delete documents and more!CosmosDbDSN

ZappySys API Driver - Cosmos DBConnect to your Azure Cosmos DB databases to read, query, create, update, and delete documents and more!CosmosDbDSN

-

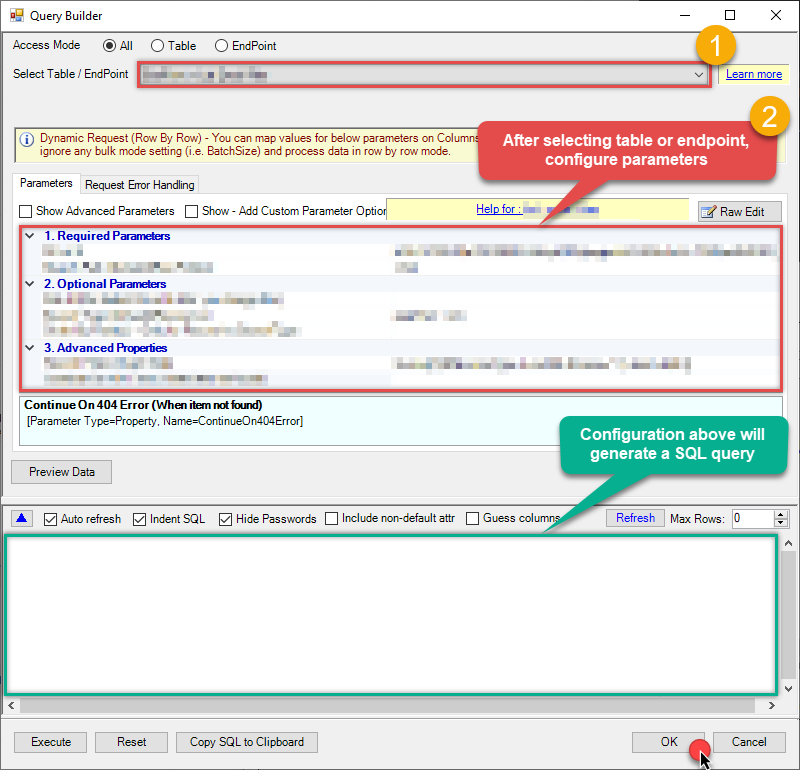

Start by selecting the Table or Endpoint you are interested in and then configure the parameters. This will generate a query that we will use in Azure Data Factory (Pipeline) to retrieve data from Cosmos DB. Hit OK button to use this query in the next step.

#DirectSQL SELECT * FROM root where root.id !=null order by root._ts desc

Some parameters configured in this window will be passed to the Cosmos DB API, e.g. filtering parameters. It means that filtering will be done on the server side (instead of the client side), enabling you to get only the meaningful data much faster.

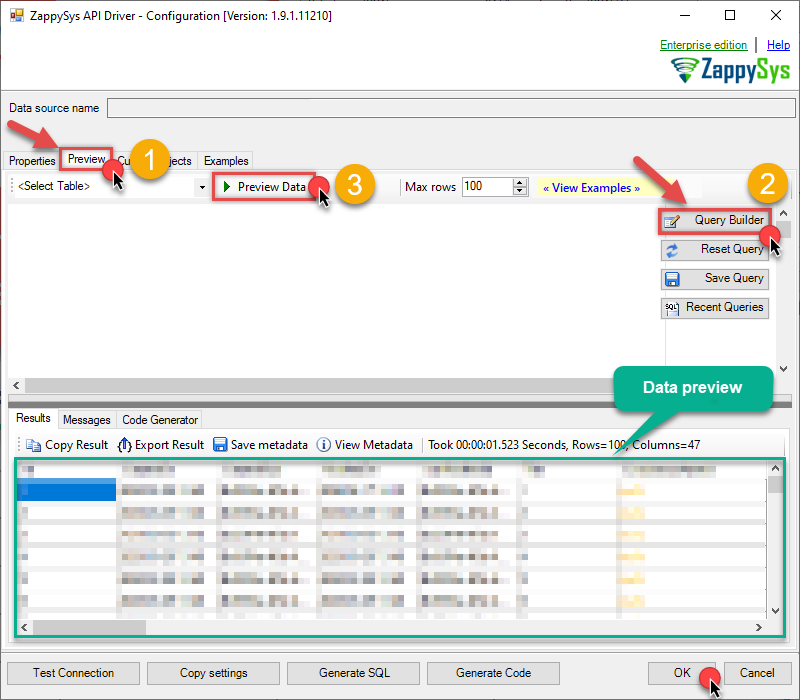

Now hit Preview Data button to preview the data using the generated SQL query. If you are satisfied with the result, use this query in Azure Data Factory (Pipeline):

ZappySys API Driver - Cosmos DBConnect to your Azure Cosmos DB databases to read, query, create, update, and delete documents and more!CosmosDbDSN

ZappySys API Driver - Cosmos DBConnect to your Azure Cosmos DB databases to read, query, create, update, and delete documents and more!CosmosDbDSN#DirectSQL SELECT * FROM root where root.id !=null order by root._ts desc You can also access data quickly from the tables dropdown by selecting <Select table>.A

You can also access data quickly from the tables dropdown by selecting <Select table>.AWHEREclause,LIMITkeyword will be performed on the client side, meaning that thewhole result set will be retrieved from the Cosmos DB API first, and only then the filtering will be applied to the data. If possible, it is recommended to use parameters in Query Builder to filter the data on the server side (in Cosmos DB servers). -

Click OK to finish creating the data source.
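The difference between server-side and client-side filtering mentioned in the notes above can be illustrated with a small simulation. This is only a sketch: `call_api` is a hypothetical stand-in for a request to Cosmos DB, and the documents are made-up sample data. It does not call the driver or the real API.

```python
def call_api(documents, server_filter=None):
    """Simulate one API call and return the rows sent back over the network."""
    if server_filter is None:
        # No filter in the request: every document is transferred.
        return list(documents)
    # Filter in the request: only matching documents are transferred.
    return [doc for doc in documents if server_filter(doc)]


def is_recent(doc):
    """Keep only documents with a recent _ts timestamp."""
    return doc["_ts"] >= 1700000990


# 1000 hypothetical documents with increasing _ts timestamps.
documents = [{"id": str(i), "_ts": 1700000000 + i} for i in range(1000)]

# Server side: the filter travels with the request.
server_rows = call_api(documents, server_filter=is_recent)

# Client side: everything is transferred first, then filtered locally.
transferred = call_api(documents)
client_rows = [doc for doc in transferred if is_recent(doc)]

print(len(server_rows), "rows transferred with server-side filtering")
print(len(transferred), "rows transferred with client-side filtering")
print("same result:", server_rows == client_rows)
```

Both approaches return the same rows; the difference is how much data has to travel before the filter is applied, which is why filtering through Query Builder parameters is recommended where possible.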


Read data in Azure Data Factory (ADF) from ODBC datasource (Cosmos DB)

-

Sign in to Azure Portal

-

Open your browser and go to: https://portal.azure.com

-

Enter your Azure credentials and complete MFA if required.

-

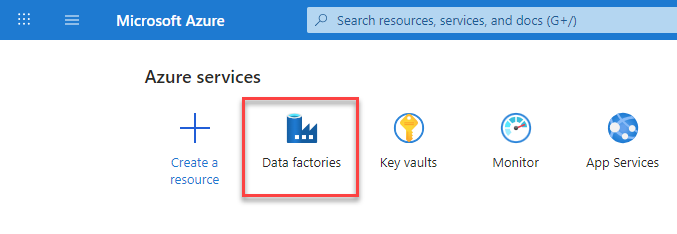

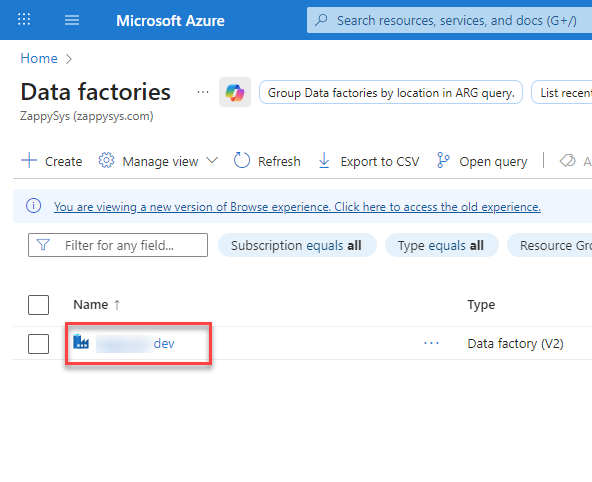

After login, go to Data factories.

-

-

Under Azure Data Factory Resource - Create or select the Data Factory you want to work with.

-

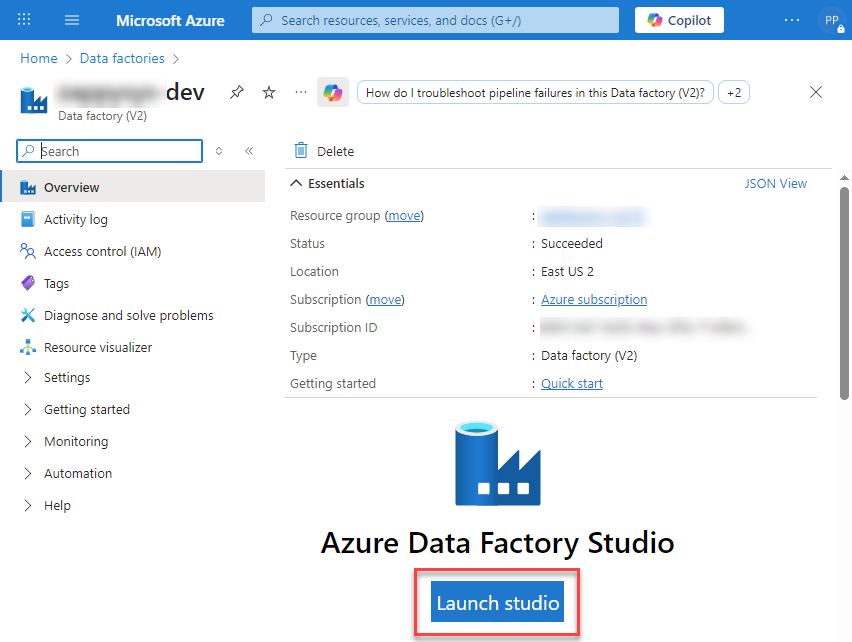

Inside the Data Factory resource page, click Launch studio.

-

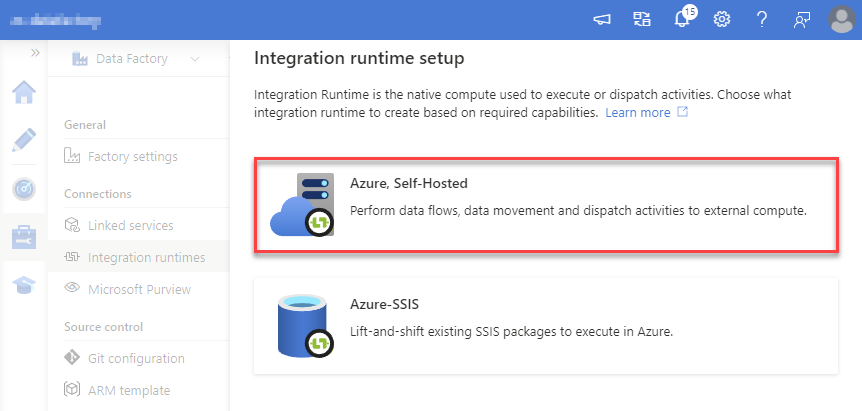

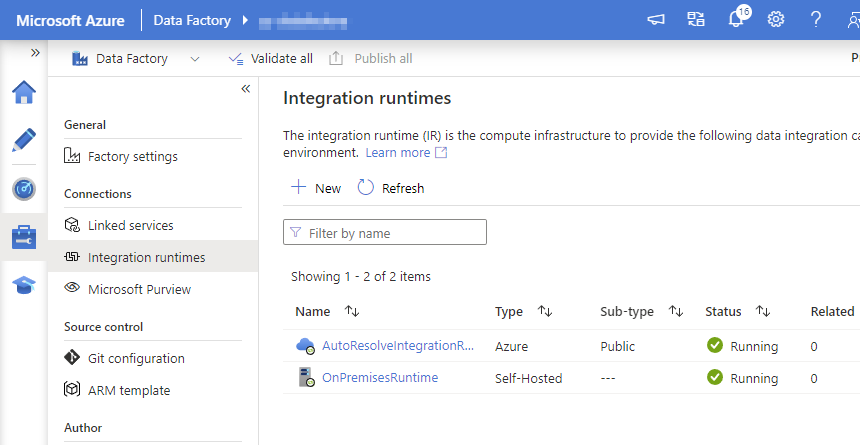

Create a New Integration Runtime (Self-Hosted):

In Azure Data Factory Studio, go to the Manage section (left menu).

Under Connections, select Integration runtimes.

Click + New to create a new integration runtime.

-

Select Azure, Self-Hosted option:

-

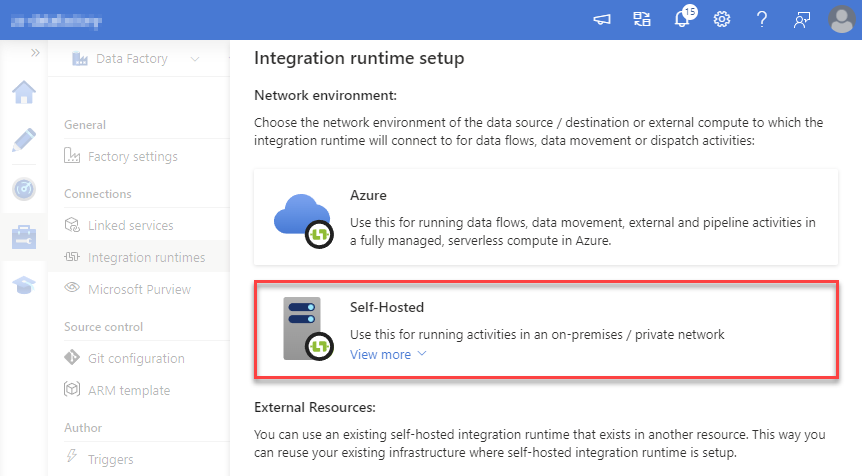

Select Self-Hosted option:

-

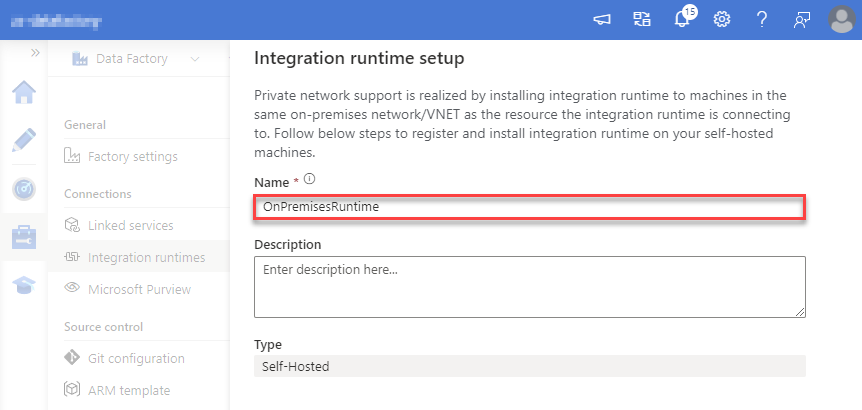

Set a name, we will use OnPremisesRuntime:

-

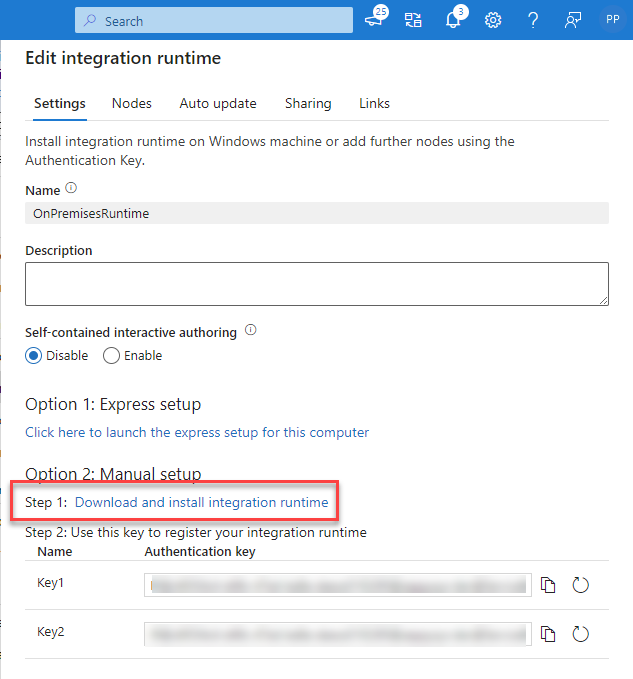

Download and install Microsoft Integration Runtime.

-

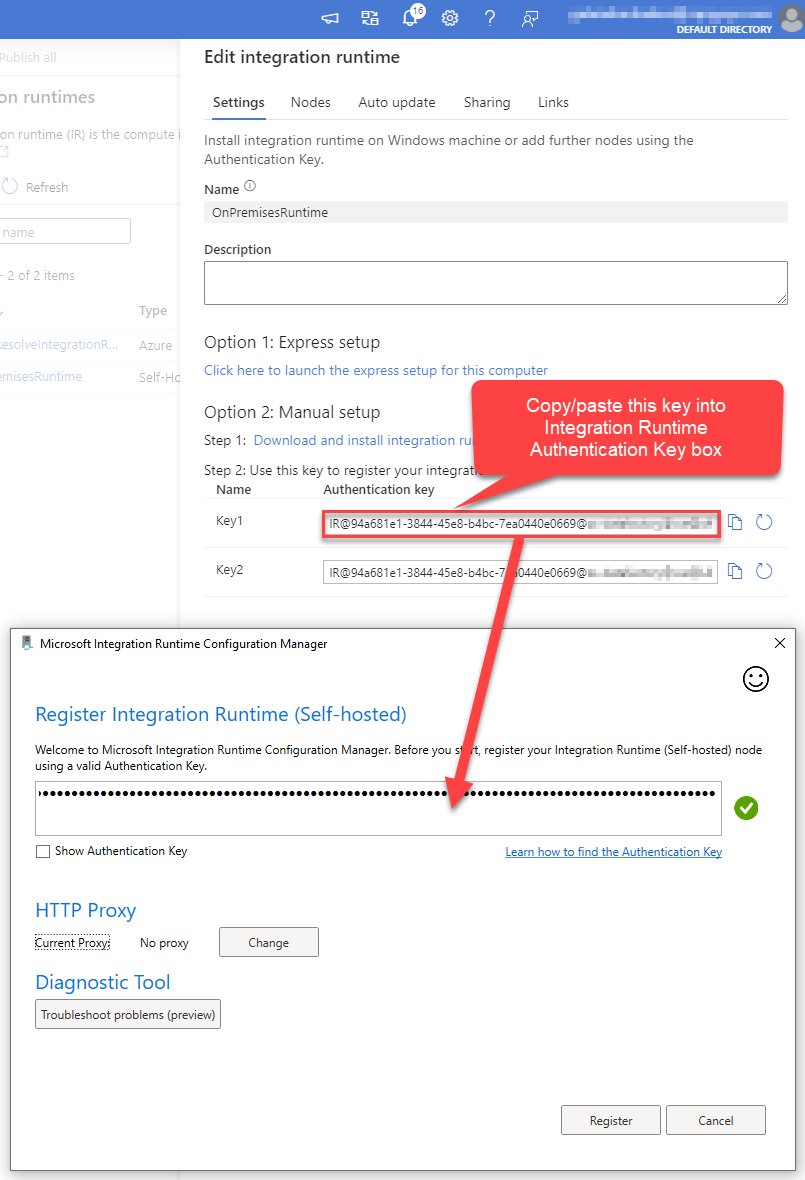

Launch Integration Runtime and copy/paste Authentication Key from Integration Runtime configuration in Azure Portal:

-

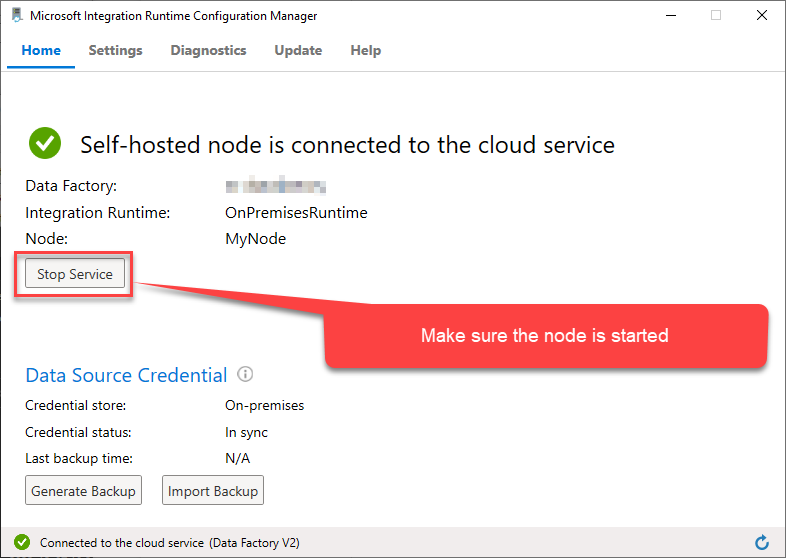

After finishing registering the Integration Runtime node, you should see a similar view:

-

Go back to Azure Portal and finish adding new Integration Runtime. You should see it was successfully added:

-

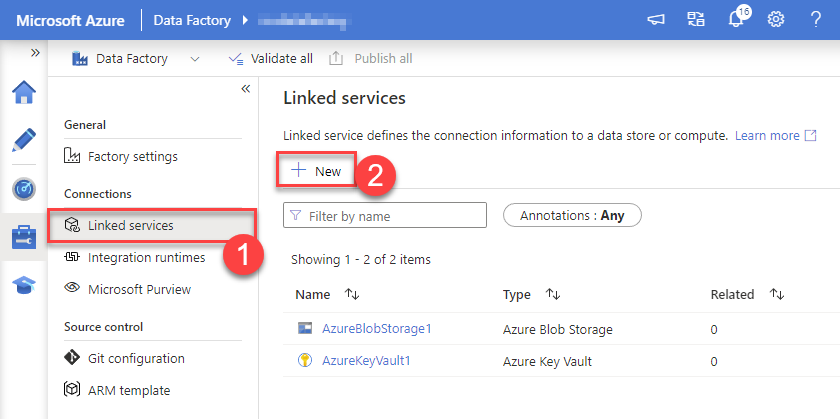

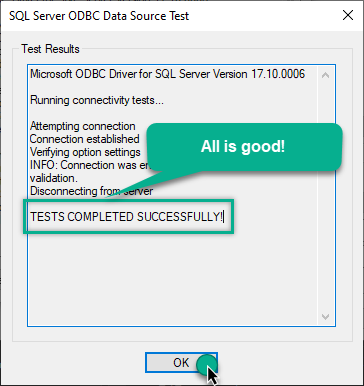

Create a New Linked service:

In the Manage section (left menu).

Under Connections, select Linked services.

Click + New to create a new Linked service based on ODBC.

-

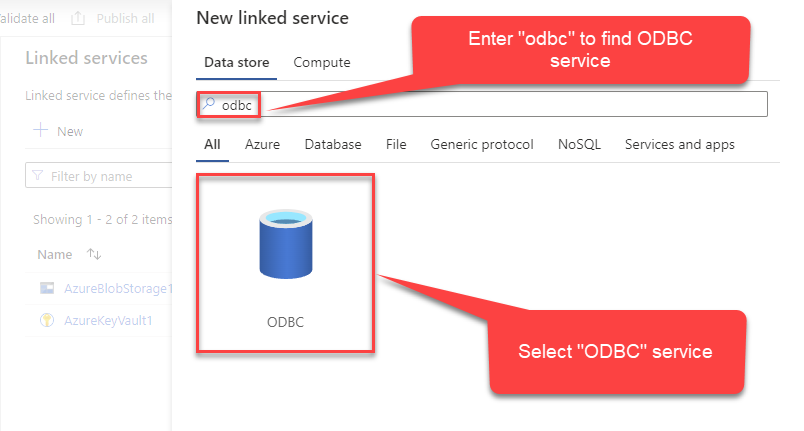

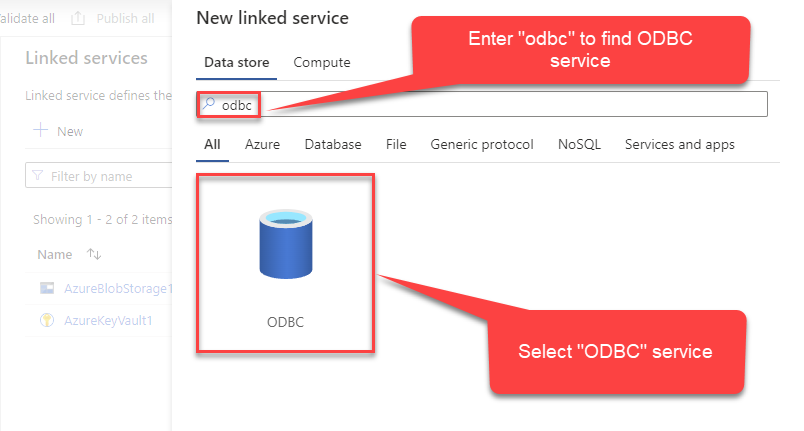

Select ODBC service:

-

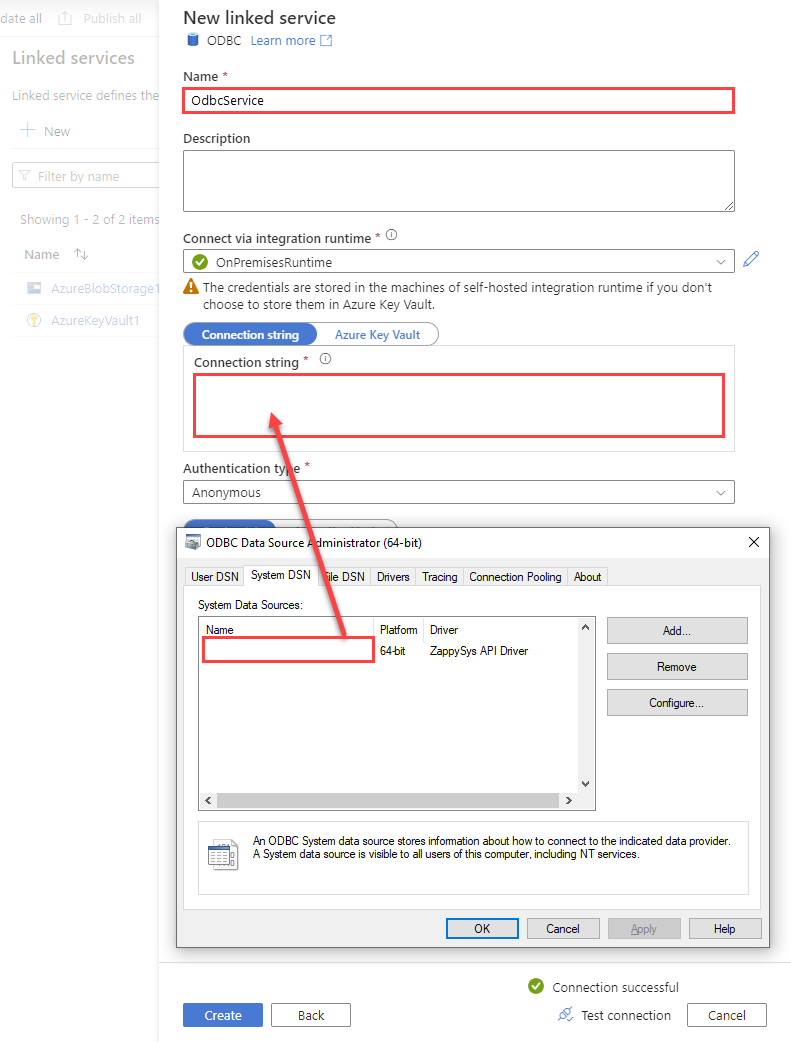

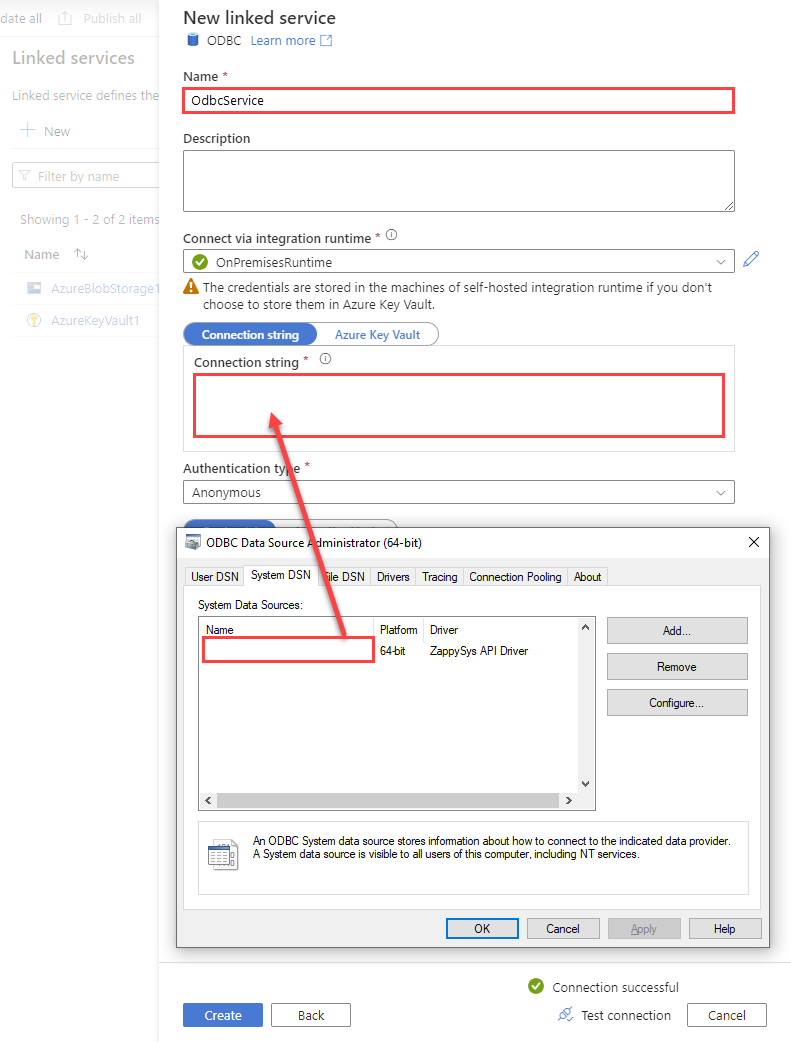

Configure new ODBC service. Use the same DSN name we used in the previous step and copy it to Connection string box:

CosmosDbDSNDSN=CosmosDbDSN

-

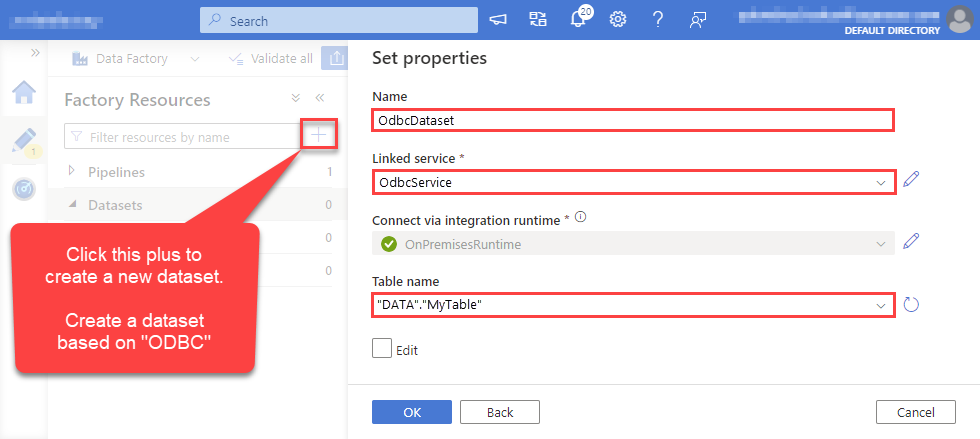

For created ODBC service create ODBC-based dataset:

-

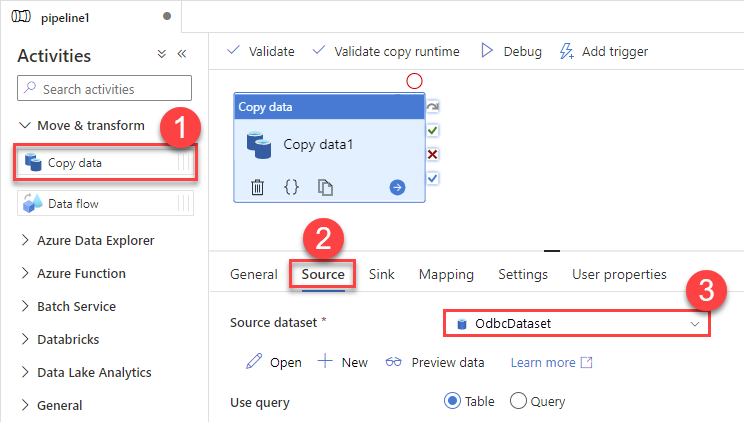

Go to your pipeline and add Copy data connector into the flow. In Source section use OdbcDataset we created as a source dataset:

-

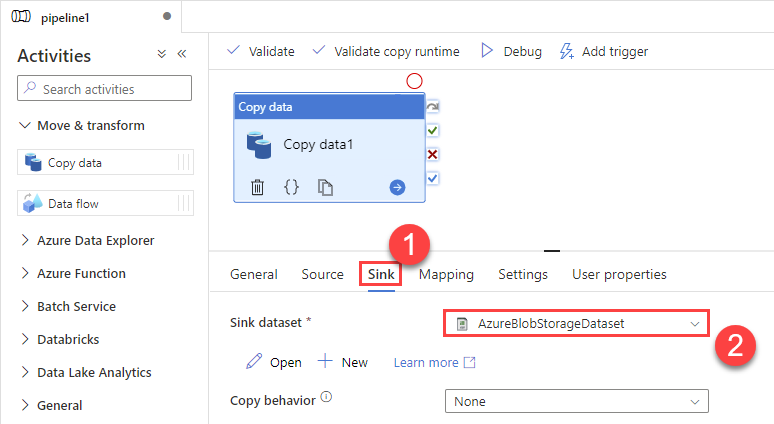

Then go to Sink section and select a destination/sink dataset. In this example we use precreated AzureBlobStorageDataset which saves data into an Azure Blob:

-

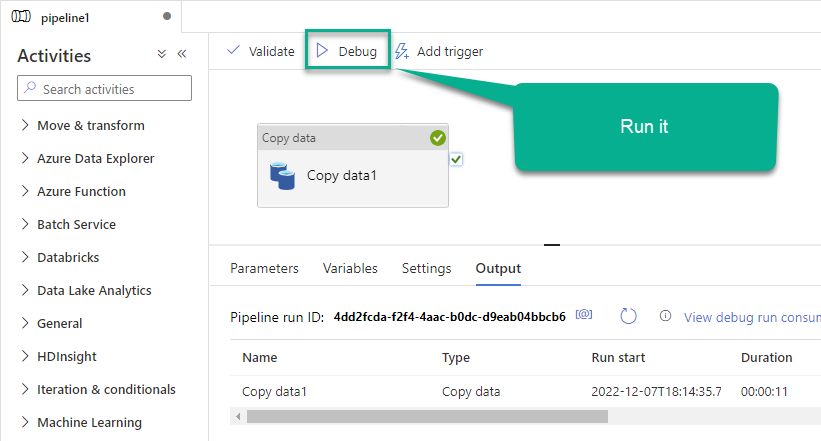

Finally, run the pipeline and see data being transferred from OdbcDataset to your destination dataset:
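Everything configured in the steps above through the Studio UI is stored by Azure Data Factory as JSON. The sketch below builds roughly equivalent definitions as Python dictionaries so the pieces and their relationships are easier to see. The names `OdbcLinkedService` and `CopyCosmosDbToBlob` are hypothetical, the `Anonymous` authentication type is an assumption, and the sink settings assume the Blob dataset is delimited text; the JSON generated by your own factory may differ.

```python
import json


def odbc_linked_service(name, dsn, runtime):
    """Linked service pointing at a DSN on the self-hosted runtime machine."""
    return {
        "name": name,
        "properties": {
            "type": "Odbc",
            "typeProperties": {
                "connectionString": "DSN={}".format(dsn),
                "authenticationType": "Anonymous",  # assumption: no extra credentials
            },
            "connectVia": {
                "referenceName": runtime,
                "type": "IntegrationRuntimeReference",
            },
        },
    }


def odbc_dataset(name, linked_service):
    """ODBC-based dataset that reads through the linked service."""
    return {
        "name": name,
        "properties": {
            "type": "OdbcTable",
            "linkedServiceName": {
                "referenceName": linked_service,
                "type": "LinkedServiceReference",
            },
        },
    }


def copy_activity(name, source_dataset, sink_dataset, query):
    """Copy data activity that runs a query on the source dataset."""
    return {
        "name": name,
        "type": "Copy",
        "inputs": [{"referenceName": source_dataset, "type": "DatasetReference"}],
        "outputs": [{"referenceName": sink_dataset, "type": "DatasetReference"}],
        "typeProperties": {
            "source": {"type": "OdbcSource", "query": query},
            # Assumption: the sink dataset is delimited text in Blob storage.
            "sink": {
                "type": "DelimitedTextSink",
                "storeSettings": {"type": "AzureBlobStorageWriteSettings"},
                "formatSettings": {
                    "type": "DelimitedTextWriteSettings",
                    "fileExtension": ".csv",
                },
            },
        },
    }


QUERY = "#DirectSQL SELECT * FROM root where root.id !=null order by root._ts desc"

linked_service = odbc_linked_service("OdbcLinkedService", "CosmosDbDSN", "OnPremisesRuntime")
dataset = odbc_dataset("OdbcDataset", "OdbcLinkedService")
activity = copy_activity("CopyCosmosDbToBlob", "OdbcDataset", "AzureBlobStorageDataset", QUERY)

for definition in (linked_service, dataset, activity):
    print(json.dumps(definition, indent=2))
```

The linked service references the self-hosted runtime because the DSN exists only on the machine where the Integration Runtime and ODBC PowerPack are installed.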

Executing SQL queries using Lookup activity

If you need to execute commands in Cosmos DB instead of retrieving data, use the Lookup activity for that purpose. Use this approach when you want data to be changed on the Cosmos DB side, but you don't need the data on your side (a "fire-and-forget" scenario).

Perform these simple steps to accomplish that:

- Go to your pipeline in Azure Data Factory.

- Find the Lookup activity in the Activities pane.

- Then drag and drop the Lookup activity onto your pipeline canvas.

- Click the Settings tab.

- Select OdbcDataset in the Source dataset field.

- Finally, enter your SQL query in the Query text box.
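For reference, the Lookup activity configured above corresponds to a small JSON definition in the pipeline. The following sketch builds it as a Python dictionary. The activity name `RunCosmosDbCommand` is hypothetical and the query is a placeholder; substitute the command you want to run.

```python
import json


def lookup_activity(name, dataset, query, first_row_only=True):
    """Lookup activity that runs a query against an ODBC dataset."""
    return {
        "name": name,
        "type": "Lookup",
        "typeProperties": {
            "source": {"type": "OdbcSource", "query": query},
            "dataset": {"referenceName": dataset, "type": "DatasetReference"},
            # Only the first row of any result is returned to the pipeline.
            "firstRowOnly": first_row_only,
        },
    }


# Placeholder text: replace with the SQL command for your scenario.
activity = lookup_activity("RunCosmosDbCommand", "OdbcDataset", "<your SQL query>")
print(json.dumps(activity, indent=2))
```

Keeping `firstRowOnly` enabled suits the fire-and-forget case, since the pipeline does not need the rows that come back.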

Centralized data access via Data Gateway

In some situations, you may need to provide Cosmos DB data access to multiple users or services. Configuring the data source on a Data Gateway creates a single, centralized connection point for this purpose.

This configuration provides two primary advantages:

- Centralized data access

  The data source is configured once on the gateway, eliminating the need to set it up individually on each user's machine or application. This significantly simplifies the management process.

- Centralized access control

  Since all connections route through the gateway, access can be governed or revoked from a single location for all users.

| | Data Gateway | Local ODBC data source |
|---|---|---|
| Simple configuration | | |
| Installation | Single machine | Per machine |
| Connectivity | Local and remote | Local only |
| Connections limit | Limited by License | Unlimited |
| Central data access | Yes | No |
| Central access control | Yes | No |
| More flexible cost | | |

If you need any of these capabilities, you will have to create a data source in Data Gateway to connect to Cosmos DB, and then create an ODBC data source that connects Azure Data Factory (Pipeline) to Data Gateway.

Let's not wait and get going!

Creating Cosmos DB data source in Gateway

In this section we will create a data source for Cosmos DB in Data Gateway. Let's follow these steps to accomplish that:

-

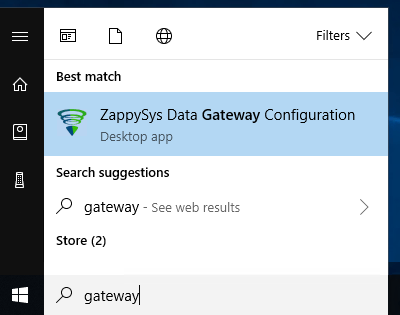

Search for

gatewayin Windows Start Menu and open ZappySys Data Gateway Configuration:

-

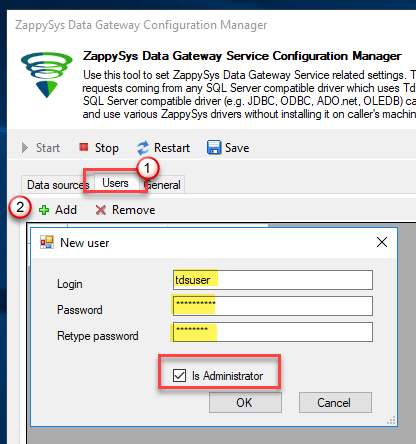

Go to Users tab and follow these steps to add a Data Gateway user:

- Click Add button

-

In Login field enter username, e.g.,

john - Then enter a Password

- Check Is Administrator checkbox

- Click OK to save

-

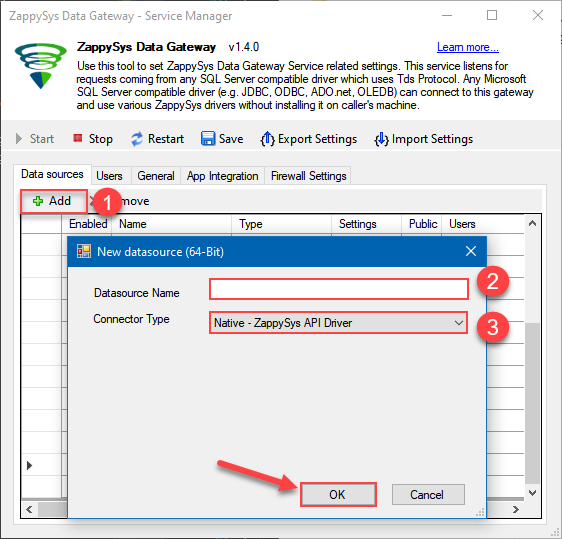

Now we are ready to add a data source:

- Click Add button

- Give Datasource a name (have it handy for later)

- Then select Native - ZappySys API Driver

- Finally, click OK

CosmosDbDSNZappySys API Driver

-

When the ZappySys API Driver configuration window opens, configure the Data Source the same way you configured it in ODBC Data Sources (64-bit), in the beginning of this article.

-

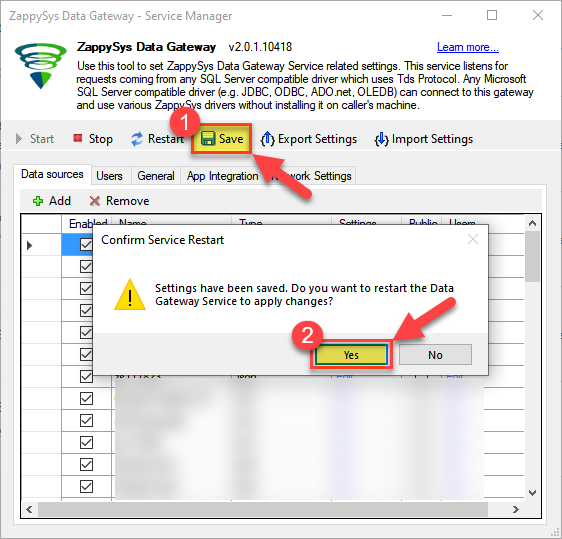

Very important step. Now, after creating or modifying the data source make sure you:

- Click the Save button to persist your changes.

- Hit Yes, once asked if you want to restart the Data Gateway service.

This will ensure all changes are properly applied:

Skipping this step may result in the new settings not taking effect and, therefore you will not be able to connect to the data source.

Skipping this step may result in the new settings not taking effect and, therefore you will not be able to connect to the data source.

Creating ODBC data source for Data Gateway

In this part we will create ODBC data source to connect to Data Gateway from Azure Data Factory (Pipeline). To achieve that, let's perform these steps:

-

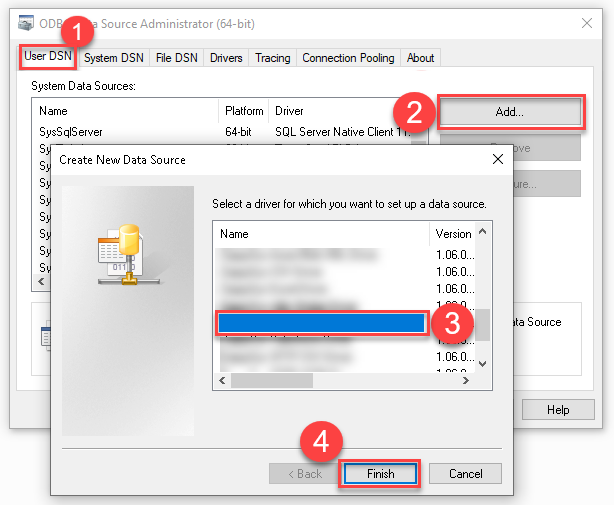

Open ODBC Data Sources (x64):

-

Create a User data source (User DSN) based on ODBC Driver 17 for SQL Server:

ODBC Driver 17 for SQL Server If you don't see ODBC Driver 17 for SQL Server driver in the list, choose a similar version driver.

If you don't see ODBC Driver 17 for SQL Server driver in the list, choose a similar version driver. -

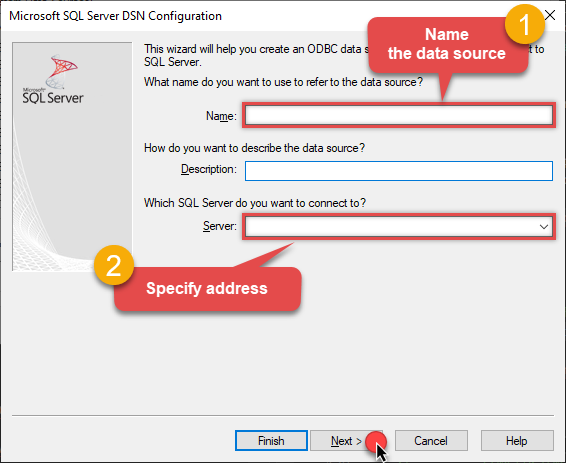

Then set a Name of the data source (e.g.

Gateway) and the address of the Data Gateway:GatewayDSNlocalhost,5000 Make sure you separate the hostname and port with a comma, e.g.

Make sure you separate the hostname and port with a comma, e.g.localhost,5000. -

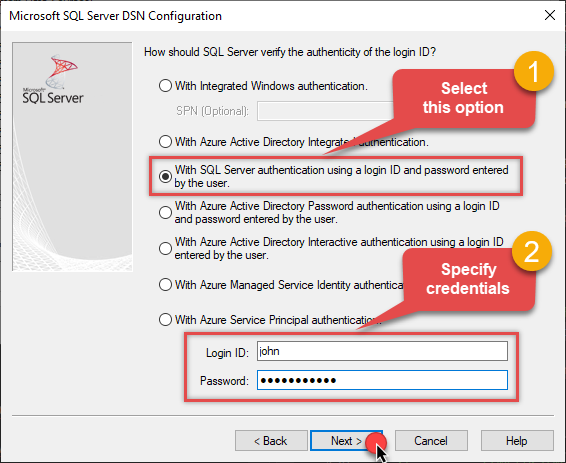

Proceed with authentication part:

- Select SQL Server authentication

-

In Login ID field enter the user name you used in Data Gateway, e.g.,

john - Set Password to the one you configured in Data Gateway

-

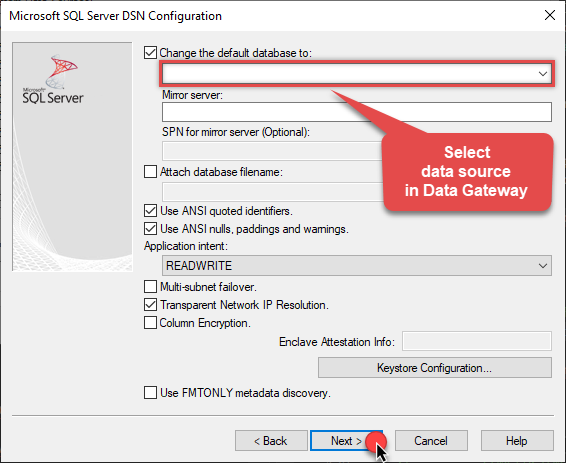

Then set the default database property to

CosmosDbDSN(the one we used in Data Gateway):CosmosDbDSN

-

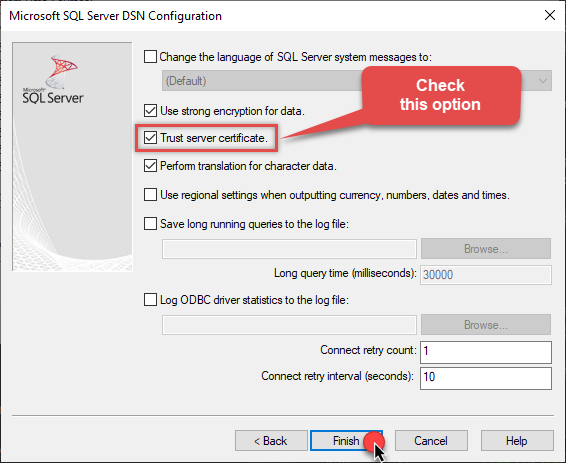

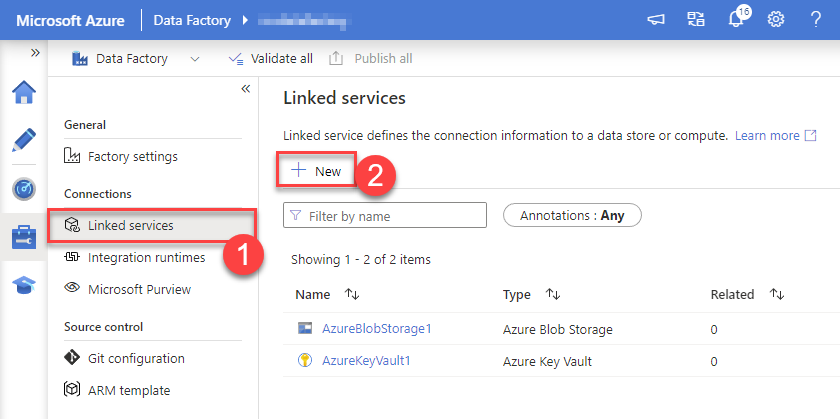

Continue by checking Trust server certificate option:

-

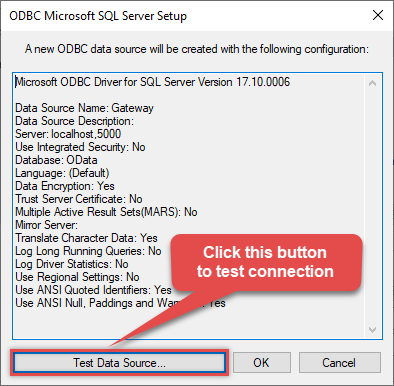

Once you do that, test the connection:

-

If connection is successful, everything is good:

-

Done!

We are ready to move to the final step. Let's do it!
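The DSN settings entered above map onto a standard SQL Server ODBC connection string. As a minimal sketch, the helper below assembles that string from the same values (host and port separated by a comma, the gateway data source as the database, and the gateway user). The function names are hypothetical, and `preview_rows` assumes the third-party `pyodbc` package and a running Data Gateway, so treat it as an optional way to test connectivity outside Azure Data Factory.

```python
def gateway_connection_string(host, port, database, user, password):
    """Build a DSN-less connection string for the Data Gateway."""
    parts = [
        ("DRIVER", "{ODBC Driver 17 for SQL Server}"),
        # Hostname and port are separated by a comma, not a colon.
        ("SERVER", "{},{}".format(host, port)),
        # The "database" is the data source name defined in Data Gateway.
        ("DATABASE", database),
        ("UID", user),
        ("PWD", password),
        ("TrustServerCertificate", "yes"),
    ]
    return ";".join("{}={}".format(key, value) for key, value in parts)


def preview_rows(connection_string, query, limit=5):
    """Run a query and return the first rows as dictionaries."""
    import pyodbc  # third-party package, imported lazily so the helper above works without it

    connection = pyodbc.connect(connection_string, autocommit=True)
    try:
        cursor = connection.cursor()
        cursor.execute(query)
        columns = [column[0] for column in cursor.description]
        return [dict(zip(columns, row)) for row in cursor.fetchmany(limit)]
    finally:
        connection.close()


conn_str = gateway_connection_string("localhost", 5000, "CosmosDbDSN", "john", "your-password")
print(conn_str)

# Example call (requires pyodbc and a running Data Gateway):
# rows = preview_rows(conn_str, "#DirectSQL SELECT * FROM root where root.id !=null order by root._ts desc")
```

Passing `"DSN=GatewayDSN;UID=john;PWD=your-password"` as the connection string instead should exercise the DSN created in the steps above.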

Accessing data in Azure Data Factory (Pipeline) via Data Gateway

Finally, we are ready to read data from Cosmos DB in Azure Data Factory (Pipeline) via Data Gateway. Follow these final steps:

- Go back to Azure Data Factory (Pipeline).

- Create a New Linked service:

  - In the Manage section (left menu), under Connections, select Linked services.

  - Click + New to create a new Linked service based on ODBC.

- Select ODBC service.

- Configure the new ODBC service. Use the same DSN name we used in the previous step (GatewayDSN) and copy it to the Connection string box:

  DSN=GatewayDSN

- Read the data the same way we discussed at the beginning of this article.

- That's it!

Now you can connect to Cosmos DB data in Azure Data Factory (Pipeline) via the Data Gateway.

If you are prompted for credentials, use the ones you configured in Data Gateway, e.g. john and your password.

Actions supported by Cosmos DB Connector

Learn how to perform common Cosmos DB actions directly in Azure Data Factory (Pipeline) with these how-to guides:

- Create a document in the container

- Create Permission Token for a User (One Table)

- Create User for Database

- Delete a Document by Id

- Get All Documents for a Table

- Get All Users for a Database

- Get Database Information by Id or Name

- Get Document by Id

- Get List of Databases

- Get List of Tables

- Get table information by Id or Name

- Get table partition key ranges

- Get User by Id or Name

- Query documents using Cosmos DB SQL query language

- Update Document in the Container

- Upsert a document in the container

- Make Generic API Request

- Make Generic API Request (Bulk Write)

Conclusion

In this article we showed you how to connect to Cosmos DB in Azure Data Factory (Pipeline) and integrate data without any coding, saving you time and effort.

We encourage you to download Cosmos DB Connector for Azure Data Factory (Pipeline) and see how easy it is to use it for yourself or your team.

If you have any questions, feel free to contact the ZappySys support team.
