Endpoint Make Generic API Request

Name

generic_request

Description

This is a generic endpoint. Use it when an action you need is not implemented by the connector. Enter a partial URL (required), and optionally the Body, Method, Headers, and so on. Most parameters other than the URL are optional.

Related Tables

Generic Table (Bulk Read / Write)

Parameters

| Parameter | Required | Options |
|---|---|---|
| HTTP - Url or File Path. The API URL goes here. You can enter a full URL or a partial URL relative to the Base URL. If it is a full URL, its domain name must be part of ServiceURL or part of TrustedDomains. | YES | |
| HTTP - Request Body | | |
| HTTP - Is MultiPart Body (Pass File data/Mixed Key/value). Set this option to upload file(s) as raw file data or with multi-part encoding. Details follow this table. | | |
| HTTP - Headers (e.g. hdr1:aaa \|\| hdr2:bbb). Headers for the request. To enter multiple headers, use a double pipe or a new line after each {header-name}:{value} pair. | | |
| Parser - Filter (e.g. $.rows[*] ). Enter a filter to extract an array from the response. Example: $.rows[*] --OR-- $.customers[*].orders[*]. Check your response document to find the hierarchy you want to extract. | | |
| Download - Enable reading binary data | | |
| Download - File overwrite mode | | |
| Download - Save file path | | |
| Download - Enable raw output mode as single row | | |
| Download - Raw output data RowTemplate | | |
| Download - Request Timeout (Milliseconds) | | |

HTTP - Is MultiPart Body (Pass File data/Mixed Key/value)

Set this option if you want to upload file(s) using either raw file data (i.e., POST raw file data) or the multi-part encoding method (i.e., Content-Type: multipart/form-data). A multi-part request lets you mix key/value pairs and file uploads in the same request. A raw upload allows only a single file to be uploaded (without any key/value data).

Raw Upload (Content-Type: application/octet-stream)

To upload a single file in raw mode, check this option and specify the full file path, starting with the @ sign, in the Body (e.g. @c:\data\myfile.zip).

Form-Data / Multipart Upload (Content-Type: multipart/form-data)

To treat your request data as multi-part fields, specify key/value pairs in the RequestData field (i.e., Body). Enter each key/value pair on a new line, with key and value separated by an equal sign (=). Leading and trailing spaces are ignored, and blank lines are also ignored. If a field value contains special characters, use escape sequences (e.g., \r\n for a new line, \t for a tab, \@ for an at sign). When the value of a field starts with the at sign (@), it is automatically treated as a file you want to upload.

By default, the file content type is determined from the file extension; however, you can supply a content type manually for any field using this format: [YourFileFieldName.Content-Type=some-content-type]. File upload fields always include Content-Type in the request by default; non-file fields do not, unless you supply it manually. If you do not want a Content-Type header for a field, supply a blank Content-Type to exclude the header altogether (e.g., SomeFieldName.Content-Type=).

If an API requires you to pass Content-Type: multipart/mixed rather than multipart/form-data, manually set Request Header => Content-Type: multipart/mixed (the value must start with multipart/ or it will be ignored).

The example below uploads multiple files along with additional fields. Content-Type is supplied for file2 and SomeField1; all other fields use the default content type.

    file1=@c:\data\Myfile1.txt
    file2=@c:\data\Myfile2.json
    file2.Content-Type=application/json
    SomeField1=aaaaaaa
    SomeField1.Content-Type=text/plain
    SomeField2=12345
    SomeFieldWithNewLineAndTab=This is line1\r\nThis is line2\r\nThis is \ttab \ttab \ttab
    SomeFieldStartingWithAtSign=\@MyTwitterHandle
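The key/value rules described for the multi-part Body can be illustrated with a small parser. This is only a sketch of the documented rules, not the connector's implementation: the names `Part`, `parse_multipart_body`, and `_unescape` are hypothetical, file paths are deliberately left unescaped (an assumption), and the extension-based content type guess uses Python's `mimetypes` module as a stand-in for whatever lookup the connector performs.

```python
import mimetypes
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class Part:
    name: str
    value: str                           # literal text, or a file path when is_file is True
    is_file: bool = False
    content_type: Optional[str] = None   # None means "send no Content-Type header"


def _unescape(text: str) -> str:
    """Expand only the escapes named in the description: \\r, \\n, \\t and \\@."""
    simple = {"r": "\r", "n": "\n", "t": "\t", "@": "@"}
    out = []
    i = 0
    while i < len(text):
        if text[i] == "\\" and i + 1 < len(text) and text[i + 1] in simple:
            out.append(simple[text[i + 1]])
            i += 2
        else:
            out.append(text[i])
            i += 1
    return "".join(out)


def parse_multipart_body(body: str) -> Dict[str, Part]:
    parts: Dict[str, Part] = {}
    overrides: Dict[str, str] = {}
    for raw in body.splitlines():
        line = raw.strip()                       # leading/trailing spaces are ignored
        if not line or "=" not in line:          # blank lines are ignored
            continue
        key, value = (s.strip() for s in line.split("=", 1))
        if key.endswith(".Content-Type"):
            overrides[key[: -len(".Content-Type")]] = value
        elif value.startswith("@"):
            # A leading @ marks a file upload; the path is kept verbatim (assumption).
            path = value[1:]
            guessed = mimetypes.guess_type(path)[0] or "application/octet-stream"
            parts[key] = Part(key, path, True, guessed)
        else:
            parts[key] = Part(key, _unescape(value))
    for name, ctype in overrides.items():
        if name in parts:
            parts[name].content_type = ctype or None   # blank value drops the header
    return parts


example_body = "\n".join([
    r"file1=@c:\data\Myfile1.txt",
    r"file2=@c:\data\Myfile2.json",
    "file2.Content-Type=application/json",
    "SomeField1=aaaaaaa",
    "SomeField1.Content-Type=text/plain",
    "SomeField2=12345",
    r"SomeFieldStartingWithAtSign=\@MyTwitterHandle",
])
parts = parse_multipart_body(example_body)
for part in parts.values():
    print(part)
```

Applying the Content-Type overrides in a second pass means the order of lines in the Body does not matter, which seems the least surprising reading of the description.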

Output Columns

| Label | Data Type (SSIS) | Data Type (SQL) | Length | Description |
|---|---|---|---|---|
| There are no Static columns defined for this endpoint. This endpoint detects columns dynamically at runtime. | | | | |

Input Columns

| Label | Data Type (SSIS) | Data Type (SQL) | Length | Description |
|---|---|---|---|---|
| There are no Static columns defined for this endpoint. This endpoint detects columns dynamically at runtime. | | | | |

Examples

SSIS

Use the OneDrive Connector in the API Source or API Destination SSIS Data Flow components to read or write data.

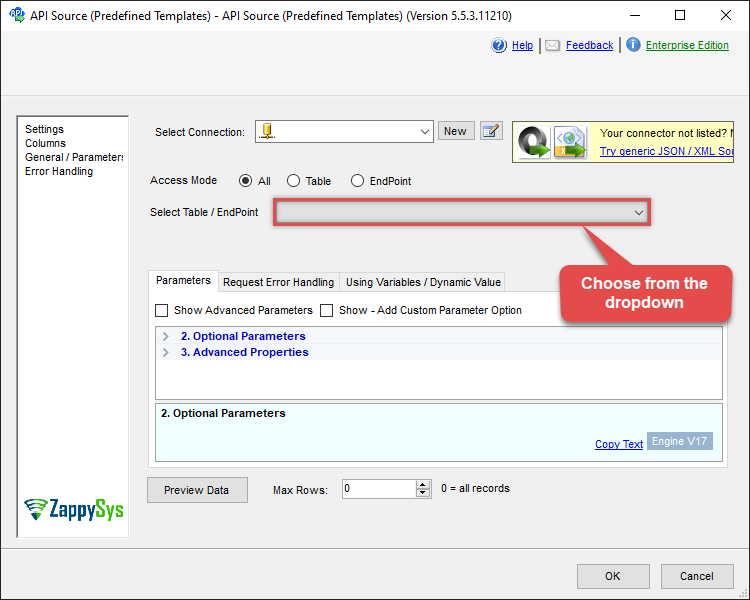

API Source

This endpoint belongs to the Generic Table (Bulk Read / Write) table, so it is better to use that table than to access the endpoint directly:

| Required Parameters | |
|---|---|
| HTTP - Url or File Path | Fill-in the parameter... |
| HTTP - Request Method | Fill-in the parameter... |

| Optional Parameters | |
|---|---|
| HTTP - Request Body | |
| HTTP - Is MultiPart Body (Pass File data/Mixed Key/value) | |
| HTTP - Request Format (Content-Type) | ApplicationJson |
| HTTP - Headers (e.g. hdr1:aaa \|\| hdr2:bbb) | Accept: */* \|\| Cache-Control: no-cache |
| Parser - Response Format (Default=Json) | Default |
| Parser - Filter (e.g. $.rows[*] ) | |
| Parser - Encoding | |
| Parser - CharacterSet | |
| Download - Enable reading binary data | False |
| Download - File overwrite mode | AlwaysOverwrite |
| Download - Save file path | |
| Download - Enable raw output mode as single row | False |
| Download - Raw output data RowTemplate | {Status:'Downloaded'} |
| Download - Request Timeout (Milliseconds) | |
| General - Enable Custom Search/Replace | |
| General - SearchFor (e.g. (\d)-(\d)--regex) | |
| General - ReplaceWith (e.g. $1-***) | |
| General - File Compression Type | |
| General - Date Format | |
| General - Enable Big Number Handling | False |
| General - Wait time (Ms) - Helps to slow down pagination (Use for throttling) | 0 |
| JSON/XML - ExcludedProperties (e.g. meta,info) | |
| JSON/XML - Flatten Small Array (Not preferred for more than 10 items) | |
| JSON/XML - Max Array Items To Flatten | 10 |
| JSON/XML - Array Transform Type | |
| JSON/XML - Array Transform Column Name Filter | |
| JSON/XML - Array Transform Row Value Filter | |
| JSON/XML - Array Transform Enable Custom Columns | |
| JSON/XML - Enable Pivot Transform | |
| JSON/XML - Array Transform Custom Columns | |
| JSON/XML - Pivot Path Replace With | |
| JSON/XML - Enable Pivot Path Search Replace | False |
| JSON/XML - Pivot Path Search For | |
| JSON/XML - Include Pivot Path | False |
| JSON/XML - Throw Error When No Match for Filter | False |
| JSON/XML - Parent Column Prefix | |
| JSON/XML - Include Parent When Child Null | False |
| Pagination - Mode | |
| Pagination - Attribute Name (e.g. page) | |
| Pagination - Increment By (e.g. 100) | 1 |
| Pagination - Expression for Next URL (e.g. $.nextUrl) | |
| Pagination - Wait time after each request (milliseconds) | 0 |
| Pagination - Max Rows Expr | |
| Pagination - Max Pages Expr | |
| Pagination - Max Rows DataPath Expr | |
| Pagination - Max Pages | 0 |
| Pagination - End Rules | |
| Pagination - Next URL Suffix | |
| Pagination - Next URL End Indicator | |
| Pagination - Stop Indicator Expr | |
| Pagination - Current Page | |
| Pagination - End Strategy Type | DetectBasedOnRecordCount |
| Pagination - Stop based on this Response StatusCode | |
| Pagination - When EndStrategy Condition Equals | True |
| Pagination - Max Response Bytes | 0 |
| Pagination - Min Response Bytes | 0 |
| Pagination - Error String Match | |
| Pagination - Enable Page Token in Body | False |
| Pagination - Placeholders (e.g. {page}) | |
| Pagination - Has Different NextPage Info | False |
| Pagination - First Page Body Part | |
| Pagination - Next Page Body Part | |
| Csv - Column Delimiter | , |
| Csv - Has Header Row | True |
| Csv - Throw error when column count mismatch | False |
| Csv - Throw error when no record found | False |
| Csv - Allow comments (i.e. line starts with # treat as comment and skip line) | False |
| Csv - Comment Character | # |
| Csv - Skip rows | 0 |
| Csv - Ignore Blank Lines | True |
| Csv - Skip Empty Records | False |
| Csv - Skip Header Comment Rows | 0 |
| Csv - Trim Headers | False |
| Csv - Trim Fields | False |
| Csv - Ignore Quotes | False |
| Csv - Treat Any Blank Value As Null | False |
| Xml - ElementsToTreatAsArray | |

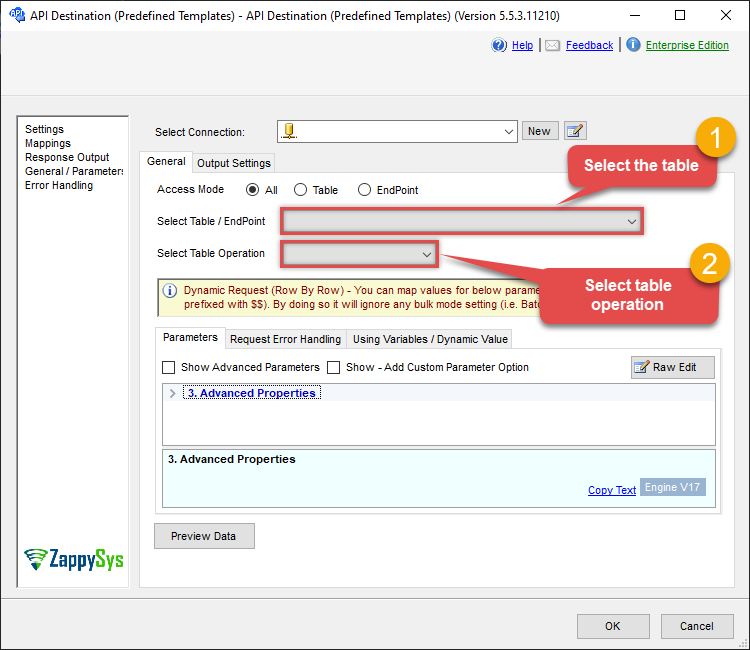
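The default HTTP - Headers value listed above, Accept: */* || Cache-Control: no-cache, packs two headers into one string. As a rough illustration of how such a string breaks down into individual headers (double pipe or new line between pairs, colon between name and value), here is a minimal sketch. The function name `parse_headers` is hypothetical, and skipping entries that lack a colon is an assumption, not documented connector behavior.

```python
from typing import Dict


def parse_headers(spec: str) -> Dict[str, str]:
    """Split a 'name:value || name:value' string into a header dictionary."""
    headers: Dict[str, str] = {}
    # Treat the double pipe and the new line as equivalent separators.
    for chunk in spec.replace("||", "\n").splitlines():
        chunk = chunk.strip()
        if not chunk:
            continue
        # Split on the first colon only, so values may contain colons themselves.
        name, sep, value = chunk.partition(":")
        if not sep:
            continue  # assumption: entries without a colon are skipped
        headers[name.strip()] = value.strip()
    return headers


headers = parse_headers("Accept: */* || Cache-Control: no-cache")
print(headers)
```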

API Destination

This endpoint belongs to the Generic Table (Bulk Read / Write) table, so it is better to use that table than to access the endpoint directly. Use this table and table-operation pair to make a generic API request:

| Required Parameters | |
|---|---|
| HTTP - Url or File Path | Fill-in the parameter... |
| HTTP - Request Method | Fill-in the parameter... |

| Optional Parameters | |
|---|---|
| HTTP - Request Body | |
| HTTP - Is MultiPart Body (Pass File data/Mixed Key/value) | |
| HTTP - Request Format (Content-Type) | ApplicationJson |
| HTTP - Headers (e.g. hdr1:aaa \|\| hdr2:bbb) | Accept: */* \|\| Cache-Control: no-cache |
| Parser - Response Format (Default=Json) | Default |
| Parser - Filter (e.g. $.rows[*] ) | |
| Parser - Encoding | |
| Parser - CharacterSet | |
| Download - Enable reading binary data | False |
| Download - File overwrite mode | AlwaysOverwrite |
| Download - Save file path | |
| Download - Enable raw output mode as single row | False |
| Download - Raw output data RowTemplate | {Status:'Downloaded'} |
| Download - Request Timeout (Milliseconds) | |
| General - Enable Custom Search/Replace | |
| General - SearchFor (e.g. (\d)-(\d)--regex) | |
| General - ReplaceWith (e.g. $1-***) | |
| General - File Compression Type | |
| General - Date Format | |
| General - Enable Big Number Handling | False |
| General - Wait time (Ms) - Helps to slow down pagination (Use for throttling) | 0 |
| JSON/XML - ExcludedProperties (e.g. meta,info) | |
| JSON/XML - Flatten Small Array (Not preferred for more than 10 items) | |
| JSON/XML - Max Array Items To Flatten | 10 |
| JSON/XML - Array Transform Type | |
| JSON/XML - Array Transform Column Name Filter | |
| JSON/XML - Array Transform Row Value Filter | |
| JSON/XML - Array Transform Enable Custom Columns | |
| JSON/XML - Enable Pivot Transform | |
| JSON/XML - Array Transform Custom Columns | |
| JSON/XML - Pivot Path Replace With | |
| JSON/XML - Enable Pivot Path Search Replace | False |
| JSON/XML - Pivot Path Search For | |
| JSON/XML - Include Pivot Path | False |
| JSON/XML - Throw Error When No Match for Filter | False |
| JSON/XML - Parent Column Prefix | |
| JSON/XML - Include Parent When Child Null | False |
| Pagination - Mode | |
| Pagination - Attribute Name (e.g. page) | |
| Pagination - Increment By (e.g. 100) | 1 |
| Pagination - Expression for Next URL (e.g. $.nextUrl) | |
| Pagination - Wait time after each request (milliseconds) | 0 |
| Pagination - Max Rows Expr | |
| Pagination - Max Pages Expr | |
| Pagination - Max Rows DataPath Expr | |
| Pagination - Max Pages | 0 |
| Pagination - End Rules | |
| Pagination - Next URL Suffix | |
| Pagination - Next URL End Indicator | |
| Pagination - Stop Indicator Expr | |
| Pagination - Current Page | |
| Pagination - End Strategy Type | DetectBasedOnRecordCount |
| Pagination - Stop based on this Response StatusCode | |
| Pagination - When EndStrategy Condition Equals | True |
| Pagination - Max Response Bytes | 0 |
| Pagination - Min Response Bytes | 0 |
| Pagination - Error String Match | |
| Pagination - Enable Page Token in Body | False |
| Pagination - Placeholders (e.g. {page}) | |
| Pagination - Has Different NextPage Info | False |
| Pagination - First Page Body Part | |
| Pagination - Next Page Body Part | |
| Csv - Column Delimiter | , |
| Csv - Has Header Row | True |
| Csv - Throw error when column count mismatch | False |
| Csv - Throw error when no record found | False |
| Csv - Allow comments (i.e. line starts with # treat as comment and skip line) | False |
| Csv - Comment Character | # |
| Csv - Skip rows | 0 |
| Csv - Ignore Blank Lines | True |
| Csv - Skip Empty Records | False |
| Csv - Skip Header Comment Rows | 0 |
| Csv - Trim Headers | False |
| Csv - Trim Fields | False |
| Csv - Ignore Quotes | False |
| Csv - Treat Any Blank Value As Null | False |
| Xml - ElementsToTreatAsArray | |
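The Parser - Filter parameter takes an expression such as $.rows[*] or $.customers[*].orders[*] to pick the array that becomes the output rows. To make the effect of those two example filters concrete, the sketch below evaluates them against a sample response. It is an illustration under assumptions: `apply_filter` is a hypothetical helper that supports only property access and the [*] wildcard, the sample response is invented, and the connector's own filter engine may accept more syntax than this.

```python
import re
from typing import Any, List

# One path step: either ".name" (property access) or "[*]" (every array item).
_TOKEN = re.compile(r"\.([A-Za-z_][\w-]*)|\[\*\]")


def apply_filter(document: Any, path: str) -> List[Any]:
    """Return every node matched by a filter made of .name and [*] steps."""
    if not path.startswith("$"):
        raise ValueError("filter must start with $")
    current = [document]
    pos = 1
    while pos < len(path):
        match = _TOKEN.match(path, pos)
        if match is None:
            raise ValueError(f"unsupported filter syntax at position {pos}")
        name = match.group(1)
        if name is not None:
            # Property step: keep the named child of each object that has it.
            current = [node[name] for node in current
                       if isinstance(node, dict) and name in node]
        else:
            # Wildcard step: flatten each array into its items.
            current = [item for node in current
                       if isinstance(node, list) for item in node]
        pos = match.end()
    return current


# Invented sample response, used only to show the shape of the result.
response = {
    "rows": [{"id": 10}, {"id": 11}],
    "customers": [
        {"name": "A", "orders": [{"id": 1}, {"id": 2}]},
        {"name": "B", "orders": [{"id": 3}]},
    ],
}
rows = apply_filter(response, "$.rows[*]")
orders = apply_filter(response, "$.customers[*].orders[*]")
print(rows)
print(orders)
```

With the nested filter, the orders of all customers are flattened into one list, which matches the idea of extracting a single array of rows from a hierarchical response.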

ODBC application

Use these SQL queries in your ODBC application data source:

Get __DynamicRequest__

SELECT * FROM __DynamicRequest__

The generic_request endpoint belongs to the __DynamicRequest__ table and can therefore be used through that table.

SQL Server

Use these SQL queries in SQL Server after you create a data source in Data Gateway:

Get __DynamicRequest__

DECLARE @MyQuery NVARCHAR(MAX) = 'SELECT * FROM __DynamicRequest__';

EXEC (@MyQuery) AT [LS_TO_ONEDRIVE_IN_GATEWAY];

The generic_request endpoint belongs to the __DynamicRequest__ table and can therefore be used through that table.