| Property Name |

Description |

| LoggingMode |

LoggingMode determines how much information is logged during Package Execution. Set Logging mode to Debugging for maximum log.

Available Options (Use numeric value listed in bracket if you have to define expression on this property (for dynamic behavior).

| Option |

Description |

| Normal [0] |

Normal |

| Medium [1] |

Medium |

| Detailed [2] |

Detailed |

| Debugging [3] |

Debugging |

|

| PrefixTimestamp |

When you enable this property it will prefix timestamp before Log messages. |

| TreatBlankNumberAsNull |

Treat empty string as NULL for any numeric data types |

| TreatBlankBoolAsNull |

Treat empty string as NULL for bool data types |

| TreatBlankDateAsNull |

Treat empty string as NULL for any date/time data types |

| Encoding |

Encoding of source file

Available Options (Use numeric value listed in bracket if you have to define expression on this property (for dynamic behavior).

| Option |

Description |

| Default [0] |

Default |

| ASCII [1] |

ASCII |

| UTF8 [2] |

UTF-8 |

| UTF16 [3] |

UTF-16 LE (i.e. Unicode Little Endian) |

| UTF32 [4] |

UTF-32 |

| UTF8WithoutBOM [5] |

UTF-8 Without BOM |

| UTF32WithoutBOM [6] |

UTF-32 Without BOM |

| UTF7 [7] |

UTF-7 |

| UTF7WithoutBOM [8] |

UTF-7 Without BOM |

| UTF16WithoutBOM [9] |

UTF-16 Without BOM |

| BigEndian [10] |

UTF-16 BE (i.e. Unicode Big Endian) |

| BigEndianWithoutBOM [11] |

UTF-16 BE Without BOM |

|

| CharacterSet |

Character set for text (e.g. windows-1250 ) |

| Culture |

Culture code (e.g. pt-BT). This helps to parse culture specific number formats (e.g. In some culture you may have comma rather than decimal points 0.1 can be 0,1) |

| MaxRows |

Maximum JSON records to fetch. Set this value to 0 for all records |

| EnableCustomReplace |

Enables custom search / replace in the document text after its read from the file/url or direct string. This replace operation happens before its parsed. This option can be useful for custom escape sequence in source document which is causing issue in the parser. You can replace such unwanted characters fore parser starts parsing the text. |

| SearchFor |

String you like to search for (Only valid when EnableCustomReplace option is turned on). If you want to enable Regular Expression pattern search then add --regex or --regex-ic (for case-insensitive search) at the end of your search string (e.g. ORDER-\d+--regex OR ORDER-\d+--regex-ic (case-insensitive search) ) |

| ReplaceWith |

String you like to replace with (Only valid when EnableCustomReplace option is turned on). If you added --regex or --regex-ic at the end of your SearchFor string then ReplaceWith can use special placeholders (i.e. $1, $2...) based on regular expression groups. For example you SearchFor=(\w+)(@\w+.com) to search for emails then to mask emails you can something like this for ReplaceWith = ****$2 (where $2 is domain part and $1 is before @) |

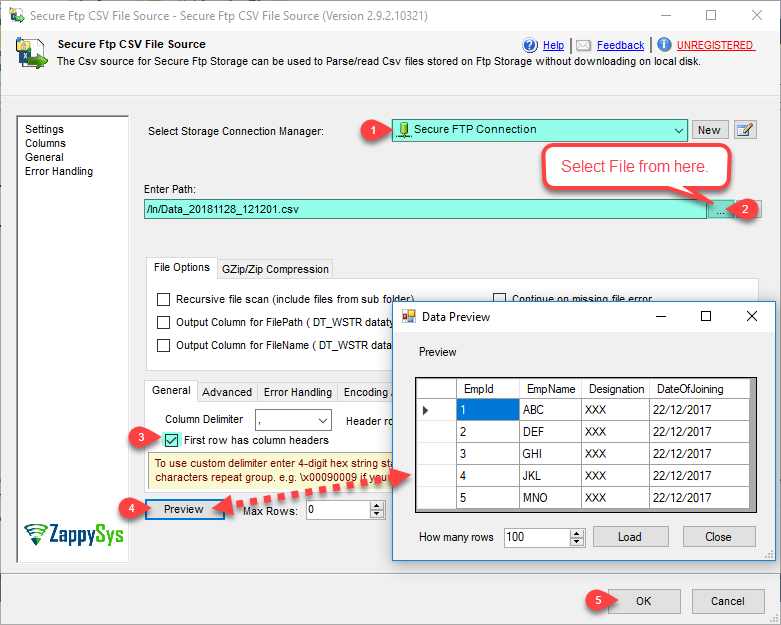

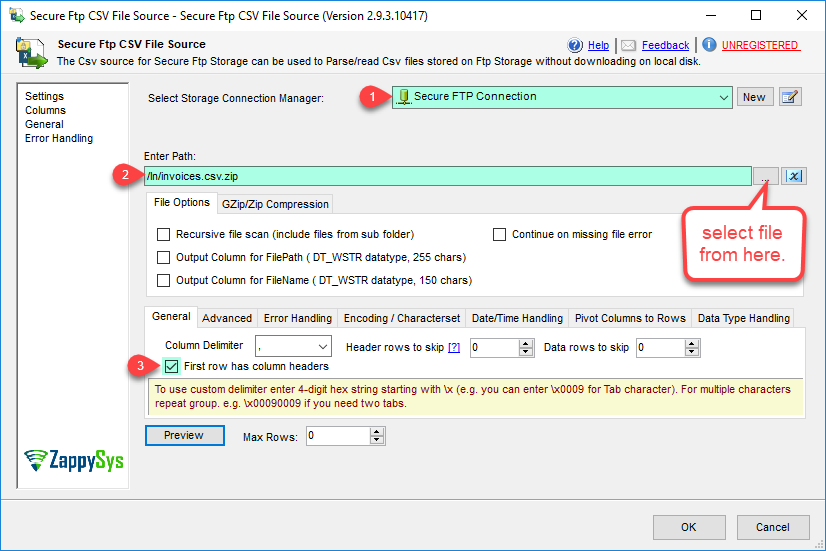

| ColumnDelimiter |

Column delimiter for data you like to parse. To use custom delimiter type it directly or enter 4-digit hex string starting with \x (e.g. you can enter \x0009 for Tab character). For multiple characters repeat group. e.g. \x00090009 if you need two tabs. |

| HasColumnHeaderRow |

Column delimiter for data you like to parse. |

| ThrowErrorOnColumnCountMismatch |

Throw error if record has different number of columns than actual columns detected based on first row |

| ThrowErrorOnNoRecordFound |

Throw error if no record found or file is blank |

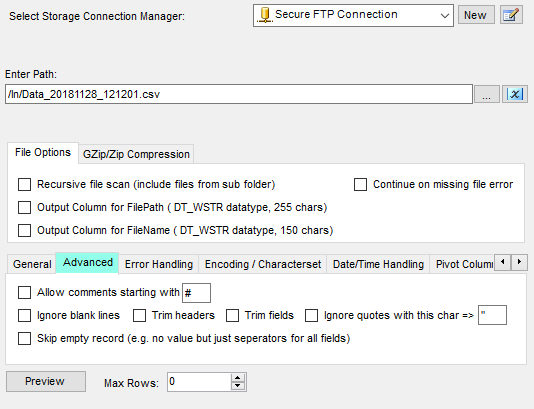

| AllowComment |

Allow comment lines which can be skipped by parser. When comment line found row is skipped. See CommentCharacter to configure first character for commented line. |

| CommentCharacter |

Allow lines with comment. When comment line found row is skipped. See LineCommentCharacter property to configure first character for commented line. |

| SkipRows |

Total data rows you like to skip (after header row) |

| SkipHeaderCommentRows |

Total rows you like to skip before header row. If its header less file then skip initial N rows (before any data row). |

| TreatBlankAsNull |

When this option is enabled, blank values for any column is treated as null so for string type rather than empty value you will see null value in the output. |

| IgnoreBlankLines |

When this option is enabled, blank lines are skipped. |

| SkipEmptyRecords |

When this option is enabled, any row with empty values in all fields is skipped (e.g. , , , , ). |

| TrimHeaders |

Trim column names if whitespace found before or after name |

| TrimFields |

Trim value for each field if whitespace found before or after |

| IgnoreQuotes |

Ignore quote character and consider it part of actual value |

| QuoteCharacter |

Quote character for quoted values. |

| DirectPath |

JSON file path (e.g. c:\data\myfile.json) or pattern to process multiple files (e.g. c:\data\*.json) |

| Recursive |

Include files from sub folders too. |

| EnableMultiPathMode |

Enable this option to treat DirectPath as list of paths / urls (separated by new line or double colon :: ). This option is very useful if you have many URLs / Paths with similar data structure and you want to return response from all URLs in one step (UNION all URLs with single dataset). Examples: http://someurl1::http://someurl2 --OR-- c:\file1::c:\file2 --OR-- c:\file1::https://someurl |

| ContinueOnFileNotFoundError |

By default process stops with error if specified local file is not found. Set this property to true if you wish to continue rather than throwing file not found error. |

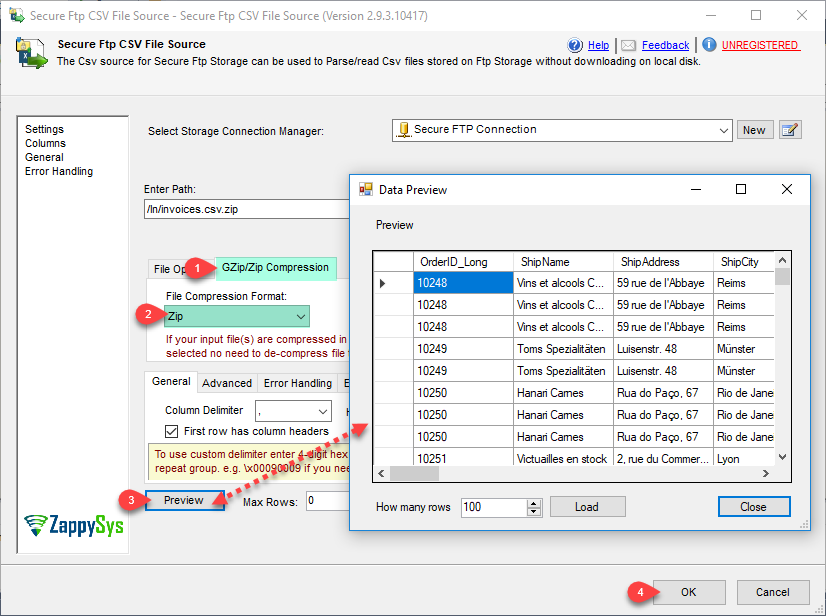

| FileCompressionType |

Compression format for source file (e.g. gzip, zip)

Available Options (Use numeric value listed in bracket if you have to define expression on this property (for dynamic behavior).

| Option |

Description |

| None [0] |

None |

| GZip [1] |

GZip |

| Zip [2] |

Zip |

| TarGZip [3] |

TarGZip |

|

| DateFormatString |

Specifies how custom date formatted strings are parsed when reading JSON. |

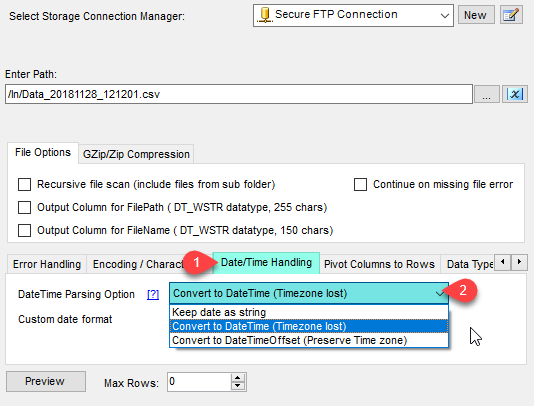

| DateParseHandling |

Specifies how date formatted strings, e.g. Date(1198908717056) and 2012-03-21T05:40Z, are parsed when reading JSON.

Available Options (Use numeric value listed in bracket if you have to define expression on this property (for dynamic behavior).

| Option |

Description |

| None [0] |

Keep date as string |

| DateTime [1] |

Convert to DateTime (Timezone lost) |

| DateTimeOffset [2] |

Convert to DateTimeOffset (Preserve Time zone) |

|

| FloatParseHandling |

Specifies how decimal values are parsed when reading JSON. Change this setting to Decimal if you like to have large precision / scale.

Available Options (Use numeric value listed in bracket if you have to define expression on this property (for dynamic behavior).

| Option |

Description |

| Double [0] |

Default (Double [~15-17 digits]) |

| Decimal [1] |

Decimal (High Precision / Scale [~28-29 digits] ) |

|

| OnErrorOutputResponseBody |

When you redirect error to error output by default you get additional information in ErrorMessage column. Check this option if you need exact Response Body (Useful if its in JSON/XML format which needs to be parsed for additional information for later step). |

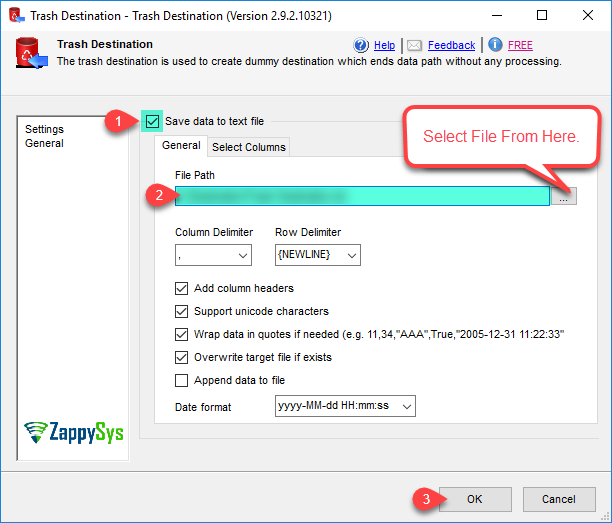

| OutputFilePath |

Set this option to true if you want to output FilePath. This option is ignored when you consume DirectValue or data from Url rather than local files. Output column name will be __FilePath |

| OutputFileName |

Set this option to true if you want to output FileName. This option is ignored when you consume DirectValue or data from Url rather than local files. Output column name will be __FileName |

| EnablePivot |

When this property is true then Column is converted to Row. Pivoted names will appear under Pivot_Name column and values will appear under Pivot_Value field. |

| IncludePivotPath |

When this property is true then one extra column Pivot_Path appears in the output along with Pivot_Name and Pivot_Value. This option is really useful to see parent hierarchy for pivoted value. |

| EnablePivotPathSearchReplace |

Enables custom search/replace function on Pivot_Path before final value appears in the output. This option is only valid when IncludePivotPath=true. |

| PivotPathSearchFor |

Search string (static string or regex pattern) for search/replace operation on Pivot_Path. You can use --regex suffix to treat search string as Regular Expression (e.g. MyData-(\d+)--regex ). To invoke case in-sensitive regex search use --regex. This option is only valid when EnablePivotPathSearchReplace=true. |

| PivotPathReplaceWith |

Replacement string for search/replace operation on Pivot_Path. If you used --regex suffix in PivotPathSearchFor then you can use placeholders like $0, $1, $2... anywhere in this string (e.g. To remove first part of email id and just keep domain part you can do this way. Set PivotPathSearchFor=(\w+)@(\w+.com)--regex, and set current property i.e. PivotPathReplaceWith=***@$2 ). This option is only valid when EnablePivotPathSearchReplace=true. |

| MetaDataScanMode |

Metadata scan mode controls how data type and length is determined. By default few records scanned to determine datatype/length. Changing ScanMode affects length/datatype accuracy.

Available Options (Use numeric value listed in bracket if you have to define expression on this property (for dynamic behavior).

| Option |

Description |

| Auto [0] |

Auto |

| Strict [1] |

Strict - Exact length |

| Guess2x [2] |

Guess2x - 2 times bigger |

| Guess3x [3] |

Guess3x - 3 times bigger |

| Guess4x [4] |

Guess4x - 4 times bigger |

| TreatAsUnicodeString [5] |

Set all columns as string |

| Guess10x [6] |

Guess10x - 10 times bigger |

| TreatStringAsMaxLength [7] |

Set string columns with MAX Length - i.e. DT_WSTR(4000) |

| TreatStringAsBlob [8] |

Set string columns as BLOB - i.e. DT_NTEXT |

|

| MetaDataCustomLength |

Length for all string column. This option is only valid for MetaDataScanMode=Custom |

| MetaDataTreatStringAsAscii |

When this option is true, it detects all string values as DT_STR (Ascii) rather than DT_WSTR (Unicode) |