Salesforce Connector for Azure Data Factory (Pipeline): How to Make Generic API Request

Introduction

In this article we will delve deeper into Salesforce and Azure Data Factory (Pipeline) integration and learn how to make a generic API request. We are continuing from where we left off: by this time, you should have installed ODBC PowerPack, created an ODBC data source, and configured authentication settings in your Salesforce account.

So, let's not waste time and begin.

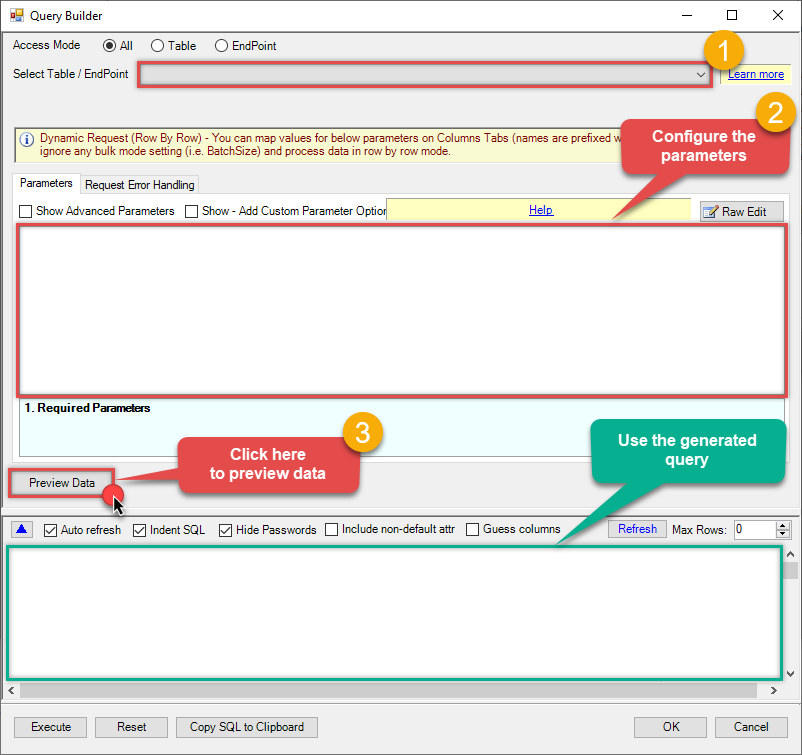

Use Query Builder to generate SQL query


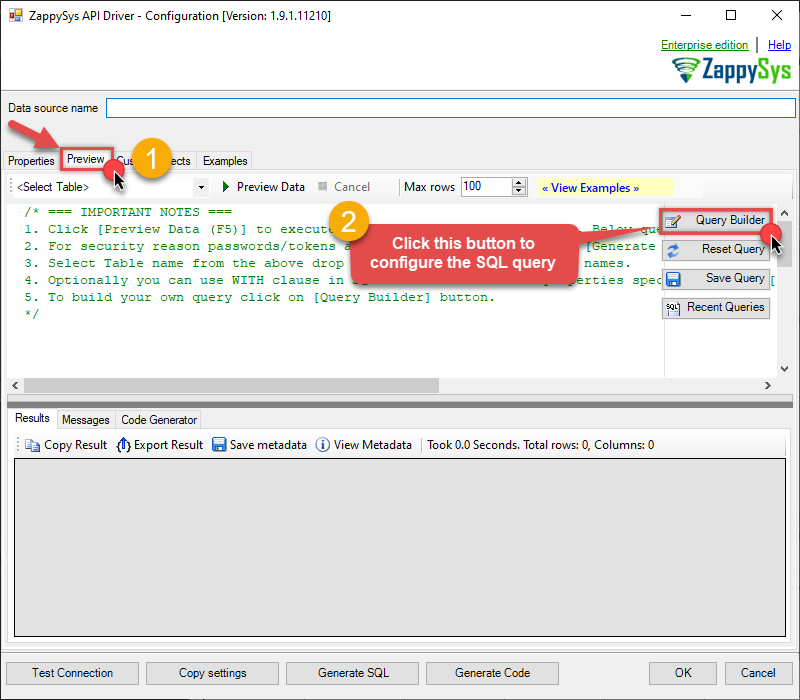

The first thing you have to do is open Query Builder:

ZappySys API Driver - Salesforce (data source: SalesforceDSN)

Then simply select the Make Generic API Request endpoint (action).

Continue by configuring the required parameters. You can also set optional parameters.

Then click the Preview Data button to preview the results.

If you see the results you need, simply copy the generated query:

That's it! You can use this query in Azure Data Factory (Pipeline).

Let's not stop here and explore SQL query examples, including how to use them in Stored Procedures and Views (virtual tables) in the next steps.

SQL query examples

Use these SQL queries in your Azure Data Factory (Pipeline) data source:

How to Get __DynamicRequest__

SELECT * FROM __DynamicRequest__

The generic_request endpoint belongs to the __DynamicRequest__ table and can therefore be queried through that table.
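Before using the query in a pipeline, it can help to run it once against the DSN from a small script on the machine where the driver is installed. The following is a minimal sketch of that check in Python, not an official ZappySys sample. It assumes the third-party pyodbc package is installed and that the DSN is named SalesforceDSN as in this article. The function name preview_rows and its connect parameter are hypothetical, introduced only for illustration.

```python
from typing import Any, Callable, List, Optional, Tuple

# Query taken from this article.
QUERY = "SELECT * FROM __DynamicRequest__"


def preview_rows(
    connection_string: str = "DSN=SalesforceDSN",  # assumed DSN name
    query: str = QUERY,
    limit: int = 5,
    connect: Optional[Callable[[str], Any]] = None,
) -> Tuple[List[str], List[tuple]]:
    """Run the query and return (column names, first `limit` rows)."""
    if connect is None:
        # Imported lazily so the module loads even without pyodbc installed.
        import pyodbc  # third-party package (assumption)

        connect = pyodbc.connect

    conn = connect(connection_string)
    try:
        cursor = conn.cursor()
        cursor.execute(query)
        # description is None when a statement returns no result set.
        columns = [col[0] for col in (cursor.description or [])]
        rows = [tuple(row) for row in cursor.fetchmany(limit)]
        return columns, rows
    finally:
        conn.close()
```

Calling preview_rows() with no arguments connects through the DSN and returns the column names together with the first five rows. If this works locally, the same DSN and query should be usable from the ODBC source in Azure Data Factory.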

Make Generic API Request in Azure Data Factory (Pipeline)

Sign in to Azure Portal:

Open your browser and go to: https://portal.azure.com

Enter your Azure credentials and complete MFA if required.

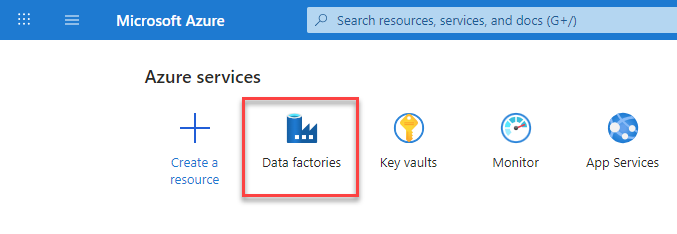

After login, go to Data factories.

-

-

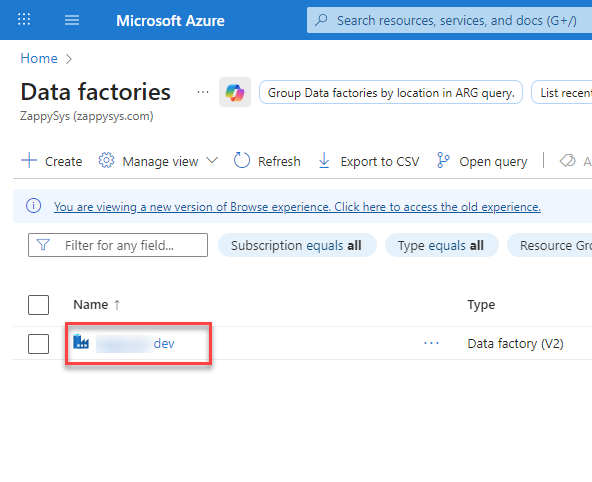

Under Azure Data Factory Resource - Create or select the Data Factory you want to work with.

-

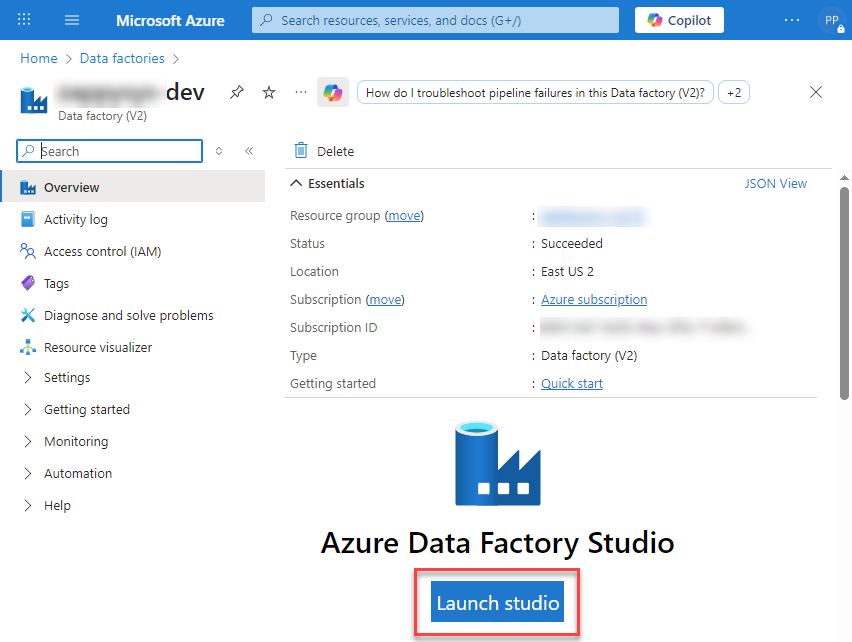

Inside the Data Factory resource page, click Launch studio.


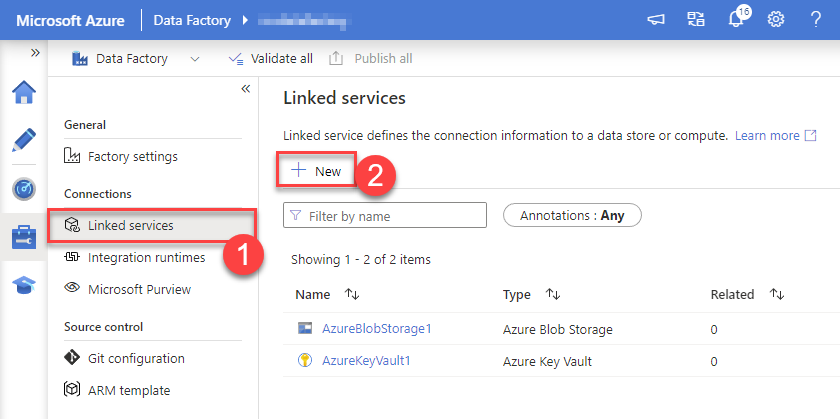

Create a new Linked service:

In the Manage section (left menu), under Connections, select Linked services.

Click + New to create a new Linked service based on ODBC.

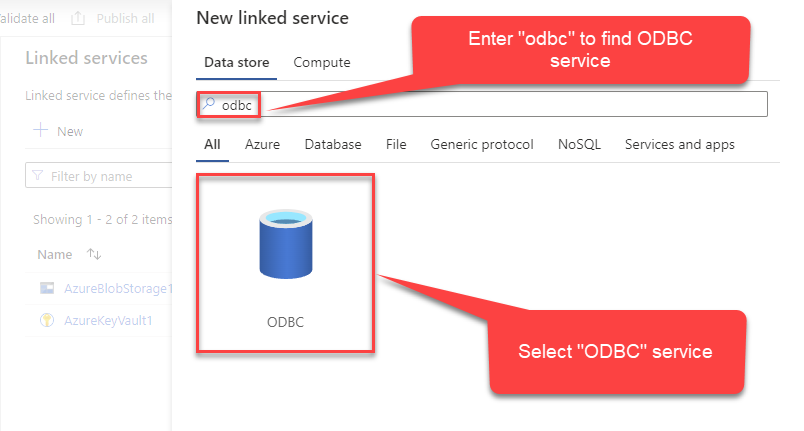

Select ODBC as the linked service type:

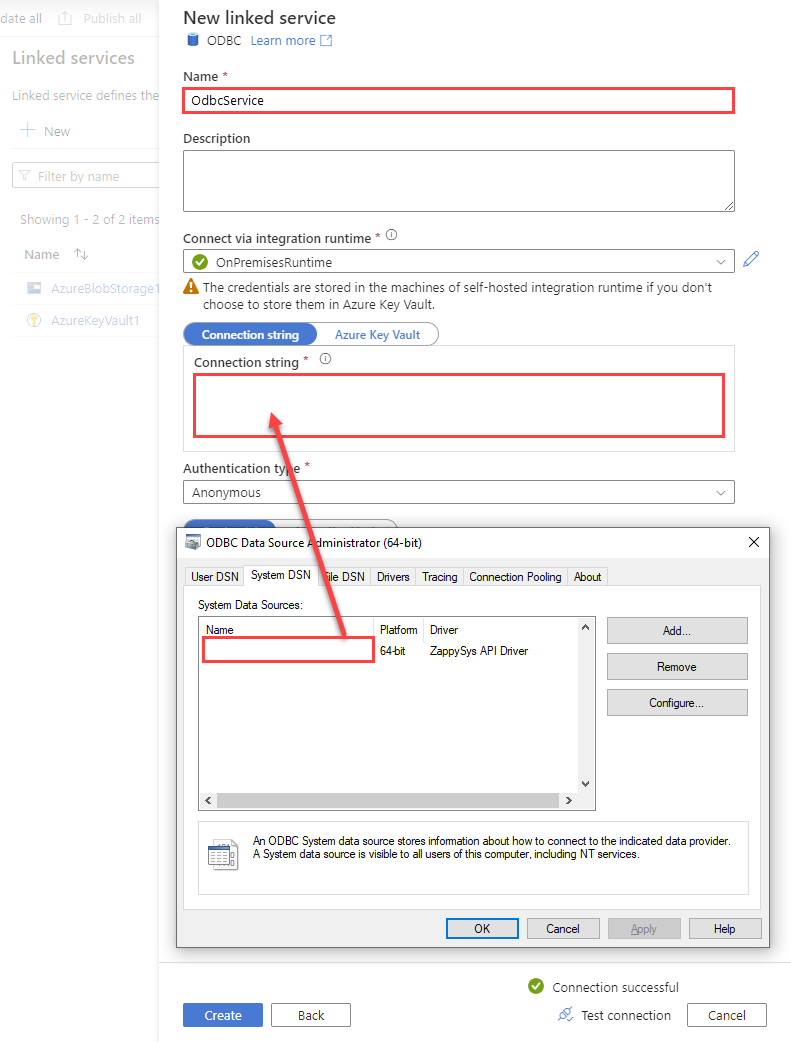

Configure the new ODBC linked service. Take the DSN name we used in the previous step and enter it in the Connection string box:

DSN=SalesforceDSN
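If you prefer to define the linked service as JSON (for example, to keep it in source control), the settings above map to a small definition. The sketch below builds that definition in Python. It assumes the standard ODBC linked service shape used by Azure Data Factory, that the DSN itself holds the credentials (hence Anonymous authentication), and that a self-hosted integration runtime is used. The names OdbcLinkedService and SelfHostedIR are hypothetical placeholders.

```python
import json


def odbc_linked_service(name: str, dsn: str, integration_runtime: str) -> dict:
    """Build an ODBC linked service definition as a plain dict."""
    return {
        "name": name,
        "properties": {
            "type": "Odbc",
            "typeProperties": {
                # Same value as typed into the Connection string box.
                "connectionString": f"DSN={dsn}",
                # Assumption: credentials are stored in the DSN itself.
                "authenticationType": "Anonymous",
            },
            "connectVia": {
                # Hypothetical name of a self-hosted integration runtime.
                "referenceName": integration_runtime,
                "type": "IntegrationRuntimeReference",
            },
        },
    }


linked_service = odbc_linked_service(
    "OdbcLinkedService", "SalesforceDSN", "SelfHostedIR"
)
print(json.dumps(linked_service, indent=2))
```

The printed JSON can be compared with what the Linked services editor shows in its code view.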


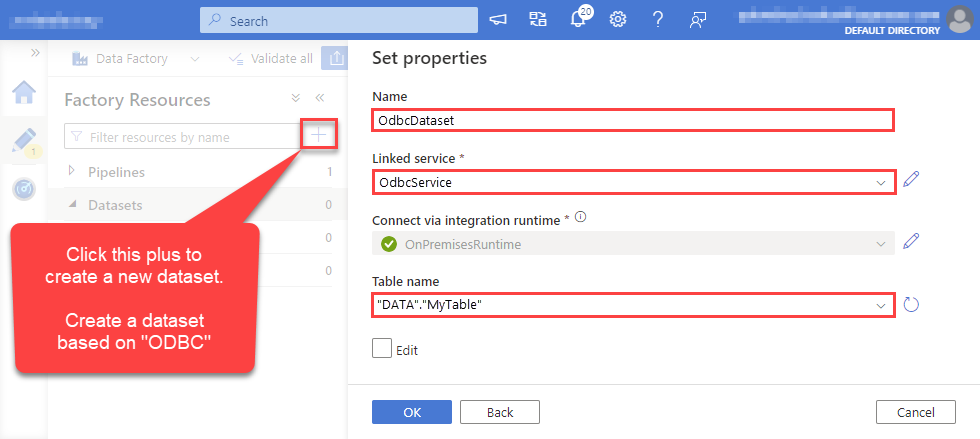

Create an ODBC-based dataset for the ODBC linked service you just created:
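For reference, an ODBC-based dataset can also be described as JSON. The sketch below assumes the standard OdbcTable dataset type and a linked service named OdbcLinkedService (a hypothetical name). The table name is left optional here, on the assumption that the query will be supplied later in the Copy data source.

```python
import json
from typing import Optional


def odbc_dataset(
    name: str, linked_service: str, table_name: Optional[str] = None
) -> dict:
    """Build an ODBC dataset definition as a plain dict."""
    type_properties = {}
    if table_name is not None:
        # Only needed when the source does not provide its own query.
        type_properties["tableName"] = table_name
    return {
        "name": name,
        "properties": {
            "type": "OdbcTable",
            "linkedServiceName": {
                "referenceName": linked_service,  # hypothetical name
                "type": "LinkedServiceReference",
            },
            "typeProperties": type_properties,
        },
    }


dataset = odbc_dataset("OdbcDataset", "OdbcLinkedService")
print(json.dumps(dataset, indent=2))
```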

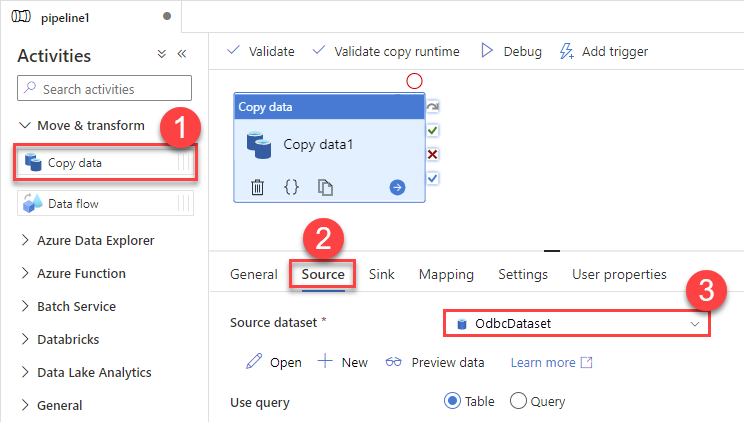

Go to your pipeline and add a Copy data activity to the flow. In the Source section, select the OdbcDataset we created as the source dataset:

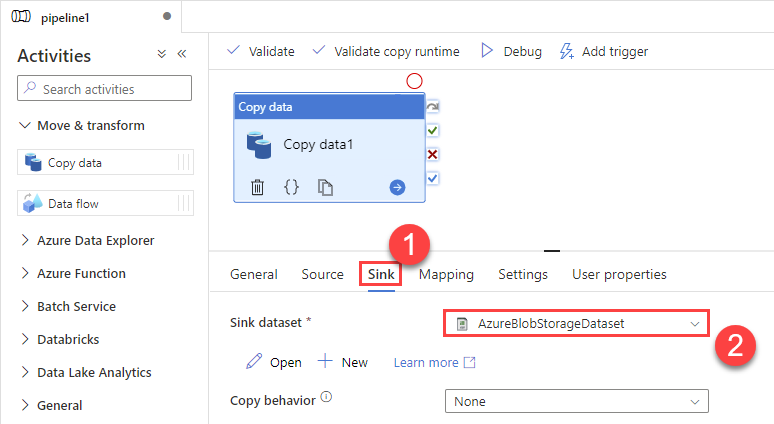

Then go to the Sink section and select a destination (sink) dataset. In this example we use a pre-created AzureBlobStorageDataset, which saves data to Azure Blob Storage:
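The source and sink settings can be summarized as a Copy activity definition. The following sketch builds one in Python using the dataset names from this article and the query from the SQL examples section. It assumes the sink dataset is a delimited text file in Blob Storage, which is why the sink type is DelimitedTextSink; adjust it to match your actual sink dataset. The activity and pipeline names are hypothetical.

```python
import json


def copy_activity(
    name: str,
    source_dataset: str,
    sink_dataset: str,
    query: str,
    sink_type: str = "DelimitedTextSink",  # assumption: delimited text sink
) -> dict:
    """Build a Copy activity that reads from an ODBC source using a query."""
    return {
        "name": name,
        "type": "Copy",
        "inputs": [{"referenceName": source_dataset, "type": "DatasetReference"}],
        "outputs": [{"referenceName": sink_dataset, "type": "DatasetReference"}],
        "typeProperties": {
            "source": {"type": "OdbcSource", "query": query},
            "sink": {"type": sink_type},
        },
    }


activity = copy_activity(
    "CopySalesforceToBlob",  # hypothetical activity name
    "OdbcDataset",
    "AzureBlobStorageDataset",
    "SELECT * FROM __DynamicRequest__",
)
# Hypothetical pipeline wrapper containing the single activity.
pipeline = {"name": "SalesforcePipeline", "properties": {"activities": [activity]}}
print(json.dumps(pipeline, indent=2))
```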

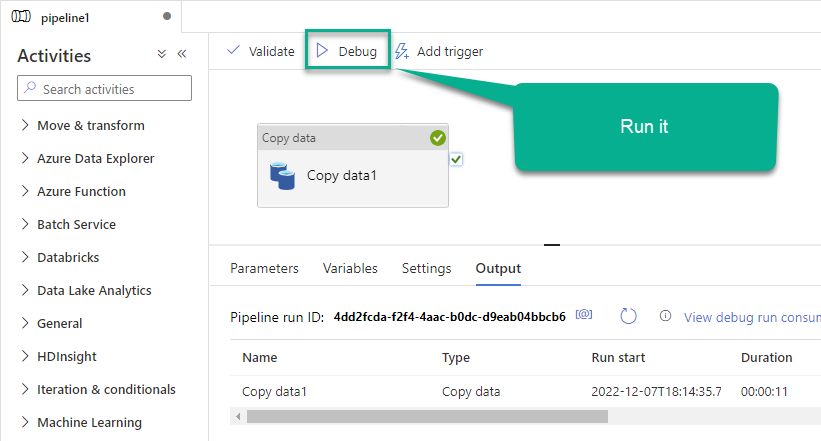

Finally, run the pipeline and see data being transferred from OdbcDataset to your destination dataset:

More actions supported by Salesforce Connector

Learn how to perform other actions directly in Azure Data Factory (Pipeline) with these how-to guides: