Reading data in SSIS

In this section, we will learn how to configure and use the Cosmos DB Connector in the API Source to extract data from Cosmos DB.

Video tutorial

This video covers the following topics and more, so watch carefully. After watching the video, follow the steps described in this article.

- How to download SSIS PowerPack for Cosmos DB integration in SSIS

- How to configure connection for Cosmos DB

- How to read data from Cosmos DB

- Features of the SSIS API Source

- Using Cosmos DB Connector in SSIS

Step-by-step instructions

- Open Visual Studio and create a new project.

- Select Integration Services Project, set an appropriate name and location for the project in the New Project window, and click OK.

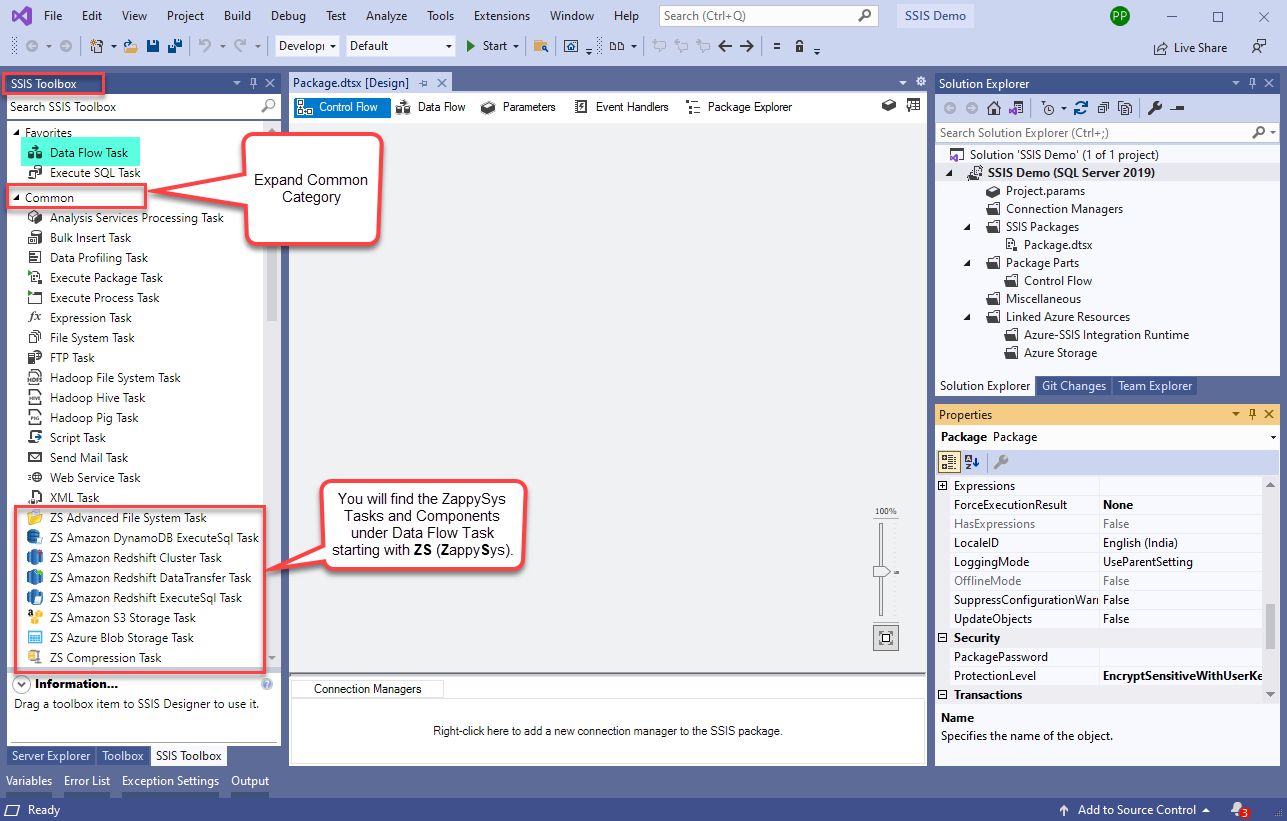

In the new SSIS project screen you will find the following:

- SSIS Toolbox on the left sidebar

- Solution Explorer and Properties window on the right sidebar

- Control Flow, Data Flow, Event Handlers, and Package Explorer tabs

- Connection Managers window at the bottom

Note: If you don't see ZappySys SSIS PowerPack Task or Components in SSIS Toolbox, please refer to this help link.

Note: If you don't see ZappySys SSIS PowerPack Task or Components in SSIS Toolbox, please refer to this help link. -

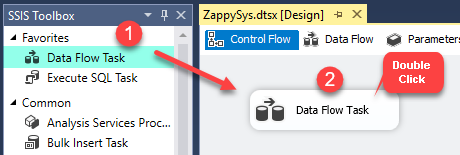

Now, Drag and Drop SSIS Data Flow Task from SSIS Toolbox. Double click on the Data Flow Task to see Data Flow designer.

-

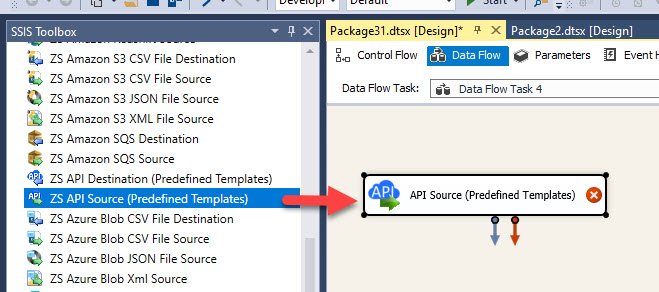

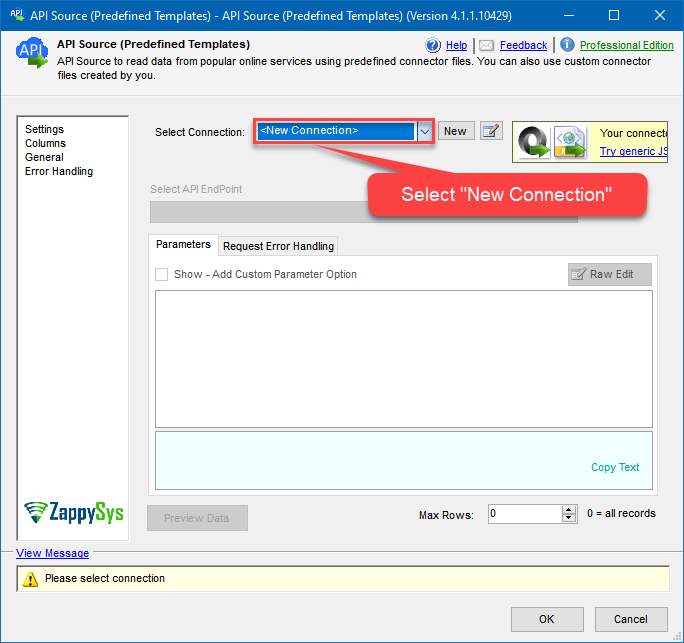

From the SSIS toolbox drag and API Source (Predefined Templates) on the data flow designer surface, and double click on it to edit it:

- Select New Connection to create a new connection:

- Use the preinstalled Cosmos DB Connector from the Popular Connector List, or select the Search Online radio button to download the Cosmos DB Connector. Once it is downloaded, simply use it in the configuration:

Cosmos DB

- Now it's time to configure authentication. First, configure the authentication settings in the Cosmos DB service, and then configure the API Connection Manager. Start by expanding an authentication type:

Cosmos DB authentication

Connecting to your Azure Cosmos DB data requires you to authenticate your REST API access. Follow the instructions below:

- Go to your Azure portal homepage: https://portal.azure.com/.

- In the search bar at the top of the homepage, enter Azure Cosmos DB. In the dropdown that appears, select Azure Cosmos DB.

- Click on the name of the database account you want to connect to (also copy and paste the name of the database account for later use).

-

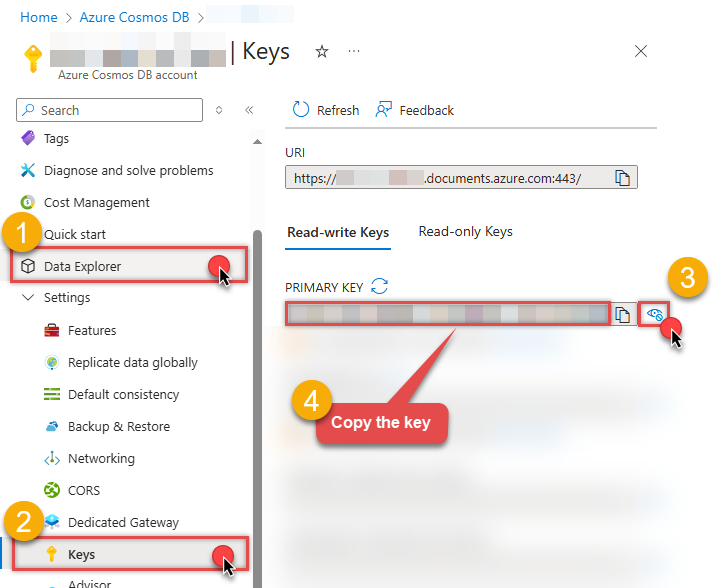

On the next page where you can see all of the database account information, look along the left side and select Keys:

- On the Keys page, you will have two tabs: Read-write Keys and Read-only Keys. If you are going to write data to your database, you need to remain on the Read-write Keys tab. If you are only going to read data from your database, you should select the Read-only Keys tab.

- On the Keys page, copy the PRIMARY KEY value and paste it somewhere for later use (the SECONDARY KEY value may also be copied and used).

- Now go to your SSIS package or ODBC data source and use this PRIMARY KEY in the API Key authentication configuration.

- Enter the primary or secondary key you copied from the Keys page into the Primary or Secondary Key field.

- Then enter the database account name you noted earlier into the Database Account field.

- Next, enter or select the default database you want to connect to using the Default Database field.

- Continue by entering or selecting the default table (i.e. container/collection) you want to connect to using the Default Table (Container/Collection) field.

- Select the Test Connection button at the bottom of the window to verify connectivity with your Azure Cosmos DB account.

- If the connection test succeeds, select OK.

- Done! Now you are ready to use the Cosmos DB Connector!

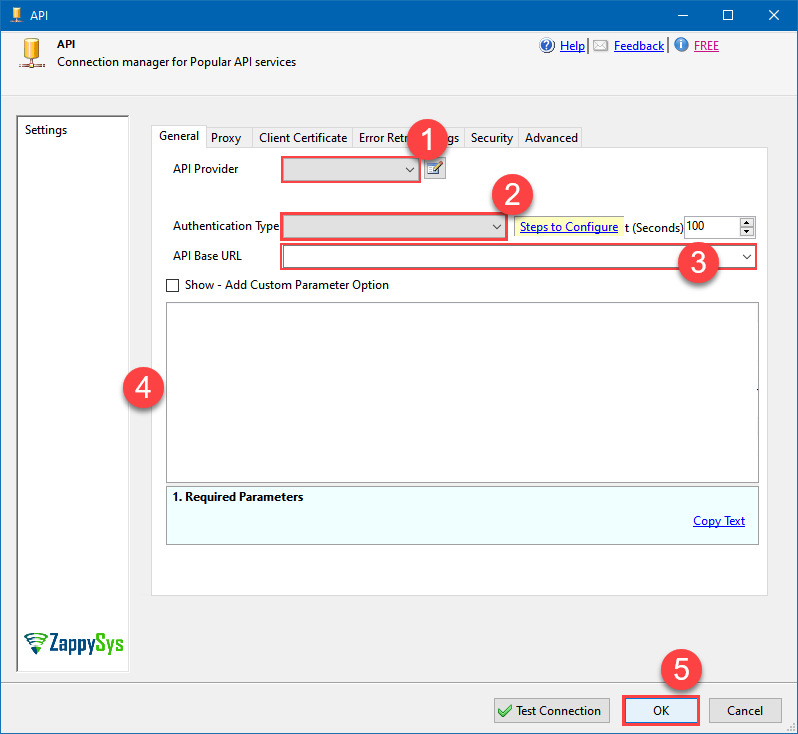
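For readers curious about what the key copied from the Keys page is used for: the Azure Cosmos DB REST API expects each request to carry an authorization token signed with that key. The following is a minimal sketch of the documented master-key signing scheme using only the Python standard library. How the connector computes this internally is not described in this article, so treat the function name `build_master_key_auth` and the sample values as illustrative assumptions.

```python
import base64
import hashlib
import hmac
from email.utils import formatdate
from urllib.parse import quote


def build_master_key_auth(verb, resource_type, resource_link, date_rfc1123, master_key_b64):
    """Return a URL-encoded master-key authorization token (hypothetical helper)."""
    # The key shown in the Azure portal is base64 text; decode it to raw bytes.
    key = base64.b64decode(master_key_b64)
    # String to sign: verb, resource type and date are lowercased,
    # the resource link keeps its case, and the string ends with an empty line.
    string_to_sign = (
        f"{verb.lower()}\n{resource_type.lower()}\n{resource_link}\n"
        f"{date_rfc1123.lower()}\n\n"
    )
    digest = hmac.new(key, string_to_sign.encode("utf-8"), hashlib.sha256).digest()
    signature = base64.b64encode(digest).decode("ascii")
    # The whole token is URL-encoded before being sent in the authorization header.
    return quote(f"type=master&ver=1.0&sig={signature}", safe="")


# Example with a made-up key (NOT a real account key).
demo_key = base64.b64encode(b"not-a-real-key").decode("ascii")
request_date = formatdate(usegmt=True)  # e.g. an RFC 1123 date ending in "GMT"
token = build_master_key_auth("POST", "docs", "dbs/mydb/colls/mycontainer", request_date, demo_key)
```

The same date string must also be sent in the `x-ms-date` header, because the service recomputes the signature from it. A read-only key is enough for query requests such as this one.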

API Connection Manager configuration

Just perform these simple steps to finish authentication configuration:

- Set Authentication Type to API Key [Http].

- Optional step: modify the API Base URL if needed (in most cases the default will work).

- Fill in all the required parameters and set optional parameters if needed.

- Finally, click the OK button:

Cosmos DB

API Key [Http]

https://[$Account$].documents.azure.com

Required Parameters

- Primary or Secondary Key

- Account Name (Case-Sensitive)

- Database Name (Case-Sensitive; keep blank to use default)

- API Version

Optional Parameters

- Default Table (needed to invoke #DirectSQL)
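The API Base URL above contains the placeholder `[$Account$]`, which stands for the Account Name parameter. As a rough illustration of how such a template resolves to a concrete URL, here is a small sketch. The helper `expand_template` and the account name `my-account` are hypothetical; the connector's actual substitution logic is not documented in this article.

```python
import re

# Base URL template as shown in the connection settings.
BASE_URL_TEMPLATE = "https://[$Account$].documents.azure.com"


def expand_template(template, params):
    """Replace each [$Name$] placeholder with the matching parameter value."""
    def substitute(match):
        name = match.group(1)
        value = params.get(name)
        if not value:
            # A required parameter was left empty.
            raise KeyError(f"missing value for placeholder {name!r}")
        return value

    return re.sub(r"\[\$(\w+)\$\]", substitute, template)


# "my-account" is a made-up account name used only for illustration.
base_url = expand_template(BASE_URL_TEMPLATE, {"Account": "my-account"})
```

Leaving the Account Name empty would make the base URL unresolvable, which is one reason it is listed under the required parameters.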

-

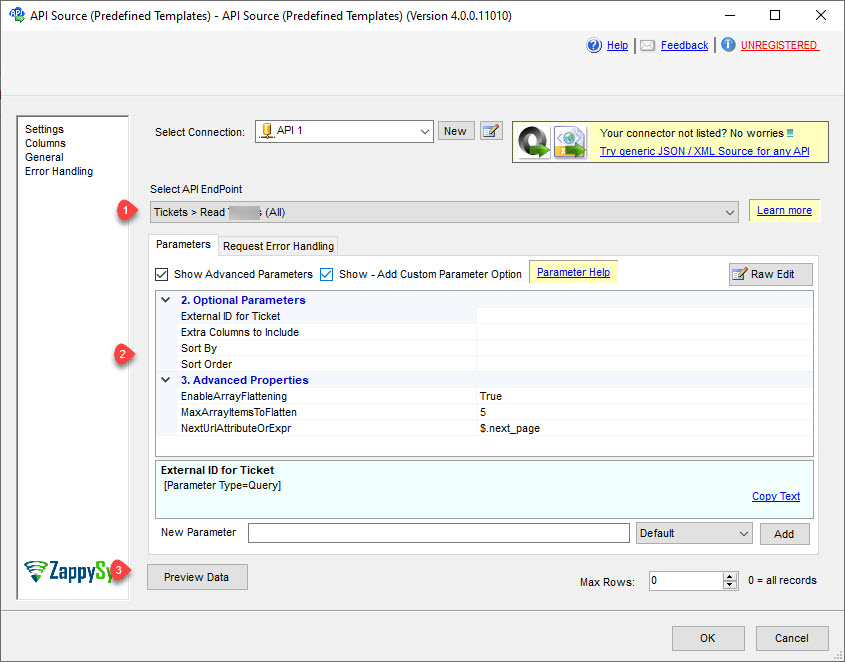

Select the desired endpoint, change/pass the properties values, and click on Preview Data button to make the API call.

API Source - Cosmos DB

Connect to your Azure Cosmos DB databases to read, query, create, update, and delete documents and more!
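To give a sense of what a query call against Cosmos DB looks like at the REST level, the sketch below assembles the URL, headers, and body for a document query as described in the Azure Cosmos DB REST API. It does not send anything over the network. The function name `build_query_request`, the database and container names, and the choice of API version `2018-12-31` are assumptions for illustration; the authorization token is passed in as an already-computed value, and the exact requests the connector issues are not shown in this article.

```python
import json


def build_query_request(base_url, database, container, sql,
                        auth_token, date_rfc1123, api_version="2018-12-31"):
    """Assemble (but do not send) a Cosmos DB SQL query request (hypothetical helper)."""
    # Queries are POSTed to the "docs" feed of a container (collection).
    url = f"{base_url}/dbs/{database}/colls/{container}/docs"
    headers = {
        "authorization": auth_token,
        "x-ms-date": date_rfc1123,
        "x-ms-version": api_version,
        # These two headers mark the POST as a query rather than a document insert.
        "x-ms-documentdb-isquery": "True",
        "Content-Type": "application/query+json",
        # Allow the query to span partitions when no partition key is supplied.
        "x-ms-documentdb-query-enablecrosspartition": "True",
    }
    body = json.dumps({"query": sql, "parameters": []})
    return {"method": "POST", "url": url, "headers": headers, "body": body}


# All values below are placeholders, not real account details.
request = build_query_request(
    "https://my-account.documents.azure.com",
    "mydb",
    "mycontainer",
    "SELECT * FROM c",
    auth_token="<authorization token>",
    date_rfc1123="Tue, 01 Nov 1994 08:12:31 GMT",
)
```

The resulting dictionary can be handed to any HTTP client. In SSIS, the API Source handles these details for you once the connection parameters are filled in.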

- That's it! We are done! In just a few clicks, we configured the call to Cosmos DB using the Cosmos DB Connector.

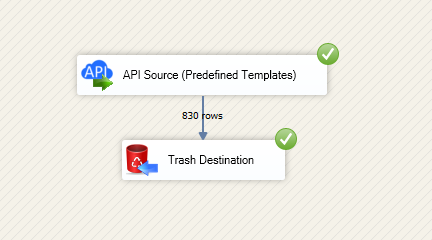

You can load the source data into your desired destination using the Upsert Destination, which supports SQL Server, PostgreSQL, and Amazon Redshift. We also offer other destinations such as CSV, Excel, Azure Table, Salesforce, and more. You can check out our SSIS PowerPack Tasks and components for more options. (*loaded in Trash Destination)
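As a conceptual illustration of what "upsert" means (insert new rows, update rows whose key already exists), here is a small sketch. It uses Python's built-in `sqlite3` module as a stand-in database, not SQL Server, PostgreSQL, or Redshift, and it is not how the Upsert Destination is implemented. The table name `documents`, its columns, and the sample rows are made up for the example.

```python
import sqlite3


def upsert_documents(conn, docs):
    """Insert new rows and update existing ones, matching on the id column."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS documents (id TEXT PRIMARY KEY, name TEXT)"
    )
    conn.executemany(
        # On a key collision, overwrite the stored name with the incoming one.
        "INSERT INTO documents (id, name) VALUES (:id, :name) "
        "ON CONFLICT(id) DO UPDATE SET name = excluded.name",
        docs,
    )
    conn.commit()


conn = sqlite3.connect(":memory:")
# First load: two new rows.
upsert_documents(conn, [{"id": "1", "name": "Alice"}, {"id": "2", "name": "Bob"}])
# Second load: id 2 is updated, id 3 is inserted, id 1 is left untouched.
upsert_documents(conn, [{"id": "2", "name": "Robert"}, {"id": "3", "name": "Carol"}])
rows = conn.execute("SELECT id, name FROM documents ORDER BY id").fetchall()
```

Running the load twice does not create duplicate rows, which is the property that makes upsert useful for repeated extractions from the same source.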