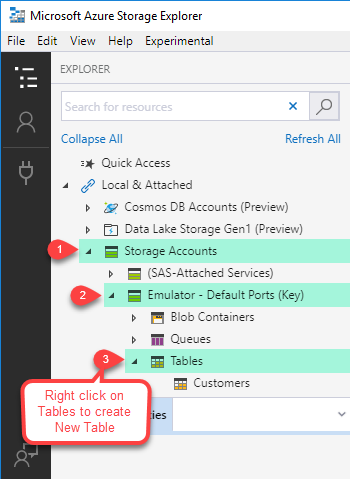

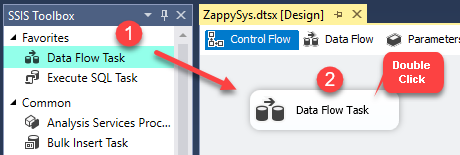

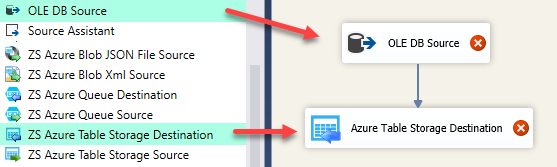

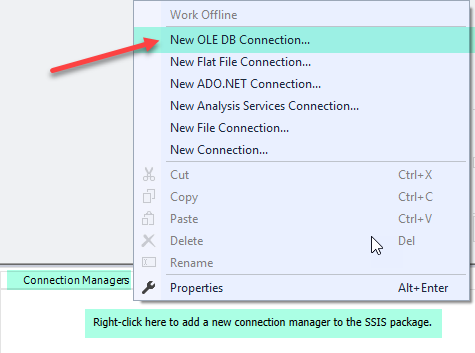

Introduction: In our previous blog post, we saw how to read/write Azure Blob Storage files in SSIS (CSV, JSON, and XML formats). In this post, we will see how to read/write Azure Table Storage data in SSIS. To illustrate, we will use ZappySys SSIS PowerPack, which includes several tasks to import/export data from multiple sources to multiple destinations like […]


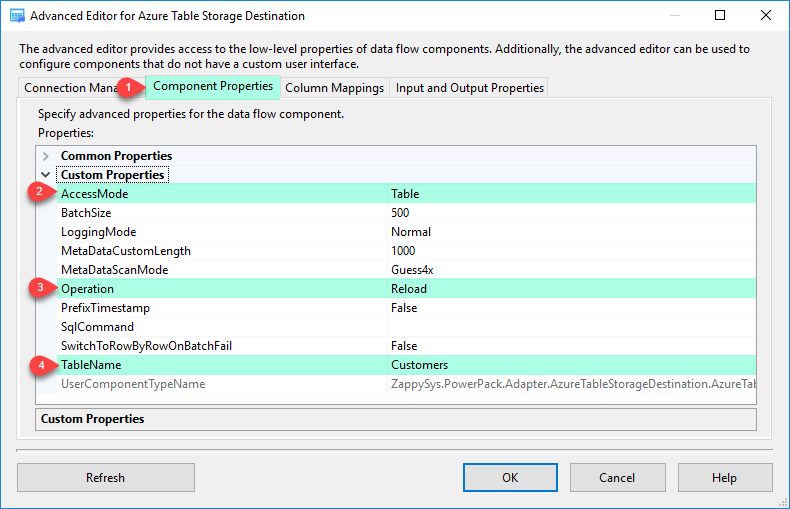

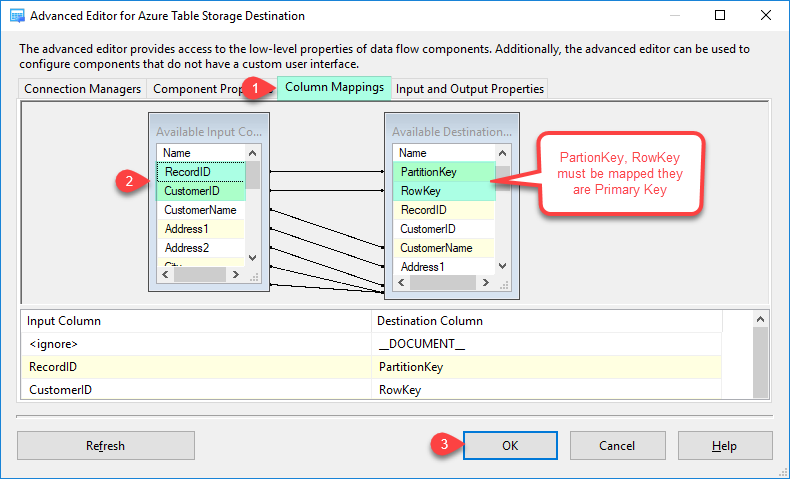

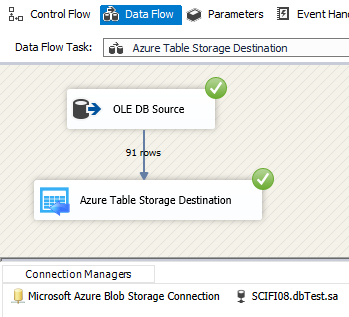

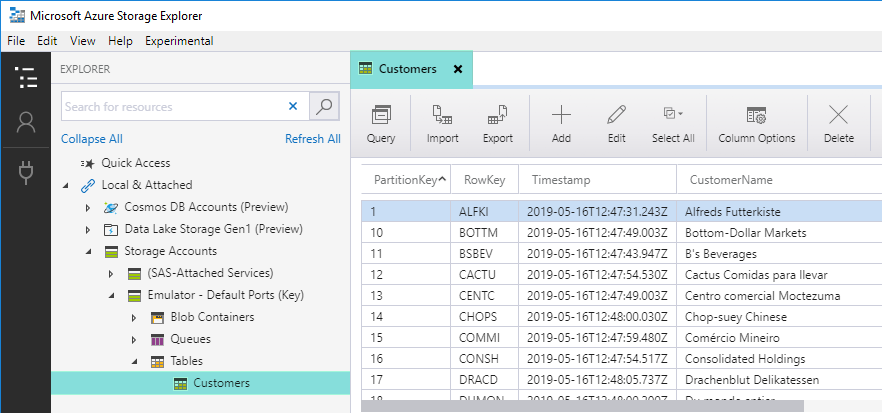

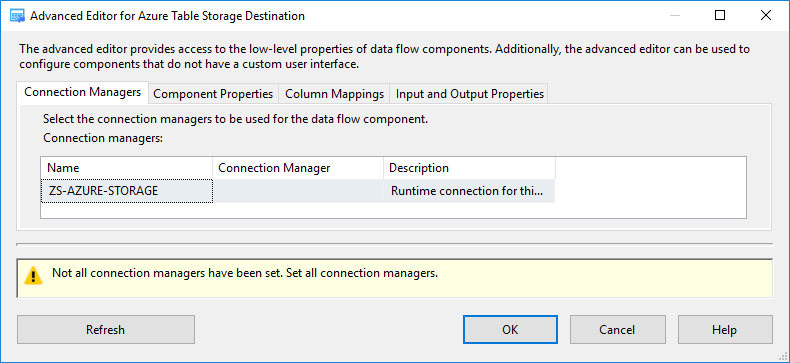

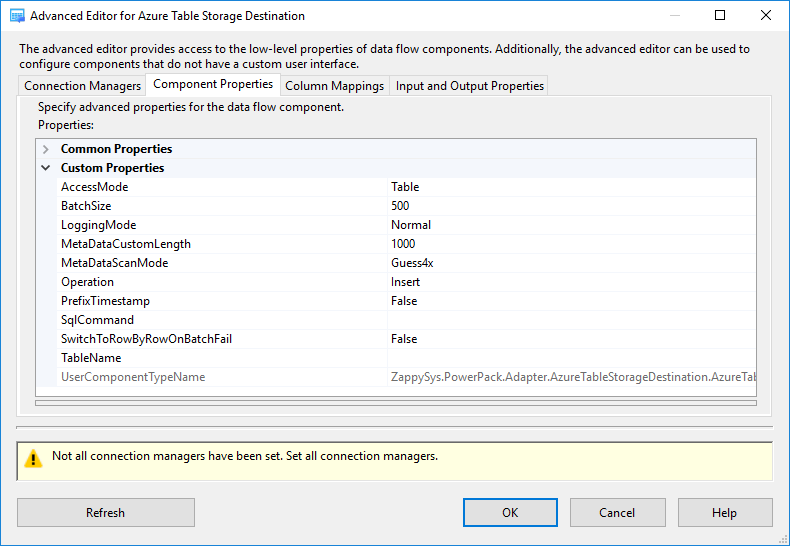

SSIS Azure Table Destination Adapter (Bulk Load, Write, Copy NoSQL Data)
