Table [Dynamic Table]

Description

No description available

Supported Operations

Below section contains supported CRUD operations. Each operation is executed by some EndPoint behind the scene.| Method | Supported | Reference EndPoint |

|---|---|---|

| SELECT | get_[dynamic_endpoint_name] | |

| INSERT | post_[dynamic_endpoint_name] | |

| UPDATE | ||

| UPSERT | ||

| DELETE | ||

| LOOKUP | get_query |

Examples

SSIS

Use Google BigQuery Connector in API Source component to read data or in API Destination component to read/write data:

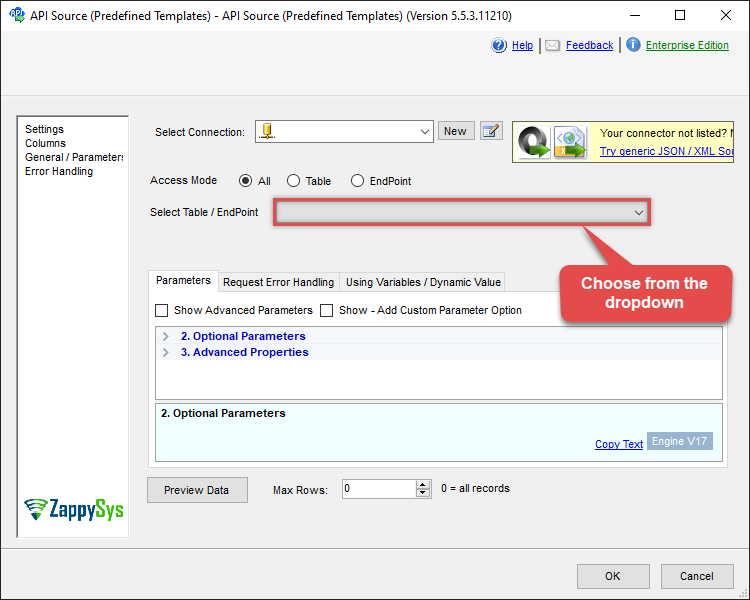

Read from [Dynamic Table] table using API Source

API Source - Google BigQuery

Read and write Google BigQuery data effortlessly. Query, integrate, and manage datasets, tables, and jobs — almost no coding required.

Google BigQuery

[Dynamic Table]

| Optional Parameters | |

|---|---|

| ArrayTransformType | TransformComplexTwoDimensionalArray |

| ArrayTransRowValueFilter | $.f[*].v |

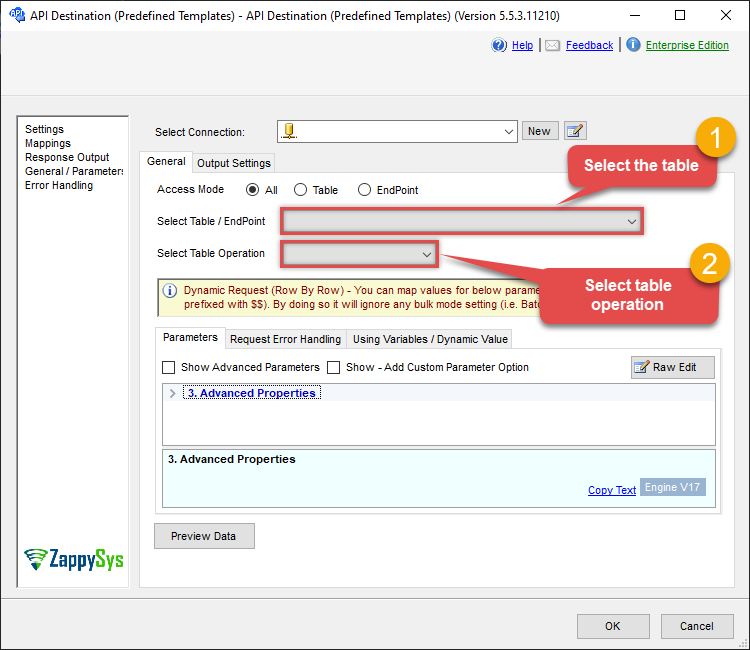

Read/write to [Dynamic Table] table using API Destination

API Destination - Google BigQuery

Read and write Google BigQuery data effortlessly. Query, integrate, and manage datasets, tables, and jobs — almost no coding required.

Google BigQuery

[Dynamic Table]

Select

| Optional Parameters | |

|---|---|

| ArrayTransformType | TransformComplexTwoDimensionalArray |

| ArrayTransRowValueFilter | $.f[*].v |

ODBC application

Use these SQL queries in your ODBC application data source:

Query using simple SQL (Native SQL)

#DirectSQL

SELECT *

FROM bigquery-public-data.samples.wikipedia

LIMIT 1000Query using complex SQL (Native SQL)

#DirectSQL

SELECT name, gender, SUM(number) AS total

FROM bigquery-public-data.usa_names.usa_1910_2013

GROUP BY name, gender

ORDER BY total DESC

LIMIT 10Delete multiple records (DML) (Native SQL)

#DirectSQL

DELETE FROM TestDataset.MyTable

WHERE Id > 5Query with CAST unix TIMESTAMP as datetime (Native SQL)

#DirectSQL

SELECT id, col_timestamp, CAST(col_timestamp AS DATE) AS timestamp_as_date, CAST(col_timestamp AS DATETIME) AS timestamp_as_datetime

FROM MyProject.MyDataset.MyTable

LIMIT 10Create table / run DDL (Native SQL)

#DirectSQL

CREATE TABLE TestDataset.Table1 (ID INT64, Name STRING, BirthDate DATETIME, Active BOOL)UPDATE table data for complex types (RECORD, Geography, JSON) (Native SQL)

#DirectSQL

UPDATE TestDataset.DataTypeTest

SET ColTime='23:59:59.123456',

ColGeography=ST_GEOGPOINT(34.150480, -84.233870),

ColRecord=(1,"AA","Column3 data"),

ColBigNumeric=1222222222222222222.123456789123456789123456789123456789,

ColJson= JSON_ARRAY('{"doc":1, "values":[{"id":1},{"id":2}]}')

WHERE ColInteger=1INSERT datetime / Unix timestamp values (Native SQL)

#DirectSQL

--DateTime Without milliseconds

INSERT INTO TestDataset.DataTypeTest (ColInteger,ColString,ColTimestamp) VALUES(1004,'My date time','2025-01-31T23:59:59');

--DateTime with milliseconds

INSERT INTO TestDataset.DataTypeTest (ColInteger,ColString,ColTimestamp) VALUES(1004,'My date time','2025-01-31T23:59:59.1234');

--DateTime alias field (e.g. {your-timestamp-column}_DT) - Useful if ETL tool needs DateTime datatype rather than Numeric Unix Epoch

INSERT INTO TestDataset.DataTypeTest (ColInteger,ColString,ColTimestamp_DT) VALUES(1004,'My date time','2025-01-31T23:59:59');

--Unix Epoch format (without milliseconds)

INSERT INTO TestDataset.DataTypeTest (ColInteger,ColString,ColTimestamp) VALUES(1006,'My date time',1738367999);

--Unix Epoch format (with milliseconds)

INSERT INTO TestDataset.DataTypeTest (ColInteger,ColString,ColTimestamp) VALUES(1006,'My date time',1738367999.12345);DROP table (if exists) / other DDL (Native SQL)

#DirectSQL

DROP TABLE IF EXISTS Myproject.Mydataset.MytableCall stored procedure (Native SQL)

#DirectSQL

CALL TestDataset.usp_GetData(1)INSERT single row

INSERT INTO MyBQTable1(SomeBQCol1, SomeBQCol2) VALUES(1,'AAA')

--WITH(DatasetId='TestDataset',Output='*')

--WITH(DatasetId='TestDataset',ProjectId='MyProjectId',Output='*')INSERT multiple rows from SQL Server

INSERT INTO MyBQTable1

SOURCE(

'MSSQL'

, 'Data Source=localhost;Initial Catalog=tempdb;Integrated Security=true'

, 'SELECT Col1 AS SomeBQCol1, Col2 AS SomeBQCol2 FROM SomeTable WHERE SomeCol=123'

)

--WITH(DatasetId='TestDataset',Output='*')

--WITH(DatasetId='TestDataset',ProjectId='MyProjectId',Output='*')INSERT multiple rows from any ODBC source (DSN)

INSERT INTO MyBQTable1

SOURCE(

'ODBC'

, 'DSN=MyDsn'

, 'SELECT Col1 AS SomeBQCol1, Col2 AS SomeBQCol2 FROM SomeTable WHERE SomeCol=123'

)

WITH(DatasetId='TestDataset')INSERT multiple rows from JSON files / API

INSERT INTO MyBQTable1

SOURCE(

'ODBC'

, 'Driver={ZappySys JSON Driver};Src=''https://some-url/get-data'''

, 'SELECT Col1 AS SomeBQCol1, Col2 AS SomeBQCol2 FROM _root_'

)

--WITH(DatasetId='TestDataset',Output='*')

--WITH(DatasetId='TestDataset',ProjectId='MyProjectId',Output='*')SQL Server

Use these SQL queries in SQL Server after you create a data source in Data Gateway:

Query using simple SQL (Native SQL)

DECLARE @MyQuery NVARCHAR(MAX) = '#DirectSQL

SELECT *

FROM bigquery-public-data.samples.wikipedia

LIMIT 1000';

EXEC (@MyQuery) AT [LS_TO_GOOGLE_BIGQUERY_IN_GATEWAY];Query using complex SQL (Native SQL)

DECLARE @MyQuery NVARCHAR(MAX) = '#DirectSQL

SELECT name, gender, SUM(number) AS total

FROM bigquery-public-data.usa_names.usa_1910_2013

GROUP BY name, gender

ORDER BY total DESC

LIMIT 10';

EXEC (@MyQuery) AT [LS_TO_GOOGLE_BIGQUERY_IN_GATEWAY];Delete multiple records (DML) (Native SQL)

DECLARE @MyQuery NVARCHAR(MAX) = '#DirectSQL

DELETE FROM TestDataset.MyTable

WHERE Id > 5';

EXEC (@MyQuery) AT [LS_TO_GOOGLE_BIGQUERY_IN_GATEWAY];Query with CAST unix TIMESTAMP as datetime (Native SQL)

DECLARE @MyQuery NVARCHAR(MAX) = '#DirectSQL

SELECT id, col_timestamp, CAST(col_timestamp AS DATE) AS timestamp_as_date, CAST(col_timestamp AS DATETIME) AS timestamp_as_datetime

FROM MyProject.MyDataset.MyTable

LIMIT 10';

EXEC (@MyQuery) AT [LS_TO_GOOGLE_BIGQUERY_IN_GATEWAY];Create table / run DDL (Native SQL)

DECLARE @MyQuery NVARCHAR(MAX) = '#DirectSQL

CREATE TABLE TestDataset.Table1 (ID INT64, Name STRING, BirthDate DATETIME, Active BOOL)';

EXEC (@MyQuery) AT [LS_TO_GOOGLE_BIGQUERY_IN_GATEWAY];UPDATE table data for complex types (RECORD, Geography, JSON) (Native SQL)

DECLARE @MyQuery NVARCHAR(MAX) = '#DirectSQL

UPDATE TestDataset.DataTypeTest

SET ColTime=''23:59:59.123456'',

ColGeography=ST_GEOGPOINT(34.150480, -84.233870),

ColRecord=(1,"AA","Column3 data"),

ColBigNumeric=1222222222222222222.123456789123456789123456789123456789,

ColJson= JSON_ARRAY(''{"doc":1, "values":[{"id":1},{"id":2}]}'')

WHERE ColInteger=1';

EXEC (@MyQuery) AT [LS_TO_GOOGLE_BIGQUERY_IN_GATEWAY];INSERT datetime / Unix timestamp values (Native SQL)

DECLARE @MyQuery NVARCHAR(MAX) = '#DirectSQL

--DateTime Without milliseconds

INSERT INTO TestDataset.DataTypeTest (ColInteger,ColString,ColTimestamp) VALUES(1004,''My date time'',''2025-01-31T23:59:59'');

--DateTime with milliseconds

INSERT INTO TestDataset.DataTypeTest (ColInteger,ColString,ColTimestamp) VALUES(1004,''My date time'',''2025-01-31T23:59:59.1234'');

--DateTime alias field (e.g. {your-timestamp-column}_DT) - Useful if ETL tool needs DateTime datatype rather than Numeric Unix Epoch

INSERT INTO TestDataset.DataTypeTest (ColInteger,ColString,ColTimestamp_DT) VALUES(1004,''My date time'',''2025-01-31T23:59:59'');

--Unix Epoch format (without milliseconds)

INSERT INTO TestDataset.DataTypeTest (ColInteger,ColString,ColTimestamp) VALUES(1006,''My date time'',1738367999);

--Unix Epoch format (with milliseconds)

INSERT INTO TestDataset.DataTypeTest (ColInteger,ColString,ColTimestamp) VALUES(1006,''My date time'',1738367999.12345);';

EXEC (@MyQuery) AT [LS_TO_GOOGLE_BIGQUERY_IN_GATEWAY];DROP table (if exists) / other DDL (Native SQL)

DECLARE @MyQuery NVARCHAR(MAX) = '#DirectSQL

DROP TABLE IF EXISTS Myproject.Mydataset.Mytable';

EXEC (@MyQuery) AT [LS_TO_GOOGLE_BIGQUERY_IN_GATEWAY];Call stored procedure (Native SQL)

DECLARE @MyQuery NVARCHAR(MAX) = '#DirectSQL

CALL TestDataset.usp_GetData(1)';

EXEC (@MyQuery) AT [LS_TO_GOOGLE_BIGQUERY_IN_GATEWAY];INSERT single row

DECLARE @MyQuery NVARCHAR(MAX) = 'INSERT INTO MyBQTable1(SomeBQCol1, SomeBQCol2) VALUES(1,''AAA'')

--WITH(DatasetId=''TestDataset'',Output=''*'')

--WITH(DatasetId=''TestDataset'',ProjectId=''MyProjectId'',Output=''*'')';

EXEC (@MyQuery) AT [LS_TO_GOOGLE_BIGQUERY_IN_GATEWAY];INSERT multiple rows from SQL Server

DECLARE @MyQuery NVARCHAR(MAX) = 'INSERT INTO MyBQTable1

SOURCE(

''MSSQL''

, ''Data Source=localhost;Initial Catalog=tempdb;Integrated Security=true''

, ''SELECT Col1 AS SomeBQCol1, Col2 AS SomeBQCol2 FROM SomeTable WHERE SomeCol=123''

)

--WITH(DatasetId=''TestDataset'',Output=''*'')

--WITH(DatasetId=''TestDataset'',ProjectId=''MyProjectId'',Output=''*'')';

EXEC (@MyQuery) AT [LS_TO_GOOGLE_BIGQUERY_IN_GATEWAY];INSERT multiple rows from any ODBC source (DSN)

DECLARE @MyQuery NVARCHAR(MAX) = 'INSERT INTO MyBQTable1

SOURCE(

''ODBC''

, ''DSN=MyDsn''

, ''SELECT Col1 AS SomeBQCol1, Col2 AS SomeBQCol2 FROM SomeTable WHERE SomeCol=123''

)

WITH(DatasetId=''TestDataset'')';

EXEC (@MyQuery) AT [LS_TO_GOOGLE_BIGQUERY_IN_GATEWAY];INSERT multiple rows from JSON files / API

DECLARE @MyQuery NVARCHAR(MAX) = 'INSERT INTO MyBQTable1

SOURCE(

''ODBC''

, ''Driver={ZappySys JSON Driver};Src=''''https://some-url/get-data''''''

, ''SELECT Col1 AS SomeBQCol1, Col2 AS SomeBQCol2 FROM _root_''

)

--WITH(DatasetId=''TestDataset'',Output=''*'')

--WITH(DatasetId=''TestDataset'',ProjectId=''MyProjectId'',Output=''*'')';

EXEC (@MyQuery) AT [LS_TO_GOOGLE_BIGQUERY_IN_GATEWAY];