Table [Dynamic Table]

Description

No description available

Supported Operations

The table below lists the supported CRUD operations. Each operation is executed by an endpoint behind the scenes.

| Method | Supported | Reference EndPoint |
|---|---|---|
| SELECT | Yes | get_[dynamic_endpoint_name] |
| INSERT | Yes | post_[dynamic_endpoint_name] |
| UPDATE | | |
| UPSERT | | |
| DELETE | | |
| LOOKUP | Yes | get_query |

Examples

SSIS

Use the Google BigQuery Connector in the API Source component to read data, or in the API Destination component to read/write data:

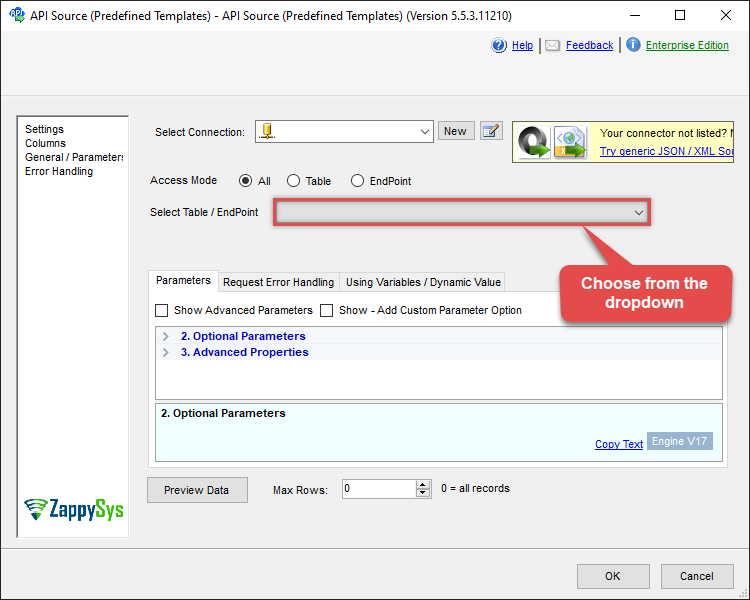

Read from [Dynamic Table] table using API Source

| Optional Parameters | |
|---|---|
| ArrayTransformType | TransformComplexTwoDimensionalArray |
| ArrayTransRowValueFilter | $.f[*].v |
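The filter `$.f[*].v` mirrors the way the BigQuery REST API returns result rows: each row is an object with an `f` array of cells, each cell holds its value under `v`, and the column names are listed separately under `schema.fields`. The following Python sketch illustrates that flattening on a hand-written sample payload. It is an illustration of the response shape only, not the connector's own implementation, and `flatten_rows` is a hypothetical helper name.

```python
def flatten_rows(response):
    """Turn BigQuery's rows[].f[].v layout into a list of {column: value} dicts."""
    names = [field["name"] for field in response["schema"]["fields"]]
    flat = []
    for row in response.get("rows", []):
        # Same selection as the JSONPath filter $.f[*].v applied to one row.
        values = [cell["v"] for cell in row["f"]]
        flat.append(dict(zip(names, values)))
    return flat


# Hand-written sample in the shape of a BigQuery query response (not real data).
sample = {
    "schema": {
        "fields": [
            {"name": "name", "type": "STRING"},
            {"name": "total", "type": "INTEGER"},
        ]
    },
    "rows": [
        {"f": [{"v": "Alice"}, {"v": "10"}]},
        {"f": [{"v": "Bob"}, {"v": "7"}]},
    ],
}

flat = flatten_rows(sample)
print(flat)
```

Note that the REST API returns cell values as strings, so numeric columns still need converting according to the type given in `schema.fields`.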

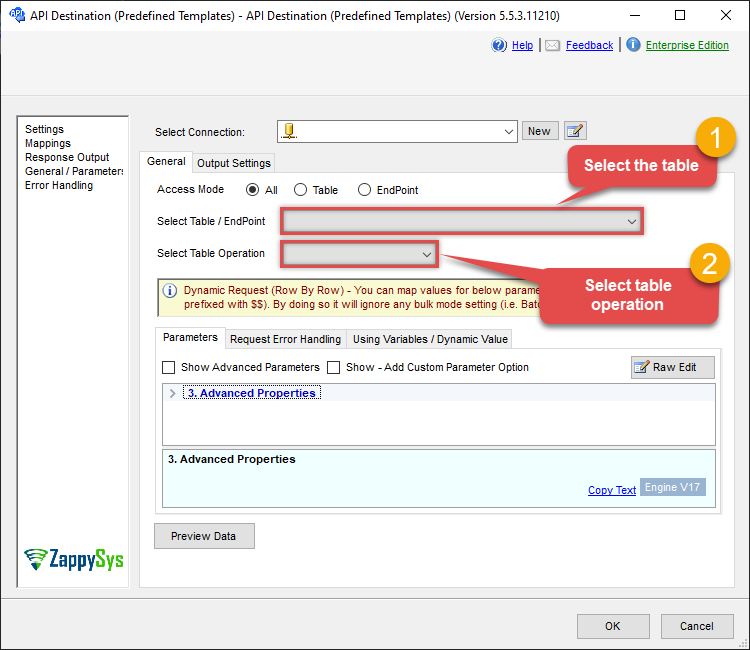

Read/write to [Dynamic Table] table using API Destination

| Optional Parameters | |
|---|---|
| ArrayTransformType | TransformComplexTwoDimensionalArray |
| ArrayTransRowValueFilter | $.f[*].v |

ODBC application

Use these SQL queries in your ODBC application data source:

Native Query (ServerSide): Query using Simple SQL

Example of a server-side BigQuery SQL query. Prefix the SQL with #DirectSQL to invoke the server-side engine (pass-through SQL). This example queries the free public table bigquery-public-data.samples.wikipedia.
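From application code, a pass-through query of this kind is sent to the driver as an ordinary SQL string. The sketch below is a minimal Python illustration, assuming the third-party `pyodbc` package is installed and an ODBC DSN has been configured. The DSN name `MyBigQueryDsn` and the helpers `as_direct_sql` and `fetch_rows` are hypothetical and are not part of the driver.

```python
DIRECT_SQL_PREFIX = "#DirectSQL"


def as_direct_sql(query):
    """Prepend the #DirectSQL prefix unless the query already starts with it."""
    stripped = query.lstrip()
    if stripped.startswith(DIRECT_SQL_PREFIX):
        return stripped
    return f"{DIRECT_SQL_PREFIX} {stripped}"


def fetch_rows(dsn, query, limit=5):
    """Run a pass-through query through an ODBC DSN and return the first rows."""
    import pyodbc  # third-party; imported lazily so as_direct_sql works without it

    connection = pyodbc.connect(f"DSN={dsn}", autocommit=True)
    try:
        cursor = connection.cursor()
        cursor.execute(as_direct_sql(query))
        return cursor.fetchmany(limit)
    finally:
        connection.close()


sql = as_direct_sql("SELECT * FROM bigquery-public-data.samples.wikipedia LIMIT 1000")
print(sql)

# Example call (needs a configured DSN; the name is an assumption):
# rows = fetch_rows("MyBigQueryDsn", sql)
```

The raw query text, as entered directly in an ODBC data source, follows.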

#DirectSQL SELECT * FROM bigquery-public-data.samples.wikipedia LIMIT 1000 /* try your own dataset or Some FREE dataset like nyc-tlc.yellow.trips -- 3 parts ([Project.]Dataset.Table) */

Native Query (ServerSide): Query using Complex SQL

Example of a server-side BigQuery SQL query. Prefix the SQL with #DirectSQL to invoke the server-side engine (pass-through SQL). This example queries the free public table bigquery-public-data.usa_names.usa_1910_2013.

#DirectSQL

SELECT name, gender, SUM(number) AS total

FROM bigquery-public-data.usa_names.usa_1910_2013

GROUP BY name, gender

ORDER BY total DESC

LIMIT 10

Native Query (ServerSide): Delete Multiple Records (Call DML)

This server-side BigQuery SQL example shows how to invoke a DELETE statement. Prefix the SQL with #DirectSQL to invoke the server-side engine (pass-through SQL). The example deletes rows from a table in your own dataset (TestDataset.MyTable).

#DirectSQL DELETE FROM TestDataset.MyTable Where Id > 5

Native Query (ServerSide): Query with CAST unix TIMESTAMP datatype column as datetime

This example shows how to query a TIMESTAMP column as a date or datetime. For example, if 73833719.524272 should be displayed as 1972-05-04, or with milliseconds as 1972-05-04 1:21:59.524 PM, use the CAST function (you must use the #DirectSQL prefix).
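As a quick sanity check on the values quoted above, the conversion can be reproduced outside BigQuery. This Python sketch assumes the number is seconds since the Unix epoch and interprets it in UTC. It only verifies the arithmetic and says nothing about how the driver itself formats values.

```python
from datetime import datetime, timezone

epoch_seconds = 73833719.524272  # value quoted in the text

# Interpret the value as seconds since 1970-01-01 00:00:00 UTC.
as_datetime = datetime.fromtimestamp(epoch_seconds, tz=timezone.utc)

print(as_datetime.date().isoformat())             # 1972-05-04
print(as_datetime.strftime("%Y-%m-%d %H:%M:%S"))  # 1972-05-04 13:21:59
```

The query that follows performs the same conversion on the server side with CAST.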

#DirectSQL

SELECT id, col_timestamp, CAST(col_timestamp as DATE) AS timestamp_as_date, CAST(col_timestamp as DATETIME) AS timestamp_as_datetime

FROM MyProject.MyDataset.MyTable

LIMIT 10

Native Query (ServerSide): Create Table / Run Other DDL

Example of how to run a valid BigQuery DDL statement. Prefix the SQL with #DirectSQL to invoke the server-side engine (pass-through SQL).

#DirectSQL CREATE TABLE TestDataset.Table1 (ID INT64,Name STRING,BirthDate DATETIME, Active BOOL)

Native Query (ServerSide): UPDATE Table data for complex types (e.g. Nested RECORD, Geography, JSON)

Example of how to run a valid BigQuery DML statement (e.g. UPDATE / INSERT / DELETE). This use case shows how to update a record with complex data types such as RECORD (i.e. STRUCT), Geography, JSON and more. Prefix the SQL with #DirectSQL to invoke the server-side engine (pass-through SQL).

#DirectSQL

Update TestDataset.DataTypeTest

Set ColTime='23:59:59.123456',

ColGeography=ST_GEOGPOINT(34.150480, -84.233870),

ColRecord=(1,"AA","Column3 data"),

ColBigNumeric=1222222222222222222.123456789123456789123456789123456789,

ColJson= JSON_ARRAY('{"doc":1, "values":[{"id":1},{"id":2}]}')

Where ColInteger=1

Native Query (ServerSide): DROP Table (if exists) / Other DDL

Example of how to run a valid BigQuery DDL statement. Prefix the SQL with #DirectSQL to invoke the server-side engine (pass-through SQL).

#DirectSQL DROP TABLE IF EXISTS Myproject.Mydataset.Mytable

Native Query (ServerSide): Call Stored Procedure

Example of how to run a BigQuery stored procedure and pass parameters. Assuming you created a valid stored procedure called usp_GetData in TestDataset, call it as shown below.

#DirectSQL CALL TestDataset.usp_GetData(1)

INSERT Single Row

This sample shows how to insert into BigQuery using the ZappySys query language. You can also use ProjectId='myproject-id' in the WITH clause.

INSERT INTO MyBQTable1(SomeBQCol1, SomeBQCol2) Values(1,'AAA')

--WITH(DatasetId='TestDataset',Output='*')

--WITH(DatasetId='TestDataset',ProjectId='MyProjectId',Output='*')

INSERT Multiple Rows from SQL Server

This example shows how to bulk insert into a Google BigQuery table from Microsoft SQL Server as an external source. Notice that the INSERT has no column list. The column names are provided by the source query, so it must produce valid column names found in the target BQ table (you can use SQL aliases to produce matching names).

INSERT INTO MyBQTable1

SOURCE(

'MSSQL'

, 'Data Source=localhost;Initial Catalog=tempdb;Integrated Security=true'

, 'SELECT Col1 as SomeBQCol1,Col2 as SomeBQCol2 FROM SomeTable Where SomeCol=123'

)

--WITH(DatasetId='TestDataset',Output='*')

--WITH(DatasetId='TestDataset',ProjectId='MyProjectId',Output='*')

INSERT Multiple Rows from any ODBC Source (DSN)

This example shows how to bulk insert into a Google BigQuery table from any external ODBC source (assuming you have installed the ODBC driver and configured a DSN). Notice that the INSERT has no column list. The column names are provided by the source query, so it must produce valid column names found in the target BQ table (you can use SQL aliases to produce matching names).

INSERT INTO MyBQTable1

SOURCE(

'ODBC'

, 'DSN=MyDsn'

, 'SELECT Col1 as SomeBQCol1,Col2 as SomeBQCol2 FROM SomeTable Where SomeCol=123'

)

WITH(DatasetId='TestDataset')

INSERT Multiple Rows from any JSON Files / API (Using ZappySys ODBC JSON Driver)

This example shows how to bulk insert into a Google BigQuery table from any external JSON API or file source through ODBC (assuming you have installed the ZappySys ODBC Driver for JSON). Notice that the INSERT has no column list. The column names are provided by the source query, so it must produce valid column names found in the target BQ table (you can use SQL aliases to produce matching names). You can use a similar approach to read from CSV or XML files: just use the CSV or XML driver rather than the JSON driver in the connection string. For more JSON query examples, see https://zappysys.com/onlinehelp/odbc-powerpack/scr/json-odbc-driver-sql-query-examples.htm

INSERT INTO MyBQTable1

SOURCE(

'ODBC'

, 'Driver={ZappySys JSON Driver};Src='https://some-url/get-data''

, 'SELECT Col1 as SomeBQCol1,Col2 as SomeBQCol2 FROM _root_'

)

--WITH(DatasetId='TestDataset',Output='*')

--WITH(DatasetId='TestDataset',ProjectId='MyProjectId',Output='*')

SQL Server

Use these SQL queries in SQL Server after you create a data source in Data Gateway:

Native Query (ServerSide): Query using Simple SQL

Example of a server-side BigQuery SQL query. Prefix the SQL with #DirectSQL to invoke the server-side engine (pass-through SQL). This example queries the free public table bigquery-public-data.samples.wikipedia.

DECLARE @MyQuery NVARCHAR(MAX) = '#DirectSQL SELECT * FROM bigquery-public-data.samples.wikipedia LIMIT 1000 /* try your own dataset or Some FREE dataset like nyc-tlc.yellow.trips -- 3 parts ([Project.]Dataset.Table) */';

EXEC (@MyQuery) AT [LS_TO_GOOGLE_BIGQUERY_IN_GATEWAY];

Native Query (ServerSide): Query using Complex SQL

Example of a server-side BigQuery SQL query. Prefix the SQL with #DirectSQL to invoke the server-side engine (pass-through SQL). This example queries the free public table bigquery-public-data.usa_names.usa_1910_2013.

DECLARE @MyQuery NVARCHAR(MAX) = '#DirectSQL

SELECT name, gender, SUM(number) AS total

FROM bigquery-public-data.usa_names.usa_1910_2013

GROUP BY name, gender

ORDER BY total DESC

LIMIT 10';

EXEC (@MyQuery) AT [LS_TO_GOOGLE_BIGQUERY_IN_GATEWAY];

Native Query (ServerSide): Delete Multiple Records (Call DML)

This server-side BigQuery SQL example shows how to invoke a DELETE statement. Prefix the SQL with #DirectSQL to invoke the server-side engine (pass-through SQL). The example deletes rows from a table in your own dataset (TestDataset.MyTable).

DECLARE @MyQuery NVARCHAR(MAX) = '#DirectSQL DELETE FROM TestDataset.MyTable Where Id > 5';

EXEC (@MyQuery) AT [LS_TO_GOOGLE_BIGQUERY_IN_GATEWAY];

Native Query (ServerSide): Query with CAST unix TIMESTAMP datatype column as datetime

This example shows how to query a TIMESTAMP column as a date or datetime. For example, if 73833719.524272 should be displayed as 1972-05-04, or with milliseconds as 1972-05-04 1:21:59.524 PM, use the CAST function (you must use the #DirectSQL prefix).

DECLARE @MyQuery NVARCHAR(MAX) = '#DirectSQL

SELECT id, col_timestamp, CAST(col_timestamp as DATE) AS timestamp_as_date, CAST(col_timestamp as DATETIME) AS timestamp_as_datetime

FROM MyProject.MyDataset.MyTable

LIMIT 10';

EXEC (@MyQuery) AT [LS_TO_GOOGLE_BIGQUERY_IN_GATEWAY];

Native Query (ServerSide): Create Table / Run Other DDL

Example of how to run a valid BigQuery DDL statement. Prefix the SQL with #DirectSQL to invoke the server-side engine (pass-through SQL).

DECLARE @MyQuery NVARCHAR(MAX) = '#DirectSQL CREATE TABLE TestDataset.Table1 (ID INT64,Name STRING,BirthDate DATETIME, Active BOOL)';

EXEC (@MyQuery) AT [LS_TO_GOOGLE_BIGQUERY_IN_GATEWAY];

Native Query (ServerSide): UPDATE Table data for complex types (e.g. Nested RECORD, Geography, JSON)

Example of how to run a valid BigQuery DML statement (e.g. UPDATE / INSERT / DELETE). This use case shows how to update a record with complex data types such as RECORD (i.e. STRUCT), Geography, JSON and more. Prefix the SQL with #DirectSQL to invoke the server-side engine (pass-through SQL).

DECLARE @MyQuery NVARCHAR(MAX) = '#DirectSQL

Update TestDataset.DataTypeTest

Set ColTime=''23:59:59.123456'',

ColGeography=ST_GEOGPOINT(34.150480, -84.233870),

ColRecord=(1,"AA","Column3 data"),

ColBigNumeric=1222222222222222222.123456789123456789123456789123456789,

ColJson= JSON_ARRAY(''{"doc":1, "values":[{"id":1},{"id":2}]}'')

Where ColInteger=1';

EXEC (@MyQuery) AT [LS_TO_GOOGLE_BIGQUERY_IN_GATEWAY];

Native Query (ServerSide): DROP Table (if exists) / Other DDL

Example of how to run a valid BigQuery DDL statement. Prefix the SQL with #DirectSQL to invoke the server-side engine (pass-through SQL).

DECLARE @MyQuery NVARCHAR(MAX) = '#DirectSQL DROP TABLE IF EXISTS Myproject.Mydataset.Mytable';

EXEC (@MyQuery) AT [LS_TO_GOOGLE_BIGQUERY_IN_GATEWAY];

Native Query (ServerSide): Call Stored Procedure

Example of how to run a BigQuery stored procedure and pass parameters. Assuming you created a valid stored procedure called usp_GetData in TestDataset, call it as shown below.

DECLARE @MyQuery NVARCHAR(MAX) = '#DirectSQL CALL TestDataset.usp_GetData(1)';

EXEC (@MyQuery) AT [LS_TO_GOOGLE_BIGQUERY_IN_GATEWAY];

INSERT Single Row

This sample shows how to insert into BigQuery using the ZappySys query language. You can also use ProjectId='myproject-id' in the WITH clause.

DECLARE @MyQuery NVARCHAR(MAX) = 'INSERT INTO MyBQTable1(SomeBQCol1, SomeBQCol2) Values(1,''AAA'')

--WITH(DatasetId=''TestDataset'',Output=''*'')

--WITH(DatasetId=''TestDataset'',ProjectId=''MyProjectId'',Output=''*'')';

EXEC (@MyQuery) AT [LS_TO_GOOGLE_BIGQUERY_IN_GATEWAY];

INSERT Multiple Rows from SQL Server

This example shows how to bulk insert into a Google BigQuery table from Microsoft SQL Server as an external source. Notice that the INSERT has no column list. The column names are provided by the source query, so it must produce valid column names found in the target BQ table (you can use SQL aliases to produce matching names).

DECLARE @MyQuery NVARCHAR(MAX) = 'INSERT INTO MyBQTable1

SOURCE(

''MSSQL''

, ''Data Source=localhost;Initial Catalog=tempdb;Integrated Security=true''

, ''SELECT Col1 as SomeBQCol1,Col2 as SomeBQCol2 FROM SomeTable Where SomeCol=123''

)

--WITH(DatasetId=''TestDataset'',Output=''*'')

--WITH(DatasetId=''TestDataset'',ProjectId=''MyProjectId'',Output=''*'')';

EXEC (@MyQuery) AT [LS_TO_GOOGLE_BIGQUERY_IN_GATEWAY];

INSERT Multiple Rows from any ODBC Source (DSN)

This example shows how to bulk insert into a Google BigQuery table from any external ODBC source (assuming you have installed the ODBC driver and configured a DSN). Notice that the INSERT has no column list. The column names are provided by the source query, so it must produce valid column names found in the target BQ table (you can use SQL aliases to produce matching names).

DECLARE @MyQuery NVARCHAR(MAX) = 'INSERT INTO MyBQTable1

SOURCE(

''ODBC''

, ''DSN=MyDsn''

, ''SELECT Col1 as SomeBQCol1,Col2 as SomeBQCol2 FROM SomeTable Where SomeCol=123''

)

WITH(DatasetId=''TestDataset'')';

EXEC (@MyQuery) AT [LS_TO_GOOGLE_BIGQUERY_IN_GATEWAY];

INSERT Multiple Rows from any JSON Files / API (Using ZappySys ODBC JSON Driver)

This example shows how to bulk insert into a Google BigQuery table from any external JSON API or file source through ODBC (assuming you have installed the ZappySys ODBC Driver for JSON). Notice that the INSERT has no column list. The column names are provided by the source query, so it must produce valid column names found in the target BQ table (you can use SQL aliases to produce matching names). You can use a similar approach to read from CSV or XML files: just use the CSV or XML driver rather than the JSON driver in the connection string. For more JSON query examples, see https://zappysys.com/onlinehelp/odbc-powerpack/scr/json-odbc-driver-sql-query-examples.htm

DECLARE @MyQuery NVARCHAR(MAX) = 'INSERT INTO MyBQTable1

SOURCE(

''ODBC''

, ''Driver={ZappySys JSON Driver};Src=''https://some-url/get-data''''

, ''SELECT Col1 as SomeBQCol1,Col2 as SomeBQCol2 FROM _root_''

)

--WITH(DatasetId=''TestDataset'',Output=''*'')

--WITH(DatasetId=''TestDataset'',ProjectId=''MyProjectId'',Output=''*'')';

EXEC (@MyQuery) AT [LS_TO_GOOGLE_BIGQUERY_IN_GATEWAY];
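
The SQL Server examples above all follow one pattern: the ODBC query is stored in an NVARCHAR(MAX) variable with every single quote doubled, then executed against the linked server with EXEC ... AT. The Python sketch below generates that wrapper from a plain ODBC query. The helper name `to_linked_server_sql` is hypothetical, and the default linked server name is simply the one used in the examples on this page.

```python
def to_linked_server_sql(query, linked_server="LS_TO_GOOGLE_BIGQUERY_IN_GATEWAY"):
    """Wrap an ODBC query in the DECLARE / EXEC ... AT pattern used for linked servers."""
    # Inside a T-SQL string literal, a single quote is written as two single quotes.
    escaped_query = query.replace("'", "''")
    # Inside a bracketed identifier, a closing bracket is written as two closing brackets.
    escaped_server = linked_server.replace("]", "]]")
    return (
        f"DECLARE @MyQuery NVARCHAR(MAX) = '{escaped_query}';\n"
        f"EXEC (@MyQuery) AT [{escaped_server}];"
    )


odbc_query = "INSERT INTO MyBQTable1(SomeBQCol1, SomeBQCol2) Values(1,'AAA')"
tsql = to_linked_server_sql(odbc_query)
print(tsql)
```

Applied to the single-row INSERT from the ODBC section, this produces the same doubled quotes (`Values(1,''AAA'')`) as the corresponding SQL Server example.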