Endpoint Upsert documents

Name

upsert_documents

Description

Insert Or Update (Upserts) documents. If _id column not supplied then this acts like INSERT call. If _id column supplied and its found then UPDATE action happens else INSERT. Look at the Result column in the output to see if document was created or updated.

Related Tables

[Dynamic Table]

, [Dynamic Table]

Parameters

| Parameter | Required | Options |

|---|---|---|

|

Name:

Label: Index |

YES | |

|

Name:

Label: Alias (Deprecated - Use Index instead) |

Output Columns

| Label | Data Type (SSIS) | Data Type (SQL) | Length | Description |

|---|---|---|---|---|

| ErrorFound |

DT_BOOL

|

bit

|

||

| Result |

DT_WSTR

|

nvarchar(4000)

|

4000 | |

| HttpStatus |

DT_I4

|

int

|

||

| Id |

DT_WSTR

|

nvarchar(50)

|

50 | |

| Index |

DT_WSTR

|

nvarchar(150)

|

150 | |

| Type |

DT_WSTR

|

nvarchar(50)

|

50 | |

| IndexUuid |

DT_WSTR

|

nvarchar(50)

|

50 | |

| Reason |

DT_WSTR

|

nvarchar(4000)

|

4000 | |

| ErrorType |

DT_WSTR

|

nvarchar(250)

|

250 | |

| ErrorCausedByType |

DT_WSTR

|

nvarchar(250)

|

250 | |

| ErrorCausedByreason |

DT_WSTR

|

nvarchar(2000)

|

2000 | |

| Version |

DT_I4

|

int

|

||

| SequenceNo |

DT_I4

|

int

|

||

| PrimaryTerm |

DT_I4

|

int

|

||

| ShardsFailed |

DT_I4

|

int

|

||

| ShardsSuccessful |

DT_I4

|

int

|

||

| ShardsTotal |

DT_I4

|

int

|

||

| Took |

DT_I4

|

int

|

Input Columns

| Label | Data Type (SSIS) | Data Type (SQL) | Length | Description |

|---|---|---|---|---|

| _id |

DT_WSTR

|

nvarchar(150)

|

150 | |

| [$parent.Pivot_Path$] |

DT_WSTR

|

nvarchar(4000)

|

4000 | |

| [$parent.Pivot_Path$].lat |

DT_R8

|

float

|

||

| [$parent.Pivot_Path$].lon |

DT_R8

|

float

|

||

| [$parent.Pivot_Path$].type |

DT_WSTR

|

nvarchar(30)

|

30 | |

| [$parent.Pivot_Path$].coordinates |

DT_TEXT

|

varchar(MAX)

|

Examples

SSIS

Use ElasticSearch Connector in API Source or in API Destination SSIS Data Flow components to read or write data.

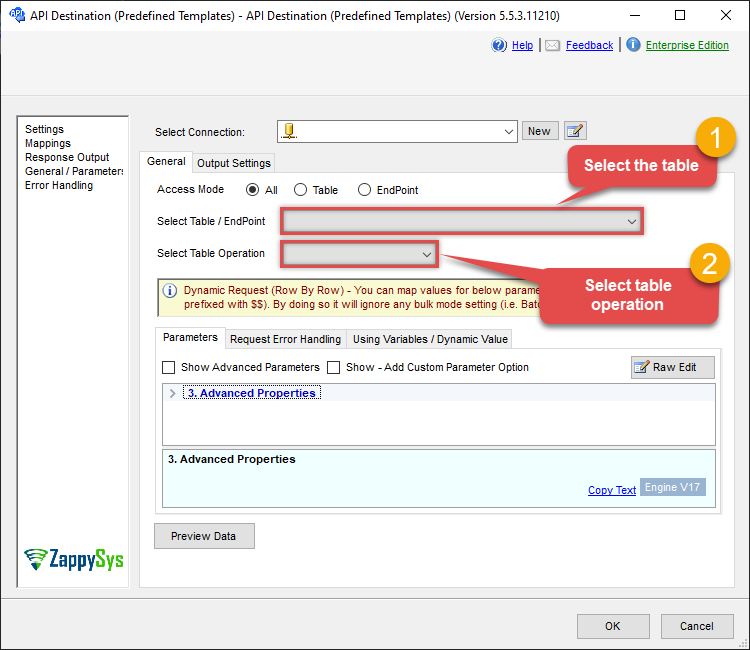

API Destination

This Endpoint belongs to the [Dynamic Table] table, therefore it is better to use it, instead of accessing the endpoint directly. Use this table and table-operation pair to upsert documents:

| Optional Parameters | |

|---|---|

| RowHeaderFooterContinueOnError | True |

| RowHeader | |

ODBC application

Use these SQL queries in your ODBC application data source:

Upsert (Update or Insert) documents using various data types (supply _id)

This example shows how to update or insert document for different datatype fields. _id is optional. If _id column is supplied then it does UPSERT action (Update or Insert) if _id not supplied then does only insert (auto generate new _id) Some fields can accept value as Raw JSON (e.g. nested, object, geo_point, geo_shape). Object field type can also accept value by nested field (e.g. [object_field.field1] ). Look at the Result column in the output to see if document was created or updated.

UPSERT INTO datatype_test (

_id,

binary_field,

boolean_field,

byte_field,

date_field,

double_field,

float_field,

geo_point_field, --raw

--OR--

--"geo_point_field.lat",

--"geo_point_field.lon",

geo_shape_field, --raw

--OR--

--"geo_shape_field.type",

--"geo_shape_field.coordinates",

integer_field,

ip_field,

keyword_field,

long_field,

nested_field, --raw

object_field, --raw

--OR--

--"object_field.field1",

--"object_field.field2",

short_field,

text_field

)

VALUES(

2, -- _id (Optional - if not supplied then it inserts with auto-generated _id)

'SGVsbG8gd29ybGQ=', --binary_field --base64 value of "Hello world"

false, --bool

117, --byte_field

'2012-12-31T23:59:59.123', --date_field

1.123456789, --double_field

1.123456789, --float_field

--raw JSON must be in one line

'{ "lat": 40.7128, "lon": -74.0060 }', --geo_point_field

--OR--

-- 40.7128, -74.0060,

'{ "type": "polygon", "coordinates": [[[-74.0060, 40.7128], [-73.9960, 40.7128], [-73.9960, 40.7028], [-74.0060, 40.7028], [-74.0060, 40.7128]]] }', --geo_shape_field

--OR--

--'polygon',

--'[[[-74.0060, 40.7128], [-73.9960, 40.7128], [-73.9960, 40.7028], [-74.0060, 40.7028], [-74.0060, 40.7128]]]',

123, --integer_field

'127.0.0.1', --ip_field

'thhi is text', --keyword_field

1234567890, --long_field

--raw JSON must be in one line

'[{"nested_property_1":"nested text 1", "nested_property_2":100}, {"nested_property_1":"nested text 2", "nested_property_2":101}]', --nested_field

'{"field1":"A","field2":"B"}', --object_field (Raw Value)

--OR--

--'object field keyword 1', --object_field.field1

--123, --object_field.field2

1, --short_field

'text field ' --text_field

)Upsert (Update or Insert) documents using various data types (supply _id)

This example shows how to update or insert document for different datatype fields. _id is optional. If _id column is supplied then it does UPSERT action (Update or Insert) if _id not supplied then does only insert (auto generate new _id) Some fields can accept value as Raw JSON (e.g. nested, object, geo_point, geo_shape). Object field type can also accept value by nested field (e.g. [object_field.field1] ). Look at the Result column in the output to see if document was created or updated.

UPSERT INTO datatype_test (

_id,

binary_field,

boolean_field,

byte_field,

date_field,

double_field,

float_field,

geo_point_field, --raw

--OR--

--"geo_point_field.lat",

--"geo_point_field.lon",

geo_shape_field, --raw

--OR--

--"geo_shape_field.type",

--"geo_shape_field.coordinates",

integer_field,

ip_field,

keyword_field,

long_field,

nested_field, --raw

object_field, --raw

--OR--

--"object_field.field1",

--"object_field.field2",

short_field,

text_field

)

VALUES(

2, -- _id (Optional - if not supplied then it inserts with auto-generated _id)

'SGVsbG8gd29ybGQ=', --binary_field --base64 value of "Hello world"

false, --bool

117, --byte_field

'2012-12-31T23:59:59.123', --date_field

1.123456789, --double_field

1.123456789, --float_field

--raw JSON must be in one line

'{ "lat": 40.7128, "lon": -74.0060 }', --geo_point_field

--OR--

-- 40.7128, -74.0060,

'{ "type": "polygon", "coordinates": [[[-74.0060, 40.7128], [-73.9960, 40.7128], [-73.9960, 40.7028], [-74.0060, 40.7028], [-74.0060, 40.7128]]] }', --geo_shape_field

--OR--

--'polygon',

--'[[[-74.0060, 40.7128], [-73.9960, 40.7128], [-73.9960, 40.7028], [-74.0060, 40.7028], [-74.0060, 40.7128]]]',

123, --integer_field

'127.0.0.1', --ip_field

'thhi is text', --keyword_field

1234567890, --long_field

--raw JSON must be in one line

'[{"nested_property_1":"nested text 1", "nested_property_2":100}, {"nested_property_1":"nested text 2", "nested_property_2":101}]', --nested_field

'{"field1":"A","field2":"B"}', --object_field (Raw Value)

--OR--

--'object field keyword 1', --object_field.field1

--123, --object_field.field2

1, --short_field

'text field ' --text_field

)

upsert_documents endpoint belongs to

[Dynamic Table]

, [Dynamic Table]

table(s), and can therefore be used via those table(s).

SQL Server

Use these SQL queries in SQL Server after you create a data source in Data Gateway:

Upsert (Update or Insert) documents using various data types (supply _id)

This example shows how to update or insert document for different datatype fields. _id is optional. If _id column is supplied then it does UPSERT action (Update or Insert) if _id not supplied then does only insert (auto generate new _id) Some fields can accept value as Raw JSON (e.g. nested, object, geo_point, geo_shape). Object field type can also accept value by nested field (e.g. [object_field.field1] ). Look at the Result column in the output to see if document was created or updated.

DECLARE @MyQuery NVARCHAR(MAX) = 'UPSERT INTO datatype_test (

_id,

binary_field,

boolean_field,

byte_field,

date_field,

double_field,

float_field,

geo_point_field, --raw

--OR--

--"geo_point_field.lat",

--"geo_point_field.lon",

geo_shape_field, --raw

--OR--

--"geo_shape_field.type",

--"geo_shape_field.coordinates",

integer_field,

ip_field,

keyword_field,

long_field,

nested_field, --raw

object_field, --raw

--OR--

--"object_field.field1",

--"object_field.field2",

short_field,

text_field

)

VALUES(

2, -- _id (Optional - if not supplied then it inserts with auto-generated _id)

''SGVsbG8gd29ybGQ='', --binary_field --base64 value of "Hello world"

false, --bool

117, --byte_field

''2012-12-31T23:59:59.123'', --date_field

1.123456789, --double_field

1.123456789, --float_field

--raw JSON must be in one line

''{ "lat": 40.7128, "lon": -74.0060 }'', --geo_point_field

--OR--

-- 40.7128, -74.0060,

''{ "type": "polygon", "coordinates": [[[-74.0060, 40.7128], [-73.9960, 40.7128], [-73.9960, 40.7028], [-74.0060, 40.7028], [-74.0060, 40.7128]]] }'', --geo_shape_field

--OR--

--''polygon'',

--''[[[-74.0060, 40.7128], [-73.9960, 40.7128], [-73.9960, 40.7028], [-74.0060, 40.7028], [-74.0060, 40.7128]]]'',

123, --integer_field

''127.0.0.1'', --ip_field

''thhi is text'', --keyword_field

1234567890, --long_field

--raw JSON must be in one line

''[{"nested_property_1":"nested text 1", "nested_property_2":100}, {"nested_property_1":"nested text 2", "nested_property_2":101}]'', --nested_field

''{"field1":"A","field2":"B"}'', --object_field (Raw Value)

--OR--

--''object field keyword 1'', --object_field.field1

--123, --object_field.field2

1, --short_field

''text field '' --text_field

)';

EXEC (@MyQuery) AT [LS_TO_ELASTICSEARCH_IN_GATEWAY];Upsert (Update or Insert) documents using various data types (supply _id)

This example shows how to update or insert document for different datatype fields. _id is optional. If _id column is supplied then it does UPSERT action (Update or Insert) if _id not supplied then does only insert (auto generate new _id) Some fields can accept value as Raw JSON (e.g. nested, object, geo_point, geo_shape). Object field type can also accept value by nested field (e.g. [object_field.field1] ). Look at the Result column in the output to see if document was created or updated.

DECLARE @MyQuery NVARCHAR(MAX) = 'UPSERT INTO datatype_test (

_id,

binary_field,

boolean_field,

byte_field,

date_field,

double_field,

float_field,

geo_point_field, --raw

--OR--

--"geo_point_field.lat",

--"geo_point_field.lon",

geo_shape_field, --raw

--OR--

--"geo_shape_field.type",

--"geo_shape_field.coordinates",

integer_field,

ip_field,

keyword_field,

long_field,

nested_field, --raw

object_field, --raw

--OR--

--"object_field.field1",

--"object_field.field2",

short_field,

text_field

)

VALUES(

2, -- _id (Optional - if not supplied then it inserts with auto-generated _id)

''SGVsbG8gd29ybGQ='', --binary_field --base64 value of "Hello world"

false, --bool

117, --byte_field

''2012-12-31T23:59:59.123'', --date_field

1.123456789, --double_field

1.123456789, --float_field

--raw JSON must be in one line

''{ "lat": 40.7128, "lon": -74.0060 }'', --geo_point_field

--OR--

-- 40.7128, -74.0060,

''{ "type": "polygon", "coordinates": [[[-74.0060, 40.7128], [-73.9960, 40.7128], [-73.9960, 40.7028], [-74.0060, 40.7028], [-74.0060, 40.7128]]] }'', --geo_shape_field

--OR--

--''polygon'',

--''[[[-74.0060, 40.7128], [-73.9960, 40.7128], [-73.9960, 40.7028], [-74.0060, 40.7028], [-74.0060, 40.7128]]]'',

123, --integer_field

''127.0.0.1'', --ip_field

''thhi is text'', --keyword_field

1234567890, --long_field

--raw JSON must be in one line

''[{"nested_property_1":"nested text 1", "nested_property_2":100}, {"nested_property_1":"nested text 2", "nested_property_2":101}]'', --nested_field

''{"field1":"A","field2":"B"}'', --object_field (Raw Value)

--OR--

--''object field keyword 1'', --object_field.field1

--123, --object_field.field2

1, --short_field

''text field '' --text_field

)';

EXEC (@MyQuery) AT [LS_TO_ELASTICSEARCH_IN_GATEWAY];

upsert_documents endpoint belongs to

[Dynamic Table]

, [Dynamic Table]

table(s), and can therefore be used via those table(s).