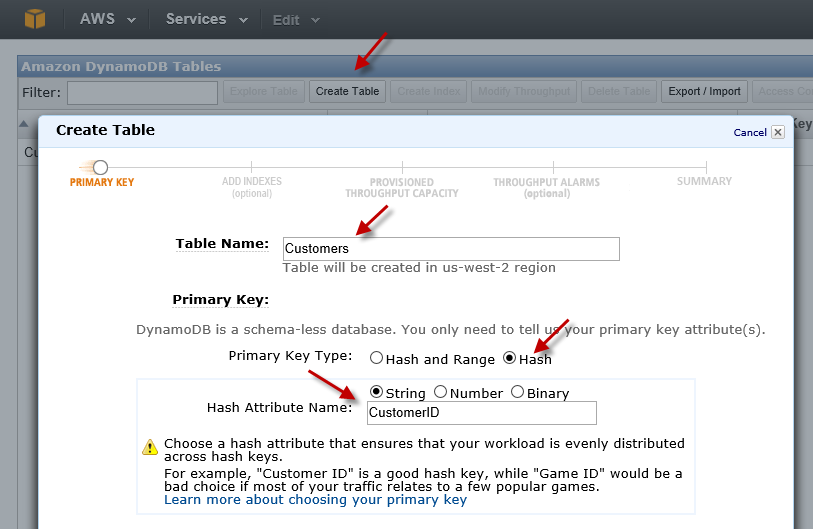

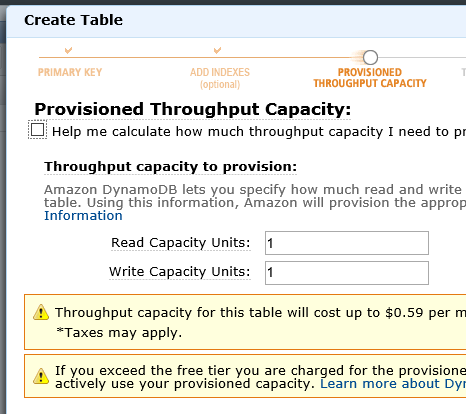

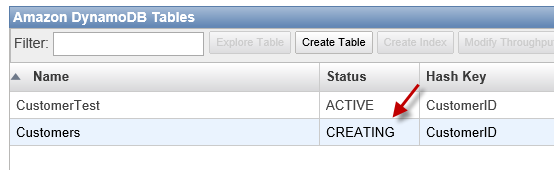

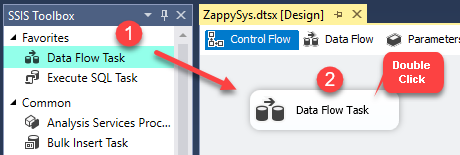

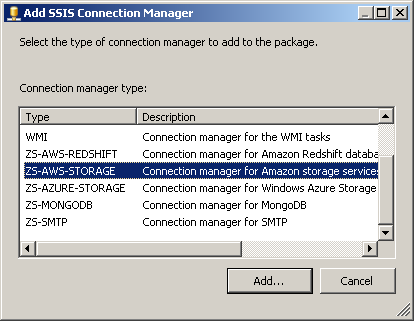

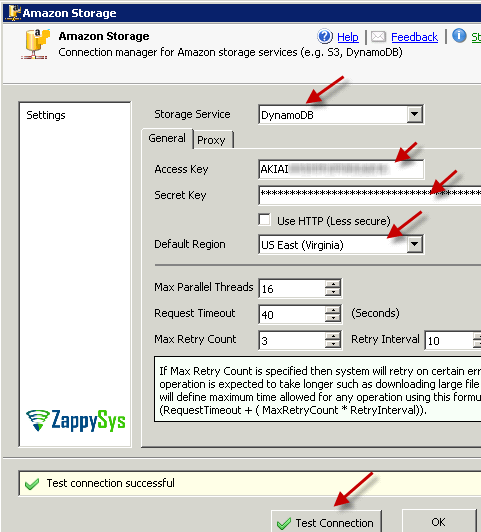

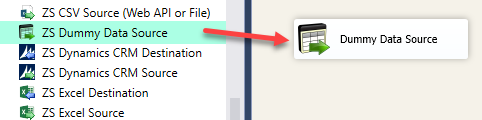

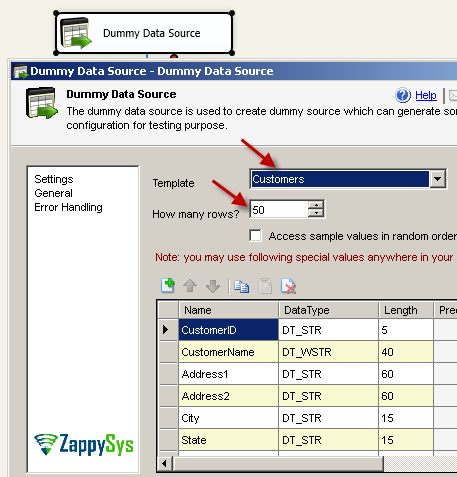

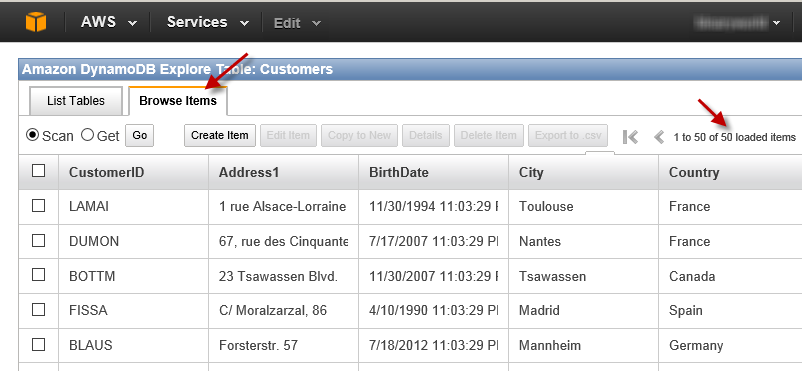

Introduction: In this article we will look at how to read and write Amazon DynamoDB data in SSIS. ZappySys has developed many AWS-related components, but this article covers three tasks/components for DynamoDB integration scenarios (read, write, update, bulk insert, create/drop table, etc.). We will discuss how to use SSIS DynamoDB Source Connector […]

|

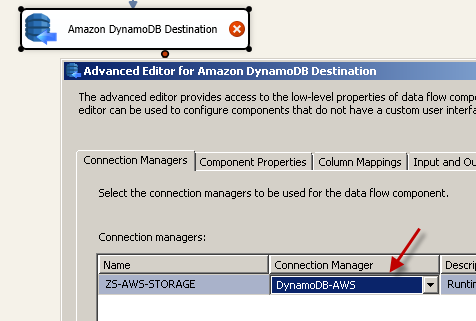

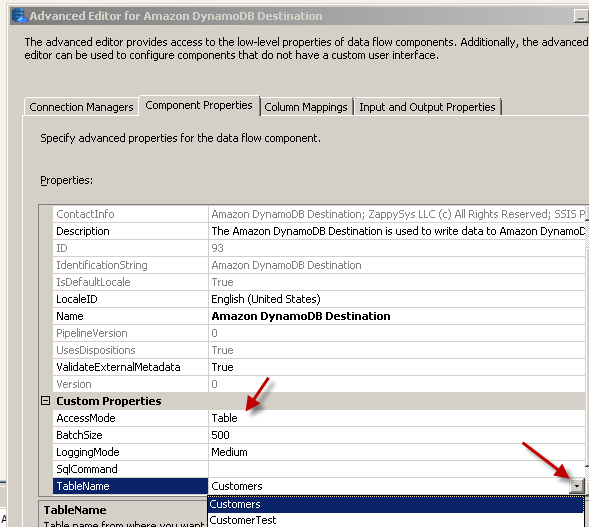

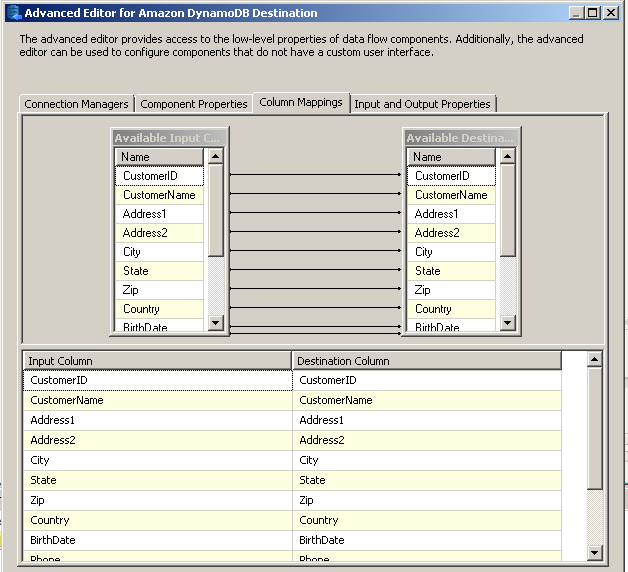

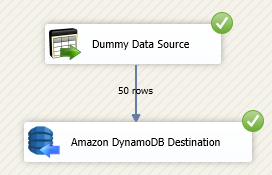

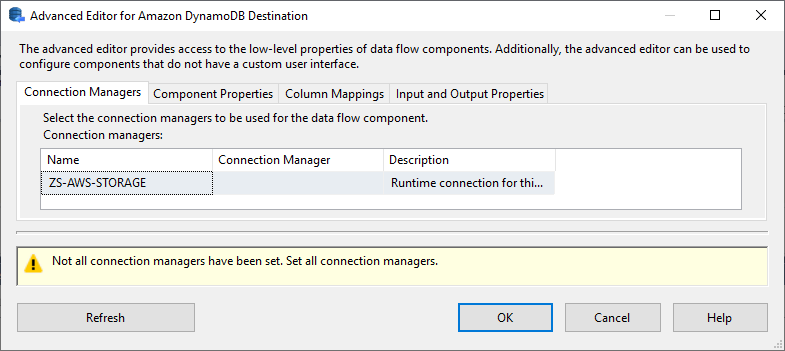

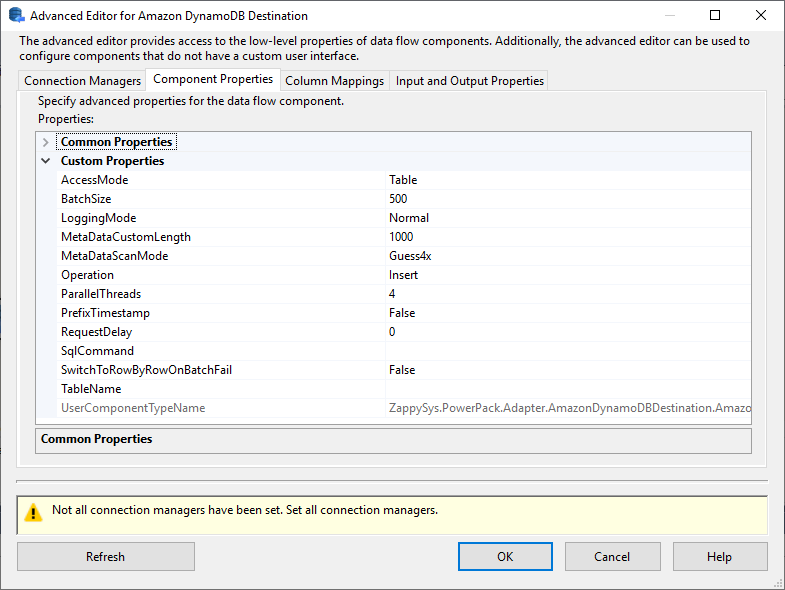

SSIS Amazon DynamoDB Destination Adapter (Bulk Load, Write, Copy NoSQL Data)
